You may have heard, humans are going out of business. First on the list: software developers.

As of today, there are nearly 30 million software developers in the world. I couldn’t believe it, but actually, the numbers have been steadily creeping up at single-digit growth annually for decades now.

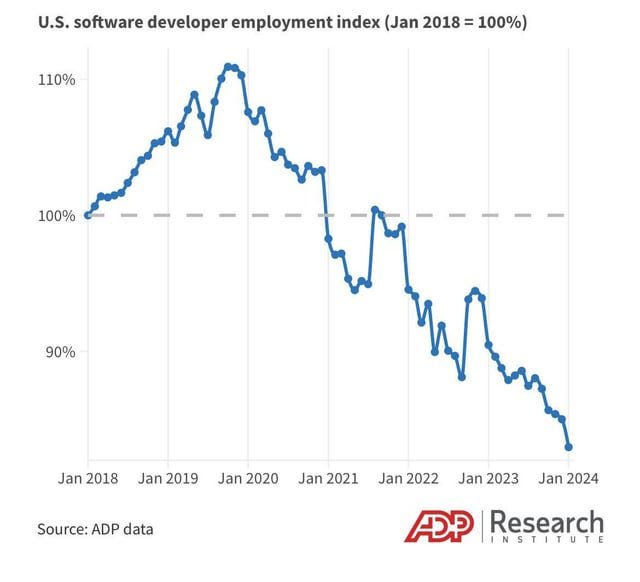

Then again, software jobs in the US have been on the decline since COVID-19, and the trend shows no sign of reversing.

Given that ChatGPT only came out long after this downward trend started, it’s not yet driven by AI. It's more likely caused by efficiency-seeking corporations moving American jobs to lower-cost remote offices in India.

In terms of AI impact, various AI tools such as GitHub Copilot are already being used by most developers. The only question is how much value is being added. Sam Altman says 300%, some say more like 3%. The median is probably closer to the latter, but those magic moments where the thing you want just magically appears and works on the first go are possible today.

Sidebar: Why pick on developers specifically? Well, somehow it just turns out that LLMs take to code like a fish to water. One theory is that code is like language, but not as messy. Fewer irregular verbs, as it were. So it’s inevitable that automating the developer is the first clearly defined target on the road to all-knowing all-capable AGI. Coding ability and quality are also easy to measure with dedicated benchmarks. So it’s the perfect testbed for AI labs.

Given the salary cost of 30 million developers is pretty high, this is a trillion-dollar opportunity for AI companies. Copilots are cute, but agents are the dev killer. For a moment, everyone thought Devin had cracked it. But here we are, still waiting months later. Others, too, are trying.

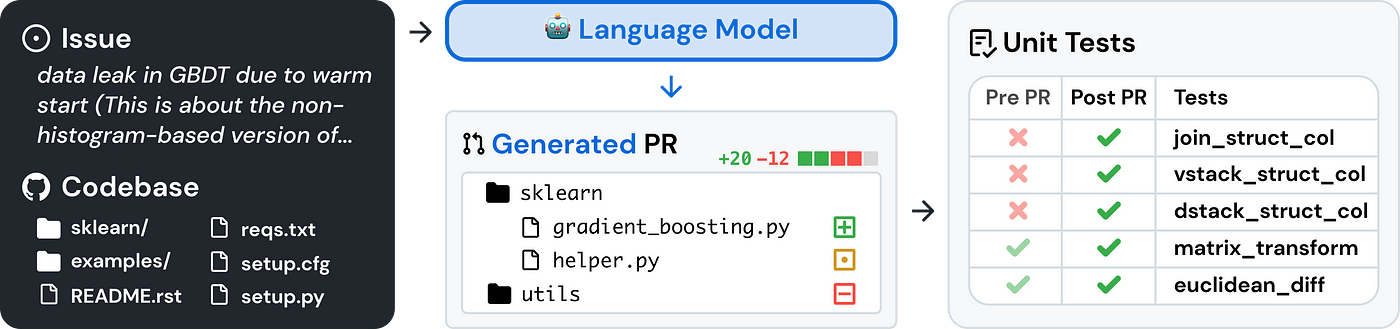

Recently, Factory AI set a new high score on the popular SWE-bench benchmark with their Code Droid system. Why is this the metric they are all after?

SWE-bench is a dataset that tests systems’ ability to solve GitHub issues automatically. The dataset collects 2,294 Issue-Pull Request pairs from 12 popular Python repositories. Evaluation is performed by unit test verification using post-PR behavior as the reference solution. — swebench.com
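To make "unit test verification" a bit more concrete, here is a minimal sketch of how a resolve rate could be computed. It is not the official SWE-bench harness: the function names, the `"passed"` status string, and the two sample records are made up for illustration. The idea it assumes is that an issue counts as solved only when the tests that the real pull request fixed now pass, and the tests that already passed still do.

```python
def is_resolved(test_results, fail_to_pass, pass_to_pass):
    """True only if every required test passes after the AI's patch is applied.

    fail_to_pass: tests that failed before the reference PR and pass after it.
    pass_to_pass: tests that passed before and must not be broken.
    """
    required = list(fail_to_pass) + list(pass_to_pass)
    return all(test_results.get(name) == "passed" for name in required)


def resolve_rate(instances):
    """Fraction of issues whose required tests all pass."""
    resolved = sum(
        is_resolved(i["results"], i["fail_to_pass"], i["pass_to_pass"])
        for i in instances
    )
    return resolved / len(instances)


# Two made-up issues: the first patch works, the second breaks an existing test.
sample = [
    {
        "fail_to_pass": ["test_bugfix"],
        "pass_to_pass": ["test_existing"],
        "results": {"test_bugfix": "passed", "test_existing": "passed"},
    },
    {
        "fail_to_pass": ["test_bugfix"],
        "pass_to_pass": ["test_existing"],
        "results": {"test_bugfix": "passed", "test_existing": "failed"},
    },
]

print(f"resolved: {resolve_rate(sample):.0%}")
```

The strictness is the point: a patch that fixes the bug but breaks something else scores zero for that issue, which is roughly how you would judge a human colleague's pull request too.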

As you may deduce, the idea here is that such an AI system could basically just be given access to your code repository, and it would just start solving issues added by humans. In some sense, you wouldn’t know the difference from a remote worker. Perhaps fewer memes on Slack.

This is the fundamental concept behind AI agents, and software is only the beginning.

So what’s the verdict? Is the writing on the wall, or are there reasons to believe we’ll be typing away at C++ for decades to come?

Recently, I wrote about the Death of SaaS. The once darling cash cow of Silicon Valley is suddenly not hot. Venture capitalists wouldn’t touch a software-only non-AI SaaS with a ten-foot pole.

The core premise is that if SaaS dies, we’ll be writing a lot of bespoke software real soon. By a lot, I mean A LOT. Not single-digit growth, but multiples. Think entire mountains of software.

So who’s writing all this new software? People or ChatGPT? This is a tricky equilibrium, and naturally, timing is a huge factor here. Today, it would be people. Tomorrow? Well, that’s the question, isn’t it?

“We’re going to see 10 person billion-dollar companies pretty soon… There’ll soon be a 1-person billion dollar company, which would’ve been unimaginable without AI, but now will happen.” – Sam Altman, OpenAI (source)

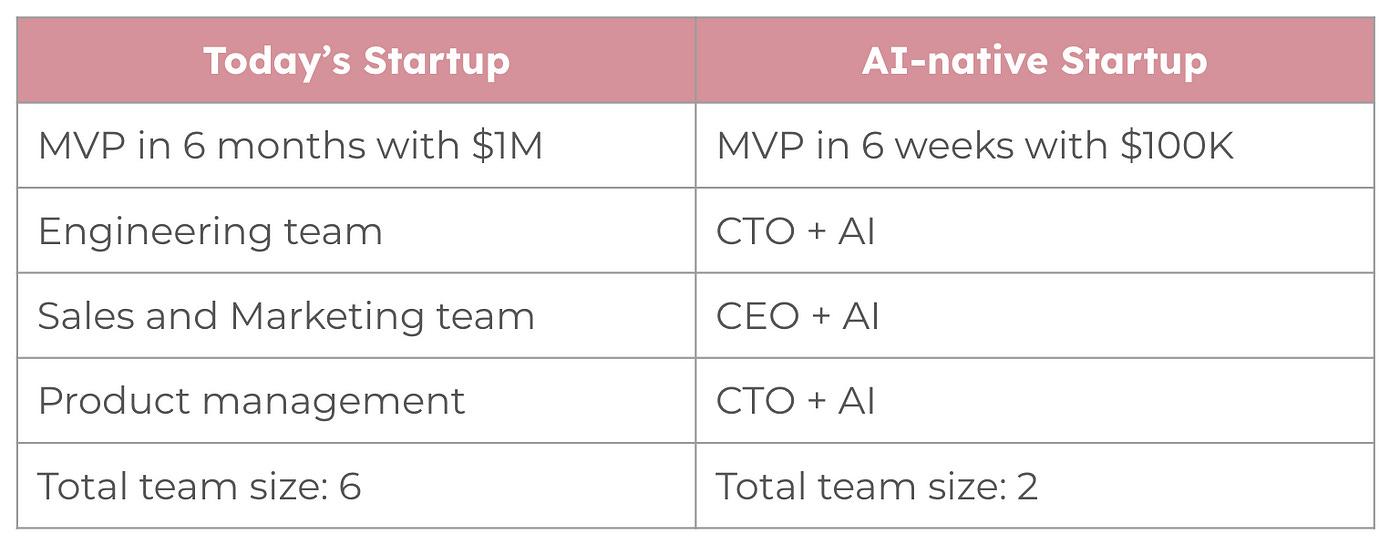

Anecdotally, startups are already doing more with less using AI. I hear from Silicon Valley investors that hiring is delayed, and shipping is faster. Founders are drinking the AI Kool-Aid and going native. AI-native.

Overall, I do foresee a gradual ramp-up in human developer demand. Less from startups, who are already going AI-native. More from every other company on the planet using software. Which is most companies, to be honest. Given that AI tools are better at simpler tasks, it seems likely more frontend work will be automated initially. So demand should skew towards backend, database, integration, and architect roles.

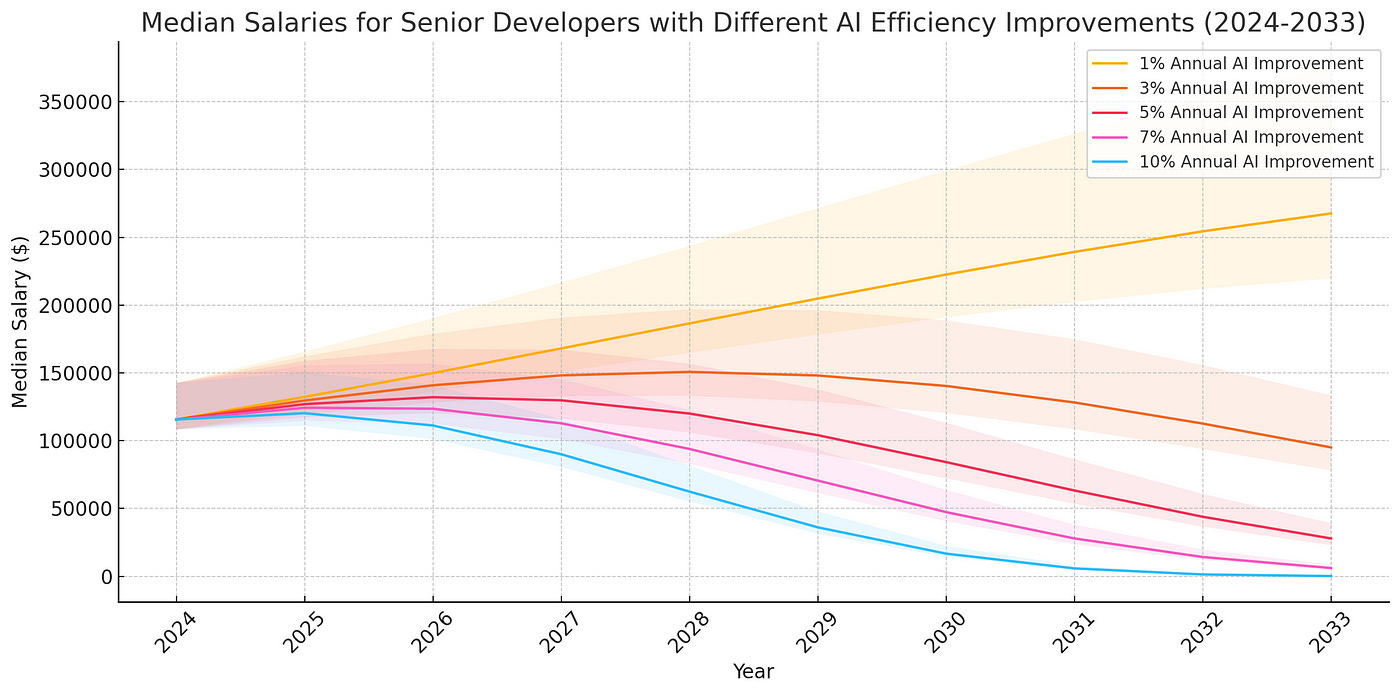

Obviously, GPT-5 might come out and reset many assumptions here. Be that as it may, even if AI writes most of the code, you’ll want a senior developer on the prompt for some time. After all, a lot of the responsibility of a developer is making technical decisions and cleaning up when those decisions backfire.

Given the dynamics at play, it’s reasonable to expect that while junior developers are already sweating, senior developer demand will increase and salaries will follow. Let’s examine this thread further, and see what evidence there is.

In the first part of this series, we established that depending on how fast AI capabilities are improving year-on-year, the cost per line-of-code seems likely to asymptote toward zero by 2030. That’s pretty shocking if you take a moment to consider how pretty much all industries now run on software. From today, that’s just over 5 years away!

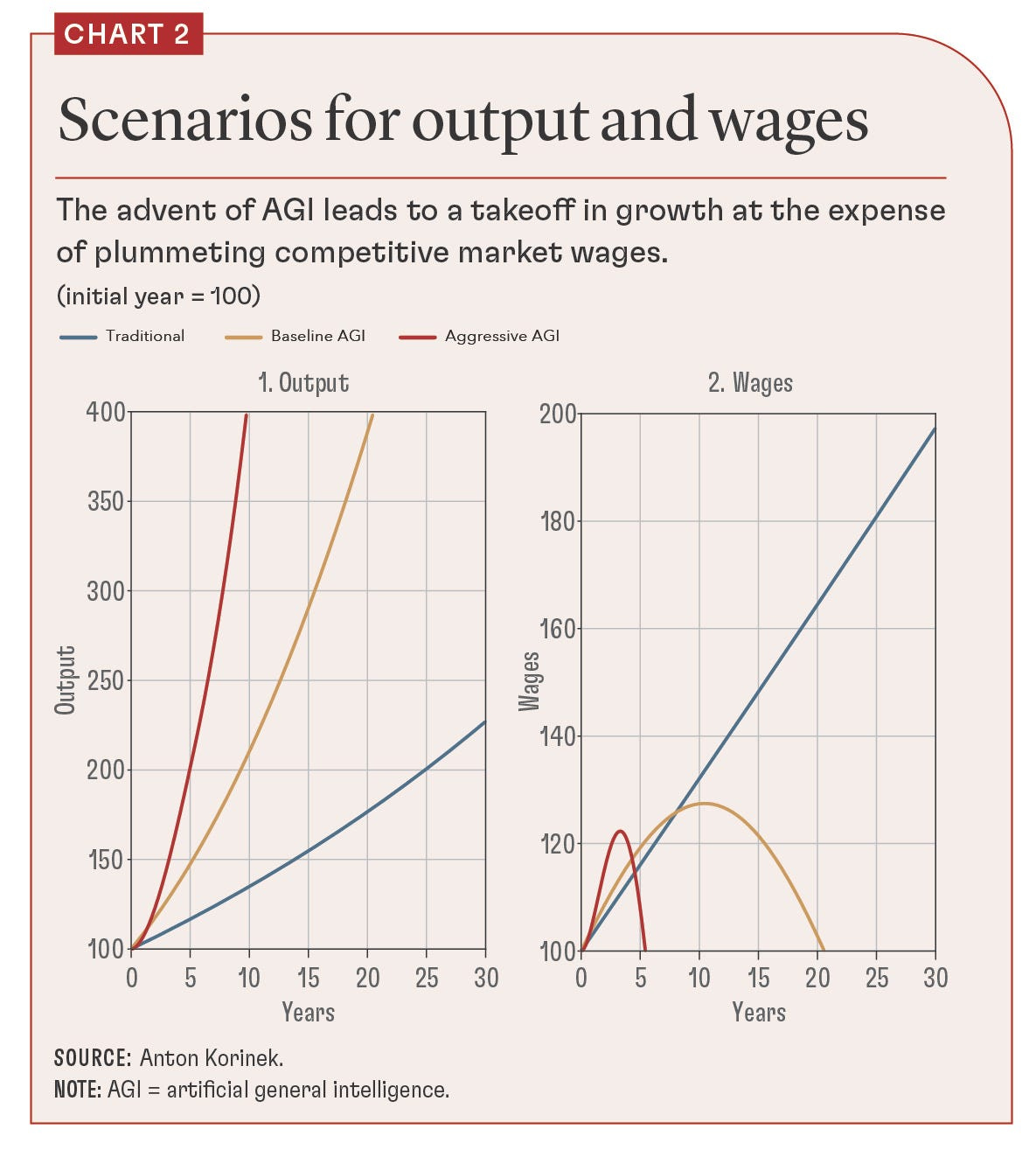

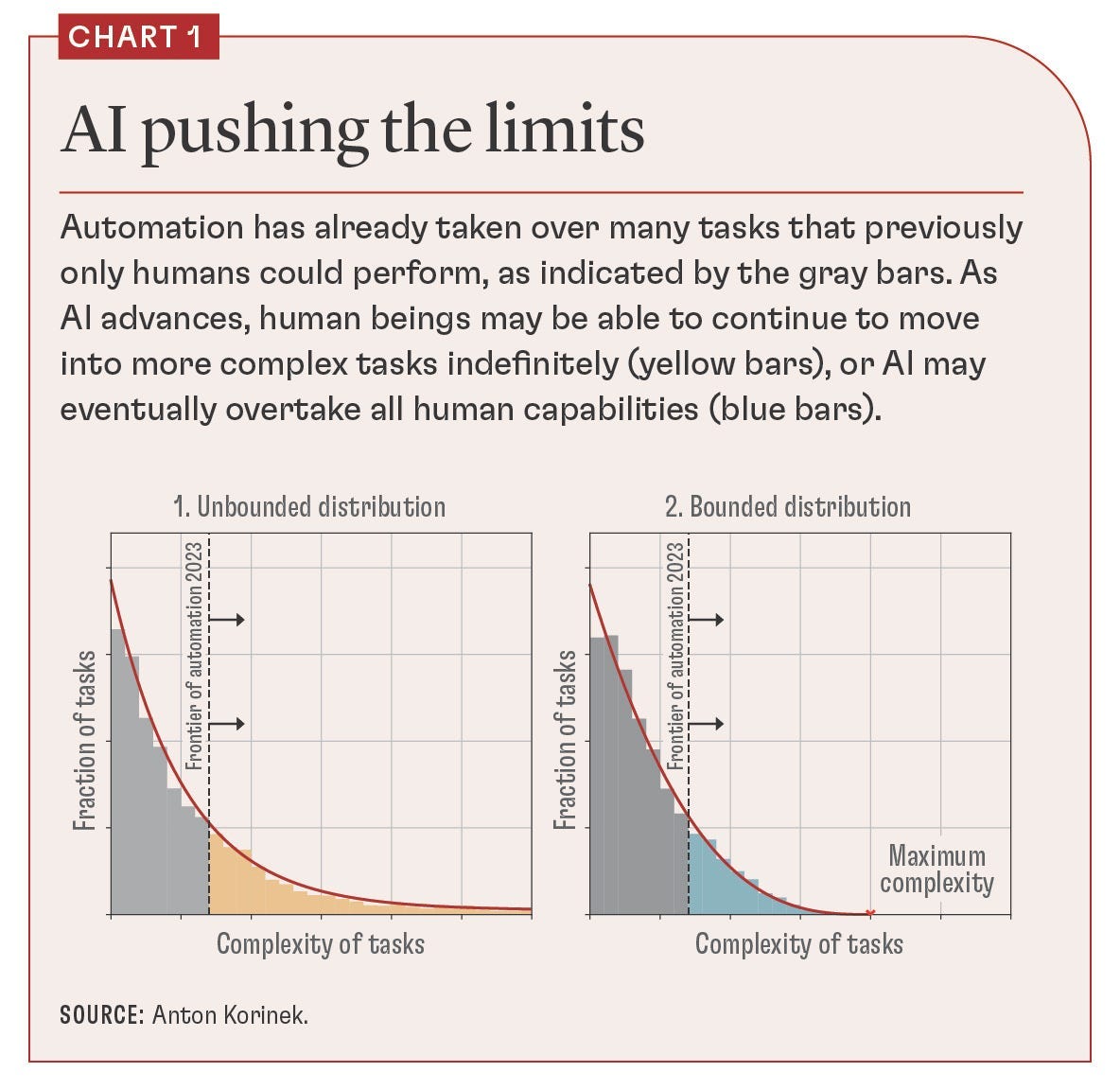

While I’m no economist, it turns out actual Professor of Economics Anton Korinek has come to pretty much the exact same conclusion. His focus hasn’t been on code per se, but more generally on knowledge work. He recently published a paper for the IMF, where he looks at the implications of rapid AI progress. This is what he found.

Again, we see pretty drastic results. Even the baseline is exponential, but in fact you can get more exponential than exponential growth. Superexponential. No, it’s a real thing. I didn’t make it up even though it sounds made up. It just means that the growth rate itself keeps rising, so the curve bends upward even on a logarithmic axis, where plain exponential growth would be a straight line. That’s what Korinek refers to as “Aggressive AGI”. To be clear, his definition of AGI here is “AI that possesses the ability to understand, learn, and perform any intellectual task a human being can perform”.
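If the difference between exponential and superexponential still sounds abstract, a few lines of Python can show it. This is only an illustrative sketch: the 3% starting growth rate and the 20% yearly acceleration are invented numbers, not Korinek's, and `grow` is a made-up helper.

```python
import math


def grow(years, rate, acceleration=0.0):
    """Return yearly output levels, starting from 1.0.

    rate: growth rate in the first year (0.03 means 3%).
    acceleration: how much the growth rate itself rises each year;
    0.0 gives plain exponential growth.
    """
    level, levels = 1.0, [1.0]
    for _ in range(years):
        level *= 1.0 + rate
        levels.append(level)
        rate *= 1.0 + acceleration  # the growth rate itself grows
    return levels


def log_steps(levels):
    """Year-on-year change on a logarithmic scale."""
    return [math.log(b / a) for a, b in zip(levels, levels[1:])]


exponential = grow(20, rate=0.03)
superexponential = grow(20, rate=0.03, acceleration=0.20)

exp_steps = log_steps(exponential)
super_steps = log_steps(superexponential)

print(f"exponential log-steps:      first {exp_steps[0]:.4f}, last {exp_steps[-1]:.4f}")
print(f"superexponential log-steps: first {super_steps[0]:.4f}, last {super_steps[-1]:.4f}")
```

On a log scale the exponential series climbs by the same step every year, which is why it plots as a straight line. The superexponential series takes a bigger step each year, so its line keeps bending upward.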

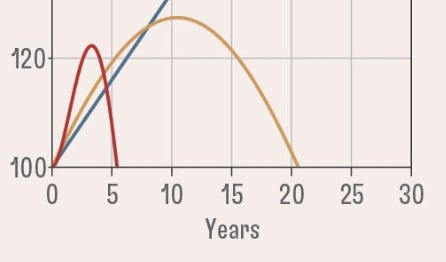

To highlight how crazy this transformation actually can be, let’s zoom in on the juiciest part.

We’re somewhere between years 0 and 5. Yes, this is our actual timeline! So depending on how things go, we may see a sudden rush in productivity and wages as AI takes off. Oddly, that would be a huge red flag. It indicates that once a certain threshold is reached in AI capability, there is a sudden and irreversible rug pull. Where initially humans are needed to make the AI magic work, after that point humans are simply no longer needed to keep increasing productivity.

How could that possibly happen so suddenly? Do we have evidence for one scenario over another? I find this visualization by Toby Ord quite useful.

From the evidence, we in fact did not just breeze past the human range, as was hypothesized by some researchers years before ChatGPT. In theory, there isn’t anything special about human intelligence. Yes, it’s the highest form we’ve seen in the universe, but our limits are not the limits of physics and information theory. So what’s the snag?

So far at least, we are training the models on human data. Perhaps it’s unsurprising that when we set benchmarks such as tests designed for humans in various fields of study, we get human-level capabilities out. Some say we need synthetic data, others say we need to rethink LLMs completely.

If you follow AI experts on Twitter, there are two clear camps. One, championed by Gary Marcus, claims AI will basically never escape the Human plateau. Well, at least not LLMs. To be precise, even Gary thinks AI will eventually get there, but that it could take decades or centuries, and that LLMs are a huge waste of time and resources. Same goes for Tesla Autopilot.

The other camp would call this pure “cope”. That attention really is all you need, and that the Chinchilla scaling laws are gospel. You add more data, and more compute, and humans are soon dust. Not literal dust, we hope.

This other camp includes some heavyweights like OpenAI co-founder Ilya Sutskever who famously told Anthropic CEO Dario Amodei that “the models just want to learn”. This was years before ChatGPT, and these guys haven’t gone back on their convictions one bit. Which also explains the billions and billions being poured into compute and data by the big labs. Their entire skin is in the game to prove they can do it.

Ultimately, the source of this uncertainty stems from complexity. We already know AI can outperform humans in a wide range of specific tasks, such as board games like Chess and Go. But most of us aren’t playing board games at work, at least not most of the time. Real-world jobs consist of a variety of tasks of varying complexity. Until we can take care of that long tail, AI will be limited to assisting, not replacing humans.

As Korinek highlights, this makes all the difference. If AI progress is rapid, we might expect this long tail of complexity to vanish suddenly, even overnight with a new model such as GPT-5 or perhaps GPT-X.

Once the brakes come off, the elasticity of substitution between capital and labor rises above 1, as economists would say. That’s a very boring way of saying that capital can stand in for labor, so investment no longer runs into diminishing returns. Because you’re no longer limited by humans and their needs. According to this analysis by OpenPhil, the result could look something like 30% annual Gross World Product growth. Explosive.
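Here is a rough way to see why that threshold of 1 matters. The sketch below uses the textbook CES (constant elasticity of substitution) production function, which is an assumption on my part and not necessarily the model used by Korinek or OpenPhil. The equal capital and labor shares and the capital levels are illustrative only.

```python
def ces_output(capital, labor, sigma, share=0.5):
    """Textbook CES production function.

    sigma: elasticity of substitution between capital and labor (must not be 1).
    share: weight on capital (0.5 is an arbitrary illustrative choice).
    """
    rho = (sigma - 1.0) / sigma
    return (share * capital**rho + (1.0 - share) * labor**rho) ** (1.0 / rho)


labor = 1.0  # hold the human workforce fixed
for sigma in (0.5, 2.0):
    # Pile on capital (think: compute) while labor stays the same.
    outputs = [ces_output(k, labor, sigma) for k in (1, 10, 100, 1000)]
    print(f"sigma={sigma}:", [round(y, 2) for y in outputs])
```

With the elasticity below 1, output stalls no matter how much capital you add, because the fixed pool of humans is the bottleneck (with these numbers it can never exceed 2). With the elasticity above 1, output keeps climbing as capital grows. That is the brakes coming off.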

Now let’s get practical. How do the next few years play out for developers?

If we start with a median SWE salary of $100K in 2023, the question is what happens to supply and demand. The starting salary isn’t important, as we know the distribution is massive. The point is the rate of change.

Here’s the full list of assumptions. Note: I’m not an economist, and I’m just using ChatGPT here to generate some Python code.

- 10% annual increase in demand for developers (demand+), from the death of SaaS
- 3% annual increase in new developers (supply+), from people entering the field
- 5% annual decay in supply for all developers (supply-), from developers finding other jobs because AI
- 2–5% annual inflation rate (salary+)
- 5–10% annual economic growth (demand+), optimistic because AI acceleration
- Standard deviations above and below for economic growth, inflation, supply, and demand.

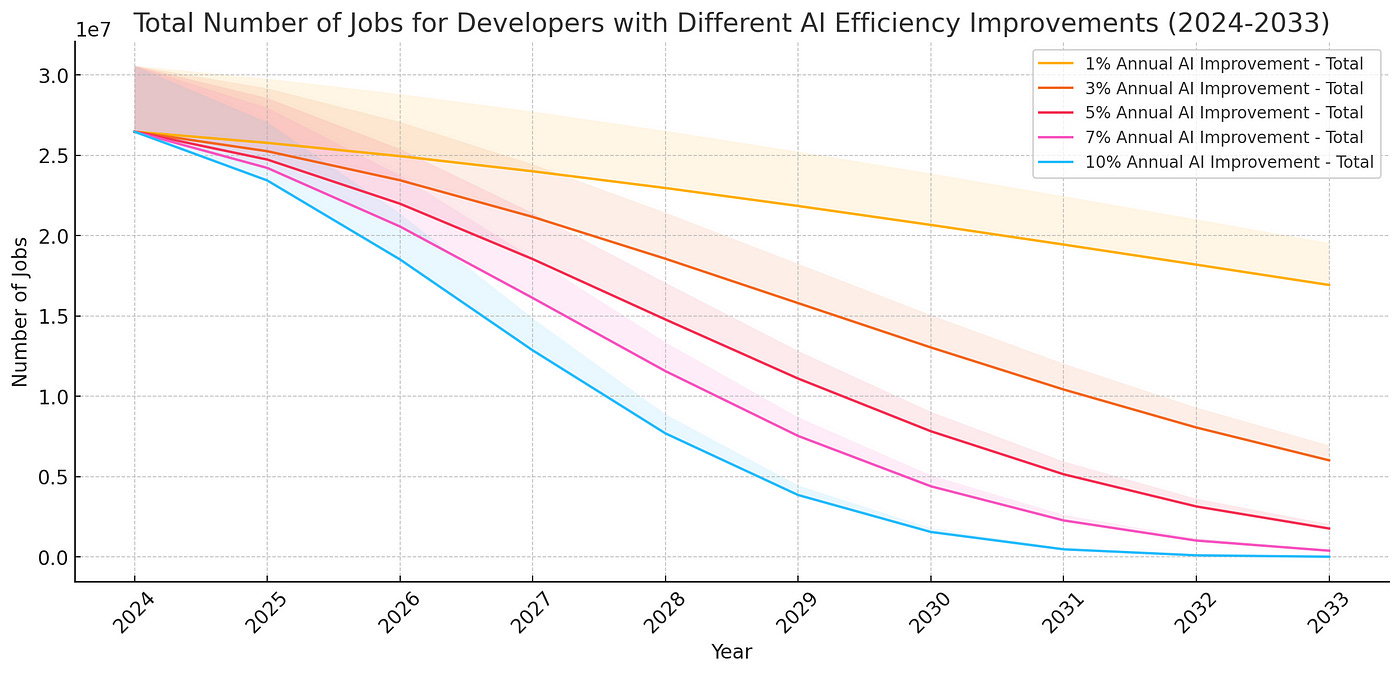

Let’s see what happens if we add one final assumption: AI efficiency improvement between 1% and 10% per year, all else being equal.

The range of outcomes here is pretty wide, between a solid career trajectory and total extinction of the Software Developer within the decade.
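For the curious, here is a minimal sketch of what a toy model along these lines could look like. It is not the code behind the charts in this essay and will not reproduce them. The uniform random draws, the rule that salaries move with the demand-to-supply ratio, and the name `simulate_salary` are all assumptions made for illustration.

```python
import random


def simulate_salary(years=7, start_salary=100_000.0, ai_range=(0.01, 0.10), rng=None):
    """Return one random salary path from 2023 to 2030 under the listed assumptions."""
    rng = rng or random.Random()
    ai_gain = rng.uniform(*ai_range)  # yearly AI efficiency improvement
    salary = start_salary
    path = [salary]
    for _ in range(years):
        inflation = rng.uniform(0.02, 0.05)
        growth = rng.uniform(0.05, 0.10)
        # Demand: +10% from the death of SaaS, plus economic growth,
        # minus the share of work absorbed by more efficient AI.
        demand_change = 1.10 * (1.0 + growth) / (1.0 + ai_gain)
        # Supply: +3% new entrants, -5% leaving the field.
        supply_change = 1.03 * 0.95
        # Assumption: salaries track the demand/supply ratio, plus inflation.
        salary *= (demand_change / supply_change) * (1.0 + inflation)
        path.append(salary)
    return path


rng = random.Random(42)  # fixed seed so the run is repeatable
finals = sorted(simulate_salary(rng=rng)[-1] for _ in range(1000))
low, median, high = finals[50], finals[500], finals[950]
print(f"2030 salary: low ${low:,.0f}, median ${median:,.0f}, high ${high:,.0f}")
```

How strongly AI efficiency eats into demand is the assumption that matters most. Change that one line and the fan of outcomes moves a long way, which is exactly the point of a toy model.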

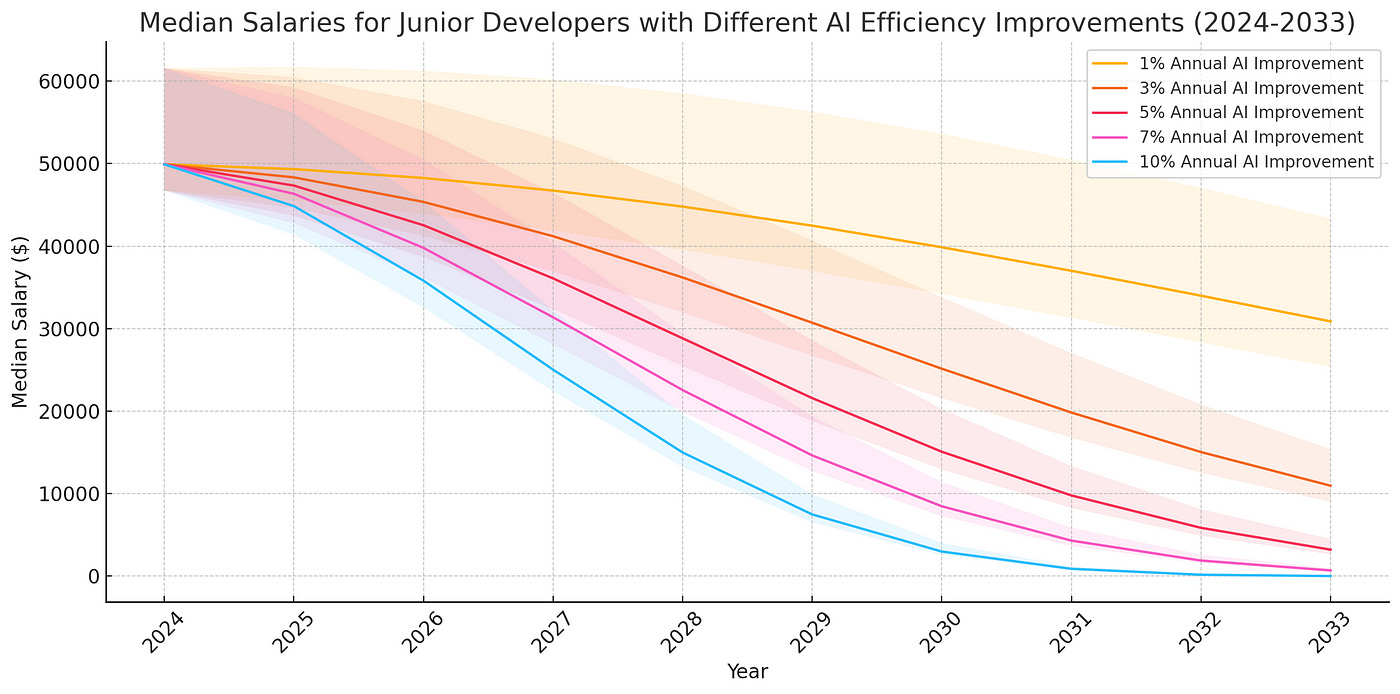

Now let’s run the same model with all the same assumptions except that demand would shrink 5% annually for junior developers, as AI is faster to replace them, and most transition to senior roles over time.

Suddenly, even the best-case scenario is a steady decline.

We could also try modeling the total number of SWE jobs, starting from 30 million developers at 90% employment, 20% senior developers, and 80% junior developers.
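A headcount version can be sketched the same way. Again, this is a guess at the shape of such a model rather than the original code. The 5% junior decline comes from the scenario above, while the 5% yearly promotion rate and the name `project_jobs` are hypothetical.

```python
def project_jobs(years=7, developers=30_000_000, employment=0.90,
                 senior_share=0.20, junior_decline=0.05, promotion_rate=0.05):
    """Return a list of (year_offset, junior_jobs, senior_jobs) tuples."""
    jobs = developers * employment
    senior = jobs * senior_share
    junior = jobs * (1.0 - senior_share)
    rows = [(0, junior, senior)]
    for year in range(1, years + 1):
        lost = junior * junior_decline      # junior jobs taken over by AI
        promoted = junior * promotion_rate  # juniors moving into senior roles
        junior -= lost + promoted
        senior += promoted
        rows.append((year, junior, senior))
    return rows


for year, junior, senior in project_jobs():
    total = junior + senior
    print(f"year {year}: junior {junior / 1e6:5.1f}M, senior {senior / 1e6:5.1f}M, total {total / 1e6:5.1f}M")
```

With these particular numbers, senior roles outnumber junior ones by year 7, while the total drifts down from 27 million to roughly 21 million jobs.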

What’s implied here is that over time most jobs transition to senior roles, while the overall number will gradually come down as AI takes over more and more coding tasks.

Obviously, take these models for what they are: toy models. By tweaking any of the assumptions you can create any kind of chart you like. But it illustrates the range of outcomes quite poignantly, I feel.

So the jig really is up. Where to from here?

Whichever scenario ends up being closer to reality, if you’re a young student at the beginning of your career, I would think about what type of career trajectories are ahead for you.

If you’re already deep into a SWE career, I would use the remaining time on the clock to get up the ladder. Again, you’d rather be in a senior position on the backend side than a junior one on the frontend. Obviously, there are adjacent fields like Data Science that have similar risks from AI automation. Frankly, even the best-paid AI researchers and engineers creating frontier models at leading AI labs are at risk, given the first job they are likely to automate is their very own!

Looking back at my career path starting as a junior developer, there was a fork in the road where you could continue in hands-on roles and keep coding, or move to a higher level of abstraction. Typically, this means becoming either a Software Architect or a Project Manager.

Just think how much more software we will create if 30 million human developers move on to manage teams of AI instead. It would be astounding in scope and speed.

Further, even with AI and AGI on the radar, CTOs and founders will have more to play for on the capital deployment side, where you can be the beneficiary and ride the AI wave instead of riding the lightning. Not all hope is lost. There’s time to turn the tables to your advantage.

Instead of getting replaced by AI, you use AI to create your own business. The threshold will be much lower than even today, as you will be able to assemble a crack team of experts in software, marketing, design, finance, and business for pennies. You won’t need to raise hundreds of thousands of dollars to create a product, perhaps only a few hundred will do!

Of course, classical economics would tell you there will be new jobs. There always are, historically. Farmers became factory workers. Factory workers became… software developers? Also, TikTok dancers, I guess.

So what are these mysterious new AI jobs, you ask? It’s easy to say there are always new jobs, historically that is, but there is also the counter-argument for technological unemployment.

Prediction: By 2030, a majority of corporate software developers will become something more akin to software reviewers. The cost of development will fall and as experienced developers become more productive their salaries will rise. — Bessemer State of Cloud 2024

When we think of new AI jobs, we are effectively talking about human-in-the-loop jobs. Hopefully, not prompt engineering. But it could be something like supervising a bunch of AI agents, or Software Reviewers as Bessemer puts it. AI provides productivity, while humans set the goals and tasks and monitor progress. The AI agents might check in occasionally if they get stuck or need clarification. Why?

Because for some time yet, we’d rather trust humans than AI, whatever the metrics say. Of course, there will be a day when no sane person would let a human doctor diagnose them, let alone touch actual code. But it takes years, sometimes decades, for new social norms to be established around technology. It took smartphones and social media only a few years to become normalized, but handing over decision-making about our very lives seems a bigger step.

The reason I chose canaries for the title of this essay is that everything you’ve read is in itself part of a similar complexity distribution. Remember, Korinek wasn’t talking about developers. With sufficiently good AI, say AGI, the new jobs are also done by AI. There are no new jobs for humans after the very nature of new jobs is also automated.

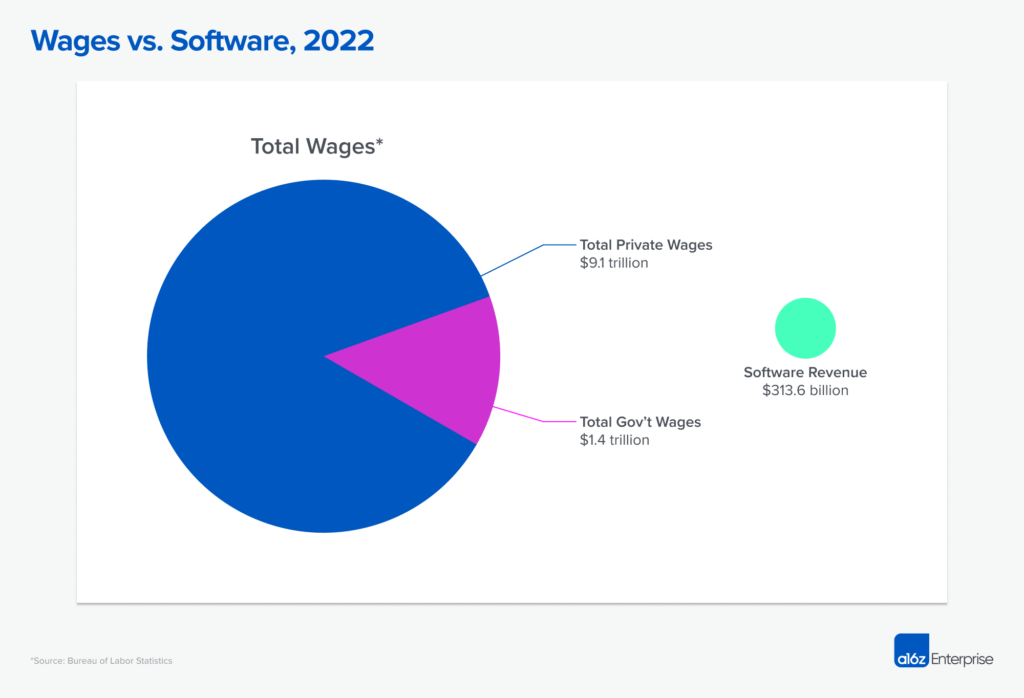

The incentives are simply too high to stop the train. If my Death of SaaS hypothesis is correct, we might see billions in software revenue evaporate. But those will be replaced by trillions in AI revenues.

Comparing enterprise software revenues at a respectable $300B to the total wages paid out by their customers to human workers, we can see that the current trillion-dollar club of tech giants is just the beginning. We will eventually see AI companies with trillions in revenues, approaching valuations never seen before.

But who are the beneficiaries of all this future wealth and abundance? Is this Sam Altman making trillions by selling directly to S&P 500 CEOs, no employees needed? Elon Musk building robots to send to Mars for the fun of it? Who’s buying Coca-Cola and LED TVs? Surely not the robots.

Is this all reason for doom and gloom? Well, you could look at it two ways. The optimist view is that we can finally move past the drudgery of work and into material abundance. You press a button, and a donut appears in your hand. You can play video games all day without a worry in the world.

In his latest book, Nick Bostrom assumes things will go well, and our problems are more around how to spend our time learning woodworking and opera singing. That could happen.

The skeptic might say such transitions have historically been pretty messy. The farmer became a factory worker became a software developer. It seems simple, but a lot of lives were uprooted in that process. With each new technological revolution, we have less and less time to adjust to the new normal.

So perhaps we shouldn't just do the thing because we can. Perhaps we should take caution, and work on international coordination before AI does that coordination for us, too.

What do you think?
