Since 2008, Spotify has grown from 6 million to more than 600 million users through patient geographic expansion and gradual catalog building. Each new market taught them about licensing; each user cohort revealed listening patterns. The vision was revolutionary (replace music ownership with streaming), but the execution was evolutionary. This combination of bold vision and gradual deployment built a $60 billion business.

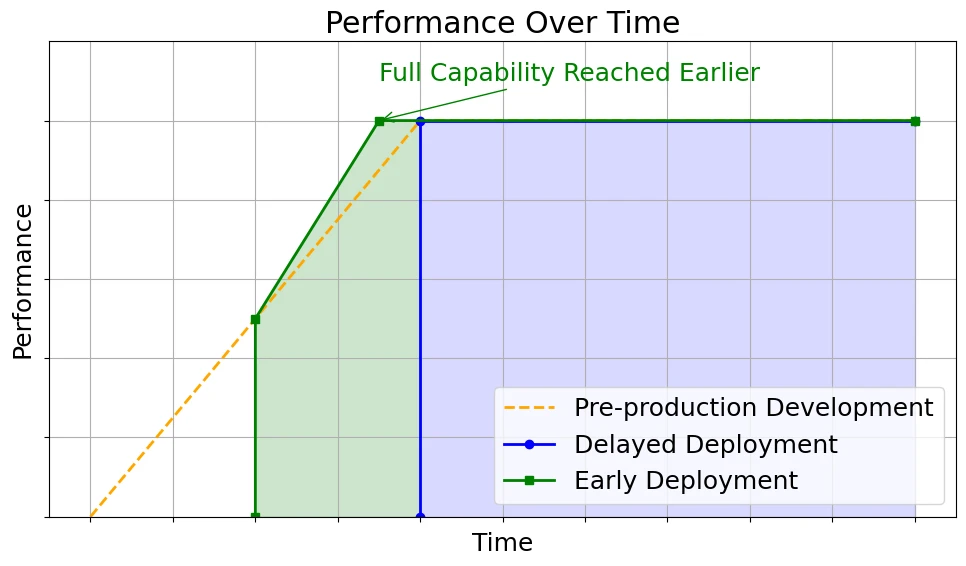

Most enduring technologies follow this pattern. They rarely succeed through a single, spectacular leap. Instead, they succeed through iterative feedback loops that teach founders and users how to adapt to one another. Revolutionary deployments (step functions) do sometimes succeed spectacularly: the iPhone did away with physical keyboards seemingly overnight, and ChatGPT reached 100 million users in two months.

But these are the exceptions. The rule is ramp functions (as wonderfully explained by Josie Boloski), which build capability, trust, and understanding over time.

AI pushes this pattern to its extreme. You must deploy before you’re ready, because readiness itself can only be learned through deployment. Yet you’re asking users to trust a system that will fail them sometimes, in ways you can’t fully predict beforehand. Each release is both a product launch and a social experiment in trust calibration.

As AI systems shift from tools that suggest to agents that act, the stakes multiply. A writing assistant that suggests an edit can be ignored. A trading or scheduling agent that executes autonomously can spend money, commit resources, or damage relationships. When models call models, errors cascade. When agents make decisions, accountability blurs. Each layer of autonomy compounds the uncertainty.

This creates a unique strategic dilemma. You need to expose the system to the world to learn its real behaviour, but the very exposure that generates learning also exposes users to risk. The tighter the feedback loops you need for improvement, the higher the potential cost of early errors. And the higher the stakes, the more reluctant users become to participate in that learning process.

This tension sits at the heart of what I call the Deployment Paradox.

The Deployment Paradox Defined

Here’s the paradox: You need trust to deploy, but you need deployment to earn trust.

The data from real usage enables model improvement and product refinement, yet you can’t access that data without first convincing users to engage. Trust, deployment, and quality form a circular dependency.

In traditional software, this loop doesn’t exist. Deterministic systems produce consistent outputs (input X always yields output Y), so trust is binary: the system works or it doesn’t. AI systems are probabilistic. The same input can yield different outcomes, and even an 85-percent-accurate system fails 15 percent of the time. Users must learn when to trust, when to verify, and when to ignore. That intuition can’t be engineered in advance; it requires experience across many interactions in diverse contexts.
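To make the 15-percent figure concrete, here is a worked calculation. It rests on a simplifying assumption: each interaction fails independently with probability 0.15.

$$
P(\text{at least one failure in } n \text{ interactions}) = 1 - 0.85^{n},
\qquad
1 - 0.85^{10} \approx 1 - 0.197 \approx 0.80
$$

Under that assumption, a user has roughly an 80 percent chance of meeting at least one failure within their first ten interactions, and expects 10 × 0.15 = 1.5 failures on average. Calibration therefore has to be learned early; it cannot be deferred until the system "stops failing."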

Autonomy then amplifies the problem. The further AI moves from assisting to acting, the more its failures carry material consequences. Wrong suggestions can be dismissed; wrong actions cost money, time and reputation. Networks of agents interacting with each other multiply both complexity and uncertainty.
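The claim that errors cascade when agents call agents can be made concrete with a small calculation. The sketch below makes two assumptions. First, each agent in a chain succeeds independently with a fixed probability, which is a simplification because real failures are often correlated. Second, the function name `pipeline_success` is hypothetical.

```python
from math import prod


def pipeline_success(step_reliabilities: list[float]) -> float:
    """Probability that every step in a chain of agents succeeds.

    Assumes each step fails independently of the others; correlated
    failures would change the result in either direction.
    """
    for r in step_reliabilities:
        if not 0.0 <= r <= 1.0:
            raise ValueError(f"reliability must be in [0, 1], got {r}")
    # With independence, the chain succeeds only if all steps succeed,
    # so the per-step reliabilities multiply.
    return prod(step_reliabilities)


if __name__ == "__main__":
    single = pipeline_success([0.95])
    chained = pipeline_success([0.95] * 5)
    print(f"one agent: {single:.3f}, five chained agents: {chained:.3f}")
```

Under these assumptions, five agents that are each 95 percent reliable complete a task end to end only about 77 percent of the time. Each added layer of autonomy widens the gap between per-step and end-to-end reliability.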

The paradox intensifies in high-stakes domains (legal, medical, financial), where trust must exist before deployment. These are precisely the fields where learning through mistakes is least tolerable, yet where deployment-based learning is most needed.

In practice, however, this loop is easier to navigate in sectors with robust risk governance. Health and finance organizations, for example, have spent decades developing data governance frameworks and clear thresholds for what constitutes acceptable risk.

That’s why the central question in AI strategy isn’t “when is the technology ready?” but “how do we deploy imperfect AI that will make it ready?”

This reframes AI deployment as a strategic and psychological problem rather than a purely technological one. Success depends less on having a perfect model than on sequencing deployment to compound trust, through constrained features, transparency, and recoverability.

AI deployment is less a technological problem than a strategic one: how to deploy imperfect systems in ways that compound trust faster than they fail.

TL;DR

Complex systems emerge through feedback loops and adjacent possibilities over time, not through revolutionary deployment. For AI systems, this pattern intensifies because probabilistic outputs and increasing autonomy make trust calibration essential.

The mechanism is trust compounding through three sequential psychological shifts that are fundamentally constraint design strategies: from skepticism to confidence (prove reliability in constrained domains), from opacity to calibration (communicate constrained promises honestly), and from critic to collaborator (create constrained failure environments). Each shift strategically limits scope to build intentional perceived control.

Success comes from (i) honest constraint assessment, (ii) strategic sequencing based on these trust mechanisms, and (iii) recognising the rare moments when revolutionary launch becomes viable.

1.1. Complexity Science: Why Step Functions Are Nowhere in the Wild

In 1992, Mitchell Waldrop published “Complexity: The Emerging Science at the Edge of Order and Chaos,” documenting how physicists, economists, and biologists at the Santa Fe Institute discovered a shared principle: complex systems cannot be designed top-down or deployed all at once. They must evolve through feedback loops, adaptation, and time. Bill Gurley once called it “the most important book for understanding technology markets,” because it shows that the same principles governing natural evolution also govern how innovation reaches scale.

Crucially, we must distinguish complex systems from merely complicated ones. The Panama Canal is complicated (many interdependent parts) but not complex: its behaviour is deterministic and fully specifiable. Markets and AI systems are complex: behaviour emerges from countless interactions that cannot be (fully) predicted. Revolutionary deployment works for complicated systems where outcomes are specifiable, but fails for complex ones where outcomes must emerge through use.

Three converging insights explain why:

- Innovation Moves Through Stepping Stones (Kauffman’s “Adjacent Possible”): Innovation advances one adjacency at a time. Stuart Kauffman showed that life couldn’t leap from simple molecules to DNA overnight; each reaction only made nearby reactions possible. The same principle governs technology.

  Even when breakthroughs seem sudden (penicillin, the transistor, CRISPR), their deployment required enabling conditions already in place. The iPhone felt revolutionary but depended on a web of mature adjacencies: capacitive touchscreens, ARM processors, 3G networks, lithium-ion batteries. Without them, it would have been impossible in 1995.

  Operational takeaway: You can’t deploy into non-existent adjacencies. Each product release must prepare the substrate (data pipelines, infrastructure, user understanding) that makes the next adjacency reachable.

- Systems Balance Structure and Fluidity (Langton’s “Edge of Chaos”): Chris Langton demonstrated that adaptive systems survive in a narrow zone between rigidity and randomness. Too rigid, and they stagnate; too chaotic, and they disintegrate.

  Applied to technology, rigidity is over-engineering (frozen specs, long release cycles); chaos is perpetual pivoting and breaking changes. Success lies in structured fluidity: core interfaces remain stable while internals evolve.

  Operational takeaway: Design deployments with a stable core (APIs, mental models) and a flexible periphery (features, models, UI). Users can absorb change when a stable structure anchors it.

- Complexity Emerges From Iteration (Holland’s Genetic Algorithms): John Holland showed that complex behaviour can’t be pre-specified; it emerges through variation, selection, and heredity across iterations. Each generation encodes what worked before, producing designs no human could have planned in advance.

  Applied to technology, innovation scales through the same evolutionary loop. Products, teams, and models evolve by recombining what has proven effective. Each iteration encodes new knowledge about users, data, and edge cases, gradually refining both capability and resilience.

  Operational takeaway: Complexity isn’t delivered; it’s discovered through iteration. Each deployment tests an assumption, and the tighter the feedback and inheritance loops, the faster capability compounds.
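Holland’s loop of variation, selection, and heredity can be seen in miniature in a toy genetic algorithm. The Python sketch below is an illustration only, not Holland’s original formulation. It evolves bit strings toward the hypothetical “OneMax” objective, which rewards the number of 1s. The loop uses tournament selection, one-point crossover, bit-flip mutation, and elitism. All names and parameter values are assumptions chosen for readability.

```python
import random

# Hypothetical parameters chosen for illustration.
GENOME_LENGTH = 20
POP_SIZE = 30
GENERATIONS = 40
MUTATION_RATE = 1 / GENOME_LENGTH


def fitness(genome: list[int]) -> int:
    # Toy objective ("OneMax"): the number of 1-bits stands in for "what worked".
    return sum(genome)


def tournament(pop: list[list[int]], rng: random.Random, k: int = 3) -> list[int]:
    # Selection: the fittest of k randomly drawn candidates becomes a parent.
    return max(rng.sample(pop, k), key=fitness)


def crossover(a: list[int], b: list[int], rng: random.Random) -> list[int]:
    # Heredity: the child inherits a prefix from one parent and a suffix from the other.
    cut = rng.randrange(1, GENOME_LENGTH)
    return a[:cut] + b[cut:]


def mutate(genome: list[int], rng: random.Random) -> list[int]:
    # Variation: each bit flips independently with a small probability.
    return [1 - g if rng.random() < MUTATION_RATE else g for g in genome]


def evolve(seed: int = 0) -> list[int]:
    """Run the loop and return the best fitness seen in each generation."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)] for _ in range(POP_SIZE)]
    history = []
    for _ in range(GENERATIONS):
        best = max(pop, key=fitness)
        history.append(fitness(best))
        # Elitism: carry the best genome forward unchanged so progress is never lost.
        children = [best]
        while len(children) < POP_SIZE:
            child = crossover(tournament(pop, rng), tournament(pop, rng), rng)
            children.append(mutate(child, rng))
        pop = children
    history.append(fitness(max(pop, key=fitness)))
    return history


if __name__ == "__main__":
    print(evolve(seed=0))
```

Calling `evolve(seed=0)` returns the best fitness in each generation. Because the best genome is always carried forward, that history never decreases. Each generation inherits what worked before, which is the heredity half of the loop.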

The Unified Principle: Complex systems must evolve through structured iteration, not revolutionary design. Kauffman explains why (you can only move through adjacent possibilities). Langton explains how (balance structure and fluidity). Holland explains the mechanism (variation, selection, heredity over time).

For AI systems, these constraints intensify. Probabilistic outputs mean more uncertainty to resolve. User adaptation requires developing mental models of capability boundaries. The system itself learns from deployment, creating genuine emergence rather than revealing pre-programmed behaviour. Step functions don’t just risk failure for AI; they contradict fundamental principles of how these systems develop.

1.2. Trust Accumulation: The Psychological Shifts

The paradox resolves through strategic constraint design. If you can’t deploy without trust, constrain what must be trusted: limit scope, clarify boundaries, and make mistakes survivable.

These three levers (domain, promise, and consequence) turn a circular dependency into compounding confidence.

AI systems require two kinds of trust simultaneously:

- Accumulation: belief that the system works reliably across time.
- Calibration: intuition about when to trust specific outputs.

Traditional software needs only accumulation (it either works or doesn’t). AI needs both, and both emerge only through interaction. Effective deployment builds them sequentially through three shifts.

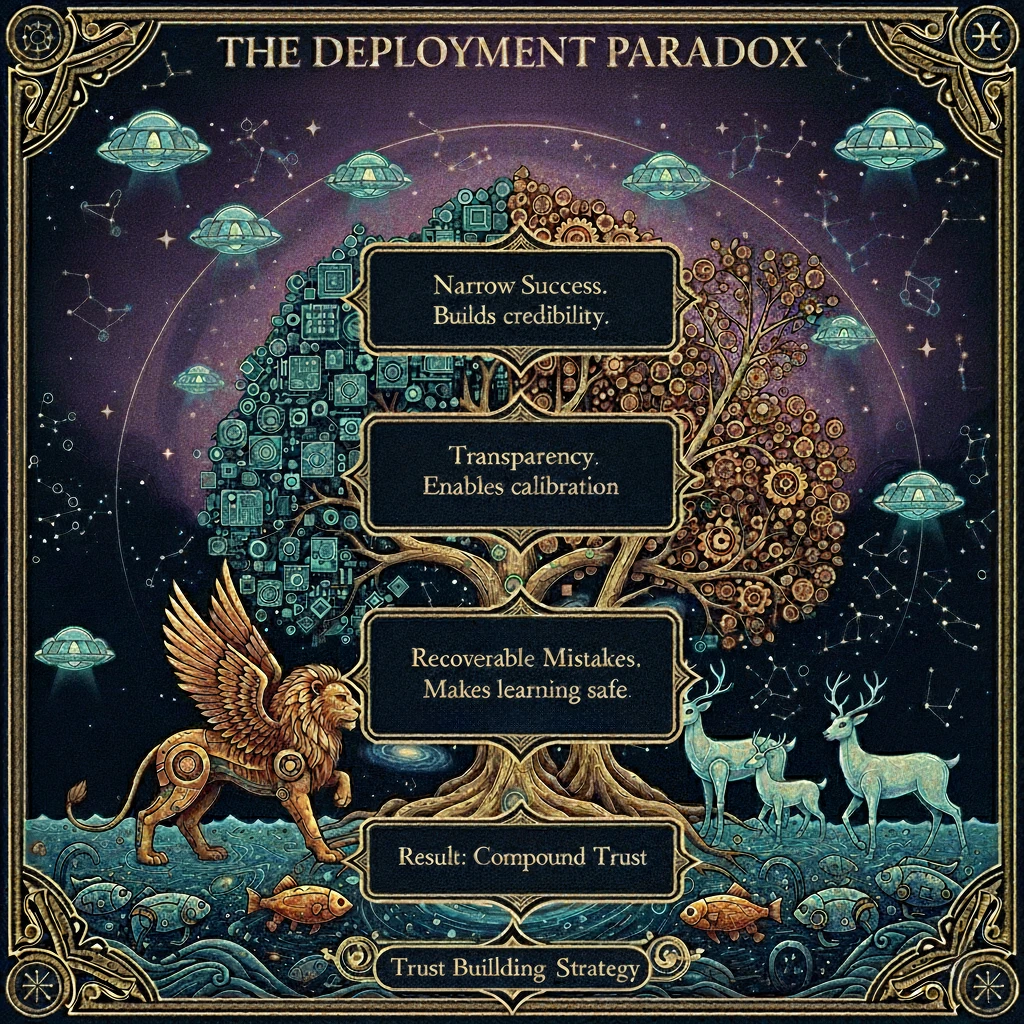

Effective constraint design builds both dimensions through three sequential mechanisms. Each mechanism constrains a different aspect to create intentional perceived control:

- Narrow success constrains domain (where you deploy): prove reliability in focused contexts.
- Transparency constrains promises (what you claim): communicate boundaries honestly.
- Recoverable mistakes constrain impact (the consequence of failure): create safe learning environments.

These aren’t independent tactics but compounding mechanisms. Each enables the next, creating trust that expands across progressively broader capability.


Shift 1: From Skepticism to Confidence - Narrow Success

When an AI performs consistently in a constrained domain, users infer its underlying competence. Empirical reliability replaces abstract promises.

Jimini.ai exemplifies this in legal AI. Rather than claiming general legal capability, they focused narrowly on document productivity: drafting, summarisation, extraction. Every AI suggestion includes citations showing exactly which document sections support each claim. Lawyers verify sources instantly, turning traceability into trust. The narrow focus creates a learning loop: the AI learns lawyers’ terminology and reasoning patterns, improving relevance, which drives more usage and richer training data. The mechanism works because narrow competence is legible: users verify claims directly rather than trusting credentials.

Operational takeaway: Start narrower than feels comfortable. Domain precision makes reliability legible.

Shift 2: From Opacity to Calibration - Transparent Boundaries

Acknowledging what your system cannot do increases trust more than emphasising capabilities. Signaling self-awareness implies reliability under uncertainty.

Sierra, the AI customer service platform valued at $10 billion, uses a multi-model architecture that monitors confidence in real time. When confidence is high (account queries, order tracking), the AI handles the request fully. When confidence is low (complex complaints, nuanced policy, emotional situations), the system explicitly says “I want to make sure you get the best help; let me connect you to a specialist” and escalates. This transparency signals calibrated capability. Users learn to trust the AI for routine matters while complex issues receive human attention. Explicitly acknowledging limitations builds more trust than attempting universal competence.
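A confidence-gated handoff of this kind can be sketched in a few lines. This is not Sierra’s implementation, which is not described here. The intent names, the 0.85 threshold, and the `route` function are hypothetical, and the confidence score is assumed to come from an upstream model.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical values for illustration; a real system would tune these per intent.
ROUTINE_INTENTS = {"account_query", "order_tracking"}
CONFIDENCE_THRESHOLD = 0.85
HANDOFF_MESSAGE = (
    "I want to make sure you get the best help; "
    "let me connect you to a specialist."
)


@dataclass
class Decision:
    handler: str                   # "ai" or "human"
    message: Optional[str] = None  # what the user is told on escalation


def route(intent: str, confidence: float) -> Decision:
    """Let the AI act only inside its stated competence zone.

    Anything outside the routine intents, or below the confidence
    threshold, is escalated with an explicit, honest handoff.
    """
    if intent in ROUTINE_INTENTS and confidence >= CONFIDENCE_THRESHOLD:
        return Decision(handler="ai")
    return Decision(handler="human", message=HANDOFF_MESSAGE)


if __name__ == "__main__":
    print(route("order_tracking", 0.93))
    print(route("complex_complaint", 0.97))
```

The competence zone in this sketch is explicit. An intent outside `ROUTINE_INTENTS` escalates even at high confidence, which keeps the AI’s promises narrower than its raw capability.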

Research validates this counterintuitive insight. Teams at DeepMind and Inflection discovered through deployment experience, and Ehsan et al. confirmed through formal research, that users don’t need AI to hide its failures: they need AI to be useful despite them (see our article on systemic errors). Instead of concealing imperfections, designers should expose them in useful ways so users can understand, anticipate, and mitigate failures.

Operational takeaway: Expose uncertainty instead of concealing it. Users calibrate through visibility, not perfection.

Shift 3: From Critic to Collaborator - Recoverable Mistakes

In early deployment, every error is data, but only if it doesn’t destroy trust. Gradual, low-stakes contexts make mistakes recoverable, and turn users into co-learners.

Runway, the AI video generation platform valued at $3 billion, launched with constraints that made errors recoverable. Rather than promising production-quality output, they started with 3-second clips, basic tools, simple edits. When outputs missed the mark, creators regenerated clips or refined prompts. Low stakes (music videos, social content, VFX tests) meant errors rarely blocked production.

This created a dynamic where users became collaborators. Wrong motion artifact? Try again. Because outputs were constrained, mistakes had a limited blast radius: a failed 3-second clip doesn’t ruin a project. This recoverability gave Runway permission to expand progressively: from 3-second clips to 10+ second videos, from simple edits to full scene generation, from individual creators to major studio partnerships such as Lionsgate. Early users who experienced recoverable mistakes at small scale trusted the expansion to higher-stakes applications.

Recoverability depends on three factors: stakes magnitude, error observability, and correction feedback. Low-stakes domains permit frequent errors. High observability allows quick correction. Tight feedback loops turn errors into assets. Gradual deployment lets teams select contexts where all three factors align favourably.
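One way to operationalise the three factors is as a gate that every candidate context must pass before deployment. The sketch below is a hypothetical illustration. The `Context` fields, threshold values, and example contexts are assumptions, not measured data; the contexts are loosely modelled on the Runway use cases above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    name: str
    error_cost: float      # stakes magnitude: rough cost of one error (arbitrary units)
    observability: float   # chance an error is noticed before it matters, 0..1
    feedback_hours: float  # time until a correction feeds back into the product


# Hypothetical thresholds; each team would set its own.
MAX_ERROR_COST = 10.0
MIN_OBSERVABILITY = 0.8
MAX_FEEDBACK_HOURS = 24.0


def is_recoverable(ctx: Context) -> bool:
    # All three factors must align; one strong factor cannot offset a weak one.
    return (
        ctx.error_cost <= MAX_ERROR_COST
        and ctx.observability >= MIN_OBSERVABILITY
        and ctx.feedback_hours <= MAX_FEEDBACK_HOURS
    )


def launch_candidates(contexts: list[Context]) -> list[str]:
    # Deploy first where errors are cheap, visible, and quickly corrected.
    return [c.name for c in contexts if is_recoverable(c)]


contexts = [
    Context("social clip draft", error_cost=1, observability=0.95, feedback_hours=0.1),
    Context("client campaign final cut", error_cost=500, observability=0.9, feedback_hours=48),
    Context("VFX test shot", error_cost=5, observability=0.85, feedback_hours=2),
]
print(launch_candidates(contexts))  # → ['social clip draft', 'VFX test shot']
```

The sketch requires all three checks to pass rather than summing a weighted score. This mirrors the point that the factors must align: a highly visible error is still not recoverable if it is expensive.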

Operational takeaway: Design for reversible consequences. Low-cost errors fuel compounding insight.

Together, these shifts form a progressive sequence: narrow success earns credibility, transparency converts credibility into calibrated trust, and recoverable mistakes turn calibration into confidence.

Gradual deployment builds both accumulation (confidence over time) and calibration (judgment across contexts). The next section explores how these mechanisms compound to create durable, system-level trust.

1.3. The Compound Effect: Why Sequential Trust Beats Simultaneous Trust

These mechanisms don’t operate independently; they compound through reinforcing dynamics:

- Narrow success makes users receptive to transparency about limitations. When users see the system excel in a constrained domain, they develop confidence in its underlying capability. This confidence makes them willing to accept stated limitations in adjacent areas. Jimini’s lawyers trust document citations in productivity tasks, which makes them comfortable when Jimini says “I don’t yet handle legal research.” The narrow success proves the team knows what they’re doing; the limitation transparency proves they know what they’re not yet doing. Without the initial narrow success, limitation acknowledgment reads as excuse-making. With it, limitation acknowledgment reads as honest calibration.

- Transparency about limitations makes mistakes recoverable. When users understand a system’s boundaries, errors within those boundaries don’t destroy trust; they confirm the system’s self-awareness. An AI customer service agent that says “I handle account queries, not complex complaints” and then makes an error on an account query is forgivable: it failed within its stated competence zone. The same error from a system claiming universal capability would be unforgivable. The transparency creates a safety net: mistakes become data points that refine understanding rather than trust violations that demand abandonment.

- Recoverable mistakes enable expansion to adjacent domains. When errors generate learning rather than abandonment, teams can progressively expand scope with user confidence intact. Each mistake teaches both the system (better models) and users (refined mental models of capability). This creates permission for incremental capability expansion: “They mastered document drafting, acknowledged limitations in research, and fixed issues quickly when they arose. Now they’re adding research capability, so I’ll try it carefully.” The recovery pattern established in the initial narrow domain carries forward.

The compounding creates trust that expands across progressively broader capability:

narrow success (linear trust in one domain) + transparency (extends that trust to users’ assessment of adjacent areas) + recoverable mistakes (maintains trust through errors) = expanding confidence.

Revolutionary launch tries to build all trust simultaneously: competence across all domains, transparency about all capabilities, recovery from all mistakes. This simultaneous trust requirement explains why revolutionary launches fail more often than their capabilities would predict. The product might be technically excellent but trust-deficient because users haven’t had time to develop confidence through experience. More critically, users haven’t experienced the reinforcing pattern that makes each mechanism amplify the others.

Gradual deployment sequences trust building: first narrow competence (prove underlying quality), then transparent boundaries (prove calibrated self-awareness), then recovery confidence (prove learning from errors). Each stage enables the next. The sequence creates compound trust that revolutionary launch cannot replicate.

What This Means: From Theory to Practice

We’ve established the foundation: complexity science reveals why step functions contradict natural law, trust accumulation explains how gradual deployment creates compound confidence, and AI’s probabilistic nature amplifies both challenges.

But understanding why gradual deployment works doesn’t tell you how to execute it within your specific constraints.

Indeed, every AI deployment faces a unique constraint profile that determines which strategies are viable. Some systems can iterate daily. Others face months between experiments. Some failures affect only your team. Others cascade through ecosystems. Understanding your specific constraints reveals whether rapid iteration or deliberate planning matches your reality.

Part 2 explores the strategic framework: the five forces that define your deployment metabolism, how constraints combine across product physics, customer archetype, and lifecycle stage, and when revolutionary launch actually beats gradual deployment despite everything we’ve covered here.

The deployment paradox resolves when you match strategy to constraints. Theory illuminates what’s possible. Constraint diagnosis reveals what’s viable. Strategic execution determines what succeeds.
