The future belongs to people who can just do things

There, I said it. I seriously can’t see a path forward where the majority of software engineers are doing artisanal hand-crafted commits by as soon as the end of 2026. If you are a software engineer and were considering taking a gap year/holiday this year it would be an

Geoffrey Huntley

I've been a software engineer in various capacities since the 1980s. Even while running conferences for the past 20 years, I've never stopped coding—whether building platforms, developer tools, or systems to support our events. Over the decades, I've witnessed revolutions in software engineering, like computer-aided software engineering, which always struck me as an oxymoron. After all, isn't all software engineering computer-aided? However, back then, before the advent of personal computers and workstations, we had batch computing, and software engineering was a distinct process for programming remote machines.

These revolutions in software engineering practices have been transformative; however, the last major shift occurred nearly 40 years ago. I believe we're now in the midst of another profound revolution in how software is created. This topic has been on my mind a lot, and Geoff's article resonated deeply with me. Intrigued, I looked him up on LinkedIn and was surprised to find he’s based in Sydney. The next day, we were on the phone, and thank goodness long-distance calls are no longer billed by the minute, because Geoff and I have had many lengthy conversations since.

Geoff has been incredibly generous with his time. He kindly joined us in Melbourne a few weeks ago for an unconference at Deakin, which some of you attended. More importantly, he’s not just theorising about the future of software engineering; he’s actively putting those ideas into practice. His deep thinking and hands-on approach make him the perfect person to explore what lies ahead for our field.

So I've asked him to come here to talk about that. We may never see him again. He's off to San Francisco to work for Sourcegraph.

Thank you all for joining us on this Friday. This talk will be somewhat intense, but it follows a clear arc and serves a purpose.

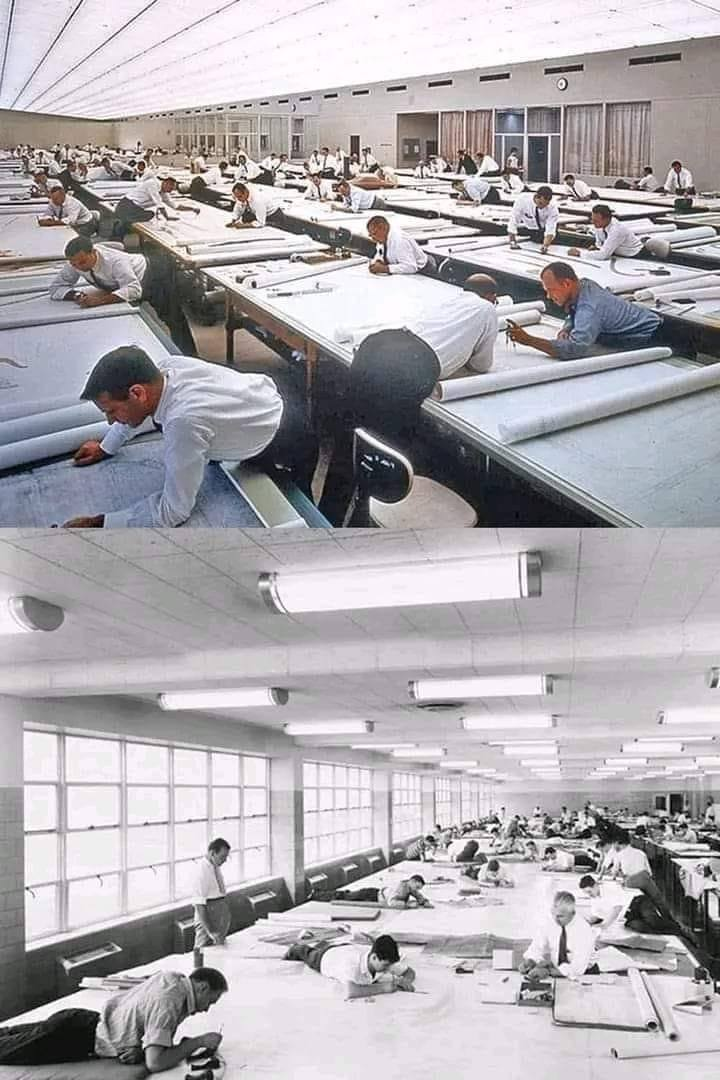

I see software engineering transforming in a similar way to what happened in architecture. Before tools like AutoCAD, rooms full of architects worked manually. Afterwards, architects continued to exist, but their roles and identities evolved. We’re experiencing a similar shift in our field right now.

I’d like to thank today’s speakers. Giving talks is always challenging, no matter how experienced you are. It gets easier with practice, though, so if you’re considering delivering one, I encourage you to go for it - it’s incredibly rewarding.

Let’s get started. About six months ago, I wrote a blog post titled The Future Belongs to People Who Do Things. Despite any confidence I may project, I don’t have all the answers about where this is heading. What I do know is that things are changing rapidly. Faster than most people realise. If AI and AI developer tooling were to cease improving today, then it would already be good enough to disrupt our profession completely.


We are in an "oh fuck" moment in time. That blog post, published in December, was my first on the transformations AI will have for software engineers and businesses. As we go through this talk, you might find yourself having one of those moments, too, if you haven’t already.

An “oh fuck” moment in time

Over the Christmas break, I’ve been critically looking at my own software development loop, learning a new programming language, and re-learning a language I haven’t used professionally in over seven years. It’s now 2025. Software assistants are now a core staple of my day-to-day life as a staff

Geoffrey Huntley

It all began when an engineering director at Canva approached all the principal engineers and said, “Hey, can you dive deep into AI over the Christmas break?” My initial reaction was, “Okay, I’ve tried this all before. It wasn’t that interesting.”

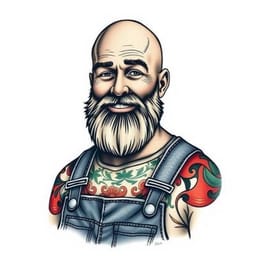

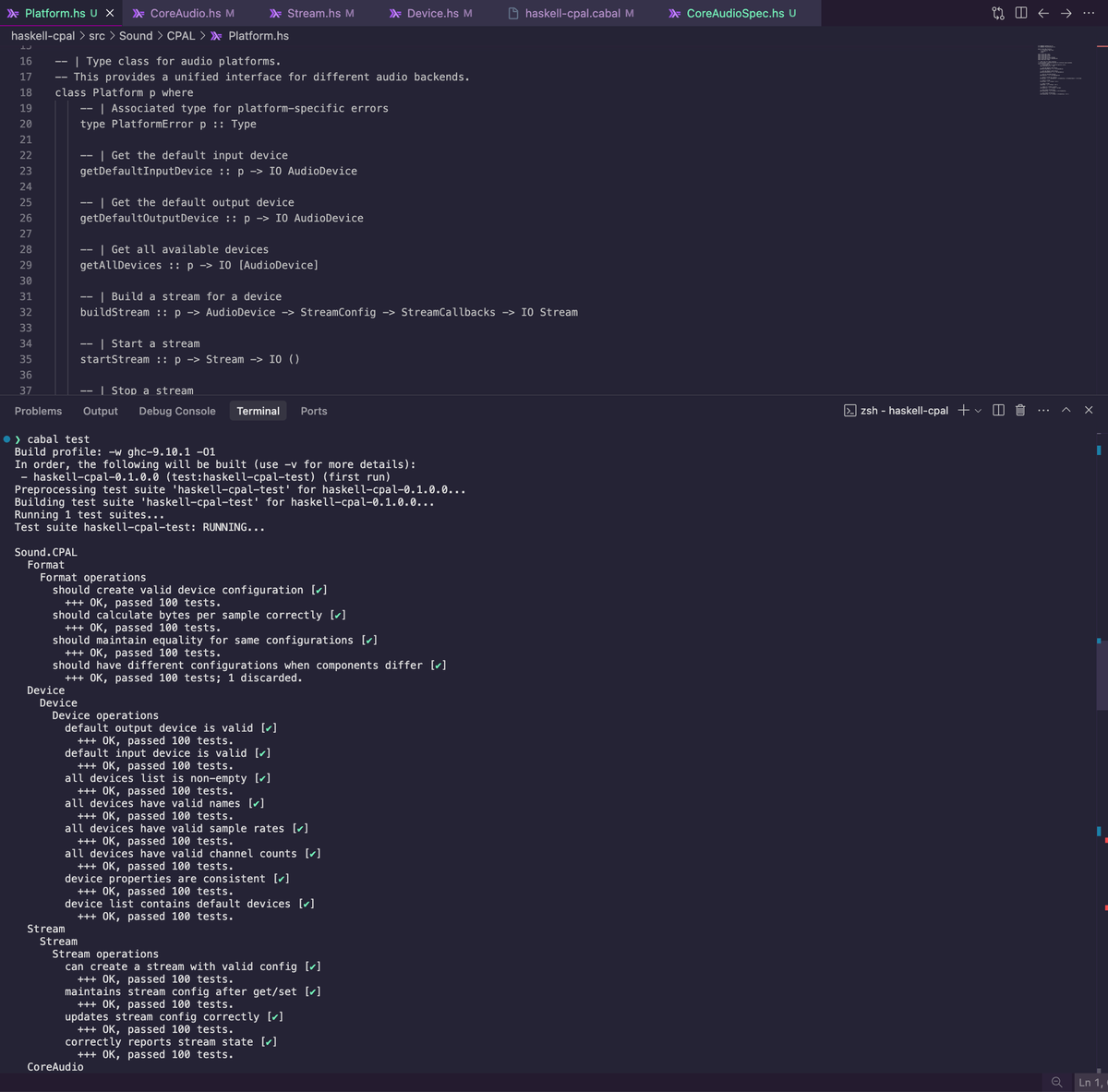

So, I downloaded Windsurf and asked it to convert a Rust audio library to Haskell using GHC 2024.

I told it to use Hoogle to find the right types and functions, and to include a comprehensive test suite with Hspec and QuickCheck.

I instructed it to run a build after every code change.

I also instructed it to write tests and automate the process for me. I had heard it was possible to set up a loop to automate some of these tasks, so I did just that.

I took my kids to the local pool, left the loop running,

and when I returned, I had a fully functioning Haskell audio library.

Now, that’s wild. Absolutely wild.

You’re probably wondering why I’d build an audio library in Haskell, of all things, as it’s arguably the worst choice for audio processing. The reason is that I knew it wasn’t trivial. I’m constantly testing the limits of what’s possible, trying to prove what this technology can and cannot do. If it had just regurgitated the same Rust library or generated something unoriginal, I wouldn’t have been impressed. But this?

This was a Haskell audio library for Core Audio on macOS, complete with automatically generated bindings to handle the foreign function interface (FFI) between functional programming and C. And it worked.

I was floored.


So, I wrote a blog post about the experience, with this as the conclusion...

From this point forward, software engineers who haven’t started exploring or adopting AI-assisted software development are, frankly, not going to keep up. Engineering organizations are now divided between those who have had that "oh fuck" moment and those who have not.

In my career, I’ve been fortunate to witness and navigate exponential change. With a background in software development tooling, I began writing more frequently. I could see patterns emerging.

I realised we need better tools: tools that align with the primitives shaping our world today. The tools we currently rely on feel outdated and don't make sense for the primitives that presently exist. They have been designed for humans first and built upon historical design decisions.

I wrote a follow-up blog post, and back in January, my coworkers at Canva thought I was utterly crazy.

Multi Boxing LLMs

Been doing heaps of thinking about how software is made after https://ghuntley.com/oh-fuck and the current design/UX approach by vendors of software assistants. IDEs, since 1983, have been designed around an experience of a single plane of glass. Restricted by what an engineer can see on their

Geoffrey Huntley

I was saying, "Hey, hold on, hold on. Why are we designing tools for humans?"

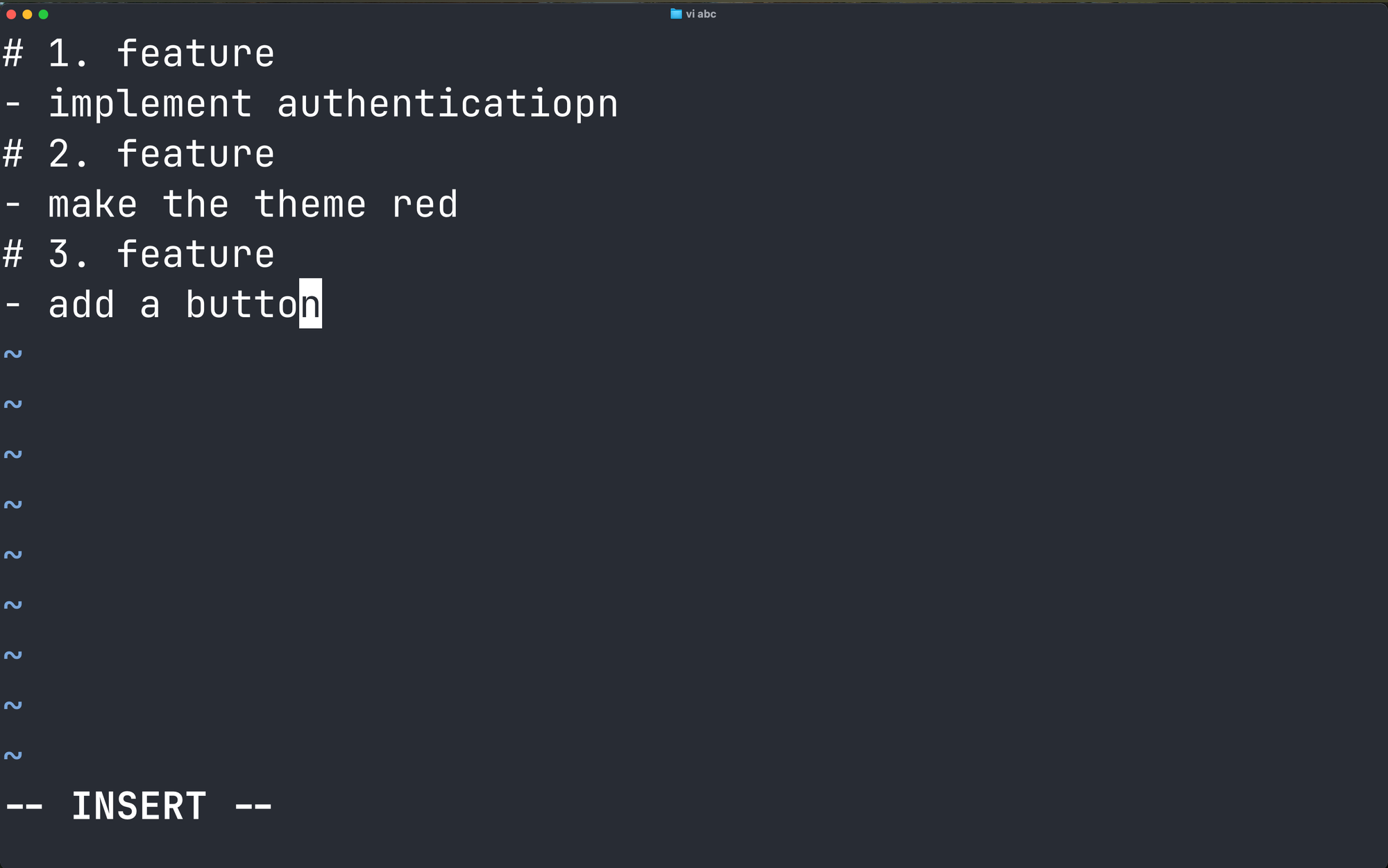

Think about it: what is an IDE?

An IDE is essentially a typewriter - a single pane of glass we type into.

It feels strange that the entire industry is cramming all these AI tools into the IDE.

It doesn't seem right.

It's like a high-powered tool paired with a low-powered interface.

This interface has remained essentially unchanged since Turbo Pascal was introduced in 1983.

Has AI rendered IDEs obsolete?

from Luddites to AI: the Overton Window of disruption

I’ve been thinking about Overton Windows lately, but not of the political variety. You see, the Overton window can be adapted to model disruptive innovation by framing the acceptance of novel technologies, business models, or ideas within a market or society. So I’ve been pondering about where, when and how

Geoffrey Huntley

So, I started thinking along these lines:

What has changed?

What is changing?

What if we designed tools around AI first and humans second?

Then I dug deeper.

I thought, "Why does an engineer only work on one story at a time?"

In my youth, I played World of Warcraft. Anyone familiar with World of Warcraft knows about multi-boxing, where you control multiple characters simultaneously on one computer.

I realised, "Wait a second. What if I had multiple instances of Cursor open concurrently? Why are we only working on one story?"

Why is this the norm?

When I discussed this with coworkers, they were stuck thinking at a basic level, like, "What if I had one AI coworker?"

They hadn't yet reached the point of, "No, fam, what if you had a thousand AI coworkers tackling your entire backlog all at once?"

That's where Anni Betts comes in.

Anni Betts was my mentor when I began my career in software engineering.

Much of the software you use daily - Slack, the GitHub Desktop app, or the entire ecosystem of software updaters - that's Anni's work.

She's now at Anthropic.

When certain people of her calibre say or do something significant, I pay attention.

Two people I always listen to are Anni Betts and Eric Meyer.

And here's the thing: all the biggest brains in computer science, the ones who were retired, are now coming out of retirement.

Big moves are happening here. Our profession stands at a crossroads. It feels like an adapt-or-perish moment, at least from my perspective.

It didn’t take long for founders to start posting blogs and tweets declaring, “I’m no longer hiring junior or mid-level software engineers.”

Shopify quickly followed suit, stating, “At Shopify, using AI effectively is no longer optional - it’s a baseline expectation for employment.”

A quote from the Australian Financial Review highlights how some divisions embraced this AI mandate a bit too enthusiastically. Last week, Canva informed most of its technical writing team that their services were no longer needed.

Let me introduce myself. I’m Geoff, previously the AI Developer Productivity Tech Lead at Canva, where I helped roll out AI initiatives. Two weeks ago, I joined Sourcegraph to build next-generation AI tools. I'll be heading out to San Francisco tomorrow morning after this talk and will be joining the core team behind https://ampcode.com/.

Given that these tools will have significant societal implications, I feel compelled to provide clarity and guidance to help others adapt.

Regarding my ponderoos, it’s all available on my website for free. Today, I’ll be synthesising a six-month recap that strings them together into a followable story.

After publishing a blog post stating that some people won’t make it in this new landscape, colleagues at Canva approached me, asking, “Geoff, what do you mean some people won’t make it?” Let me explain through an example.

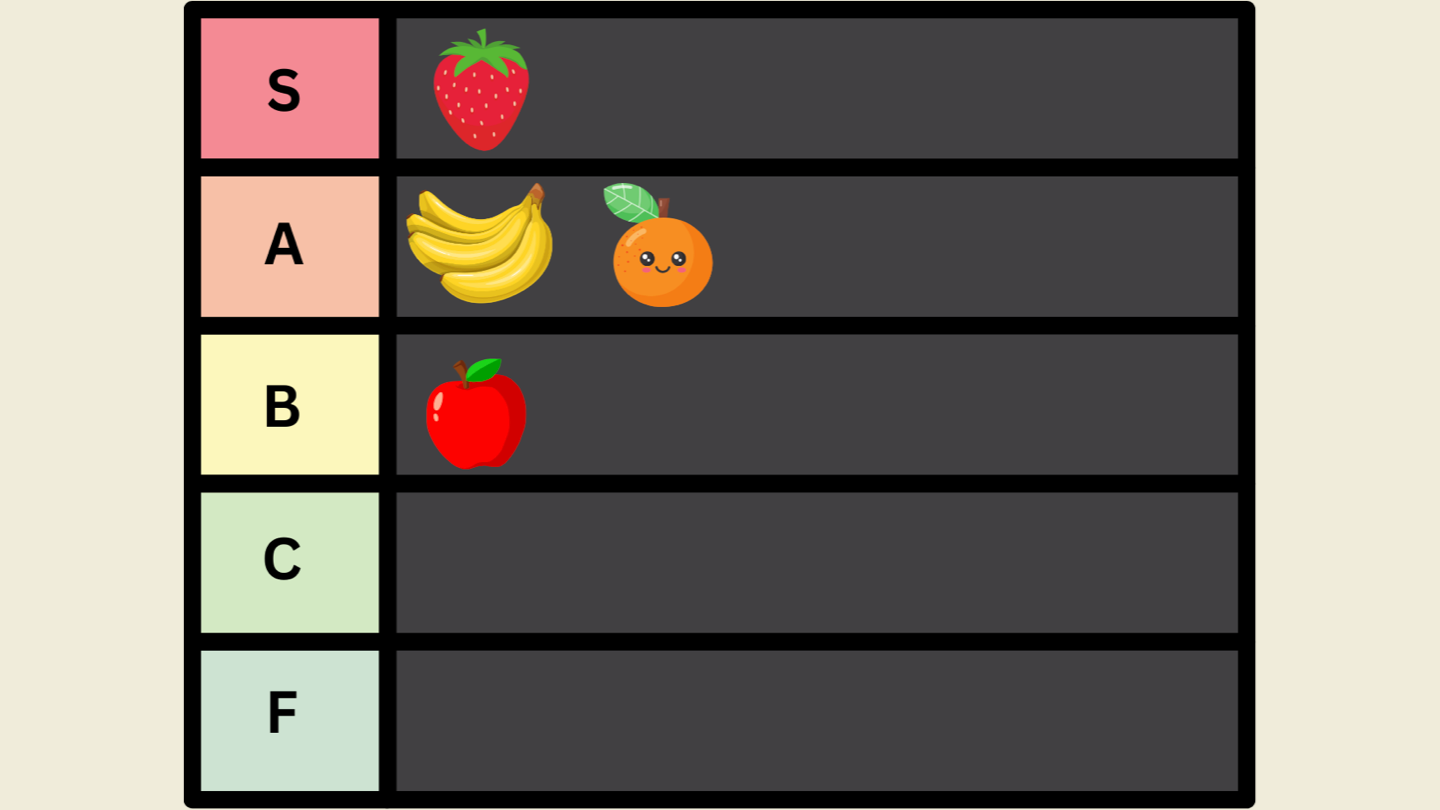

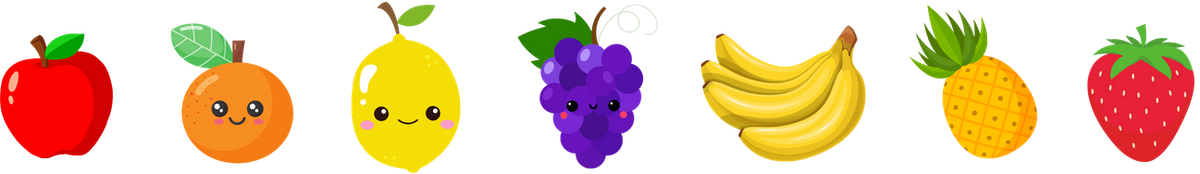

At Fruitco, a fictional company, there are seven software developers, and the company conducts six-month performance cycles, a common practice across industries. It’s tempting to blame a single company, but AI tools are now accessible with a credit card. These dynamics will unfold over time, faster at some companies, slower at others.

Unfortunately, Lemon doesn’t survive the performance cycle because they underperform.

Another cycle passes, and Orange and Strawberry, typically high performers, are shocked to receive low performance ratings. Stunned, they begin searching for ways to gain a competitive edge. They download tools like Cursor, Windsurf or Amp and start exploring their capabilities.

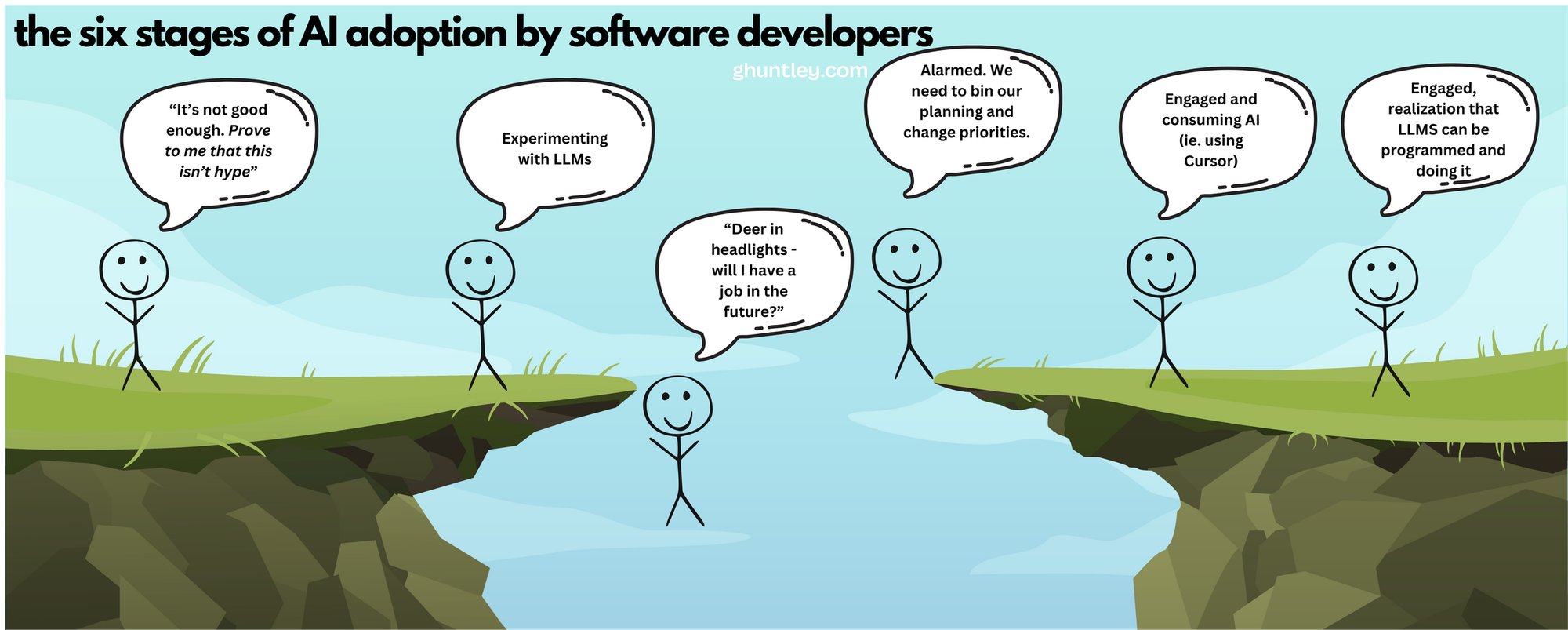

This is where it gets interesting. Through my research within the organisation, I mapped out the stages of AI adoption among employees. I was once like Pineapple, sceptical and demanding proof that AI was transformative. When I first tried it, I found it lacking and simply not good enough.

However, the trap for seasoned professionals, like a principal engineer, is trying AI once and dismissing it, ignoring its continuous improvement. AI tools, foundation models, and capabilities are advancing every month. When someone praises AI’s potential, it’s easy to brush it off as hype. I did that myself.

Six months later, at the next performance cycle, Pineapple and Grape find themselves at the bottom of the performance tier: surprising, given their previous top-tier status. Why? Their colleagues who adopted AI gained a significant productivity boost, effectively outpacing them. Naturally, Pineapple and Grape’s performance ratings suffered in comparison.

Banana, noticing this shift, begins to take AI seriously and invests in learning its applications. The earlier you experiment with AI, the greater the compounding benefits, as you discover its strengths and limitations.

Unfortunately, after the next performance cycle, the outcomes are predictable. Grape fails to adapt to the evolving engineering culture and is no longer with the company.

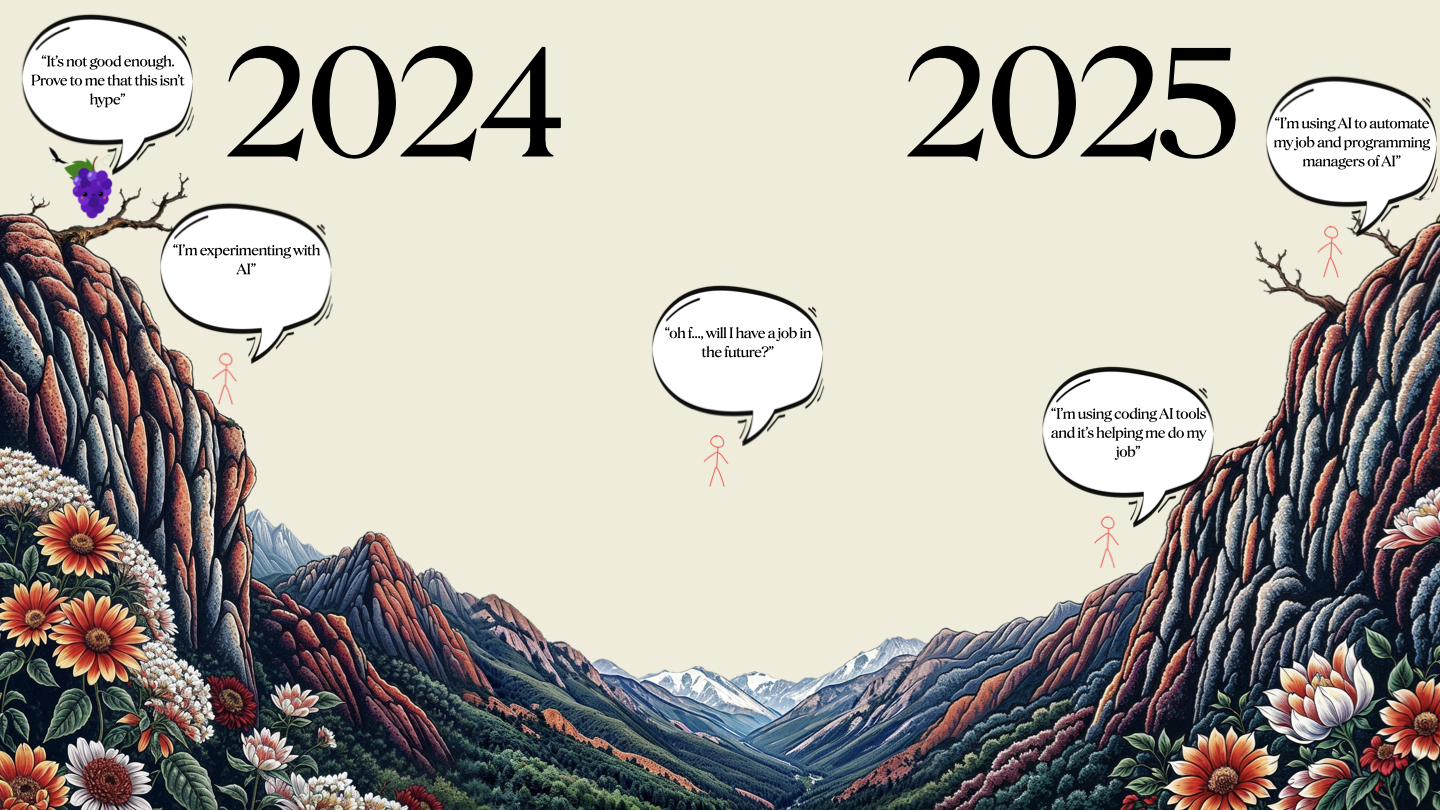

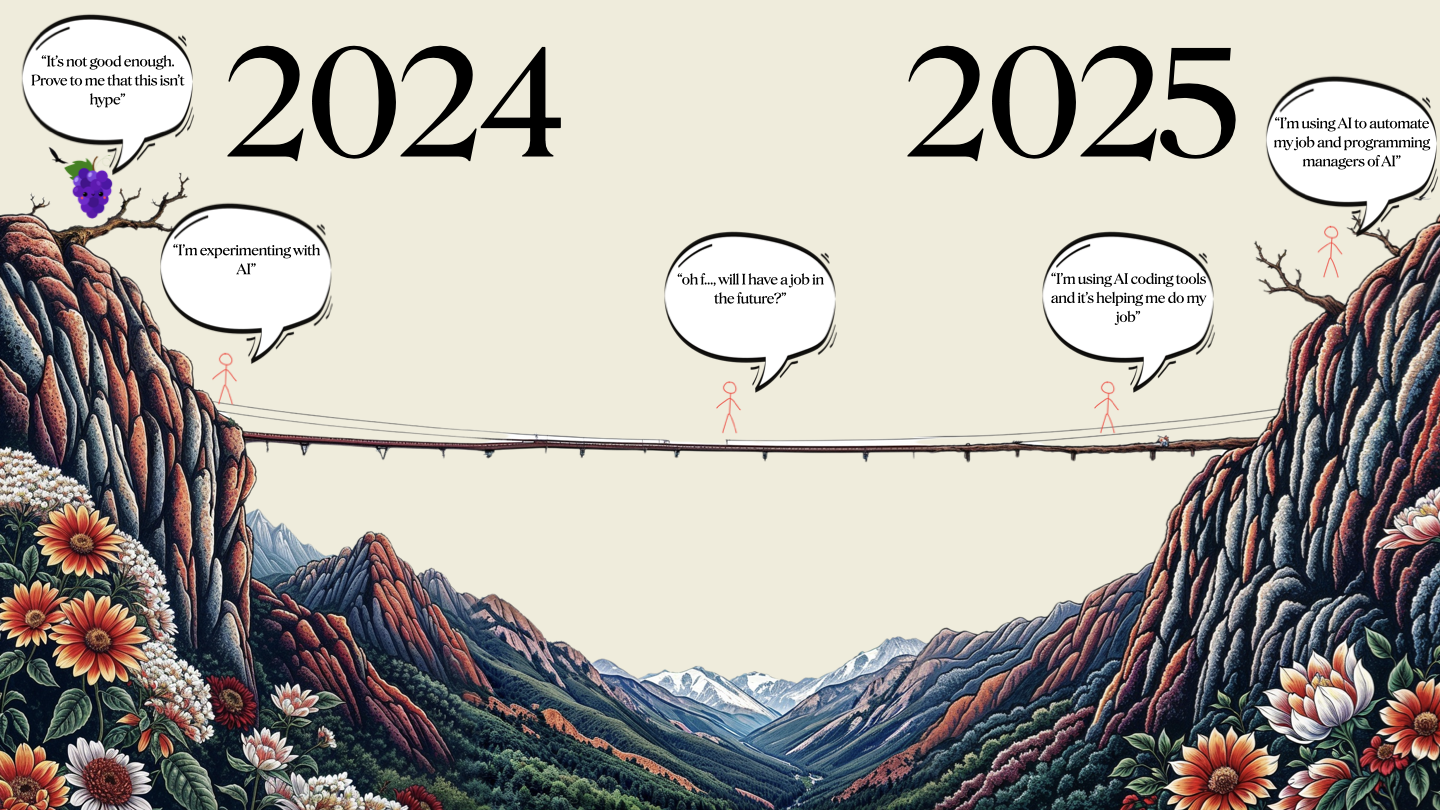

This pattern reflects what I’ve termed the “people adoption curve for AI”.

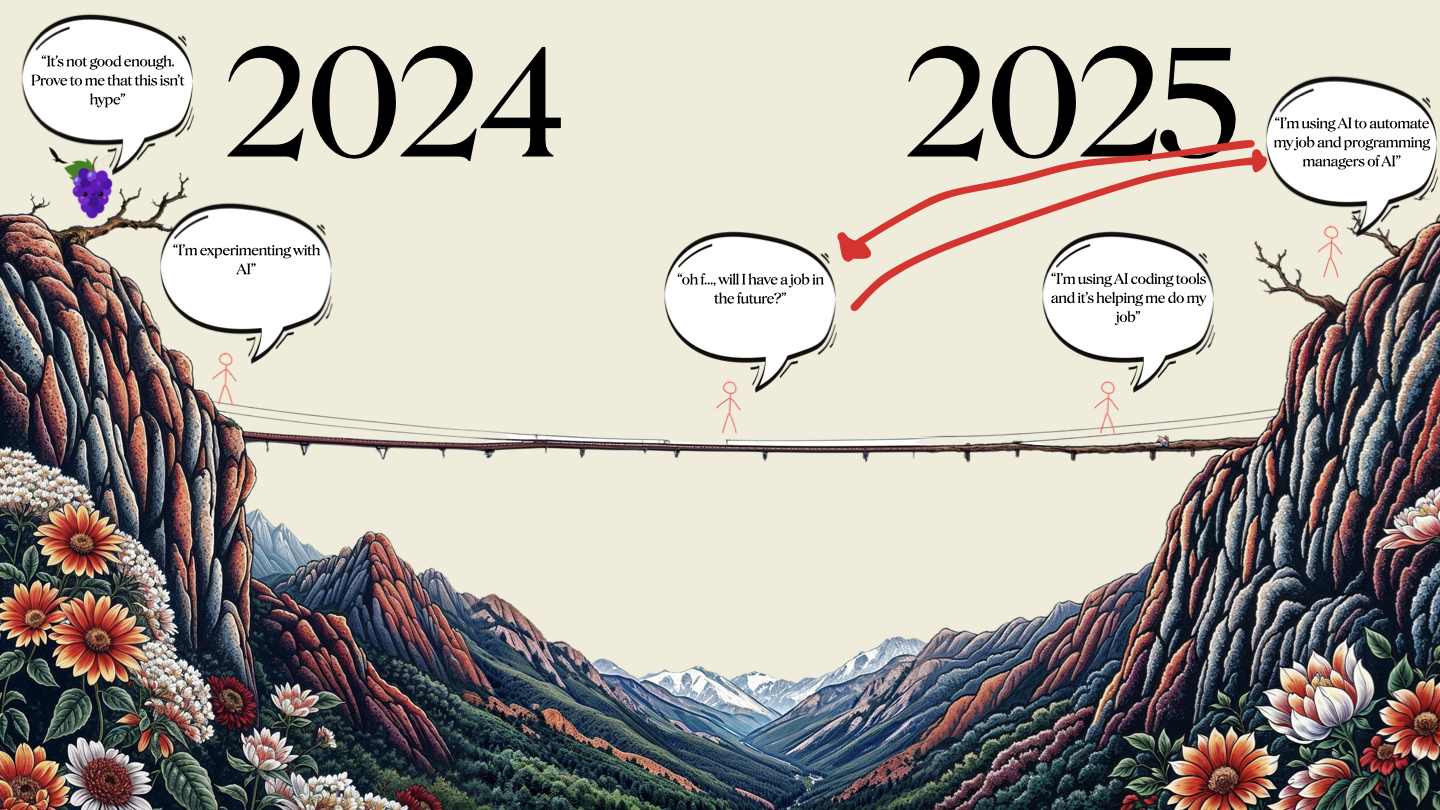

Grape’s initial stance was, “Prove it’s not hype.” Over time, employees move through stages: scepticism, experimentation, and eventually realisation. In the middle, there’s a precarious moment of doubt—“Do I still have a job?”—as the power of AI becomes clear. It’s daunting, even terrifying, to grasp what AI can do.

Yet, there’s a threshold to cross. The journey shifts from merely consuming AI to programming with it. Programming with AI will soon be a baseline expectation, moving beyond passive use to active automation of tasks. The bar for high performance is going to shift rapidly: as more people adopt these techniques and newer tools, what was once considered high performance without AI will be viewed as low performance.

What do I mean by some software devs are “ngmi”?

At “an oh fuck moment in time”, I closed off the post with the following quote. N period on from now, software engineers who haven’t adopted or started exploring software assistants, are frankly not gonna make it. Engineering organizations right now are split between employees who have had that “oh

Geoffrey Huntley

In my blog post, I concluded that AI won’t trigger mass layoffs of software developers. Instead, we’ll see natural attrition between those who invest in upskilling now and those who don’t. The displacement hinges on self-investment and awareness of these changing dynamics.

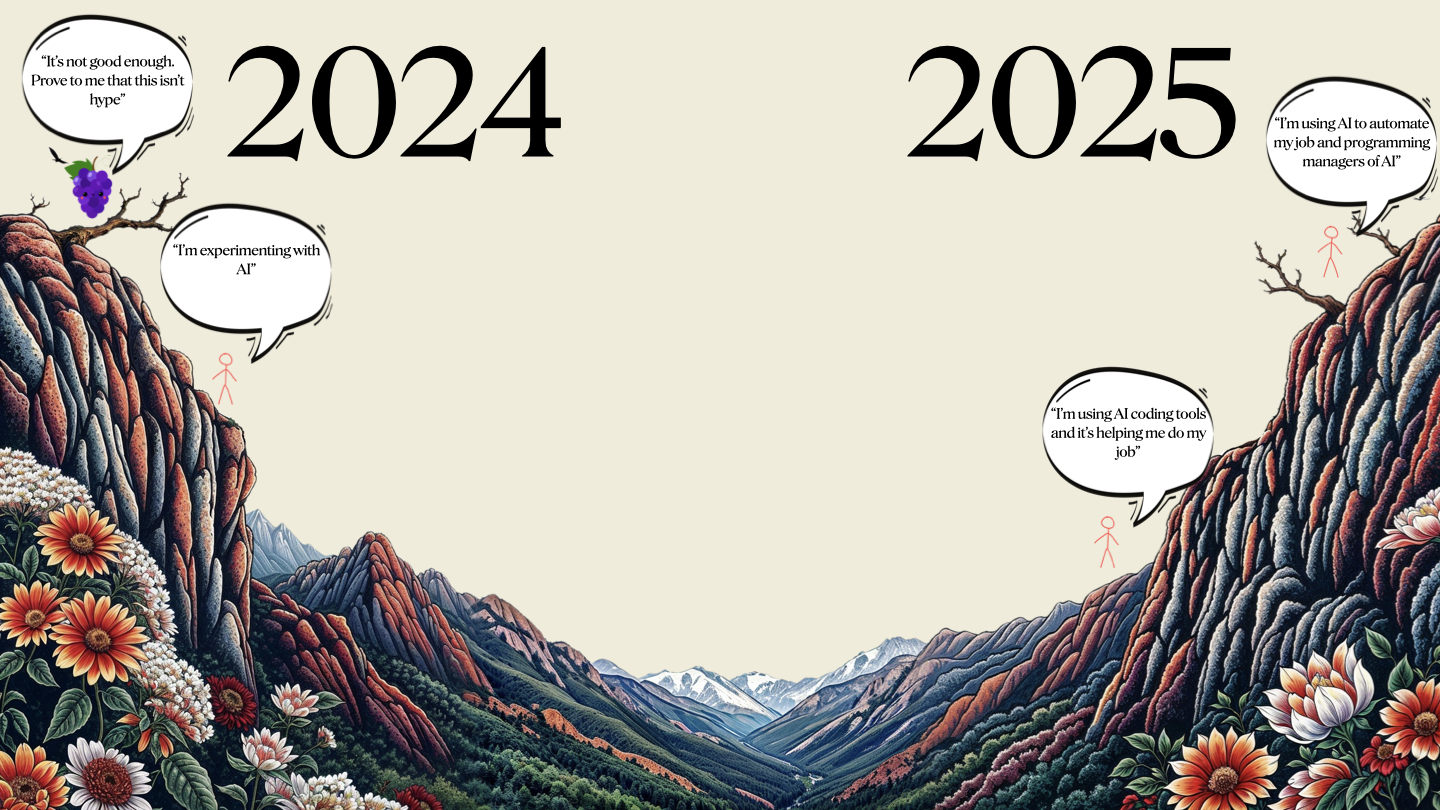

Between 2024 and 2025, a rift is emerging. The skill set that founders and companies demand is evolving rapidly.

In 2024, you could be an exceptional software engineer. But in 2025, founders are seeking AI-native engineers who leverage AI to automate job functions within their companies. Lacking that skill is akin to being a DevOps engineer in 2025 without knowledge of AWS or GCP: a critical skills gap.

For engineering leaders, it’s vital to guide teams through the emotional middle phase of AI adoption, where fear and uncertainty can paralyse progress, leaving people like deer in headlights. Building robust support mechanisms is essential.

Companies often encourage employees to “play with AI,” but this evolves into an expectation to “do more with AI.” For those who embrace AI, the rewards are significant. However, engineering leaders also face challenges: the tech industry is once again booming, creating retention issues.

You want the right people using AI effectively, but talented engineers who master AI automation may be lured elsewhere. For individuals, mastering AI is among the most valuable personal development investments you can make this year.

For those who don’t invest in themselves, the outlook is grim. When I published my blog posts and research, I recall walking to the Canva office after getting off the train, feeling like I was in The Sixth Sense. I saw “dead people”—not literally, but I was surrounded by colleagues who were unaware their roles were at risk due to displacement. This realisation drove me to write more.

Initially, I thought moving from scepticism to AI adoption was straightforward. But I discovered it’s an emotional rollercoaster. The more you realise AI’s capabilities, the more it pushes you back to that central question: “Will I have a job?” This cycle makes it critical for engineering leaders to support their teams through this transition, recognising it’s not a linear process but a complex people change management challenge.

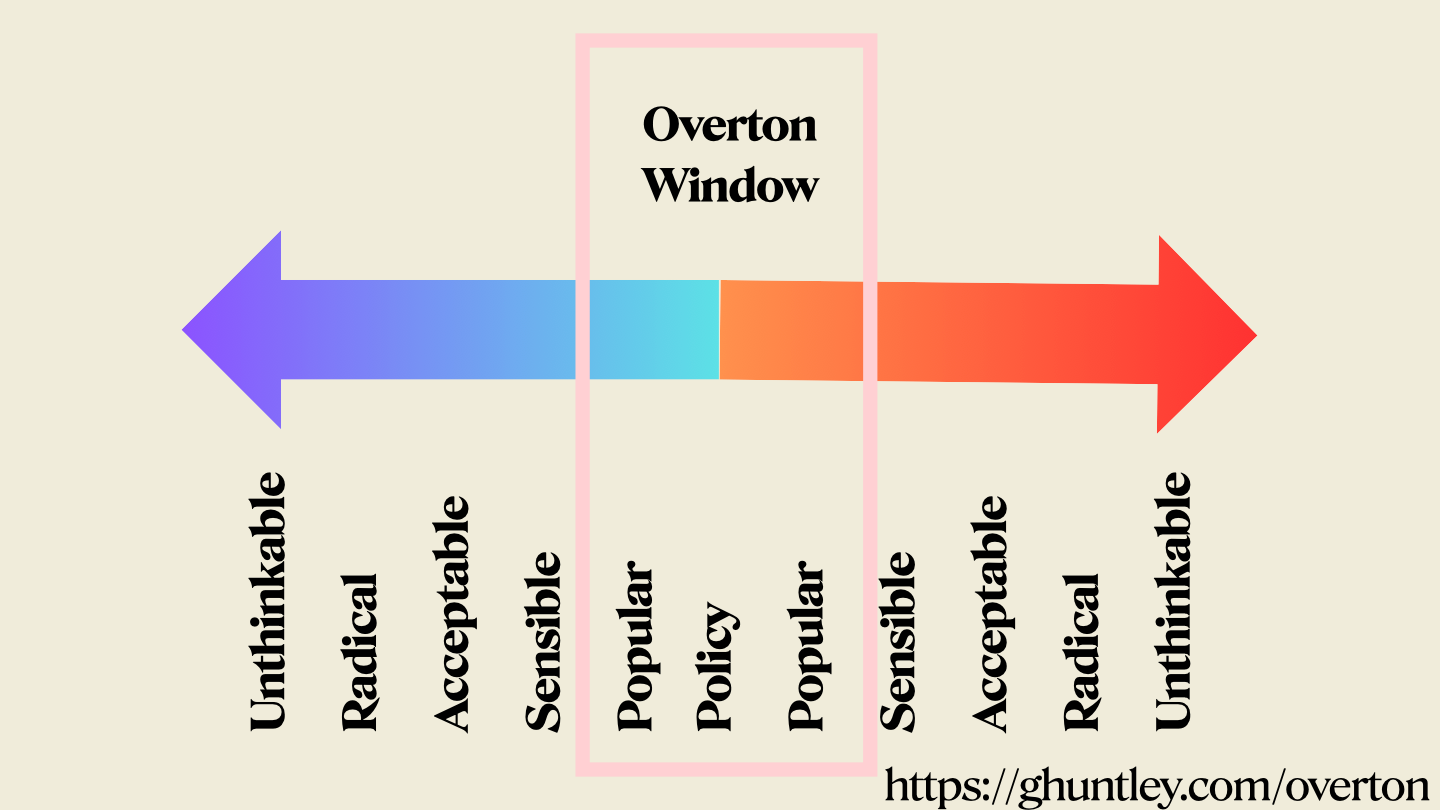

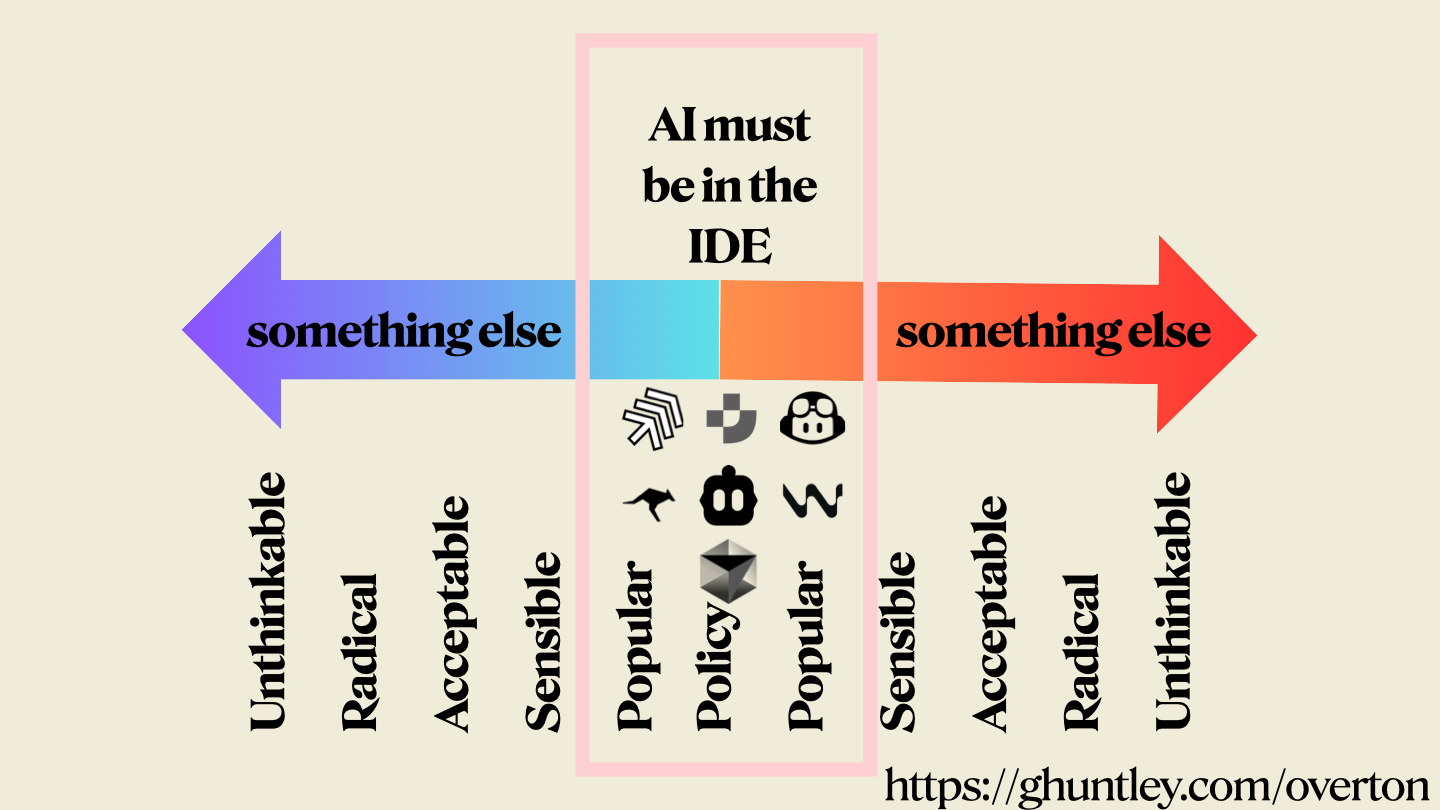

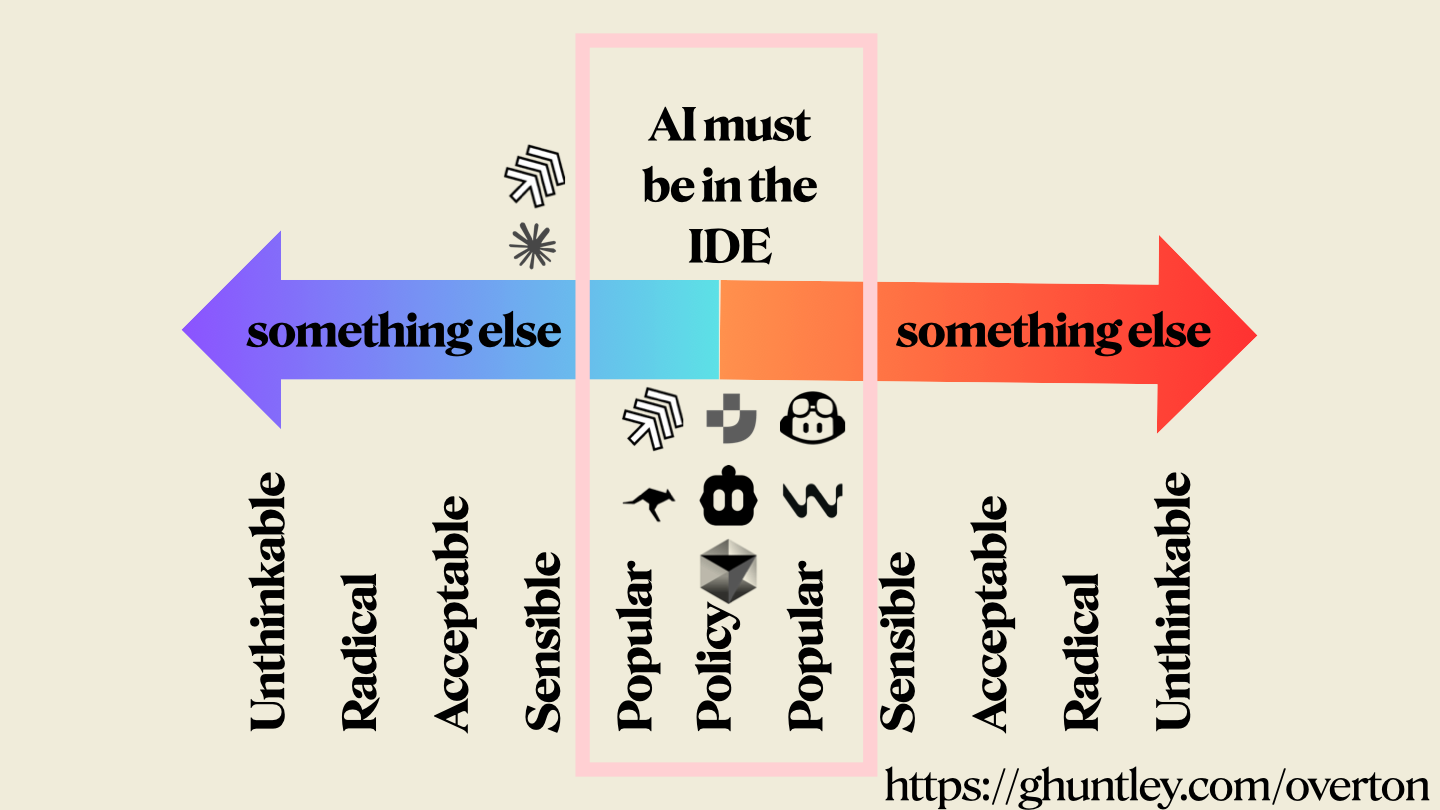

I’ve also explored the Overton window concept, traditionally used in political theory to map societal acceptance of policies. It’s equally effective for understanding disruptive innovation like AI.

Currently, vendors are embedding AI into integrated development environments (IDEs), as it’s perceived as accessible and non-threatening. Five months ago, I argued the IDE-centric approach was outdated. Last week, Anthropic echoed this, confirming the shift.

this is so validating; saw it six months back and coworkers thought I was mad. pic.twitter.com/d0vXPmgL4N

geoff (@GeoffreyHuntley), May 23, 2025

These days, I primarily use IDEs as file explorer tools. I rarely use the IDE except to craft and maintain my prompt library.

New approaches are emerging. Amp, for example, operates as both a command-line tool and a VS Code extension. We’re also seeing tools like Claude Code. The Overton window is shifting, and this space evolves rapidly. I spend considerable time contemplating what’s “unthinkable”—innovations so radical they unsettle people. Even today’s advancements can feel intimidating, raising questions about the future.

Let me show you how I approach software development now. Amp is both a command-line tool and an extension.


Here’s an example task:

“Hey, in this folder there's a bunch of images. I want you to resize them to be around about 1920px and no bigger than 500 kilobytes. Can you make it happen please?”

Most people use coding assistants like a search engine, treating them as a Google-like tool for chat-based operations. However, you can drive these tools into agentic loops for automation.

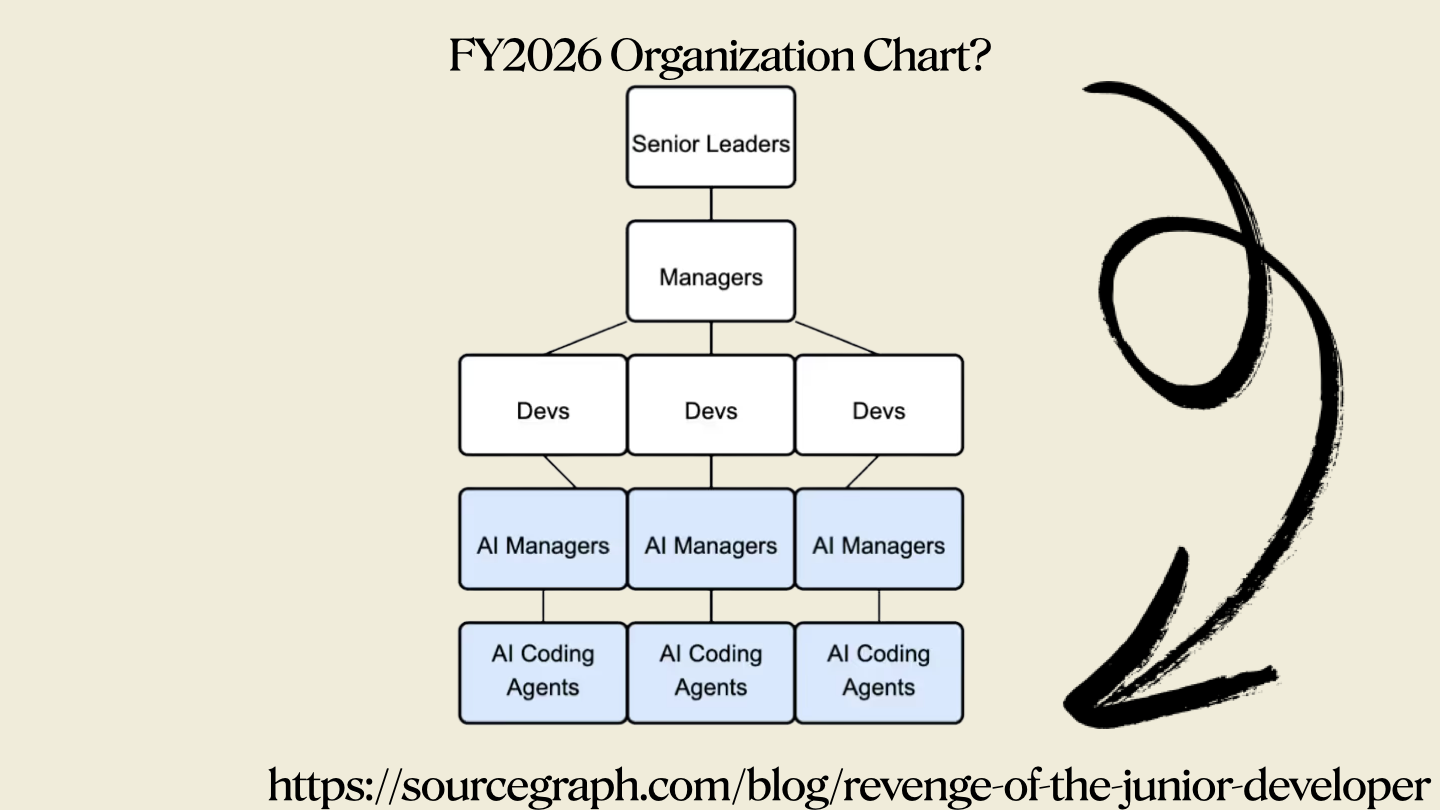

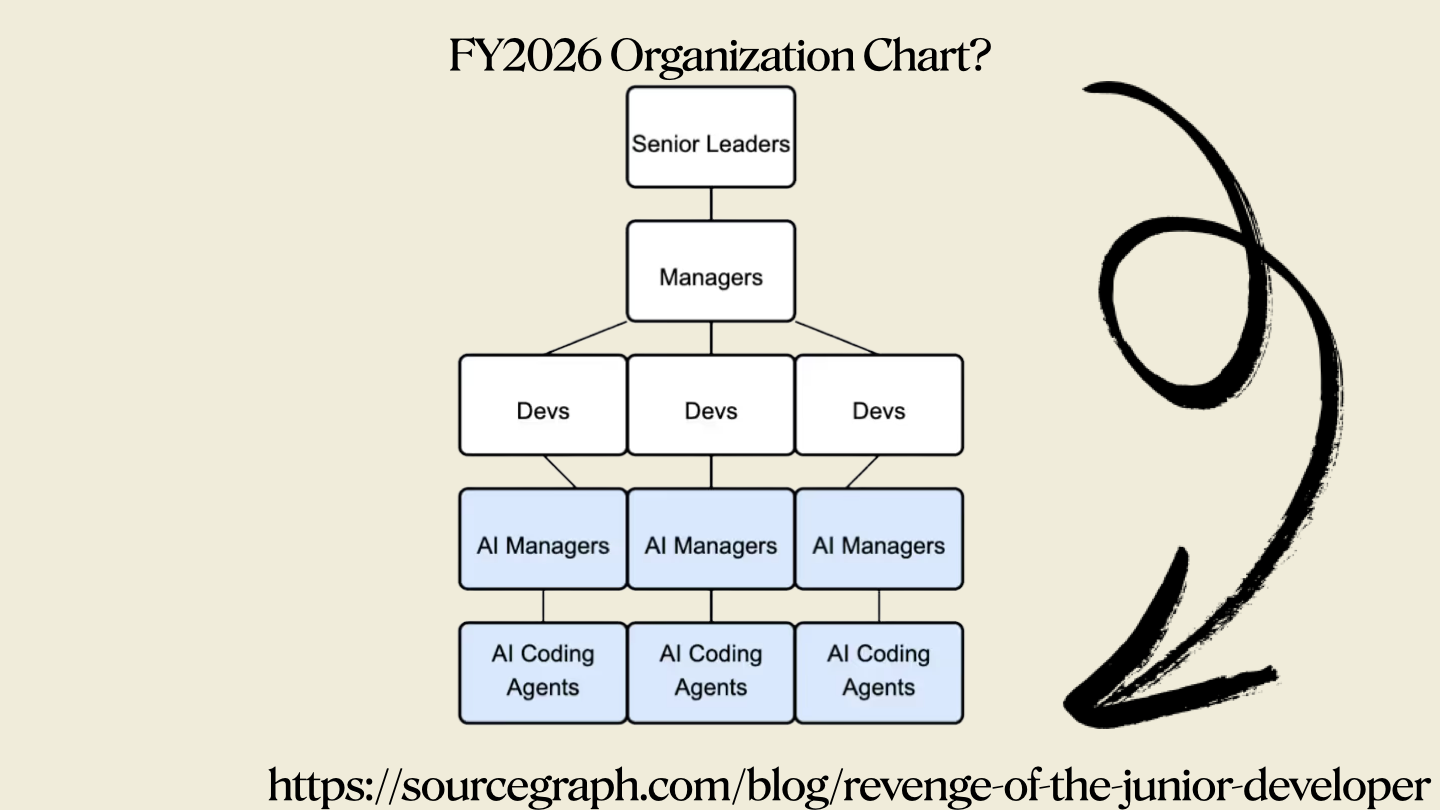

While that runs, let’s discuss something I’ve been pondering: what will future organisational charts look like? It’s hard to predict. For some companies, this shift might happen by 2026; for others, it could take 10 to 15 years. What you just saw is a baseline coding agent - a general-purpose tool capable of diverse tasks.

The concept of AI managers might sound strange, but consider tools like Cursor. When they make mistakes, you correct them, acting as a supervisor. As software developers, you can automate this correction process, creating a supervisory agent that minimises manual intervention. AI managers are now a reality, with people on social media using tools like Claude Code and Amp to automate workflows.

One of the most valuable personal development steps you can take this year is to build your own agent. It’s roughly 500 lines of code and a few key concepts. You can take the blog post below, feed it into Cursor, Amp, or GitHub Copilot, and it will generate the agent by pulling the URL and parsing the content.

How to Build an Agent

Building a fully functional, code-editing agent in less than 400 lines.

When vendors market their “new AI tools,” they’re capitalising on a lack of education. It's important to demystify the process: learn how it works under the hood so that when someone pitches an AI-powered code review tool, you’ll recognise it’s just an agent loop with a specific system prompt.
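To make "an agent loop with a specific system prompt" concrete, here is a minimal sketch in Python. It is not the code from the blog post above, nor any vendor's implementation. `fake_model` is a scripted stand-in for a real LLM API call, and the `read_file` tool, the message format, and every name here are assumptions made for illustration.

```python
import json
from typing import Callable

# Hypothetical system prompt: swapping this text is what turns the same loop
# into a "code review tool", a "migration tool", and so on.
SYSTEM_PROMPT = "You are a code reviewer. Read files with tools, then report."


def read_file(path: str) -> str:
    """Example tool: return the contents of a text file."""
    with open(path, encoding="utf-8") as handle:
        return handle.read()


DEFAULT_TOOLS: dict[str, Callable[..., str]] = {"read_file": read_file}


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for a real LLM API call (an assumption for this sketch).

    It requests one tool call, then gives a final answer once a tool result
    is present in the conversation.
    """
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "read_file",
                "args": {"path": "example.py"}}
    line_count = len(tool_results[-1]["content"].splitlines())
    return {"type": "final", "text": f"Reviewed {line_count} lines."}


def run_agent(task, model=fake_model, tools=None, max_turns=10) -> str:
    """The agent loop: call the model, run any tool it asks for, repeat."""
    tools = DEFAULT_TOOLS if tools is None else tools
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": task},
    ]
    for _ in range(max_turns):
        reply = model(messages)
        if reply["type"] == "final":
            return reply["text"]
        try:
            result = tools[reply["name"]](**reply["args"])
        except Exception as exc:  # tool failures go back to the model as text
            result = f"error: {exc}"
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        messages.append({"role": "tool", "content": result})
    return "stopped: turn limit reached"
```

Replacing `fake_model` with a call to a hosted model and adding tools for editing files and running commands is, under these assumptions, most of the distance between this sketch and a working coding agent.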

Building an agent is critical because founders will increasingly seek engineers who can create them.

This might sound far-fetched, but consider this: if I asked you to explain a linked list, you’d know it as a classic interview question, like reversing a linked list or other data structure challenges.

In 2025, interview questions are evolving to include: “What is an agent? Build me one.” Candidates will need to demonstrate the same depth of understanding as they would for a linked list reversal.

Three days ago, Canva publicly announced a restructuring of its interviewing process to prioritise AI-native candidates who can automate software development.

Yes, You Can Use AI in Our Interviews. In fact, we insist - Canva Engineering Blog

How We Redesigned Technical Interviews for the AI Era

canva.dev · Simon Newton

This trend signals a clear shift in the industry, and it’s critical to understand its implications. Experience as a software engineer today doesn’t guarantee relevance tomorrow. The dynamics of employment are changing: employees trade time and skills for money, but employers’ expectations are evolving rapidly. Some companies are adapting faster than others.

I’ve been reflecting on how large language models (LLMs) act as mirrors of operator skill. Many try AI and find it lacking, but the issue may lie in their approach. LLMs amplify the user’s expertise or lack thereof.

LLMs are mirrors of operator skill

This is a follow-up from my previous blog post: “deliberate intentional practice”. I didn’t want to get into the distinction between skilled and unskilled because people take offence to it, but AI is a matter of skill. Someone can be highly experienced as a software engineer in 2024, but that

Geoffrey Huntley

A pressing challenge for companies seeking AI-native engineers is identifying true proficiency. How do you determine if someone is skilled with AI? The answer is observation. You need to watch them work.

Traditional interviewing, with its multi-stage filtering process, is becoming obsolete. Tools now enable candidates to bypass coding challenges, such as those found on HackerRank or LeetCode. The above video features an engineer who, as a university student, utilised this tool to secure offers from major tech companies.

This raises a significant question: how can we conduct effective interviews moving forward? It’s a complex problem.

See the “LLMs are mirrors of operator skill” blog post for extended ponderoos about how to conduct interviews going forward.

I’ve been considering what a modern phone screen might look like. Each LLM is trained on different datasets, excelling in specific scenarios and underperforming in others.

For example, if you’re conducting security research, which LLM would you choose? Grok, with its lack of restrictive safeties, is ideal for red-team or offensive security work, unlike Anthropic, whose safeties limit such tasks.

For summarising documents, Gemini shines due to its large context window and reinforcement learning, delivering near-perfect results. Most people assume all LLMs are interchangeable, but that’s like saying all cars are the same. A 4x4, a hatchback, and a minivan serve different purposes. As you experiment, you uncover each model’s latent strengths.

For automating software development, Gemini is less effective. You need a model that excels at tool calls to act as a task runner, and Anthropic's models excel in this regard, particularly for incremental automation tasks.

The best way to assess an engineer’s skill is to observe them interacting with an LLM, much like watching a developer debug code via screen share. Are they methodical? Do they write tests, use print statements, or step through code effectively? These habits reveal expertise. The same applies to AI proficiency, but scaling this observation process is costly: you can’t have product engineers shadowing every candidate.

Pre-filtering gates are another challenge. I don’t have a definitive solution, but some companies are reverting to in-person interviews. The gates have been disrupted.

Another thing I've been thinking about: when someone says, “AI doesn’t work for me,” what do they mean? Are they referring to AI in the workplace, with all the concerns that come with it, or to personal experiments on greenfield projects that don't have these concerns?

This distinction matters.

Employees trade skills for employability, and failing to upskill in AI could jeopardise their future. I’m deeply concerned about this.

If a company struggles with AI adoption, that’s a solvable problem - it's now my literal job. But I worry more about employees.

There are tales from the cloud era of employees leaving companies that resisted cloud adoption so they could keep their skills competitive.

The same applies to AI. Companies that lag risk losing talent who prioritise skill relevance.

Employees should experiment with AI at home, free from corporate codebases’ constraints. There’s a beauty in AI’s potential; it’s like a musical instrument.

deliberate intentional practice

Something I’ve been wondering about for a really long time is, essentially, why do people say AI doesn’t work for them? What do they mean when they say that? From which identity are they coming from? Are they coming from the perspective of an engineer with a job title and

Geoffrey Huntley

Everyone knows what a guitar is, but mastery requires deliberate practice.

Musicians don't just pick up a guitar, experience failure, say, "Well, it got the answer wildly wrong", and then move on, assuming that will always be their experience.

The most successful AI users I know engage in intentional practice, experimenting playfully to test its limits.

What they do is play.

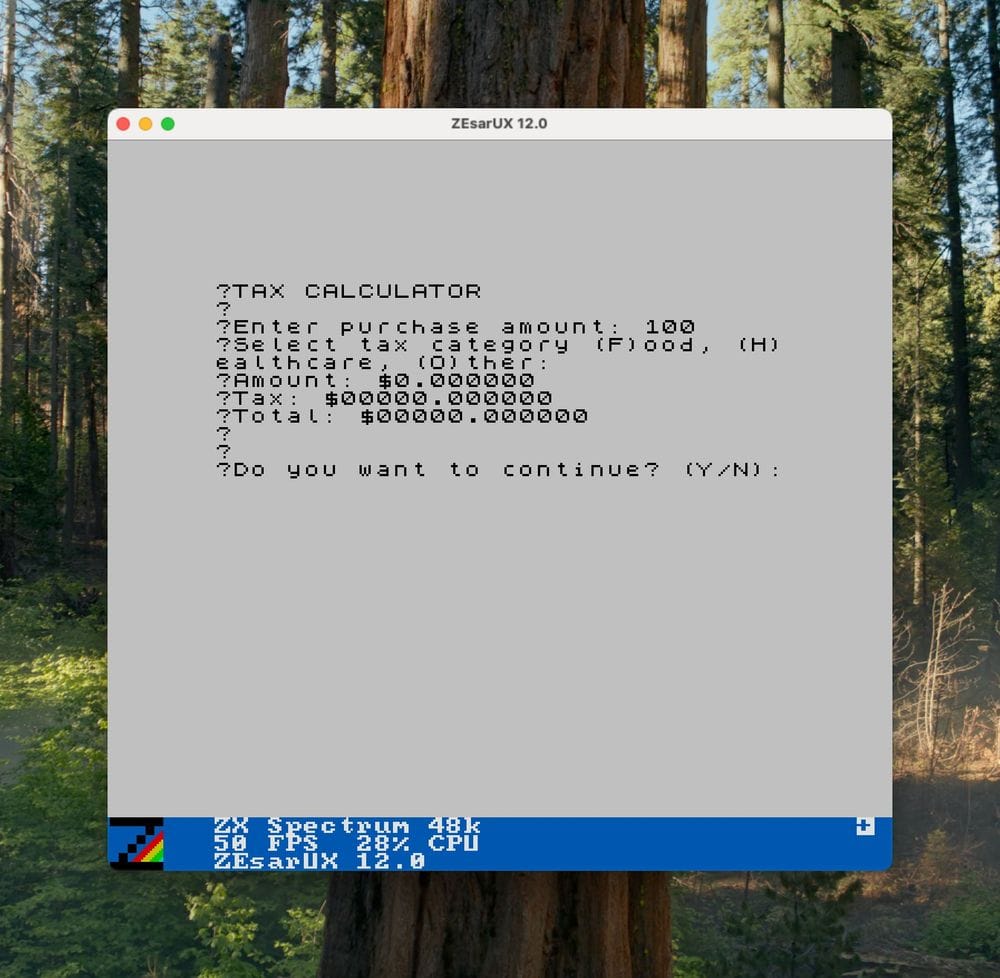

Last week, over Zoom margaritas, a friend and I reminisced about COBOL.

Curiosity led us to ask, “Can AI write COBOL?”

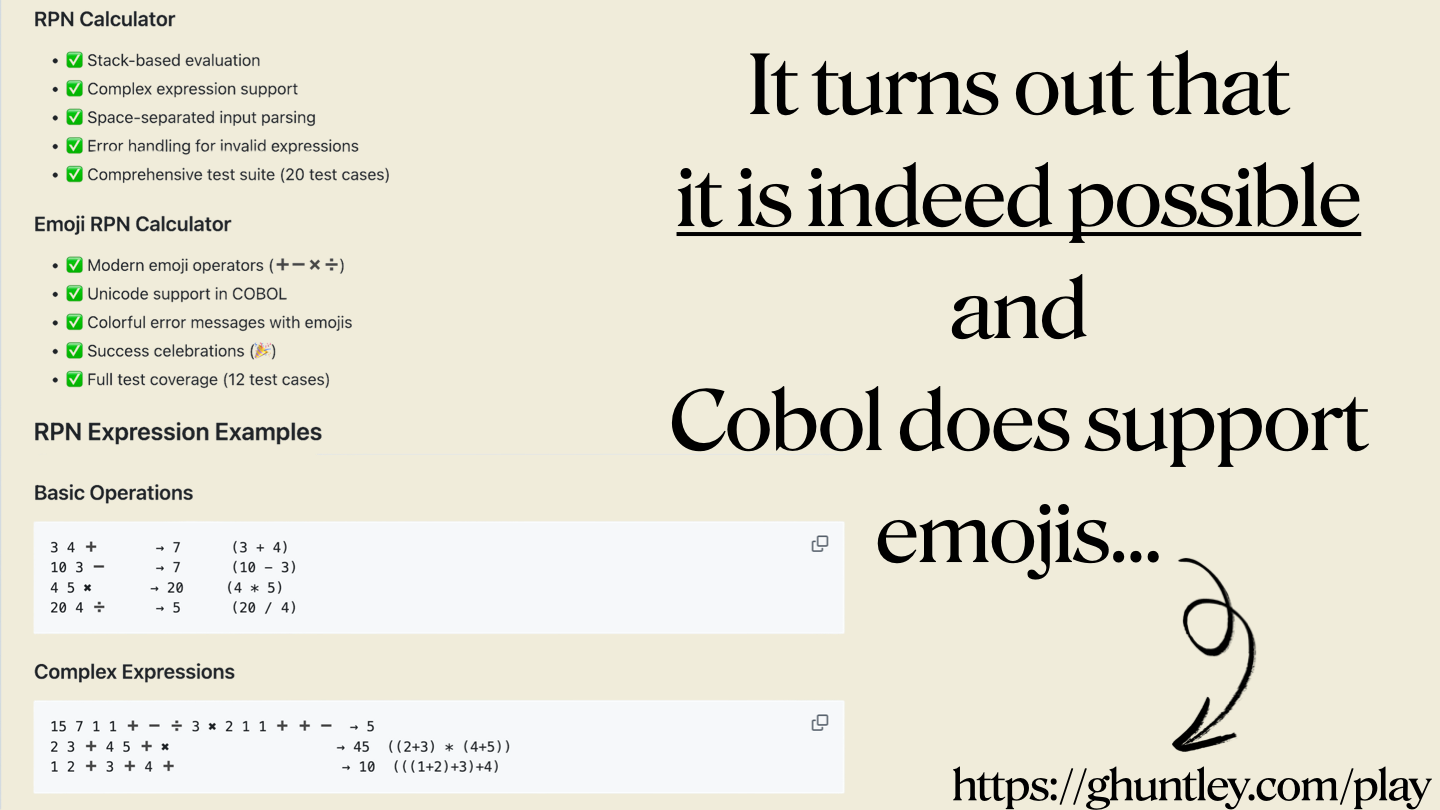

Moments later, we built a COBOL calculator using Amp.

Amazed, we pushed further: could it create a reverse Polish notation calculator?

It did.

Emboldened, we asked for unit tests - yes, COBOL has a unit test framework, and AI handled it.

At this stage, our brains were racing and we were riffing: what else can AI do?

After a few more drinks, we went absurd: let's build a reverse Polish notation calculator in COBOL using emojis as operators.

Does COBOL even support emojis?

Well, there's one way to find out...

Surprisingly, COBOL supports emojis, and we created the world’s first emoji-based COBOL calculator.

GitHub - ghuntley/cobol-emoji-rpn-calculator: A Emoji Reverse Polish Notation Calculator written in COBOL.
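For readers who haven't met reverse Polish notation, here is a small Python sketch of the same idea (the project above is written in COBOL, not Python). The emoji-to-operator mapping is hypothetical and may not match the emojis the repository uses.

```python
import operator

# Hypothetical emoji operators; the COBOL project may use different ones.
OPERATORS = {
    "\u2795": operator.add,      # heavy plus sign
    "\u2796": operator.sub,      # heavy minus sign
    "\u2716": operator.mul,      # heavy multiplication x
    "\u2797": operator.truediv,  # heavy division sign
}


def evaluate_rpn(expression: str) -> float:
    """Evaluate a whitespace-separated RPN expression with emoji operators."""
    stack: list[float] = []
    for raw in expression.split():
        # Some keyboards append a variation selector to emojis; drop it.
        token = raw.replace("\ufe0f", "")
        if token in OPERATORS:
            if len(stack) < 2:
                raise ValueError(f"operator {raw} needs two operands")
            right = stack.pop()
            left = stack.pop()
            stack.append(OPERATORS[token](left, right))
        else:
            stack.append(float(token))
    if len(stack) != 1:
        raise ValueError("malformed expression")
    return stack[0]
```

Operands are pushed onto a stack, and each operator pops the top two values and pushes the result, so `3 4 ➕ 2 ✖` means (3 + 4) × 2.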


Last night at the speakers’ dinner, fonts were discussed, and the topic of Comic Sans came up. In the spirit of play, I prompted AI to build a Chrome extension called “Piss Off All Designers,” which toggles all webpage fonts to Comic Sans. It turns out AI does browser extensions very, very well...

GitHub - ghuntley/piss-off-all-designers-in-the-world: 💀🔥 OBLITERATE THE TYPOGRAPHY ESTABLISHMENT 💀🔥 A Chrome extension that transforms any website into a beautiful Comic Sans masterpiece


Sceptics might call these toy projects, but AI scales. I’ve run four headless agents that automated software development, cloning products such as Tailscale, HashiCorp Nomad, and Infisical. These are autonomous loops, driven by learned techniques, that operate while I sleep.

Another project I’m exploring is an AI-built compiler for a new programming language, which is now at the stage of implementing PostgreSQL and MySQL adapters. Remarkably, it’s programming a new language with no prior training data. By feeding it a lookup table and lexical structure (e.g., Go-like syntax but with custom keywords), it generates functional code. It’s astonishing.

To achieve such outcomes, I built an AI supervisor to programmatically correct errors, enabling headless automation.

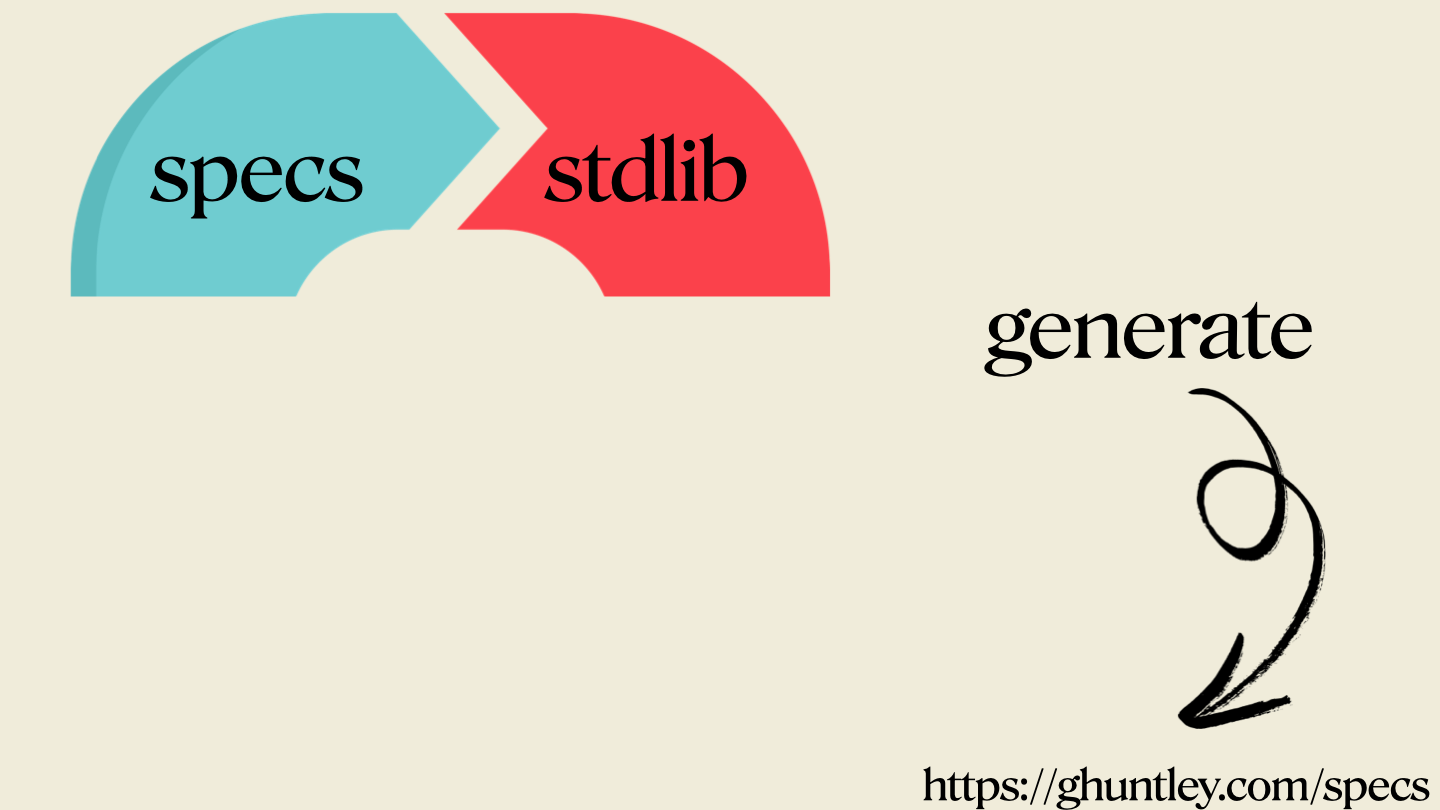

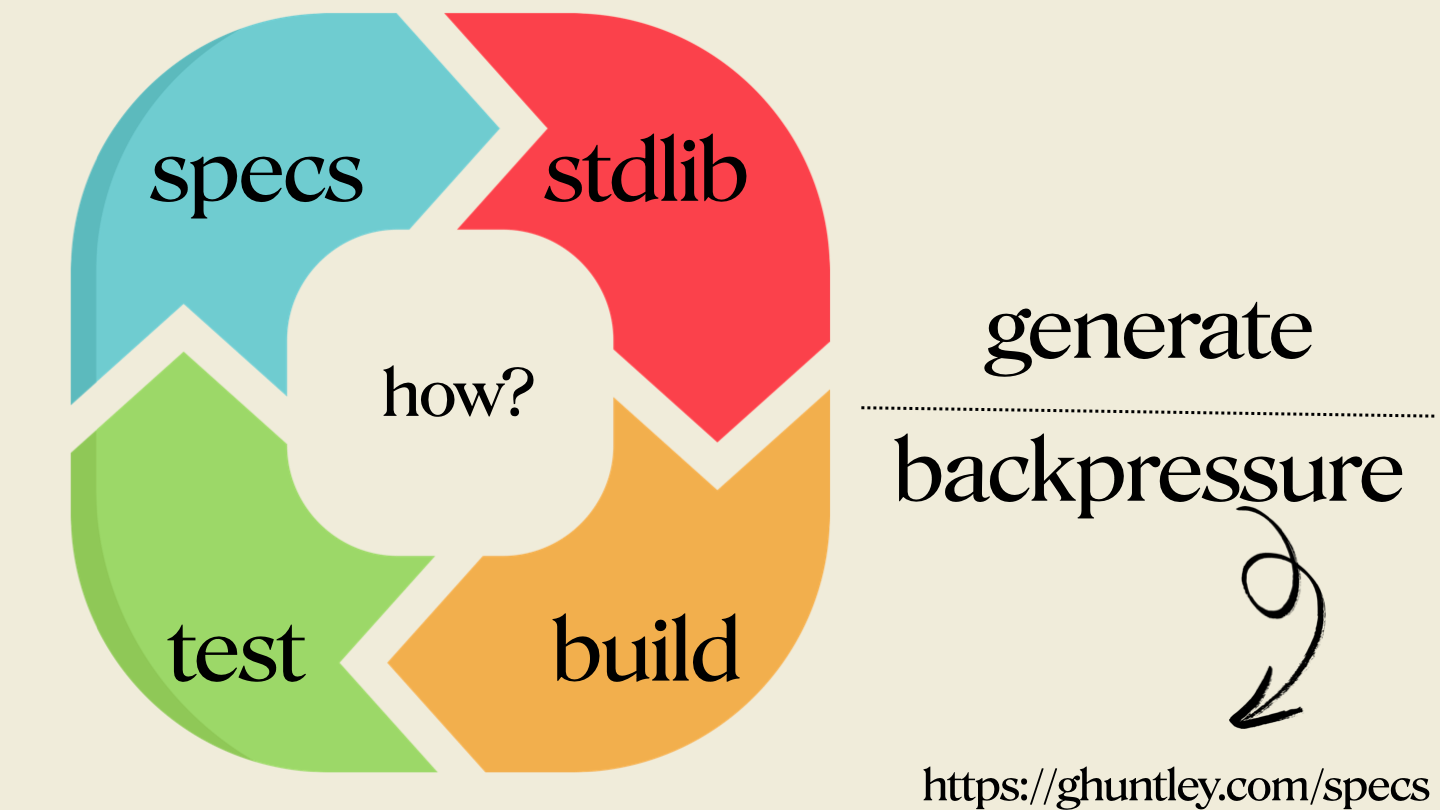

For the compiler, I didn’t just prompt and code. I held a dialogue: “I’m building a Go-like language with Gen Z slang keywords. Don’t implement yet. What’s your approach for the lexer and parser?” This conversation built up the context window, which was then used to generate product requirement documents (PRDs). This is the "/specs" technique found below.

From Design doc to code: the Groundhog AI coding assistant (and new Cursor vibecoding meta)

Ello everyone, in the “Yes, Claude Code can decompile itself. Here’s the source code” blog post, I teased about a new meta when using Cursor. This post is a follow-up to the post below. You are using Cursor AI incorrectly...I’m hesitant to give this advice away for free,

Geoffrey Huntley

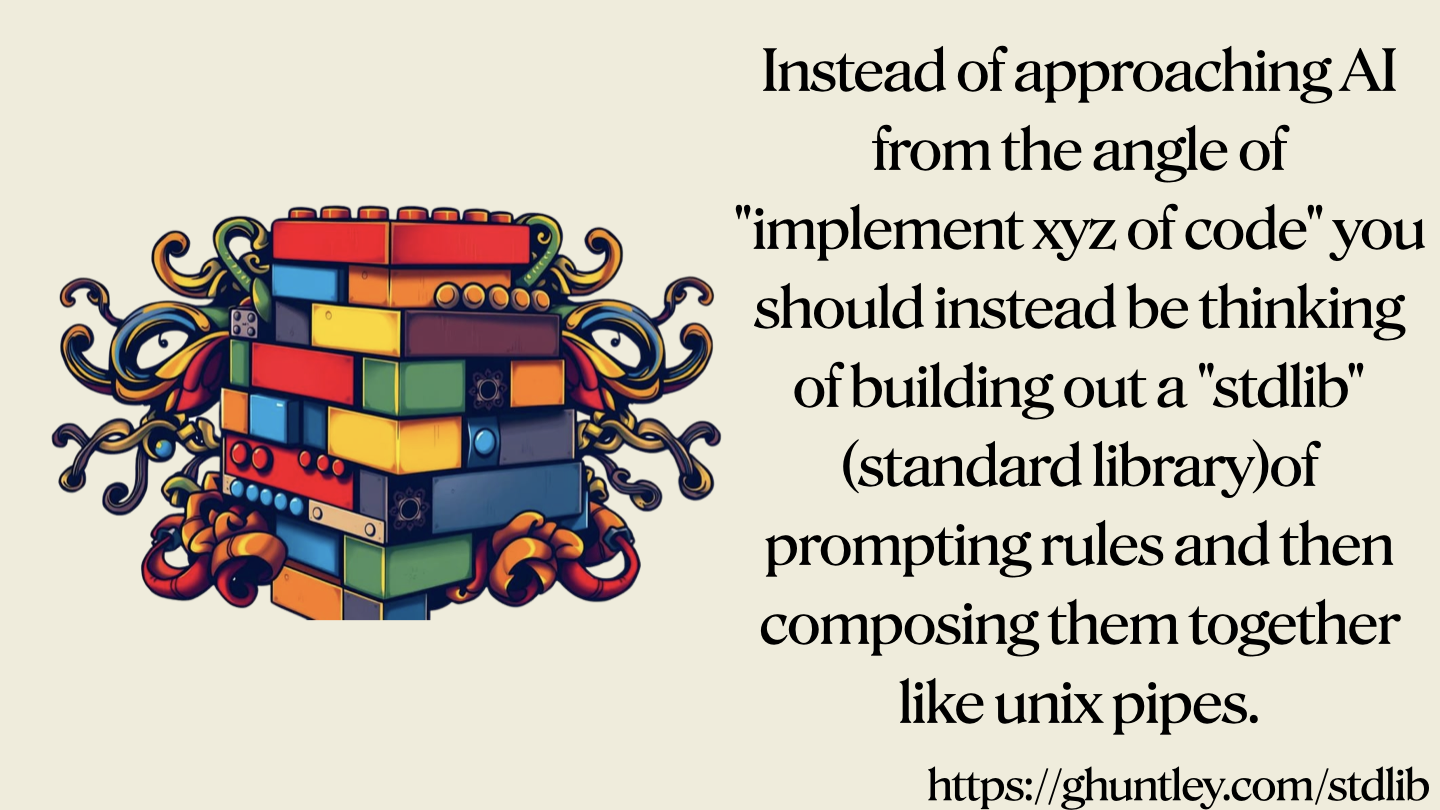

Another key practice is maintaining a “standard library” of prompts. Amp is built using Svelte 5, but Claude keeps suggesting Svelte 4. To resolve this, we created a prompt that enforces Svelte 5. LLMs can be programmed for consistent outcomes.
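One way to picture a prompt "standard library" is as a set of named rules that get attached to a task whenever they apply. The Python sketch below is an illustration only: the `Rule` structure, the glob matching, and the rule wording are assumptions, not the actual prompts used for Amp or the rule format of any particular tool.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Rule:
    name: str   # short identifier for the rule
    glob: str   # which file paths the rule applies to
    text: str   # the instruction injected into the prompt


# Hypothetical library entries, written for this example.
PROMPT_LIBRARY = [
    Rule("svelte5", "*.svelte",
         "This project uses Svelte 5. Do not suggest Svelte 4 syntax."),
    Rule("tests", "*",
         "After every change, run the build and the tests."),
]


def build_prompt(task: str, path: str, library=PROMPT_LIBRARY) -> str:
    """Prepend every rule whose glob matches the file being worked on."""
    rules = [rule for rule in library if fnmatchcase(path, rule.glob)]
    header = "\n".join(f"- [{rule.name}] {rule.text}" for rule in rules)
    return f"Rules:\n{header}\n\nFile: {path}\nTask: {task}"
```

Because the rules live in one place, the same correction is applied on every request instead of being retyped whenever the model drifts back to old habits.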

You are using Cursor AI incorrectly...

🗞️I recently shipped a follow-up blog post to this one; this post remains true. You’ll need to know this to be able to drive the N-factor of weeks of co-worker output in hours technique as detailed at https://ghuntley.com/specs I’m hesitant to give this advice away for free,

Geoffrey Huntley

Another concept is backpressure, akin to build or test results. A failing build or test applies pressure to the generative loop, refining outputs. Companies with robust test coverage will adopt AI more easily, as tests provide backpressure for tasks like code migrations (e.g., .NET upgrades).
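A rough sketch of backpressure in Python, under stated assumptions: `scripted_generator` stands in for a model, and `run_checks` stands in for a real build and test run (in practice you would shell out to your own build tool). All names are hypothetical.

```python
from typing import Callable, Optional


def run_checks(source: str) -> Optional[str]:
    """Return None when the candidate passes, else the failure text.

    The failure text is the backpressure that gets fed into the next attempt.
    """
    namespace: dict = {}
    try:
        exec(source, namespace)            # stand-in for the build step
        result = namespace["add"](2, 3)    # stand-in for the test suite
    except Exception as exc:
        return f"build failed: {type(exc).__name__}: {exc}"
    if result != 5:
        return f"test failed: add(2, 3) returned {result}, expected 5"
    return None


def scripted_generator(feedback: Optional[str]) -> str:
    """Stand-in for an LLM: wrong on the first try, right once it sees feedback."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def generate_with_backpressure(
    generate: Callable[[Optional[str]], str],
    check: Callable[[str], Optional[str]],
    max_attempts: int = 5,
) -> tuple[str, list[Optional[str]]]:
    """Loop until a candidate passes, feeding each failure back to the generator."""
    feedback: Optional[str] = None
    history: list[Optional[str]] = []
    for _ in range(max_attempts):
        candidate = generate(feedback)
        feedback = check(candidate)
        history.append(feedback)
        if feedback is None:
            return candidate, history
    raise RuntimeError(f"no passing candidate after {max_attempts} attempts")
```

The stronger the checks, the more useful the feedback, which is one way to read the point about test coverage making AI adoption easier.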

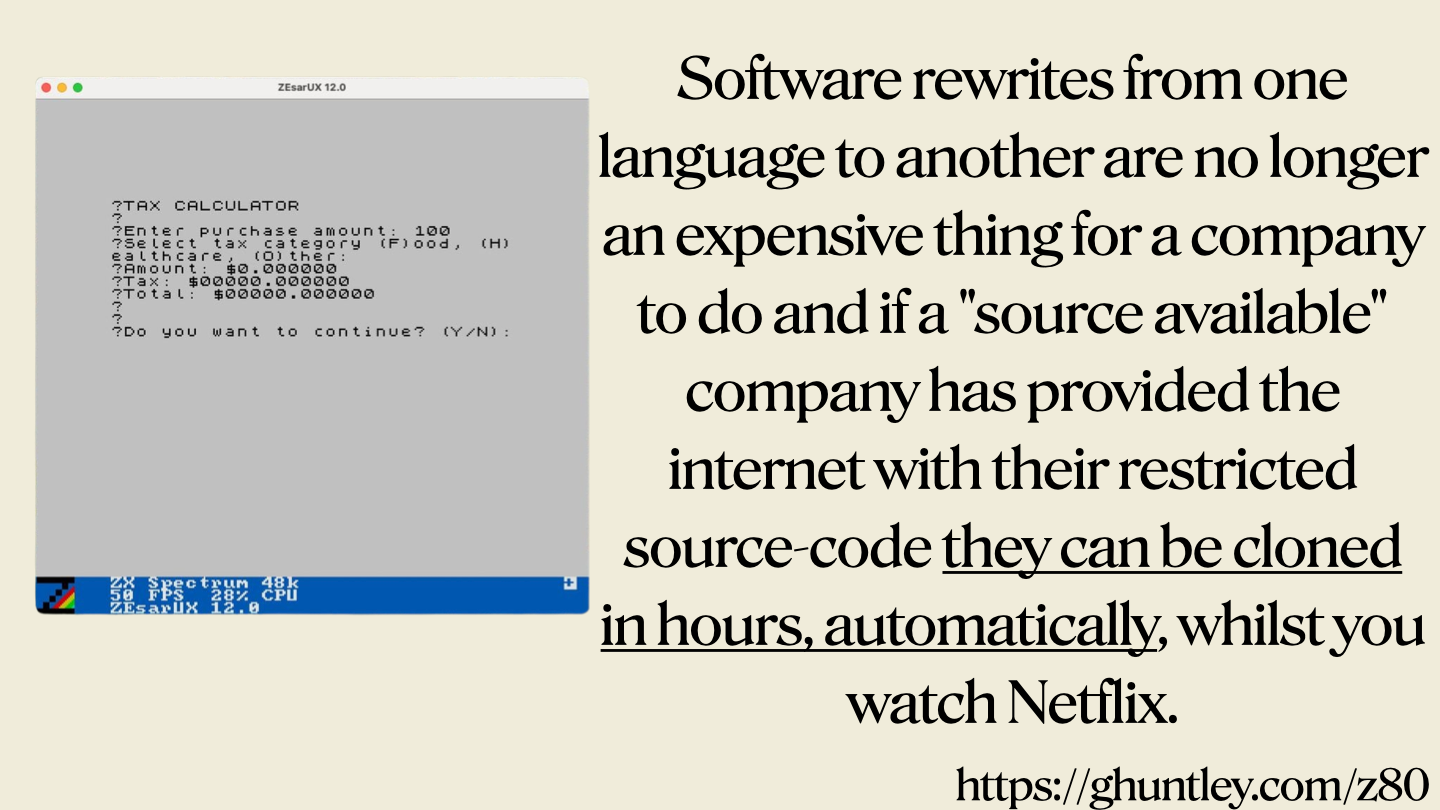

AI has some concerning implications for business owners, as AI can act like a “Bitcoin mixer” for intellectual property. Feed it source code or product documentation, generate a spec, and you can clone a company’s functionality. For a company like Tailscale, which recently raised $130 million, what happens if key engineers leave and use these loops to replicate its tech? This raises profound questions for business dynamics and society when a new competitor can operate more efficiently or enter the market with different unit economics.

Can a LLM convert C, to ASM to specs and then to a working Z/80 Speccy tape? Yes.

✨Daniel Joyce used the techniques described in this post to port ls to rust via an objdump. You can see the code here: https://github.com/DanielJoyce/ls-rs. Keen, to see more examples - get in contact if you ship something! Damien Guard nerd sniped me and other folks wanted

To optimise LLM outcomes, one should avoid endless chat sessions (e.g., tweaking a button’s colour, then requesting a backend controller). If the LLM veers off track, start a new context window. Context windows are like memory allocation in C—you can’t deallocate without starting fresh.

However, a recent advancement called subagents, introduced four days ago, works like async futures and effectively allows for garbage collection. Instead of overloading a 154,000-token context window, you can spawn subagents in separate futures, enhancing efficiency. We have gone from manually allocating memory using C to the JVM era seemingly overnight...
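One way to picture the analogy is with ordinary futures: each subagent does its noisy work in a throwaway context, and the parent keeps only a short result. This is a sketch of my reading of the analogy, not how any specific coding agent implements subagents; `run_subagent` and `run_parent` are hypothetical names, and the "tool output" entries are placeholders.

```python
from concurrent.futures import ThreadPoolExecutor


def run_subagent(task: str) -> str:
    """Hypothetical subagent: works in its own throwaway context, returns only a summary."""
    context = [f"task: {task}"]
    # Stand-in for many tool calls that would otherwise fill the parent's window.
    context += [f"tool output {i} for {task}" for i in range(100)]
    return f"done: {task} ({len(context)} context entries discarded)"


def run_parent(tasks: list[str]) -> list[str]:
    """Parent keeps one summary line per subagent instead of every intermediate step."""
    parent_context: list[str] = []
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = [pool.submit(run_subagent, task) for task in tasks]
        for future in futures:
            parent_context.append(future.result())
    return parent_context
```

Here the subagent's local `context` list goes out of scope when the function returns, which is the "garbage collection" part: the parent's window grows by one line per task rather than by every intermediate step.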

I dream about AI subagents; they whisper to me while I’m asleep

In a previous post, I shared about “real context window” sizes and “advertised context window sizes”. Claude 3.7’s advertised context window is 200k, but I’ve noticed that the quality of output clips at the 147k-152k mark. Regardless of which agent is used, when clipping occurs, tool call to

Some closing thoughts...

Removing waste from processes within your company will accelerate progress more than AI adoption alone. As engineering teams adopt these tools, the tools will hold up a mirror to the waste within an organisation. Because generating code is no longer the bottleneck, other bottlenecks will appear within your organisation.

A permissive culture is equally critical. You know the old saying that ideas are worthless and execution is everything? Well, that has been invalidated. Ideas are now execution: spoken prompts can create immediate results.

Stories no longer start at zero per cent; they begin at 50–70% completion, with engineers filling in the gaps.

Tools like Jira, however, may become obsolete. At Canva, my team adopted a spec-based workflow for AI tools, which required clear boundaries (e.g., “you handle backend, I’ll do AI”) because AI can complete tasks so quickly. Thinly sliced work allocations cause overlap, as AI can produce weeks’ worth of output rapidly.

Traditional software has been built in small increments or pillars of trust, but now, with AI-generated code, that's inverted. With the compiler, verification is simple—it either compiles or doesn’t. But for complex systems, “vibe coding” (shipping unverified AI output) is reckless. Figuring out how to create trust at scale is an unsolved problem for now.

AI erases traditional developer identities—backend, frontend, Ruby, or Node.js. Anyone can now perform these roles, creating emotional challenges for specialists with decades of experience.

Engineers must maintain accountability, explaining outcomes as they would with traditional code. Creating software is no longer enough. Engineers now must automate the creation of software.

Libraries and open source are also in question. AI can generate code, bypassing the need to fix bugs or nag maintainers. This shift challenges the role of open-source ecosystems. I've found myself using less open source these days, and when I speak with people around me who understand these tools, they're noticing the same trend.

Finally, all AI vendors, including us, are selling the same 500 lines of code in a while True loop. I encourage you to build your own agent; it’s critical.

How to Build an Agent

Building a fully functional, code-editing agent in less than 400 lines.
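To make the "loop" concrete, here is a deliberately tiny sketch of the shape such an agent takes: ask the model, run whatever tool it requests, append the result, and repeat until it gives a final answer. Everything here is an assumption for illustration: `TOOLS`, `agent_loop`, the message format, and `fake_model` (a stub in place of a real LLM API) are hypothetical and not taken from the linked post.

```python
import json
from typing import Callable

# Hypothetical tool registry; a real agent would expose file reads, edits, shell commands, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "upper": lambda text: text.upper(),
}


def agent_loop(model: Callable[[list[dict]], dict], user_input: str) -> str:
    """Loop: ask the model, run any tool it requests, append the result, repeat."""
    messages = [{"role": "user", "content": user_input}]
    while True:
        reply = model(messages)
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        if reply["type"] == "final":
            return reply["text"]
        # Otherwise the model asked for a tool call; run it and feed the result back.
        result = TOOLS[reply["tool"]](reply["input"])
        messages.append({"role": "tool", "content": result})


def fake_model(messages: list[dict]) -> dict:
    """Stub standing in for an LLM API: one tool call, then a final answer."""
    if messages[-1]["role"] == "tool":
        return {"type": "final", "text": f"Result: {messages[-1]['content']}"}
    return {"type": "tool_call", "tool": "upper", "input": messages[0]["content"]}
```

Swapping `fake_model` for a real model client and adding real tools to `TOOLS` is where the remaining few hundred lines go; the control flow itself stays this small.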

This is a perilous year to be complacent, especially at high-performance companies. These changes won’t impact everyone simultaneously, but at some firms, they’re unfolding rapidly.

Please experiment with these techniques, test them, and share your results. I’m still grappling with what’s real, but I’m pushing boundaries and seeing impossible outcomes materialise. It’s surreal.

Please go forward and do things...
