If Broadcom says that co-packaged optics is ready for prime time and can compete with other ways of linking switch ASICs to fiber optic cables, then it is very unlikely that Broadcom is wrong.

The company that is the modern Broadcom has a long and deep – and acquired – expertise in optical communications. The lineage runs on one branch from the original Hewlett Packard to its Agilent Technologies spinoff, which a bunch of private equity companies acquired a chunk of and called Avago Technologies. Another line of optical expertise comes out of AT&T Bell Labs through the Lucent Technologies spinout in 1996, which runs through Agere Systems, which was bought by LSI Logic and which was in turn acquired by Avago. Avago, of course, bought Broadcom for its switch ASIC and other chip businesses in 2016 for $37 billion, which seemed like an incredibly high number at the time but which turns out to be prophetic in the age of AI.

There have been plenty of naysayers about CPO in the datacenter, but for many years now Broadcom’s techies have told us that using CPO inside of switches will not only improve the reliability of switches and reduce power consumption in the datacenter network, but will also lower costs. (Eventually, CPO or an optical interposer or something will be added to compute engines of all kinds for the same reasons, and when that happens, we will get much more malleable racks and stop pushing for rack density for the sake of latency.)

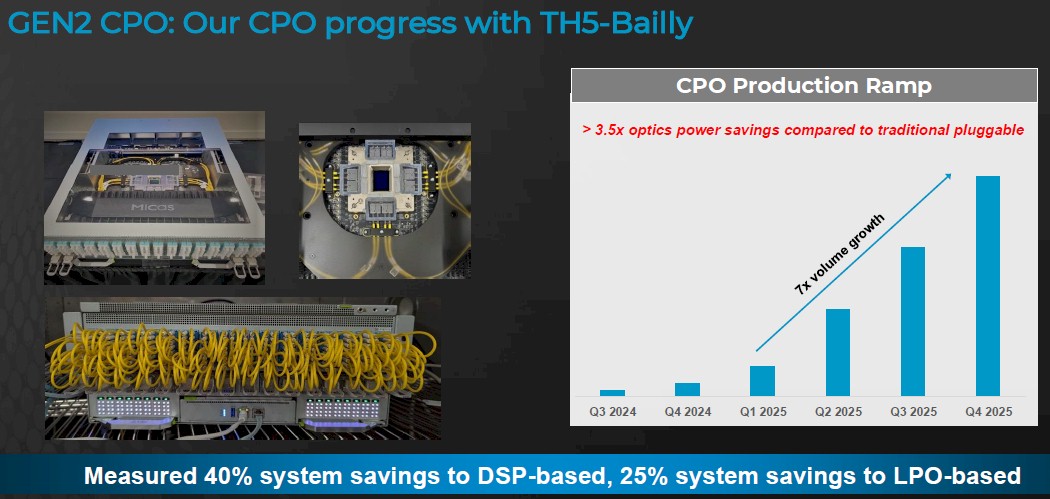

These CPO claims for the past several years were tough for many to swallow. But, as Broadcom readies its third generation of CPO add-ons for its Tomahawk line of Ethernet switch ASICs, the data is in from a real-world, large-scale deployment of Broadcom’s second generation CPO technology, and the results are clear. We will get to the testing that Meta Platforms has done using switches based on Broadcom’s “Bailly” Tomahawk 5 CPO switch ASIC in a moment; the paper is not yet public, but here is the link for it. We have a copy of the paper as well as summary data that we can present courtesy of Broadcom. Right now, let’s look at the CPO switch ASIC roadmap from Broadcom and what it means, how we can interpret the data on the Bailly ASIC, and why the future “Davisson” Tomahawk 6 CPO device will be even better and that much more ready for the mainstream.

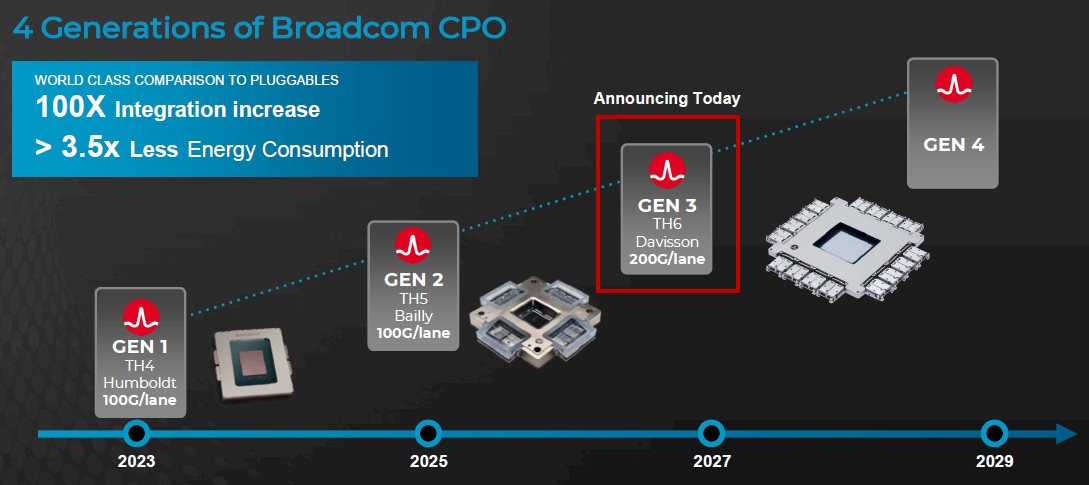

For a lot of complicated reasons, we never did get around to writing about the first generation “Humboldt” Tomahawk 4 CPO switch chips, which were announced in January 2021 and which were deployed as a development platform by Chinese hyperscaler Tencent. (Broadcom names its CPO switch ASICs after craters on the Moon, which are in turn named after famous people on Earth throughout history as well as various Latin and English names having to do with lunar features.)

The Tomahawk 4 CPO ASIC had 25.6 Tb/sec of aggregate switching capacity, like the plain vanilla TH4 on which it is based, and had four 3.2 Tb/sec optical engines for 12.8 Tb/sec of bandwidth (which could be allocated as 400 Gb/sec or 800 Gb/sec ports) as well as 12.8 Tb/sec of electrical lanes that could be allocated the same way. Importantly, an 800 Gb/sec port on the CPO consumed about 6.4 watts, compared to somewhere around 16 watts to 18 watts for regular pluggable optics running on the same Tomahawk 4 switch without CPO. The Humboldt ASIC had remote laser modules, but if something went wrong with the lasers or the optics, you had to replace the entire switch.

It would be great to see the port counts in the chart above, not just the change.

With the second generation Bailly CPO switch ASICs, which are based on the 51.2 Tb/sec Tomahawk 5 chip and which started shipping to a few hyperscalers in 2023, Broadcom put eight 6.4 Tb/sec optical engines on the devices and no electrical connections at all. The SerDes ran at 100 Gb/sec per lane, and an 800 Gb/sec port (meaning eight lanes) took 5.5 watts, which was a 14.1 percent reduction in power compared to a Humboldt port and that much further below the 16 watts to 18 watts burned by pluggable optics. The Bailly CPO switch chip only did optical links and saw some adoption by a couple of hyperscalers (we know of Meta Platforms and presume Tencent did as well). The Bailly design had detachable lasers, which meant they could be field replaceable, easing the minds of many potential switch buyers who are understandably nervous about giant shared laser sources that might fail in the field.

The good news – sort of – is that in a massively parallel AI cluster, if one pluggable optics module fails, then the whole job stops anyway. So a failed pluggable optics module is as bad as a failed laser that kills an entire switch in this regard. (This is what happens when a workload spans all accelerators and all switches.)

That brings us to next year’s Davisson TH6 CPO device, which Broadcom is now shipping to early access customers. As the name suggests, the Davisson switch chip is based on the Tomahawk 6 ASIC that we told you about back in June. The CPO version of TH6 uses the variant that has four SerDes chiplets wrapped around the packet processing engines; these SerDes have native 100 Gb/sec signaling plus PAM4 modulation, which carries two bits per symbol for an effective 200 Gb/sec of bandwidth per lane. (There is another version of TH6 that has 50 Gb/sec native signaling with PAM4 modulation that delivers 100 Gb/sec of effective bandwidth per lane, like TH5 does.) On a 102.4 Tb/sec ASIC, you can get 64 ports running at 1.6 Tb/sec or 128 ports running at 800 Gb/sec – or, significantly, 512 ports running at 200 Gb/sec, which is enough for certain kinds of inference workloads and modest training workloads to have one ASIC link to 512 XPUs without anything other than a direct connection. Broadcom adds that the Davisson chip, like the stock Tomahawk 6, can link 131,072 XPUs together in a two-tier network. The spec sheets say more than 100,000, but that is the precise number.
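The port arithmetic is easy to check, and so is the 131,072 figure if you assume a standard non-blocking leaf/spine network built entirely from 512-port switches running at 200 Gb/sec, with half of each leaf’s ports facing XPUs and half facing spines. That topology is our assumption about how the number is derived, not something Broadcom spelled out, and the function names below are ours:

```python
# Port breakouts of a 102.4 Tb/sec ASIC and the endpoint count of a two-tier
# leaf/spine network built from identical switches. The topology is an
# illustrative assumption, not taken from Broadcom documentation.

ASIC_BANDWIDTH_GBPS = 102_400  # 102.4 Tb/sec aggregate switching capacity


def port_count(port_speed_gbps: int) -> int:
    """Ports of a given speed that the ASIC bandwidth can be carved into."""
    return ASIC_BANDWIDTH_GBPS // port_speed_gbps


def two_tier_endpoints(radix: int) -> int:
    """Endpoints in a non-blocking two-tier Clos of identical switches.

    Each leaf sends radix/2 ports down to endpoints and radix/2 up to spines.
    Every spine port lands on a different leaf, so there can be `radix` leaves.
    """
    down_ports_per_leaf = radix // 2
    max_leaves = radix
    return max_leaves * down_ports_per_leaf


for speed in (1_600, 800, 200):
    print(f"{speed} Gb/sec: {port_count(speed)} ports")

radix = port_count(200)
print(f"two-tier endpoints with {radix}-port switches:", two_tier_endpoints(radix))
```

Under these assumptions, 512 leaves each serving 256 XPUs gives 512 × 256 = 131,072 endpoints, fed by 256 spines.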

Among the many things that are cool about the Davisson TH6 CPO setup is the fact that the laser modules for the switch are now field replaceable, not just detachable, through the front panel of the switch. They also no longer sit close to the switch ASIC and the optical engines soldered onto its package, and therefore are away from the heat coming off of them, which messes with lasers and can kill them. This makes the lasers more reliable as well as more serviceable. An 800 Gb/sec port will burn about 3.5 watts, says Broadcom, which is 36.4 percent lower than with the Tomahawk 5 CPO port at the same bandwidth and more than 70 percent lower than pluggable optics at the same bandwidth.
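Here is the per-port power arithmetic across the three CPO generations, using the figures cited above. The 16 watt to 18 watt pluggable range comes from the Tomahawk 4 era comparison, which we are assuming is a fair stand-in for pluggables at 800 Gb/sec:

```python
# Approximate power per 800 Gb/sec port, in watts, as cited in the text.
PORT_POWER_W = {
    "Humboldt (TH4 CPO)": 6.4,
    "Bailly (TH5 CPO)": 5.5,
    "Davisson (TH6 CPO)": 3.5,
}
# Assumption: the TH4-era pluggable range stands in for pluggables generally.
PLUGGABLE_RANGE_W = (16.0, 18.0)


def reduction_pct(old_w: float, new_w: float) -> float:
    """Percentage reduction in power going from old_w to new_w."""
    return 100.0 * (old_w - new_w) / old_w


names = list(PORT_POWER_W)
for prev, cur in zip(names, names[1:]):
    pct = reduction_pct(PORT_POWER_W[prev], PORT_POWER_W[cur])
    print(f"{prev} -> {cur}: {pct:.1f}% lower")

davisson_w = PORT_POWER_W["Davisson (TH6 CPO)"]
for pluggable_w in PLUGGABLE_RANGE_W:
    pct = reduction_pct(pluggable_w, davisson_w)
    print(f"Davisson vs {pluggable_w:.0f} W pluggable: {pct:.1f}% lower")
```

The generation-to-generation numbers reproduce the 14.1 percent and 36.4 percent cited, and Davisson lands between roughly 78 percent and 81 percent below the pluggable range, consistent with “more than 70 percent.”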

This is accomplished in large part through Broadcom working with Taiwan Semiconductor Manufacturing Co on its Compact Universal Photonic Engine (COUPE) packaging technique, which is used to add sixteen 6.4 Tb/sec optical engines around the TH6 package, which comes in at around 120 mm by 120 mm compared to 75 mm by 75 mm for Bailly.
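As a sanity check on the engine counts, the per-engine figure has to be 6.4 Tb/sec for sixteen engines to cover all 102.4 Tb/sec of Tomahawk 6 bandwidth, just as eight of them cover the 51.2 Tb/sec of Tomahawk 5. A quick sketch of that arithmetic for all three generations, plus the package area growth (the `optical_share` helper is ours):

```python
# (engines, Tb/sec per engine, host ASIC capacity in Tb/sec), per the text.
GENERATIONS = {
    "Humboldt (TH4 CPO)": (4, 3.2, 25.6),
    "Bailly (TH5 CPO)": (8, 6.4, 51.2),
    "Davisson (TH6 CPO)": (16, 6.4, 102.4),
}


def optical_share(engines: int, tbps_per_engine: float, asic_tbps: float) -> float:
    """Fraction of the ASIC's switching capacity served by optical engines."""
    return engines * tbps_per_engine / asic_tbps


for name, (engines, per_engine, asic) in GENERATIONS.items():
    optical = engines * per_engine
    share = optical_share(engines, per_engine, asic)
    print(f"{name}: {engines} x {per_engine} = {optical:.1f} Tb/sec optical, "
          f"{share:.0%} of {asic} Tb/sec")

# Package footprint: Bailly is 75 mm square, Davisson roughly 120 mm square.
area_ratio = (120 * 120) / (75 * 75)
print(f"Davisson package area is about {area_ratio:.2f}x Bailly's")
```

Humboldt comes out at 50 percent because the other 12.8 Tb/sec of its bandwidth went out over electrical lanes, while Bailly and Davisson are all optical.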

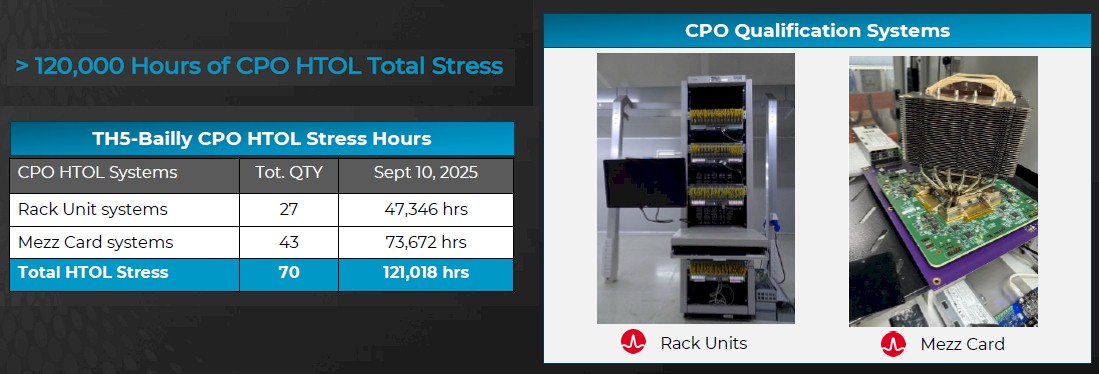

Here is the stress testing gear for the Bailly CPO switches at Broadcom:

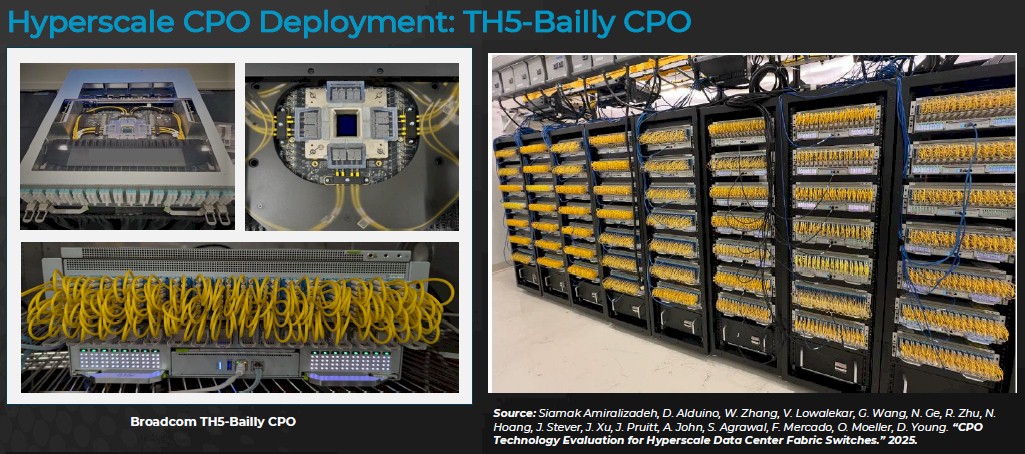

And here is one of the production rows of Bailly CPO switches tested at hyperscaler Meta Platforms:

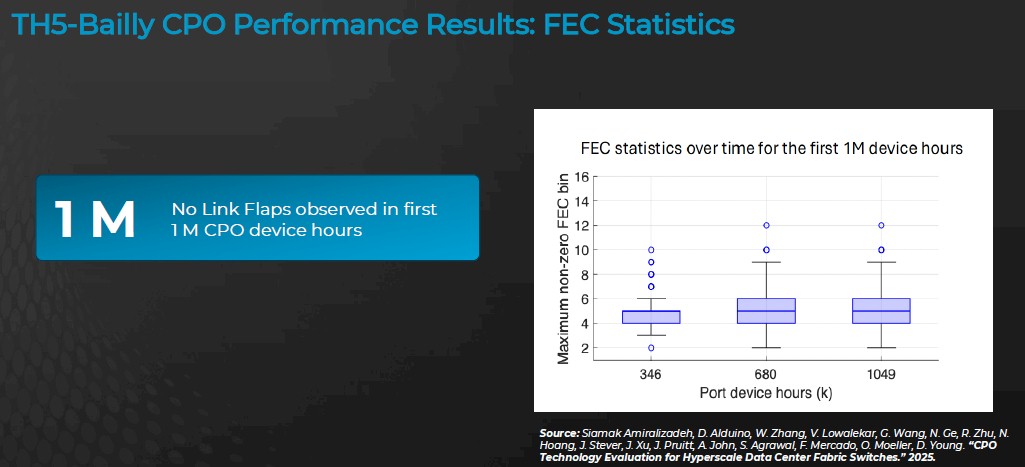

“We’ve continued to develop, learn, and evolve over the last five years,” Manesh Mehta, vice president of marketing and operations for the Optical Systems Division at Broadcom, tells The Next Platform. “I think there are a few areas that we are really focused on. First, it’s clear to us that our customers are starting to really get excited about and value the reliability and link performance of CPO platform the way that we’re building it, which is basically a high density optical engine built using very scalable foundry and OSAT-based manufacturing techniques and then solder-attaching that optical engine to a common substrate with the core ASIC package. And second, what Meta presented two weeks ago was that the first million device hours that they ran on Bailly, there were zero link flaps observed. These link flaps drive a pretty high inefficiency or underutilization of XPU’s compute time with all the checkpoint retries.”

So what is a link flap, you ask? Is it some weird kind of sausage made of an even weirder kind of meat? No. It is when a communication link – a port, a lane, what have you – cycles between being up and being down because of a faulty cable, some software configuration issue, a bad connection, dust on an optical transceiver, or dozens of other possible causes. The link is flapping around like one of those hand-wavy tubular balloons on used car lots.
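Many switch operating systems detect flapping by counting state transitions over a time window. The sketch below is a generic illustration of that idea, assuming a hypothetical log of (timestamp, link up?) samples; the `detect_flaps` function, the 60 second window, and the threshold of three flaps are made-up parameters, not values from any vendor’s software:

```python
from collections import deque


def detect_flaps(events, window_s=60.0, threshold=3):
    """Return timestamps at which a link is judged to be flapping.

    events: iterable of (timestamp_seconds, is_up) samples in time order.
    A "flap" here is an up -> down transition; the link is flagged whenever
    more than `threshold` flaps fall within the trailing `window_s` seconds.
    Both parameters are hypothetical, chosen only for illustration.
    """
    recent = deque()  # timestamps of up -> down transitions inside the window
    flagged = []
    prev_up = None
    for ts, is_up in events:
        if prev_up and not is_up:  # the link just went down
            recent.append(ts)
            # Drop transitions that have aged out of the trailing window.
            while recent and ts - recent[0] > window_s:
                recent.popleft()
            if len(recent) > threshold:
                flagged.append(ts)
        prev_up = is_up
    return flagged


# A link that goes down every 10 seconds for a minute (hypothetical log).
bouncing = [(t, t % 10 == 0) for t in range(0, 60, 5)]
print(detect_flaps(bouncing))
```

A stable link never produces an up to down transition, so it is never flagged; the bouncing link is flagged once its fourth drop lands inside the window.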

Over the first 1 million device hours with the Bailly switches, which we think were manufactured by Micas Networks, who we told you all about here way back in October 2023, there were no link flaps:

“To the best of our knowledge, this is the highest device hours reported for CPO technology operation at the system level,” the techies at Meta Platforms write in their paper. “Over the period of the experiment, each unit ran continuously without interruption or clearing the FEC [forward error correction] counters and we did not see any failures or uncorrectable codewords (UCWs) in the links.”

One port had some funniness over the test run, and it was traced back to a faulty fiber cable.

But here is the most important thing in the paper: “The demonstrated lower bound mean time between failures (MTBFs) of optical links can readily support a 24K GPU AI cluster with >90 percent training efficiency without interconnect failures being the bottleneck.”
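The paper’s efficiency model is not spelled out here, but the basic logic is simple enough: with hundreds of thousands of links in a shared everything job, per-link failure rates add up, and each interruption costs a restart plus whatever work was done since the last checkpoint. Here is a first-order sketch of that logic, assuming independent links with constant failure rates. Every input below except the 24K GPU count is hypothetical and is not taken from the Meta Platforms paper, and this is not Meta’s method:

```python
def cluster_mtbf_hours(link_mtbf_hours: float, num_links: int) -> float:
    """Mean time between link-caused job interruptions for the whole cluster.

    Assumes independent links with exponentially distributed lifetimes, so the
    cluster failure rate is the sum of the per-link rates.
    """
    return link_mtbf_hours / num_links


def training_efficiency(cluster_mtbf_h: float, checkpoint_interval_h: float,
                        restart_h: float) -> float:
    """First-order fraction of wall-clock time spent on useful training.

    Each interruption costs the restart time plus, on average, half a
    checkpoint interval of lost work. Checkpoint-writing time is ignored.
    """
    lost_per_failure = restart_h + checkpoint_interval_h / 2
    return max(0.0, 1.0 - lost_per_failure / cluster_mtbf_h)


GPUS = 24_000                # cluster size quoted from the paper
LINKS_PER_GPU = 8            # hypothetical
LINK_MTBF_HOURS = 50e6       # hypothetical per-link MTBF
CHECKPOINT_INTERVAL_H = 1.0  # hypothetical
RESTART_H = 0.5              # hypothetical

mtbf = cluster_mtbf_hours(LINK_MTBF_HOURS, GPUS * LINKS_PER_GPU)
eff = training_efficiency(mtbf, CHECKPOINT_INTERVAL_H, RESTART_H)
print(f"cluster MTBF {mtbf:.0f} hours, training efficiency {eff:.1%}")
```

The takeaway is the shape of the curve rather than the numbers: cluster MTBF shrinks linearly with link count, so per-link MTBF has to grow with cluster size to hold efficiency steady.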

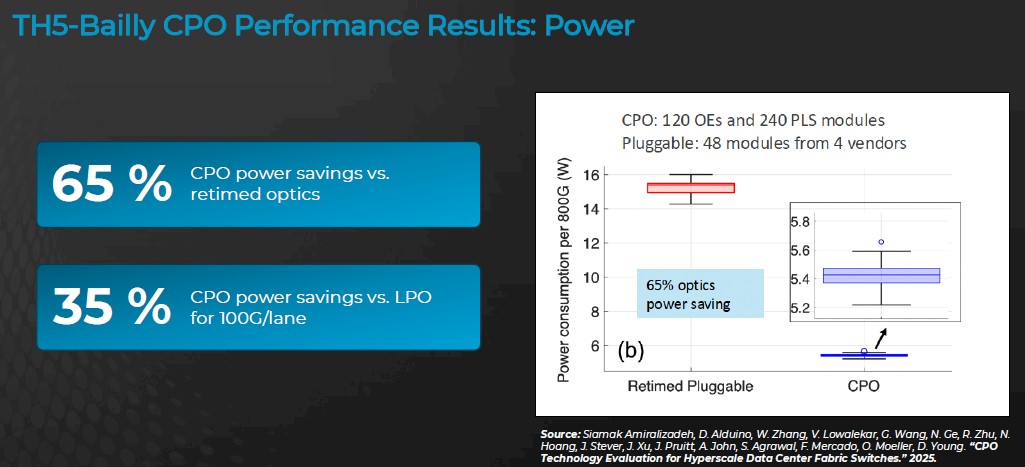

Meta added that the Bailly CPO switch optics delivered 65 percent lower power per 100 Gb/sec lane compared to retimed pluggable optical modules. Here’s the data on that from the paper:

Linear drive pluggable optics, or LPO, is an approach famously advocated by Andy Bechtolsheim of Arista Networks. It takes the DSP out of the network path and has the switch ASIC drive the signal processing itself, which brings power down to somewhere on the order of 10 watts, well below retimed pluggable optics. Even so, the CPO approach burns 35 percent less power per 100 Gb/sec lane than the LPO approach.

This may not seem like a lot until you do the math on a 100,000 XPU cluster with 4 kW XPUs, as Bechtolsheim did in August 2024 in the story linked above. The 6.4 million pluggable optical transceivers needed to interlink the XPUs burned 192 MW of power, compared to 400 MW for the XPUs themselves. LPO dropped this to 64 MW. But CPO will drop this to 42 MW, which is only 10.5 percent of the XPU power.
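Here is that math in a few lines. The 192 MW and 64 MW figures are Bechtolsheim’s as cited; dividing each by 6.4 million modules (our assumption that LPO uses the same module count) implies 30 watts per retimed pluggable and 10 watts per LPO module, which squares with the LPO figure cited above. The CPO figure is derived by taking 35 percent off the LPO number, which gives 41.6 MW, or about the 42 MW quoted:

```python
NUM_XPUS = 100_000
XPU_KW = 4.0
NUM_TRANSCEIVERS = 6_400_000  # module count from Bechtolsheim's example

xpu_mw = NUM_XPUS * XPU_KW / 1_000  # total accelerator power in MW
pluggable_mw = 192.0                # retimed pluggables, as cited
lpo_mw = 64.0                       # linear drive pluggables, as cited
cpo_mw = lpo_mw * (1 - 0.35)        # CPO burns 35% less than LPO per lane

# Implied per-module power, assuming LPO uses the same number of modules.
watts_per_pluggable = pluggable_mw * 1e6 / NUM_TRANSCEIVERS
watts_per_lpo = lpo_mw * 1e6 / NUM_TRANSCEIVERS

for name, mw in (("pluggable", pluggable_mw), ("LPO", lpo_mw), ("CPO", cpo_mw)):
    print(f"{name:>9}: {mw:6.1f} MW = {mw / xpu_mw:5.1%} of XPU power")
print(f"per module: pluggable {watts_per_pluggable:.0f} W, LPO {watts_per_lpo:.0f} W")
```

Rounding CPO to 42 MW gives exactly 10.5 percent of the 400 MW of XPU power.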

This is real money. A few years ago, supercomputing centers were budgeting power at $1 million per MW-year, but in high demand areas like Northern Virginia or Silicon Valley, it is more like $1.2 million to $1.5 million per MW-year. So at the high end of that pricing, pluggable optics for 100,000 XPUs would cost $1.44 billion to run over five years, while LPO would cost $480 million and CPO would cost $315 million. The $1.13 billion in power savings from switching from pluggable optics to CPO would cover the cost of around 32,000 “Blackwell” GPU accelerators at $35,000 a pop. For lower-cost XPUs, that $1.13 billion not spent on electricity could easily buy twice as many XPUs.
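The electricity math works out like this at the $1.5 million per MW-year high end of the range. It ignores cooling overhead, which would scale all three numbers up and widen the gap; the `five_year_cost_usd` helper is ours:

```python
RATE_USD_PER_MW_YEAR = 1.5e6  # high end of the quoted range
YEARS = 5
BLACKWELL_USD = 35_000        # per-GPU price used in the text

OPTICS_MW = {"pluggable": 192.0, "LPO": 64.0, "CPO": 42.0}


def five_year_cost_usd(mw: float, rate: float = RATE_USD_PER_MW_YEAR,
                       years: int = YEARS) -> float:
    """Electricity cost of a constant load of `mw` megawatts over `years`."""
    return mw * rate * years


costs = {name: five_year_cost_usd(mw) for name, mw in OPTICS_MW.items()}
savings = costs["pluggable"] - costs["CPO"]
gpus_covered = savings // BLACKWELL_USD

for name, usd in costs.items():
    print(f"{name:>9}: ${usd / 1e6:,.0f} million over {YEARS} years")
print(f"pluggable -> CPO savings: ${savings / 1e9:.3f} billion, "
      f"or about {gpus_covered:,.0f} GPUs at ${BLACKWELL_USD:,}")
```

Passing `rate=1.2e6` shows the same comparison at the low end of the high-demand range.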

This seems like a freaking no-brainer to us. Particularly when the CPO units are actually more reliable and the lasers are going to be field replaceable with the Davisson generation of ASICs from Broadcom.

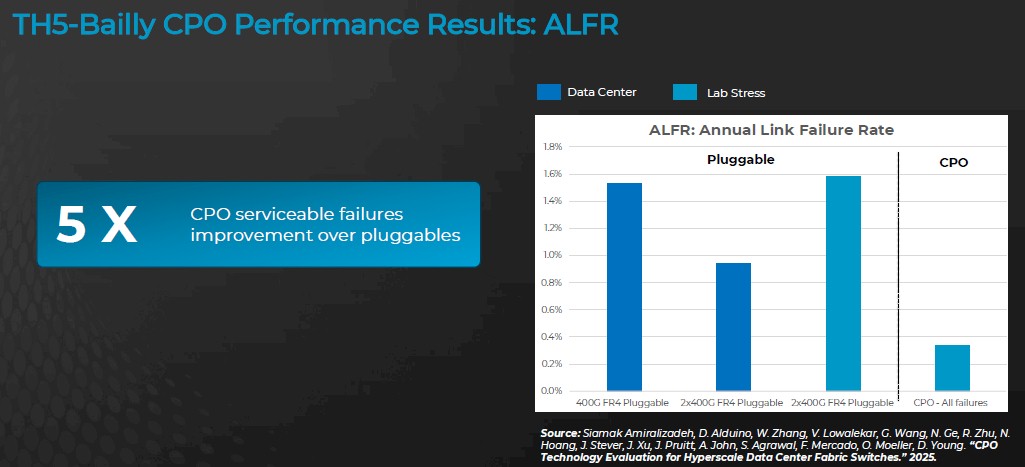

Here’s the reliability data from the Meta Platforms paper, starting with annual link failure rates:

Remember, an AI cluster doing training is a shared everything architecture, and the stoppage of one link or one GPU stalls all computation in the cluster. A failure is a big deal. So CPO links failing 5X less often than pluggable optics is a very, very big deal. There is no data presented for LPO in the Meta Platforms paper, unfortunately.
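To see why a 5X cut in annual link failure rates matters so much, here is a sketch that turns a per-link annual failure rate (AFR) into expected failures per year and the chance that a job touching every link gets through a day without a link-induced stall. The 100,000 link count and the 0.5 percent pluggable AFR are hypothetical placeholders, not the AFRs in the Meta Platforms paper; only the 5X ratio comes from the text:

```python
import math

HOURS_PER_YEAR = 8_760


def expected_failures_per_year(num_links: int, afr: float) -> float:
    """Expected link failures per year across the cluster (AFR as a fraction)."""
    return num_links * afr


def p_uninterrupted(num_links: int, afr: float, job_hours: float) -> float:
    """Chance a job that needs every link runs job_hours with no link failure.

    Assumes independent links and a constant (Poisson) failure rate.
    """
    failures_per_hour = expected_failures_per_year(num_links, afr) / HOURS_PER_YEAR
    return math.exp(-failures_per_hour * job_hours)


LINKS = 100_000              # hypothetical
AFR_PLUGGABLE = 0.005        # hypothetical 0.5 percent per link per year
AFR_CPO = AFR_PLUGGABLE / 5  # the 5X ratio from the text

for name, afr in (("pluggable", AFR_PLUGGABLE), ("CPO", AFR_CPO)):
    print(f"{name:>9}: {expected_failures_per_year(LINKS, afr):.0f} link failures/year, "
          f"P(24-hour job uninterrupted) = {p_uninterrupted(LINKS, afr, 24):.2f}")
```

Because the job stalls on any link failure, the cluster-wide interruption rate scales linearly with the per-link AFR, so 5X fewer link failures means 5X fewer stalls.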

And here is the mean time between failures for CPO versus pluggable optics from the Meta Platforms paper:

The pluggable optics figures use actual datacenter failure rates, while the CPO figures use lab stress failure rates because Meta Platforms did not see any failures in its datacenter test. And by the way, Mehta says that the lab conditions where the Bailly CPO was tested were much harsher than the environment in the Meta Platforms datacenters, because the lab runs the gear too hot or vibrates it a lot in an explicit attempt to cause failures, not to avoid them.

All of this brings us back to the Davisson CPO switch, which we expect will see much broader adoption for scale-out networks in AI clusters as well as for the Clos networks in use by hyperscalers and cloud builders for more generic infrastructure and data analytics workloads.

Here is a zoom shot on the Davisson package, with the 102.4 Tb/sec Tomahawk 6 ASIC in the center and the sixteen optical interconnects wrapped around the perimeter of the chip:

And here is an early version of a Davisson CPO switch:

Corning is partnering with Broadcom to deliver fiber harnesses and cable assemblies to hook the optical ports to the front of the switch chassis, TSMC and SPIL do the packaging, and we presume that Micas Networks, Celestica, and Nexthop.ai – who have been working with Broadcom on the Bailly CPO switches – will be working with the company on Davisson CPO switches, too.

What we hope, however, is that Broadcom makes a CPO version of its Tomahawk Ultra “InfiniBand Killer” switch ASIC, which was announced in July and which is being positioned as the Ethernet for scale up networks for sharing XPU memories in rackscale nodes used in AI clusters. We think it would be very interesting to see CPO ports on accelerators matched to CPO ports on switch chips, as we have pointed out time and again. It is OK to start with the scale out network, but ultimately, we need such links everywhere, even for memory banks and flash banks, so we can have more options to connect components and to save power.
