We now have machines that can “think” and “reason”.

Well, approximations of “thought” and “reason” that are good enough for most people anyway.

Humans have been outsourcing thought to other humans, or groups of other humans, since the beginning of time. This is how ideas spread and culture is created: by taking someone else’s word for it. So what’s different now?

What happens when you start outsourcing your thoughts to an AI?

I call this phenomenon “Thoughtsourcing”. Here’s an official definition (that I just made up).

"Thoughtsourcing is the increasing reliance on artificial intelligence and large language models to generate ideas, solve problems, and formulate responses, effectively outsourcing cognitive processes."

The allure of thoughtsourcing is hard to resist, especially as AI models get better with every new release. We could achieve amazing things by removing today’s bottleneck on how much quality thinking we can do as a species. Just imagine if the right amount of the right kind of “thought” could solve pollution, poverty, famine, war, the climate crisis, or any other problem you can think of, and then go further still: curing cancer, interstellar travel, space colonization and more.

The stakes are high, probably so high that we’ve already boarded a runaway train that guarantees enough money and resources to scale this to its logical conclusion. A machine god will be built, or we will die trying.

And we don’t even need to reach those rarefied realms. Think about the mundane benefits that AI already offers: freedom from repetitive, thankless and uninteresting tasks. A human intelligence effectively augmented with AI can clearly achieve a lot more, and it’s not just about productivity gains; it’s also about the time and energy you can spend elsewhere, doing what really matters to you.

So what price would you be willing to pay for such freedom?

Leaving aside the risks of a misaligned superintelligence, let’s think about what “thoughtsourcing” will do (and is already doing) to us humans.

Your brain is an organ, and your mind needs exercise just like any other part of you. If you stop using parts of it, they will shrivel, shrink and eventually become vestigial. It’s so much easier to ask ChatGPT (or one of its brethren) how to solve a problem, how to respond to a prickly email, how to fix your code, how to say the right thing to someone, or... (the list goes on), that it’s easy to fall into the trap of turning to the AI when you needn’t or shouldn’t.

You can see this phenomenon more and more these days. It’s especially visible on social media (LinkedIn is the worst culprit in this regard, and especially cringe): posts and replies that are so clearly and obviously (to those who know) written by a large language model. The telltale signs are all there: over-reliance on certain catchphrases and words (“tapestry”, “delve”, “In this world of..”), and also something less tangible but clearly recognizable as what an LLM would output given the barest of instructions or prompting, with no human editing.

Sometimes it’s personal posts about supposedly thought-provoking incidents in the author’s life, but casual prompting can never capture the idiosyncrasies that make a story truly “personal”.

Sometimes it’s inane automatic replies to other people’s posts that just restate the subject in different words. In other words, it’s engagement farming: it provides zero value and wastes both screen real estate and time.

And sometimes you even get to see an AI masquerading as a human responding to another AI masquerading as a human. What is the point of all this performative posting?

Social media just happens to be where thoughtsourcing is most visible, but it’s happening everywhere:

In workplaces everywhere, employees are thoughtsourcing ideas, emails, business plans and code.

Imagine a marketing team relying solely on AI to generate campaign ideas. It might show positive results to start with and save the team time and money, but it could just as easily lead to homogenized, less innovative content that never uncovers the unique voice of the brand.

In schools and universities, homework, essays and assignments are at the cutting edge of thoughtsourcing (as is to be expected).

If students are using AI to produce their work, what do they really learn besides thoughtsourcing? AI-generated content detectors don’t really work, and relying on them just means that the teachers are thoughtsourcing as well.

Even in our personal lives and communication, companies like Google and Meta are now suggesting we use AI to draft, rewrite, summarize or respond to emails.

Yes, it’s all very convenient, but if I send you an AI-generated email and you use AI to summarize and respond to it, did we just have an actual conversation? Or is this just recursive thoughtsourcing, leading to eventual cognitive collapse?

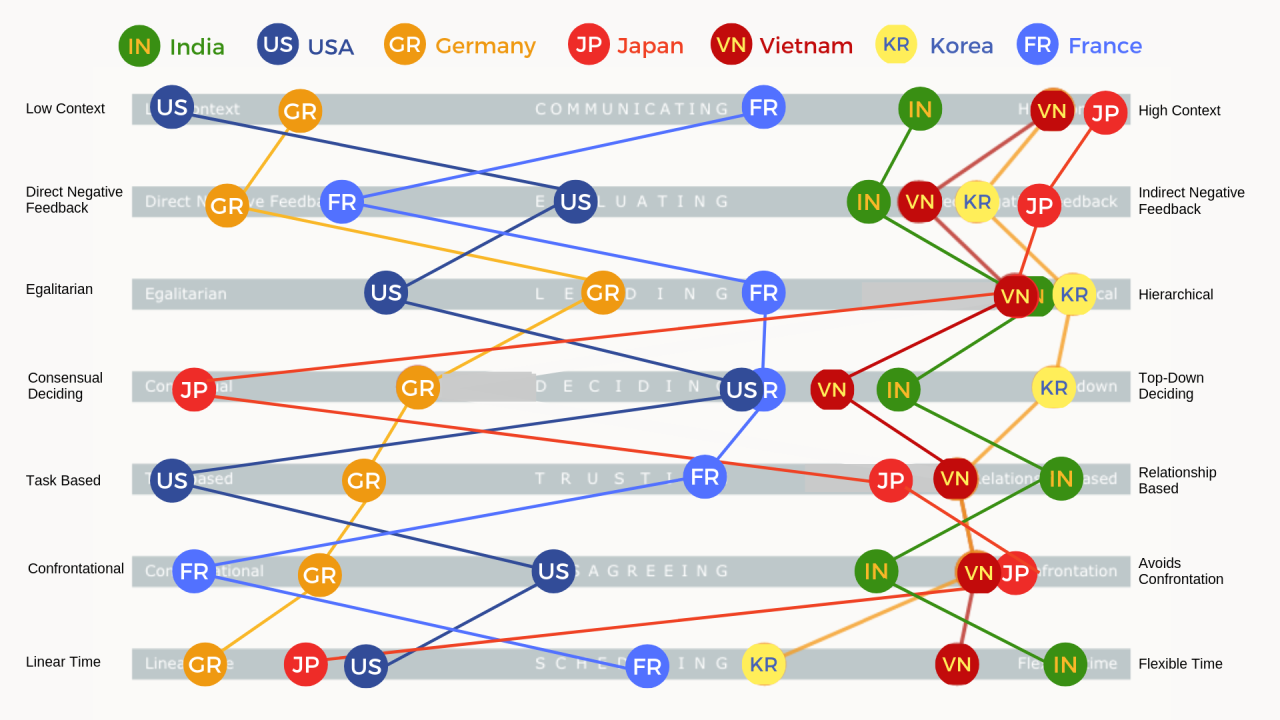

In many ways thoughtsourcing is similar to what happens when you are part of a culture.

What is culture but knowingly or unknowingly submitting to the ideas and customs distilled across an entire population of people?

And what are large language models but the collective thoughts (expressed in text, audio and video) of an entire population of people?

Culture is neither good nor bad, but it can have real-world effects that are good or bad for the people who are part of it.

Culture is quintessentially human. Thoughtsourcing is not.

Culture is naturally curated for you by your family, reinforced by the people around you, and assimilated through books, entertainment and nowadays, the Internet.

Cultural values emerge organically through the lived experiences, interactions and traditions of a community. Culture evolves over time and is (imperfectly) self-correcting; it is subject to bias but open to challenge.

Large language models, on the other hand, are built by companies and research labs of a few hundred people, often thousands of miles away, whose values and life experiences may be alien to you.

The values in these AI models emerge from the data they are trained on and are further shaped by human labelers through reinforcement learning from human feedback (RLHF). These values are subject to the biases of a small group of developers and may reflect less rigorously debated viewpoints that have not had to evolve in the arena of ideas. (Most people never get to experience these models in their raw, unfiltered form; instead they get a neutered version that has been straitjacketed by system prompts and RLHF.)

So what do we do? Do we stop using these extremely convenient and powerful tools that we have built? No, I don’t think so; in any case, that ship has sailed. But this doesn’t mean that we do nothing and autopilot our way into oblivion.

I am definitely no Luddite. Quite the opposite, in fact: I am a child of science fiction and have always been a techno-optimist.

If I had to decide, I would always choose a future with superintelligence over one without it.

But maintaining the ability and the motivation for independent thought will be hard, and it will get harder with each passing year. I think it might be one of the greatest challenges Homo sapiens has ever had to face.

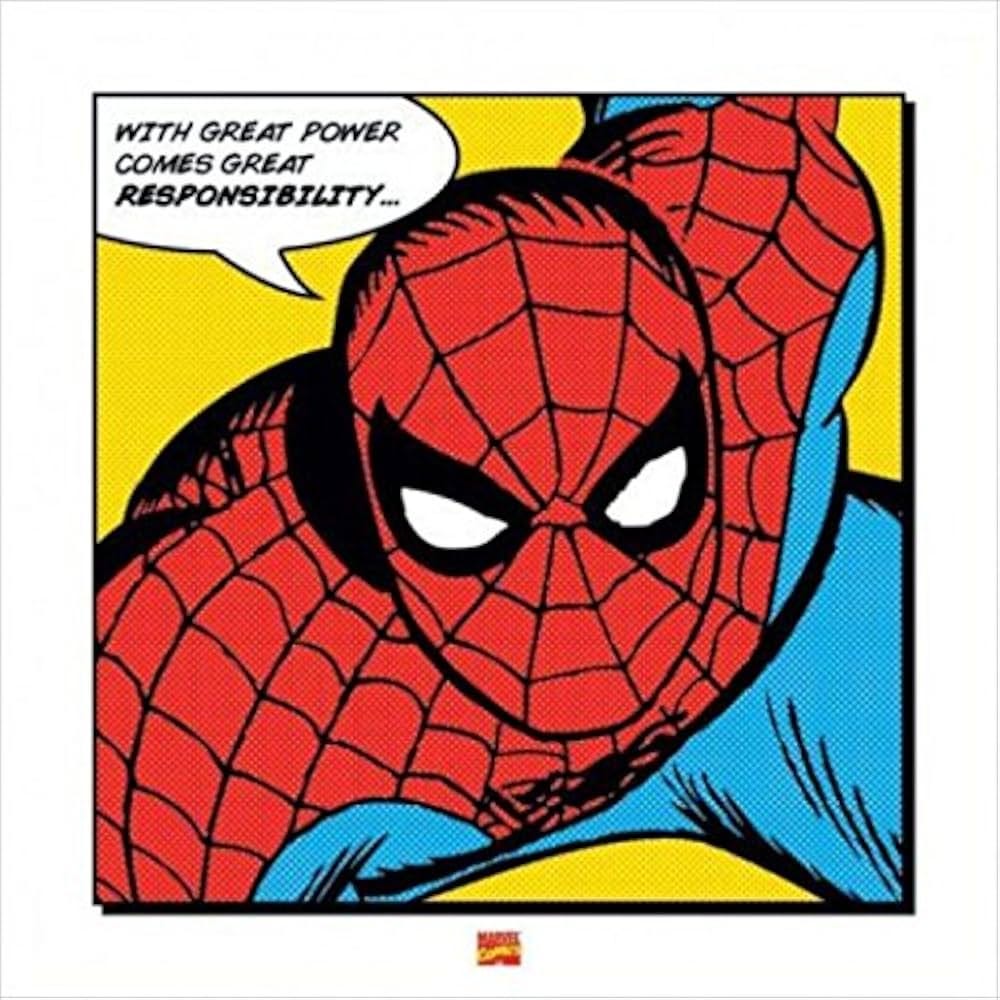

The next time you are tempted to thoughtsource, just think of Spider-Man.
