Is Machine Learning Becoming Obsolete?

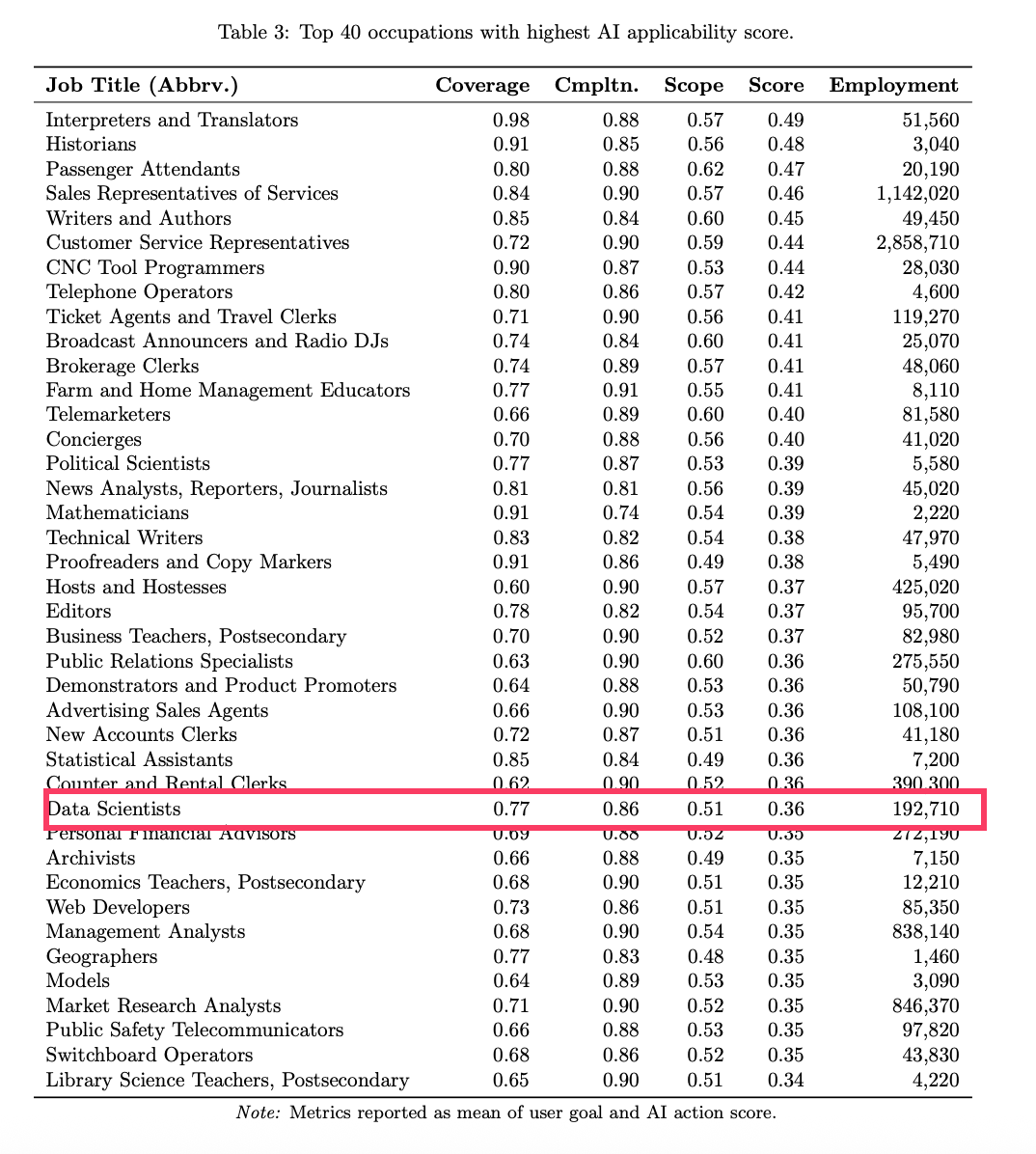

A paper released just last week by Microsoft, "Working with AI: Measuring the Occupational Implications of Generative AI," ranked 'Data Scientist' among the occupations with the highest potential exposure to Generative AI (i.e., one of the top 40 jobs to be replaced by AI).

This wasn't a death knell; it was a signal of a seismic shift happening right now.

The traditional, defining task of a data scientist -- painstakingly building bespoke models from scratch -- is being replaced by a new paradigm: assembling sophisticated solutions from powerful, pre-existing components.

What once required weeks of custom engineering can now be accomplished in minutes. The skill that is becoming "obsolete" isn't the understanding of machine learning; it's the muscle memory of building everything from the ground up, starting with `from sklearn.model_selection import train_test_split`.

The Old Playbook: A World of Builders

Not long ago, the playbook for applied Data Science and Machine Learning was clear. The path to solving a business problem was paved with custom-built models.

- For Text: Need to understand customer sentiment? You’d fine-tune a BERT model on thousands of labeled tweets or reviews, a multi-week effort of data collection, labeling, and training.

- For Video: Want to find a specific product in a video feed? You’d implement a YOLO model, painstakingly label thousands of frames for training, and build a complex pipeline to process the video stream in real-time.

- For Audio: Need to transcribe and analyze support calls? You’d architect a custom speech-to-text model from scratch, train it on massive audio datasets, and still struggle with unique accents and domain-specific jargon.

Each of these was a significant undertaking, requiring deep expertise, vast amounts of labeled data, and rigorous MLOps engineering. It was a bottom-up world. Today, that entire playbook requires a massive mindset shift - a "great unlearning" of the default "build-from-scratch" approach.

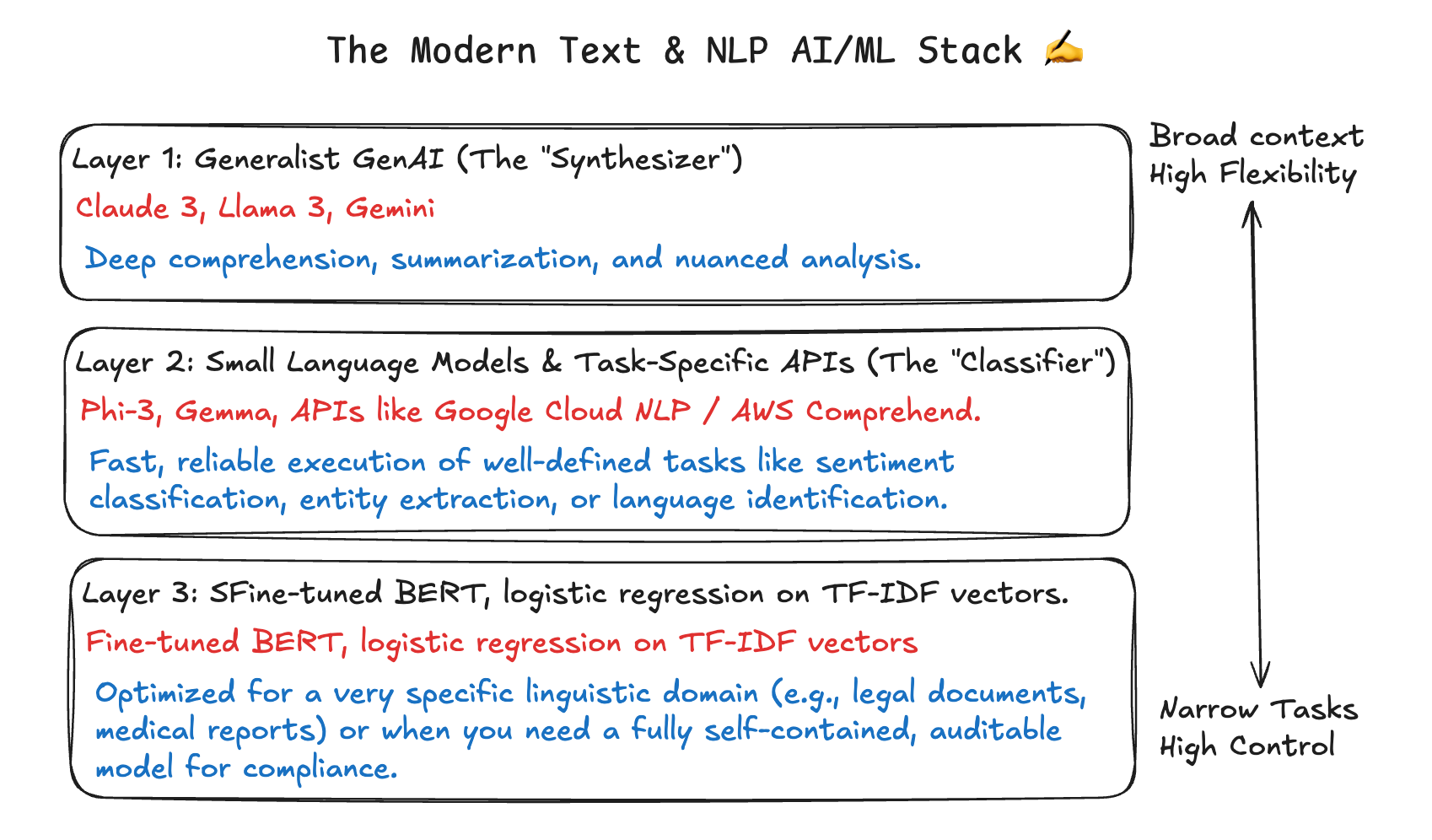

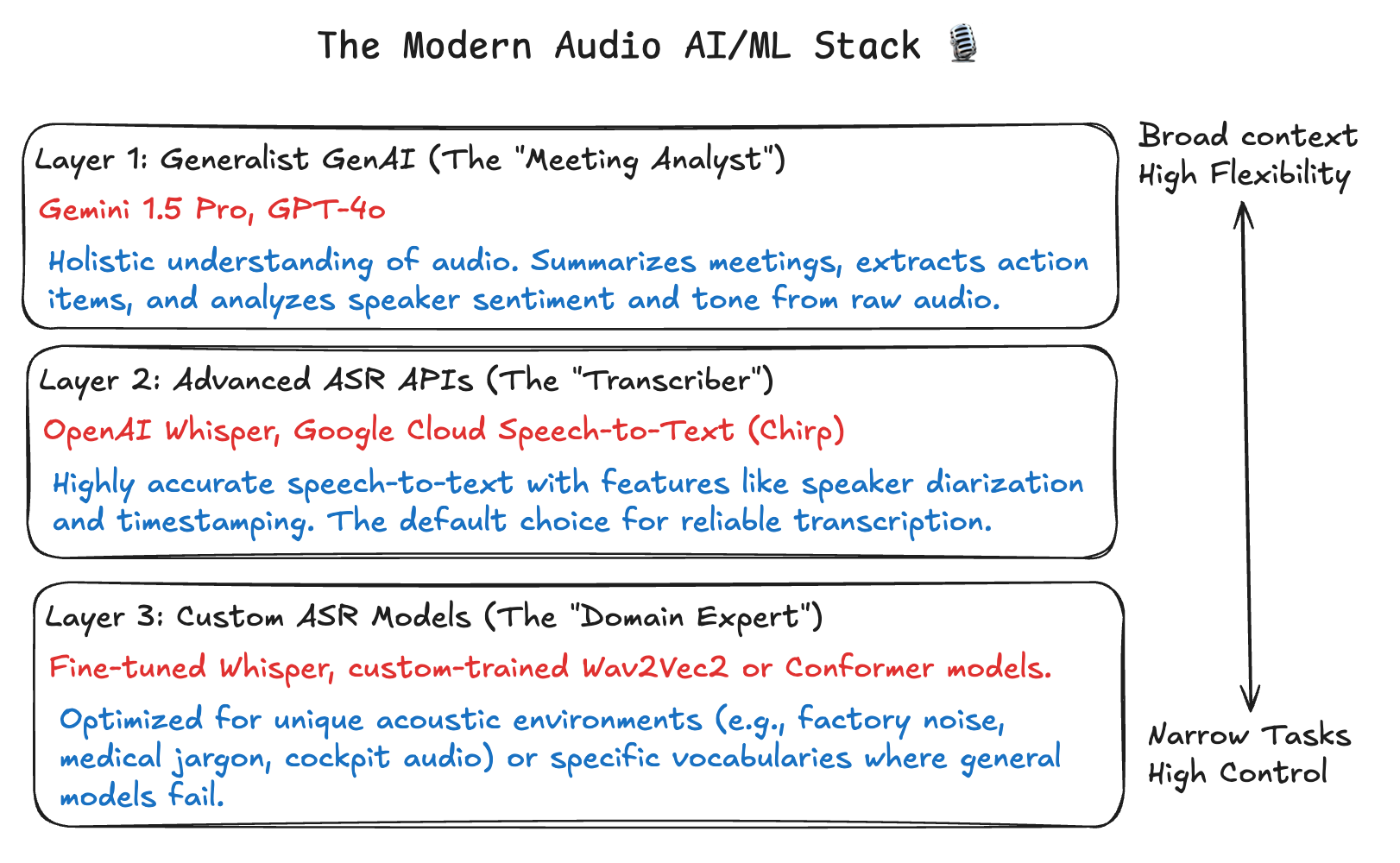

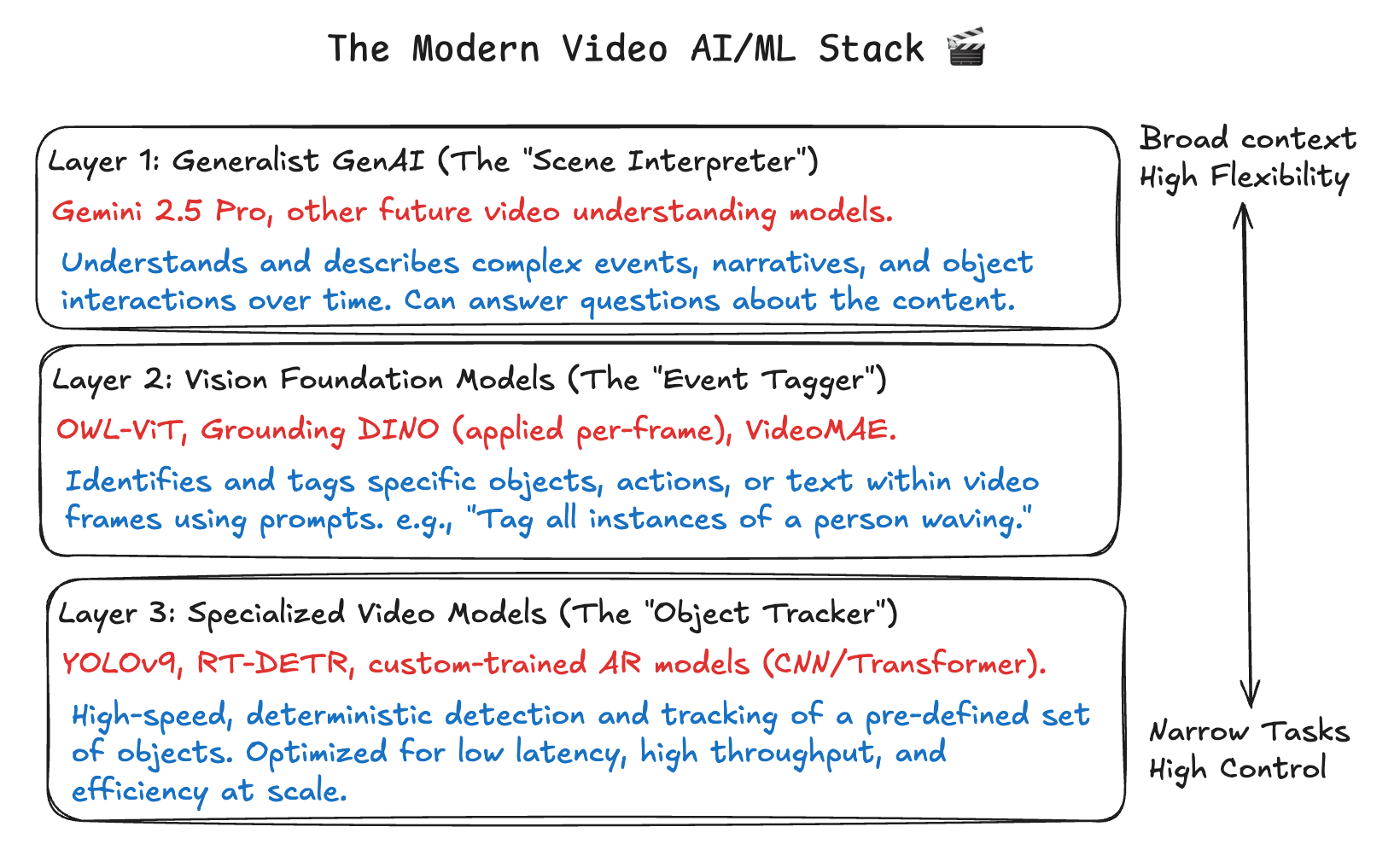

The Assembler's Playbook: Diving From the Top

Instead of building from the bottom-up, elite AI teams now assemble solutions by diving down from the top. This "GenAI-First" strategy is the core of the modern assembler's playbook.

The process starts by using the most powerful component available - a generalist GenAI model (Layer 1) - to get an immediate, high-quality result. From there, you strategically select and integrate more specialized components from lower layers (Layer 2 or 3) only when needed for optimization, cost, or control. This isn't just a different workflow; it's a fundamentally smarter way to engineer value.
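One way to picture this top-down workflow is a small selector that always tries the generalist model first and moves to a lower layer only when a cost or latency constraint rules the higher one out. The sketch below is illustrative only: the `Component` class, the `pick_component` helper, the component names, and every cost and latency figure are assumptions made up for this example, and the `run` functions are stubs standing in for real model calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Component:
    """A hypothetical solution component at one layer of the stack."""
    name: str
    layer: int                 # 1 = generalist GenAI, 2 = foundation model / API, 3 = specialized
    cost_per_call: float       # made-up figure, not real pricing
    latency_ms: float          # made-up figure, not a benchmark
    run: Callable[[str], str]  # stub standing in for a real model call


def pick_component(
    components: List[Component],
    max_cost: Optional[float] = None,
    max_latency_ms: Optional[float] = None,
) -> Component:
    """Start at Layer 1 and move down only when a constraint is not met."""
    for c in sorted(components, key=lambda c: c.layer):
        if max_cost is not None and c.cost_per_call > max_cost:
            continue
        if max_latency_ms is not None and c.latency_ms > max_latency_ms:
            continue
        return c
    raise ValueError("no component satisfies the constraints")


# Hypothetical sentiment-analysis stack; every entry is a placeholder.
STACK = [
    Component("generalist-llm", 1, 0.01, 1500.0, lambda text: "positive"),
    Component("sentiment-api", 2, 0.001, 200.0, lambda text: "positive"),
    Component("custom-classifier", 3, 0.00001, 5.0, lambda text: "positive"),
]

prototype = pick_component(STACK)                       # no constraints
production = pick_component(STACK, max_cost=0.005)      # cost cap
realtime = pick_component(STACK, max_latency_ms=10)     # latency cap
print(prototype.name, production.name, realtime.name)
```

Because every component exposes the same `run` signature, the rest of the application does not need to change when a Layer 1 prototype is later swapped for a Layer 2 or Layer 3 component.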

This layered assembly applies across all major data modalities.

You might wonder how this applies to traditional use cases like sales forecasting from structured, tabular data. Even here, the 'assembling' mindset is taking hold. While custom models like XGBoost remain critical (Layer 3), they are now frequently augmented by foundation models that generate predictive features from text, or automated by powerful AutoML platforms (Layer 2).

The New Core Competency: Knowing Which Component to Use

Understanding this stack is one thing; knowing how to navigate it is the new core competency for any applied AI practitioner. The strategic value is no longer just in building, but in choosing the right layer for the job.

Here is a simple framework for making that decision:

Start at Layer 1 (Generalist GenAI) when:

- Speed of Development is Critical: You need to build a proof-of-concept or deliver business value immediately.

- The Problem Requires Deep Context: The task involves reasoning, summarization, generation, or understanding nuance that a simple classifier would miss.

- The Input is Ambiguous or Varied: You need a model that is flexible enough to handle a wide range of unstructured prompts and user requests.

Dive to Layer 2 (Foundation Models / APIs) when:

- You Need to Solve a Well-Defined Problem at Scale: The task is a common one, like transcription, translation, or basic sentiment analysis.

- Cost and Performance Need to be Balanced: You need a reliable, production-grade solution that is more cost-effective and faster than a massive generalist model for a specific task.

- An Off-the-Shelf Solution is "Good Enough": A powerful, pre-trained API or model can meet 95% of your requirements without the overhead of custom development.

Descend to Layer 3 (Specialized Models) when:

- You Require Maximum Control and Determinism: The application demands predictable, repeatable outputs, which can be a challenge for more creative generative models.

- You Need Extreme Optimization: Your use case requires ultra-low latency or must be cost-optimized for millions of calls per day.

- Your Domain is Highly Specialized: You are working with unique data (e.g., proprietary legal documents, specific medical imagery, noisy industrial audio) where general models are insufficient and a custom-trained model is the only way to achieve the required accuracy.
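The framework above can be read as a short checklist. The following is a minimal sketch of one possible encoding, not a definitive rule set: the `Requirements` fields and the `choose_layer` function are hypothetical names invented here, and the order of the checks (Layer 3 criteria treated as hard constraints, then Layer 2, with Layer 1 as the default) is an assumption about how the criteria interact.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical checklist mirroring the criteria listed above."""
    # Layer 3 criteria
    needs_determinism: bool = False
    needs_extreme_optimization: bool = False   # ultra-low latency or millions of calls per day
    highly_specialized_domain: bool = False
    # Layer 2 criteria
    well_defined_common_task: bool = False     # e.g. transcription, translation
    must_balance_cost_and_performance: bool = False
    off_the_shelf_is_good_enough: bool = False


def choose_layer(req: Requirements) -> int:
    """Return 1, 2, or 3 for the layer to start from (assumed precedence)."""
    # Assumption: Layer 3 criteria are hard constraints, so they win.
    if (req.needs_determinism
            or req.needs_extreme_optimization
            or req.highly_specialized_domain):
        return 3
    # Any Layer 2 criterion moves the work off the generalist model.
    if (req.well_defined_common_task
            or req.must_balance_cost_and_performance
            or req.off_the_shelf_is_good_enough):
        return 2
    # Default: start with the generalist GenAI model.
    return 1


print(choose_layer(Requirements()))                               # quick prototype
print(choose_layer(Requirements(well_defined_common_task=True)))  # transcription at scale
print(choose_layer(Requirements(highly_specialized_domain=True))) # proprietary medical imagery
```

In practice these criteria are judgment calls rather than booleans, so a function like this is better treated as a conversation starter for a team than as an automated gate.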

The great unlearning isn't about discarding ML knowledge. It’s about re-calibrating our identity. The future doesn't belong to the craftsman who can only build a perfect, single component from scratch. It belongs to the system architect who can skillfully assemble these components into a powerful, cohesive solution. The most valuable AI practitioners of tomorrow will be the assemblers, not just the builders.
