When the eye sees a character, it perceives a glyph: a single visual form. Computers instead represent the character behind that glyph as a number (a code point) drawn from a fixed range of values (the codespace). Two strings may look identical to the eye, yet be different to the machine.

To work with text is to know the difference between what is seen and what is unseen.

The novice’s confusion is a common one. Two strings can appear to be the same character yet possess different underlying structures. This is the issue of canonical equivalence in Unicode.

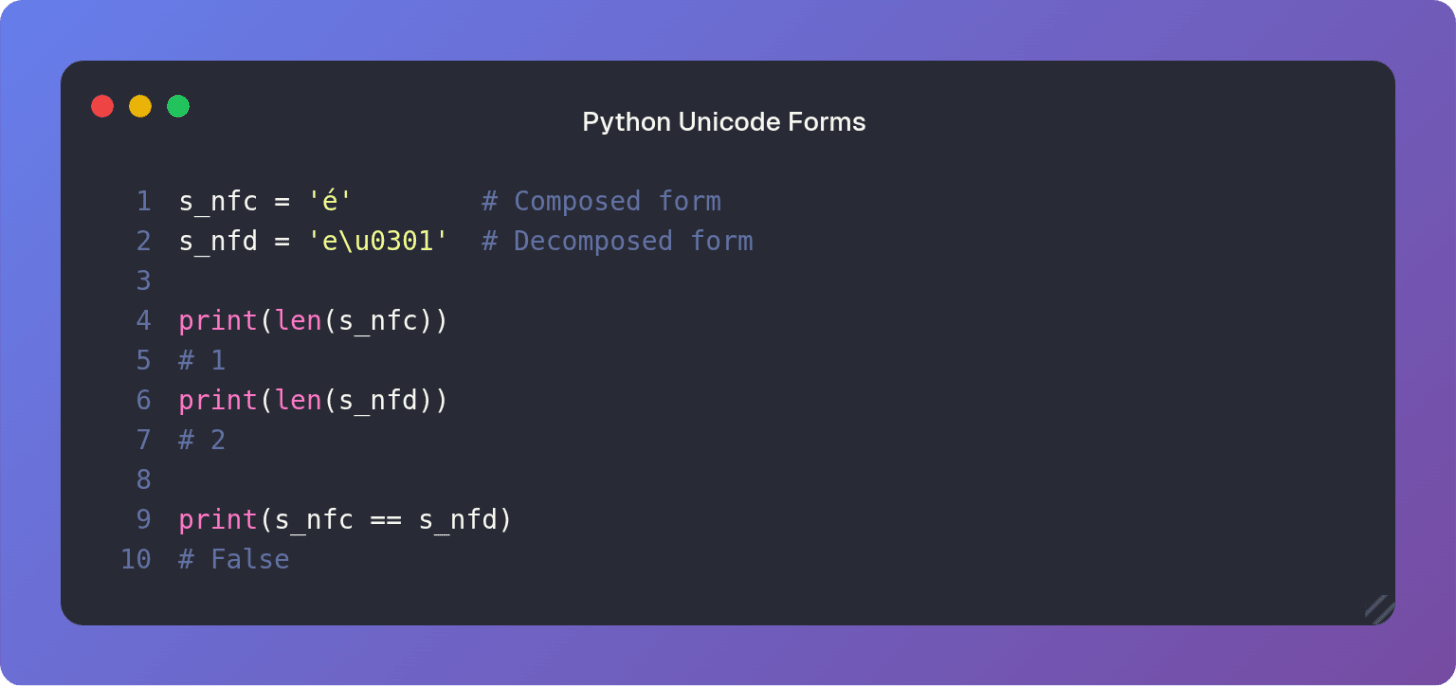

Consider the character é. It can be represented in two ways:

Normalization Form C (NFC): A single code point, U+00E9 (LATIN SMALL LETTER E WITH ACUTE). This is the composed form.

Normalization Form D (NFD): Two code points, U+0065 (LATIN SMALL LETTER E) followed by U+0301 (COMBINING ACUTE ACCENT). This is the decomposed form.

Python treats them as distinct sequences of code points, which are unequal in length and content:

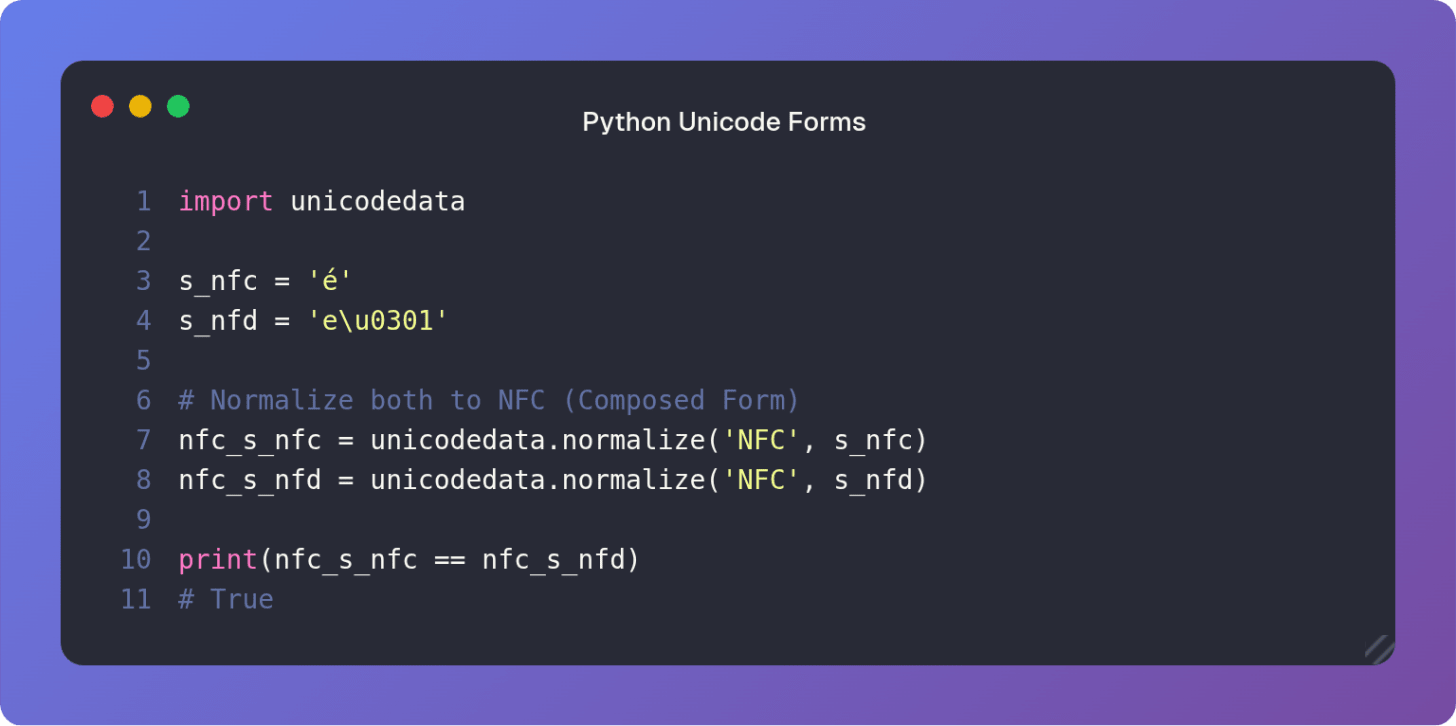
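Here is a minimal sketch of that comparison, using the two sequences described above. The variable names are illustrative. It also lists each code point by its Unicode name via `unicodedata.name()`:

```python
import unicodedata

composed = "\u00e9"      # NFC: one code point, LATIN SMALL LETTER E WITH ACUTE
decomposed = "e\u0301"   # NFD: 'e' followed by COMBINING ACUTE ACCENT

print(composed, decomposed)            # both render as é
print(composed == decomposed)          # False: different code point sequences
print(len(composed), len(decomposed))  # 1 2

# Show every code point with its official Unicode name
for s in (composed, decomposed):
    print([f"U+{ord(c):04X} {unicodedata.name(c)}" for c in s])
```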

To compare strings by their canonical meaning rather than by their raw code points, we must normalize them. Python’s unicodedata module provides the tool for this alignment.

The unicodedata.normalize() function converts a string to a standard normal form (NFC, NFD, NFKC or NFKD). Sequences that look the same because they are canonically equivalent then become identical, code point for code point.

By normalizing both strings to NFC, we ensure that the decomposed form e\u0301 is converted into its single code point composed form é. This makes the comparison meaningful and true to the eye’s perception.
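Here is a minimal sketch of the normalization step. `canonically_equal` is a hypothetical helper name and is not part of `unicodedata`. It normalizes both arguments to the same form before comparing them:

```python
import unicodedata

composed = "\u00e9"      # é as one code point
decomposed = "e\u0301"   # é as 'e' + COMBINING ACUTE ACCENT

def canonically_equal(a: str, b: str, form: str = "NFC") -> bool:
    """Hypothetical helper: compare two strings after normalizing both to `form`."""
    return unicodedata.normalize(form, a) == unicodedata.normalize(form, b)

print(composed == decomposed)                                # False: raw code points differ
print(canonically_equal(composed, decomposed))               # True
print(unicodedata.normalize("NFC", decomposed) == composed)  # True
```

Either NFC or NFD works for the comparison, as long as both sides are normalized to the same form.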

The common wisdom for text processing is:

Use NFC (Composition) for storage and transmission of Unicode text.

Use NFD (Decomposition) for text processing and complex comparisons.
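Accent-insensitive matching is one processing task where NFD helps. Decomposing the text first separates base letters from their combining marks, which can then be filtered out. The sketch below assumes that dropping every combining mark is acceptable for your data, which is not true for many languages. `strip_accents` is a hypothetical helper name. Letters with no canonical decomposition, such as ø, pass through unchanged.

```python
import unicodedata

def strip_accents(text: str) -> str:
    """Hypothetical helper: remove combining marks, return NFC text."""
    # Decompose so that accents become separate combining code points
    decomposed = unicodedata.normalize("NFD", text)
    # Keep only code points whose canonical combining class is 0
    stripped = "".join(c for c in decomposed if not unicodedata.combining(c))
    # Recompose the remainder, following the NFC-for-storage advice above
    return unicodedata.normalize("NFC", stripped)

print(strip_accents("café crème"))  # cafe creme
```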

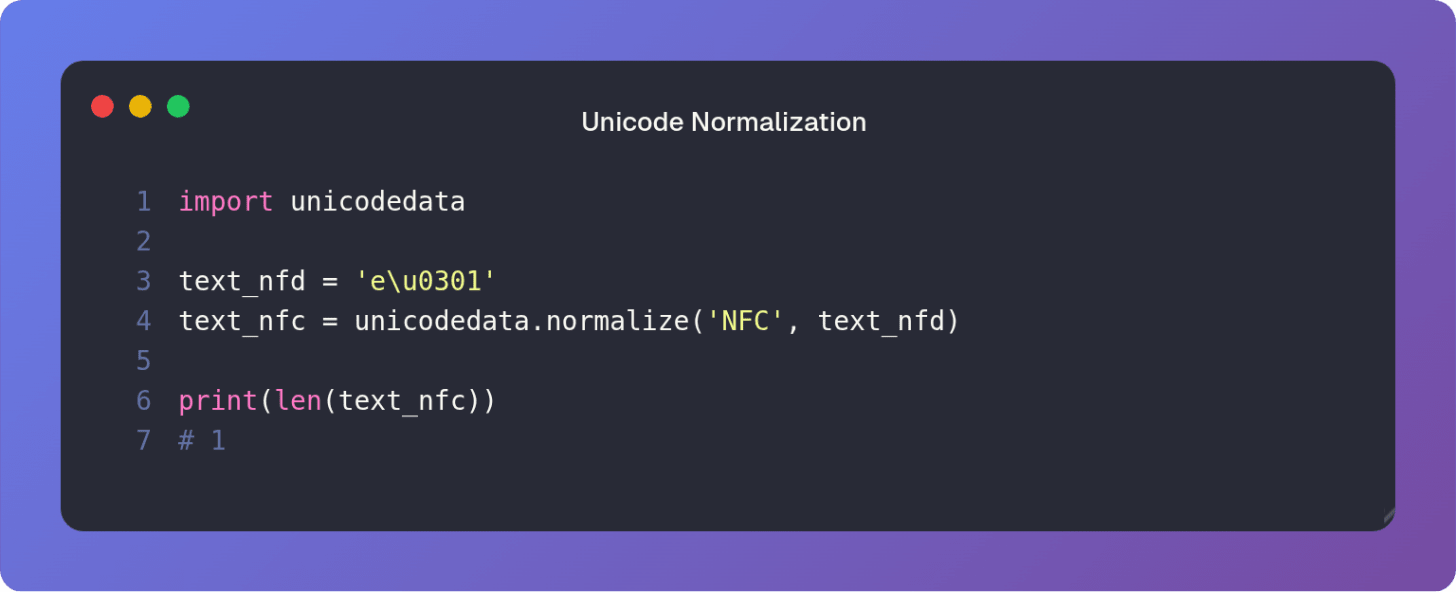

As we saw previously, the built-in len() function counts code points, not visual characters (graphemes). This distinction matters for tasks such as truncating displayed text or moving a cursor, which operate on grapheme clusters.

To count visual characters correctly, one must implement a more sophisticated mechanism that understands the rules of grapheme clustering, defined in Unicode Standard Annex #29. For simple cases, normalization can offer a partial solution.

For example, if we normalize to NFC before counting, we often align the code point count closer to the grapheme count:
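Here is a minimal sketch that assumes the text arrives in decomposed form. The sample string is illustrative.

```python
import unicodedata

text = "Cafe\u0301 cre\u0300me"  # decomposed: accents are separate combining code points
nfc = unicodedata.normalize("NFC", text)

print(text == nfc)          # False
print(len(text), len(nfc))  # 12 10: NFC count now matches the visible characters
```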

This is a helpful step, but it is not a complete answer. In complex scripts, and with combining marks that have no precomposed form, a single grapheme can still span more than one code point, even in NFC.
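The sketch below shows a few strings that remain multi-code-point after NFC. It also includes a deliberately crude counter, `approx_grapheme_count`, which is a hypothetical helper that skips combining marks. It is not an implementation of the UAX #29 rules. It handles the n-with-diaeresis case but still miscounts the flag and the skin-toned emoji. For real grapheme segmentation, the third-party `regex` package supports the `\X` pattern for extended grapheme clusters.

```python
import unicodedata

samples = {
    "n with diaeresis": "n\u0308",                    # no precomposed form exists
    "flag (two regional indicators)": "\U0001F1EC\U0001F1E7",
    "thumbs up + skin tone": "\U0001F44D\U0001F3FD",
}

def approx_grapheme_count(text: str) -> int:
    """Hypothetical helper: count NFC code points that are not marks (category M*).

    NOT the UAX #29 algorithm: it ignores emoji sequences,
    regional-indicator pairs, Hangul jamo and many other rules.
    """
    nfc = unicodedata.normalize("NFC", text)
    return sum(1 for c in nfc if not unicodedata.category(c).startswith("M"))

for label, s in samples.items():
    nfc = unicodedata.normalize("NFC", s)
    print(f"{label}: {len(nfc)} code points, approx. {approx_grapheme_count(s)} graphemes")
```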

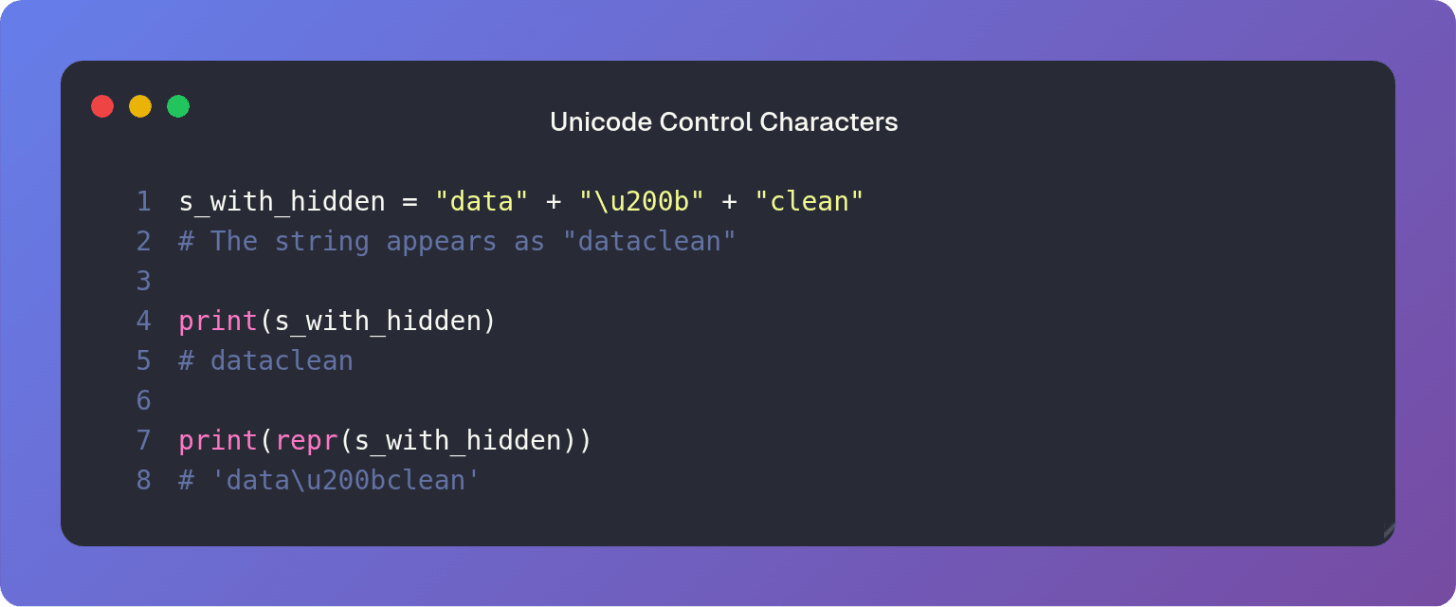

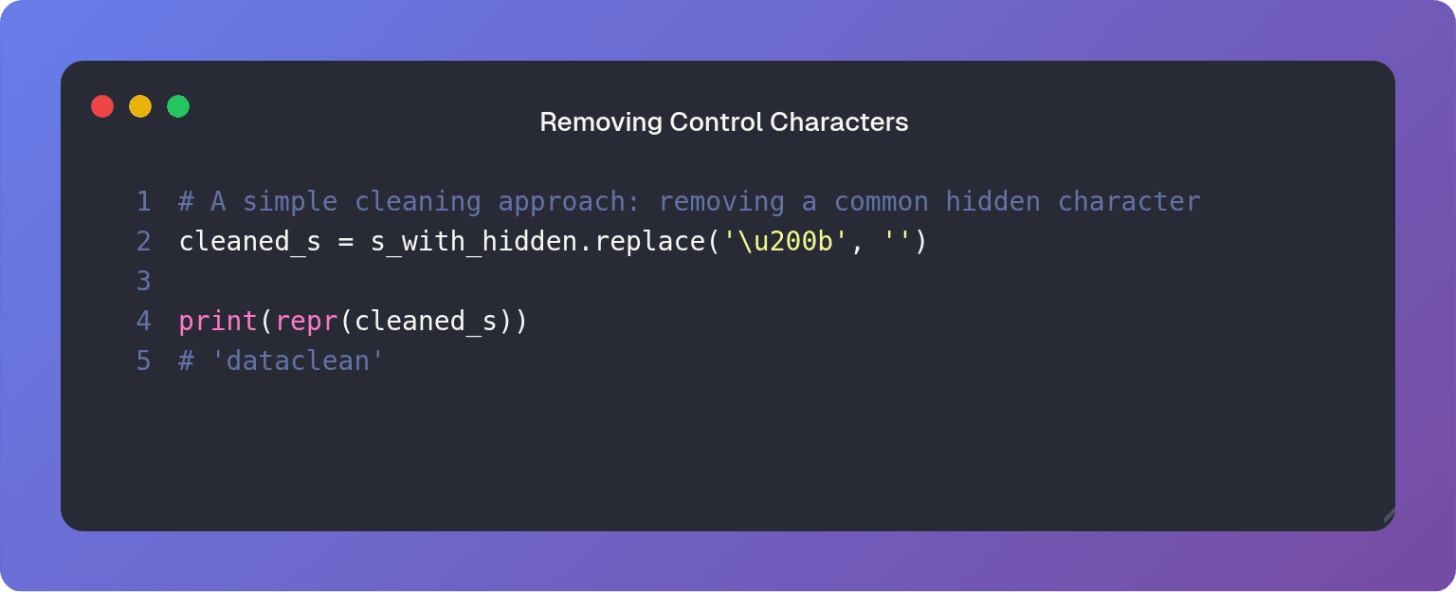

Beyond composition lies the matter of invisible characters. Format characters such as the Zero Width Space (U+200B) or the soft hyphen (U+00AD) are valid code points but normally have no visible rendering. They are often copied from web sources and cause silent failures in parsing or comparison.

When inspecting strings, the print() function shows only the rendered text and so hides its true nature. The repr() function, however, escapes non-printable code points, making the invisible visible.
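Here is a short sketch contrasting print() and repr() on a string with an embedded U+200B. The last line shows a related built-in, ascii(), which escapes every non-ASCII code point. This matters for combining marks like U+0301, which repr() treats as printable and leaves unescaped.

```python
text = "zero\u200bwidth"  # contains U+200B ZERO WIDTH SPACE

print(text)                # typically renders as zerowidth
print(repr(text))          # 'zero\u200bwidth'
print(text == "zerowidth") # False
print(len(text))           # 10

# repr() leaves the combining accent alone; ascii() escapes it
print(repr("e\u0301"))     # the accent is shown as a rendered é, not escaped
print(ascii("e\u0301"))    # 'e\u0301'
```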

The Zero Width Space \u200b is revealed by repr(). Before comparison or processing, it is often necessary to aggressively strip or replace such characters.
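Here is a minimal stripping sketch. The `INVISIBLE` set and the `strip_invisible` helper are hypothetical and should be adjusted to your data. The sketch avoids stripping every format (Cf) character, because U+200D ZERO WIDTH JOINER is required inside many emoji sequences.

```python
import unicodedata

# Hypothetical set of invisible code points to remove; adjust for your data.
INVISIBLE = {
    "\u200b",  # ZERO WIDTH SPACE
    "\u00ad",  # SOFT HYPHEN
    "\u2060",  # WORD JOINER
    "\ufeff",  # ZERO WIDTH NO-BREAK SPACE (byte order mark)
}
# str.translate() deletes any code point mapped to None
_TABLE = {ord(c): None for c in INVISIBLE}

def strip_invisible(text: str) -> str:
    """Hypothetical helper: drop the listed invisible characters, then normalize to NFC."""
    return unicodedata.normalize("NFC", text.translate(_TABLE))

print(strip_invisible("zero\u200bwidth") == "zerowidth")  # True
```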

The gap between what is seen and what is stored has also been put to nefarious use in phishing attacks. Attackers register a domain name containing one or more Unicode characters that look identical to well-known Latin letters. These look-alikes (homoglyphs) are not canonically equivalent to the letters they imitate, so normalization alone will not unmask them.

They then host a copy of the legitimate website on the newly registered domain and use it to steal user data or infect visitors’ computers with malware.

In a homograph attack of this kind, the Latin letters “e” and “a” are replaced with the Cyrillic letters “е” and “а”, which look the same in most fonts.
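Here is a minimal sketch using a hypothetical domain, `example.com`, with its first “e” and its “a” swapped for Cyrillic look-alikes. It shows that even NFKC normalization leaves the spoof distinct, and it includes a simple check that reports non-ASCII code points by name. `non_ascii_report` is a hypothetical helper. Production systems rely on more elaborate policies, such as the confusable and mixed-script checks described in Unicode Technical Standard #39.

```python
import unicodedata

legit = "example.com"
# Hypothetical spoof: first 'e' and the 'a' replaced with Cyrillic look-alikes
spoof = "\u0435x\u0430mple.com"

print(spoof)                                          # renders like example.com
print(spoof == legit)                                 # False
print(unicodedata.normalize("NFKC", spoof) == legit)  # False: not equivalent

def non_ascii_report(domain: str) -> list[str]:
    """Hypothetical helper: list each non-ASCII code point with position and name."""
    return [
        f"{i}: U+{ord(c):04X} {unicodedata.name(c, '<unnamed>')}"
        for i, c in enumerate(domain)
        if not c.isascii()
    ]

print(non_ascii_report(spoof))
# The ASCII-compatible (Punycode) form sent to DNS starts with "xn--"
print(spoof.encode("idna"))
```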

This necessity to look beneath the surface reveals a universal principle: trust the representation, not the appearance.

When the Master revealed the second character with the candle’s heat, he showed the novice a fundamental truth. The text seen by the eye is merely a glyph, but the text seen by the machine is a sequence of code points.

To write robust code is to not be deceived by the visual form. One must apply the heat of normalization to reveal and align the true sequence of underlying code points. Only then can the comparison be honest and the measurement be true.

Thanks for reading Python Koans!
