TL;DR: Computer programs with a user interface perform parallel computation across multiple substrates: at minimum, the CPU and the brain.

This is a follow-up to my post about Universal Causal Language (UCL).

The Concept

Every program with a user interface is running parallel computation on both a CPU and a brain.

The computation happening in the brain is:

"Read this. Think about it. Decide what to do next. Submit input."The CPU and brain are co-processors working on the same problem.

Traditional View vs Reality

Traditional View

```
Program: [CPU executes code] → [Displays UI] → [Waits for input]
Human:   [Passive observer] → [Reacts to stimulus]
```

Reality (UCL View)

```
CPU:   [Execute] → [Render] → [Wait] → [Receive input] → [Execute]
Brain: [Wait] → [Perceive UI] → [Process] → [Decide] → [Emit action] → [Wait]
```

Both are executing programs in parallel. The "interface" is the message-passing protocol between two computational substrates.

Example 1: Simple Dialog

```
Computer: "Do you want to save? (y/n)"
User:     [thinks] → "y"
Computer: [saves file]
```

The CPU and the brain each run their own causal programs simultaneously. The display and keyboard form the interface layer, passing messages between the two substrates.
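The save dialog can be sketched as two concurrent programs that share nothing except two message channels, one per direction. This is a minimal Python sketch under loud assumptions: the "brain" is simulated by a scripted function that always answers "y", and the names `cpu_program`, `brain_program`, `to_brain`, and `to_cpu` are hypothetical, not part of UCL.

```python
import queue
import threading


def cpu_program(to_brain: queue.Queue, to_cpu: queue.Queue, log: list) -> None:
    """CPU substrate: render a prompt, block until input arrives, then act."""
    to_brain.put("Do you want to save? (y/n)")  # render: send a message to the display
    answer = to_cpu.get()                        # wait: block until the keyboard delivers input
    if answer == "y":
        log.append("cpu: saved file")            # stand-in for real file I/O
    else:
        log.append("cpu: discarded changes")


def brain_program(to_brain: queue.Queue, to_cpu: queue.Queue, log: list) -> None:
    """Simulated brain substrate (hypothetical): perceive, decide, emit an action."""
    prompt = to_brain.get()                      # perceive: read what the display shows
    log.append(f"brain: read {prompt!r}")
    decision = "y"                               # decide: scripted stand-in for real cognition
    to_cpu.put(decision)                         # emit action: press a key


def run_dialog() -> list:
    to_brain: queue.Queue = queue.Queue()  # display channel (CPU -> brain)
    to_cpu: queue.Queue = queue.Queue()    # keyboard channel (brain -> CPU)
    log: list = []
    threads = [
        threading.Thread(target=cpu_program, args=(to_brain, to_cpu, log)),
        threading.Thread(target=brain_program, args=(to_brain, to_cpu, log)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


if __name__ == "__main__":
    for line in run_dialog():
        print(line)
```

Neither thread calls the other directly; the only coupling is the queues, which is the "interface as message-passing protocol" idea in miniature.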

Example 2: Web Form

The CPU renders the form, validates syntax, and handles submission. The brain perceives, recalls, decides, and acts. Both validate, but in different domains: the CPU checks structure; the brain checks intent.
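The split between structural and intent validation can be made concrete. In this sketch the field names, the loose email pattern, and the confirmation wording are all hypothetical. The CPU side checks only presence and format; whether the values are what the user meant cannot be checked in code, so the form echoes them back for the brain to confirm.

```python
import re
from dataclasses import dataclass

# Deliberately loose pattern: it checks shape ("x@y.z"), not whether the address is real.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class SignupForm:
    name: str
    email: str


def cpu_validate(form: SignupForm) -> list[str]:
    """Structural checks the CPU can perform: presence and format."""
    errors = []
    if not form.name.strip():
        errors.append("name is required")
    if not EMAIL_PATTERN.match(form.email):
        errors.append("email is malformed")
    return errors


def confirmation_prompt(form: SignupForm) -> str:
    """Hand intent-checking to the brain: show the values and ask for confirmation."""
    return f"Sign up {form.name} with {form.email}? (y/n)"


if __name__ == "__main__":
    typo = SignupForm(name="Ada", email="ada@exmaple.com")  # well-formed, but not what she meant
    print(cpu_validate(typo))         # []: the CPU sees nothing wrong
    print(confirmation_prompt(typo))  # only the brain can notice "exmaple"
```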

Example 3: Video Game

A game loop is a dual process:

- CPU runs logic, physics, and rendering 60 times per second.

- Brain handles perception, strategy, prediction, and learning at a slower rate but at a higher level of abstraction.

They are co-processors solving the same loop.
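The two rates can be simulated with a fixed-timestep loop. This sketch assumes, purely for illustration, that the simulated player makes one decision every 15 ticks (250 ms at 60 Hz); that period and the toy `brain_decide` policy are hypothetical, not measured human figures. The CPU ticks 60 times per second and keeps applying whatever input the player emitted most recently.

```python
from dataclasses import dataclass, field

CPU_HZ = 60              # game logic and rendering rate
BRAIN_PERIOD_TICKS = 15  # hypothetical: one player decision every 15 ticks (250 ms)


@dataclass
class GameState:
    x: int = 0                                       # player position along one axis
    tick: int = 0
    inputs_seen: list = field(default_factory=list)  # every decision the brain emitted


def brain_decide(state: GameState) -> int:
    """Hypothetical player policy: move right until x reaches 20, then move left."""
    return 1 if state.x < 20 else -1


def run(seconds: int = 1) -> GameState:
    state = GameState()
    current_input = 0  # latest action from the brain; the CPU reuses it every tick
    for _ in range(seconds * CPU_HZ):
        if state.tick % BRAIN_PERIOD_TICKS == 0:
            current_input = brain_decide(state)  # slow loop: perceive -> decide -> act
            state.inputs_seen.append(current_input)
        state.x += current_input                 # fast loop: one physics/logic step
        state.tick += 1
    return state


if __name__ == "__main__":
    print(run())
```

Over one simulated second the CPU runs 60 steps while the brain decides only 4 times; between decisions the CPU simply keeps executing the last one, much like a held key.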

Example 4: AI Chat Interface

Three substrates operate in parallel:

- GPU (AI model)

- CPU (UI and I/O)

- Brain (cognition and emotion)

Each processes, generates, and transmits information in its own language.

1. UI Design is a Program for the Human Brain

A user interface is not just a presentation layer; it is a set of instructions compiled for the brain. Layout, color, motion, and wording form the opcodes of a cognitive program that a human executes in order to understand and act. Every UI element is a command that shapes perception, triggers recognition, or initiates motor output.

2. Human Cognitive Architectures

Each human brain can be seen as having its own CPU architecture, shaped by genetics, language, culture, and experience, and evolving over time. Just as instruction set architectures such as x86, AMD64, ARM64, and RISC-V encode and execute operations differently, human architectures differ, usually more subtly, in how they encode and execute cognitive operations.

3. Design Systems are Reduced Instruction Sets

A minimal, consistent design language acts like a Reduced Instruction Set (RISC): a small set of clear, reusable primitives that are easy to translate between different architectures. Simple patterns like consistent button shapes, predictable layout grids, or uniform feedback loops reduce cognitive translation overhead and make the experience more universally executable.
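One way to read the RISC analogy in code: define the design system as a closed set of primitives and flag anything a screen uses outside that set. The primitive names and the list-of-strings screen format are hypothetical, chosen only to illustrate the idea.

```python
from enum import Enum


class Primitive(Enum):
    """A small, closed 'instruction set' of UI primitives (hypothetical)."""
    HEADING = "heading"
    TEXT = "text"
    PRIMARY_BUTTON = "primary_button"
    SECONDARY_BUTTON = "secondary_button"
    TEXT_INPUT = "text_input"


ALLOWED = {p.value for p in Primitive}


def off_system_elements(screen: list[str]) -> list[str]:
    """Return the elements that fall outside the design system's instruction set."""
    return [element for element in screen if element not in ALLOWED]


checkout = ["heading", "text_input", "primary_button"]
legacy = ["heading", "fancy_gradient_button", "text", "blinking_banner"]

if __name__ == "__main__":
    print(off_system_elements(checkout))
    print(off_system_elements(legacy))
```

In the analogy, each off-system element is a custom opcode that every user has to learn before they can "execute" the screen.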

4. UX Design is Substrate Coordination

- Good UX = efficient message passing between CPU and brain.

- Bad UX = poor coordination, high latency, or protocol ambiguity.

5. User Testing is Performance Benchmarking

Metrics like time-to-task, error rate, and frustration are performance benchmarks for the human substrate.
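Treating a usability session as a benchmark run suggests computing the metrics the way one would for code. The `Session` record format below is an assumption for illustration; real studies log richer data, and frustration in particular usually comes from questionnaires or observation rather than a log field, so it is left out here.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Session:
    """One participant attempting one task (hypothetical record format)."""
    seconds_to_complete: Optional[float]  # None if the participant gave up
    errors: int                           # e.g. wrong clicks or validation failures
    actions: int                          # total clicks and keystrokes


def benchmark(sessions: list[Session]) -> dict:
    completed = [s for s in sessions if s.seconds_to_complete is not None]
    total_actions = sum(s.actions for s in sessions)
    return {
        "completion_rate": len(completed) / len(sessions),
        "median_time_to_task": (
            median(s.seconds_to_complete for s in completed) if completed else None
        ),
        # errors per action, across all sessions including abandoned ones
        "error_rate": sum(s.errors for s in sessions) / total_actions if total_actions else 0.0,
    }


if __name__ == "__main__":
    runs = [Session(30.0, 1, 10), Session(50.0, 0, 10), Session(None, 3, 20)]
    print(benchmark(runs))
```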

6. E2E Tests Implement a Simple Brain VM

When we write end-to-end tests that click through an app to make sure nothing is broken, we are emulating a human running a tiny causal program:

- Click this link

- Wait for the render

- Read the page and check that it contains the expected text

This is a rudimentary simulation of perception and decision: "see element → decide it matches intent → proceed." We are, in effect, simulating what it is like to be a human reading the page and confirming understanding.
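The three steps above form a tiny program, and an E2E test runner is its interpreter. This sketch implements such an interpreter against an in-memory fake app rather than a real browser; `FakeApp`, `run_brain_vm`, the instruction names, and the page contents are all hypothetical.

```python
class FakeApp:
    """In-memory stand-in for a rendered web app (hypothetical)."""

    def __init__(self) -> None:
        self.pages = {
            "/": {"text": "Welcome", "links": {"About": "/about"}},
            "/about": {"text": "About us: we build things", "links": {}},
        }
        self.current = "/"
        self.rendered = True

    def click(self, link: str) -> None:
        self.current = self.pages[self.current]["links"][link]
        self.rendered = False  # navigation starts a new render

    def wait_for_render(self) -> None:
        self.rendered = True   # a real browser driver would poll or await a load event

    def visible_text(self) -> str:
        if not self.rendered:
            raise RuntimeError("page not rendered yet")
        return self.pages[self.current]["text"]


def run_brain_vm(app: FakeApp, program: list[tuple[str, str]]) -> None:
    """Execute a 'see element -> decide it matches intent -> proceed' program."""
    for op, arg in program:
        if op == "click":
            app.click(arg)
        elif op == "wait":
            app.wait_for_render()
        elif op == "expect_text":
            text = app.visible_text()  # perceive
            if arg not in text:        # decide whether it matches intent
                raise AssertionError(f"expected {arg!r} in {text!r}")
        else:
            raise ValueError(f"unknown instruction: {op}")


program = [("click", "About"), ("wait", ""), ("expect_text", "About us")]

if __name__ == "__main__":
    run_brain_vm(FakeApp(), program)
    print("scenario passed")
```

Skipping the `wait` instruction makes `visible_text` raise, which mirrors the classic flaky-test bug of reading a page before it has rendered.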

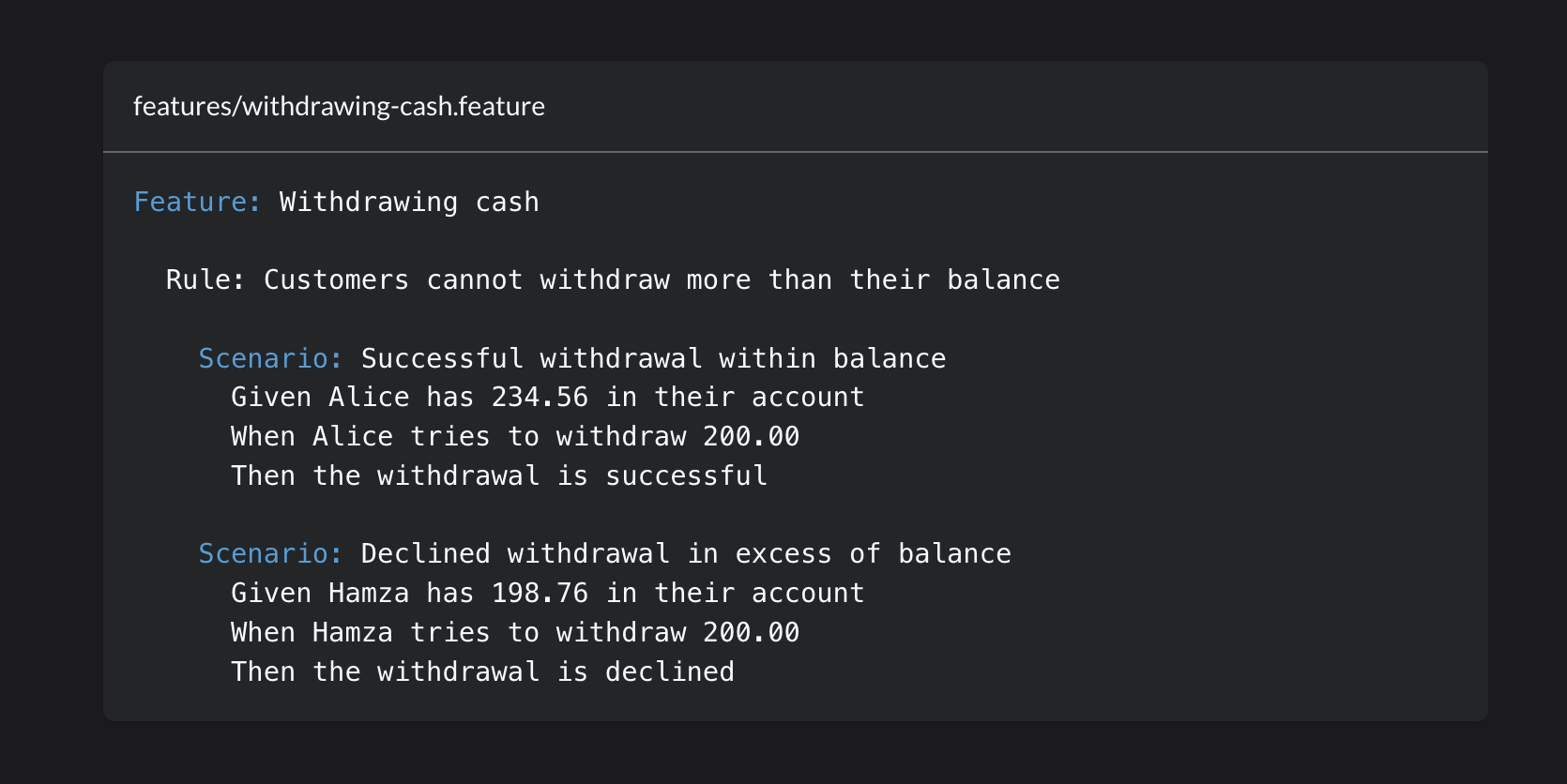

You can see this idea explored in Cucumber acceptance tests: Cucumber lets people write plain-text scenarios (in its Gherkin syntax) that software then runs as tests.
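The core move, mapping plain-text steps onto code, can be illustrated with a few lines of regex matching. This is not Cucumber's API: the `step` decorator, the `world` dict, the step phrasings, and `run_scenario` are hypothetical, and the scenario only borrows Gherkin's Given/When/Then keywords.

```python
import re
from typing import Callable

STEPS: list[tuple[re.Pattern, Callable[..., None]]] = []
world: dict = {}  # state shared between steps within a scenario


def step(pattern: str):
    """Register a function for plain-text steps matching `pattern` (hypothetical helper)."""
    def register(fn: Callable[..., None]) -> Callable[..., None]:
        STEPS.append((re.compile(pattern), fn))
        return fn
    return register


@step(r'I am on the "(.+)" page')
def on_page(name: str) -> None:
    world["page"] = name


@step(r'I click "(.+)"')
def click(label: str) -> None:
    world["page"] = label.lower()  # toy navigation: the link label names the next page


@step(r'I should see the "(.+)" page')
def should_see(name: str) -> None:
    assert world["page"] == name, f"on {world['page']!r}, expected {name!r}"


def run_scenario(text: str) -> int:
    """Run each Given/When/Then/And line against the registered steps; return the count."""
    executed = 0
    for line in text.strip().splitlines():
        keyword, _, phrase = line.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And"}:
            continue  # skip titles and blank lines
        for pattern, fn in STEPS:
            match = pattern.fullmatch(phrase)
            if match:
                fn(*match.groups())
                executed += 1
                break
        else:
            raise LookupError(f"no step definition for: {phrase}")
    return executed


SCENARIO = """
Scenario: Visiting the about page
  Given I am on the "home" page
  When I click "About"
  Then I should see the "about" page
"""

if __name__ == "__main__":
    print(run_scenario(SCENARIO), "steps passed")
```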

7. The Brain is Not a Passive Observer

When you use software, your brain executes "code". Reading, parsing, deciding, and recalling are all computations.

8. Accessibility is Compatibility

Accessibility tools are alternative communication protocols for diverse brain substrates. They enable equivalent computation through different sensory channels.

9. The "Wait for Input" is a Join Point

Each join point is where the CPU and brain synchronize their states: pressing Enter, submitting a form, or clicking a button.
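A join point behaves like a synchronization barrier: neither side proceeds past it until both have arrived. This sketch uses Python's `threading.Barrier` to model a user pressing Enter on a form; the `cpu` and `brain` functions and their event labels are hypothetical.

```python
import threading


def simulate_join_point() -> list[str]:
    events: list[str] = []
    lock = threading.Lock()
    join_point = threading.Barrier(2)  # two parties: the CPU and the brain

    def record(event: str) -> None:
        with lock:
            events.append(event)

    def cpu() -> None:
        record("cpu: rendered form")
        join_point.wait()              # block until the user submits
        record("cpu: processing submission")

    def brain() -> None:
        record("brain: filled in form")
        join_point.wait()              # pressing Enter: the brain arrives at the join point
        record("brain: waiting for response")

    threads = [threading.Thread(target=cpu), threading.Thread(target=brain)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return events


if __name__ == "__main__":
    for event in simulate_join_point():
        print(event)
```

The order of events within each phase can vary between runs; only the phase boundary is guaranteed, which is exactly the property a join point provides.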

Design Principles for Multi-Substrate UX

- Assign computation to the optimal substrate. CPU: precision and repetition. Brain: pattern and context.

- Minimize join point latency. Reduce idle waiting between substrates.

- Expose substrate state. Spinners, progress bars, and disabled buttons signal where computation is happening.

- Handle substrate failures gracefully. Catch both CPU and brain errors with feedback or redundancy.

Multi-Substrate Programming

UCL could allow us to design interfaces as explicit coordination protocols between substrates:

```json
{
  "substrates": ["CPU", "Brain"],
  "coordination": "parallel",
  "join_points": [
    {"after": "cpu_render", "wait_for": ["brain_perception"]},
    {"after": "brain_decision", "wait_for": ["cpu_validation"]}
  ]
}
```

Future UX systems might treat humans, CPUs, and AIs as nodes in one distributed computation.
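One way to give the coordination spec operational meaning is to treat it as a dependency graph and derive a valid global schedule. The post does not define these semantics, so this is a hypothetical reading: steps on one substrate run in sequence, and each `wait_for` step cannot start until its `after` step finishes. The per-substrate step lists in `PROGRAMS` are also assumptions, since the spec names steps without listing them. Python's standard `graphlib` (3.9+) does the topological sort.

```python
import json
from graphlib import TopologicalSorter

SPEC = json.loads("""
{ "substrates": ["CPU", "Brain"], "coordination": "parallel",
  "join_points": [
    {"after": "cpu_render", "wait_for": ["brain_perception"]},
    {"after": "brain_decision", "wait_for": ["cpu_validation"]} ] }
""")

# Hypothetical per-substrate programs; the spec names steps but does not list them.
PROGRAMS = {
    "CPU": ["cpu_render", "cpu_validation"],
    "Brain": ["brain_perception", "brain_decision"],
}


def execution_order(spec: dict, programs: dict[str, list[str]]) -> list[str]:
    graph = TopologicalSorter()
    for substrate in spec["substrates"]:
        steps = programs[substrate]
        graph.add(steps[0])
        for earlier, later in zip(steps, steps[1:]):
            graph.add(later, earlier)     # steps on one substrate run in sequence
    for jp in spec["join_points"]:
        for waiting_step in jp["wait_for"]:
            graph.add(waiting_step, jp["after"])  # assumed reading of a join point
    return list(graph.static_order())


if __name__ == "__main__":
    print(execution_order(SPEC, PROGRAMS))
```

Under this reading the two join points serialize the exchange into render, perceive, decide, validate: the same message-passing loop described at the start of the post. A spec whose join points form a cycle makes `static_order` raise `graphlib.CycleError`, which would be a cheap way to catch a deadlocked interface design.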

Conclusion

UX is parallel computation across heterogeneous substrates.

Good UX = efficient coordination.

Bad UX = desynchronization.

When you design a user interface, you are programming two computers at once: silicon and biological.

The future of UX might be explicit multi-substrate programming.
