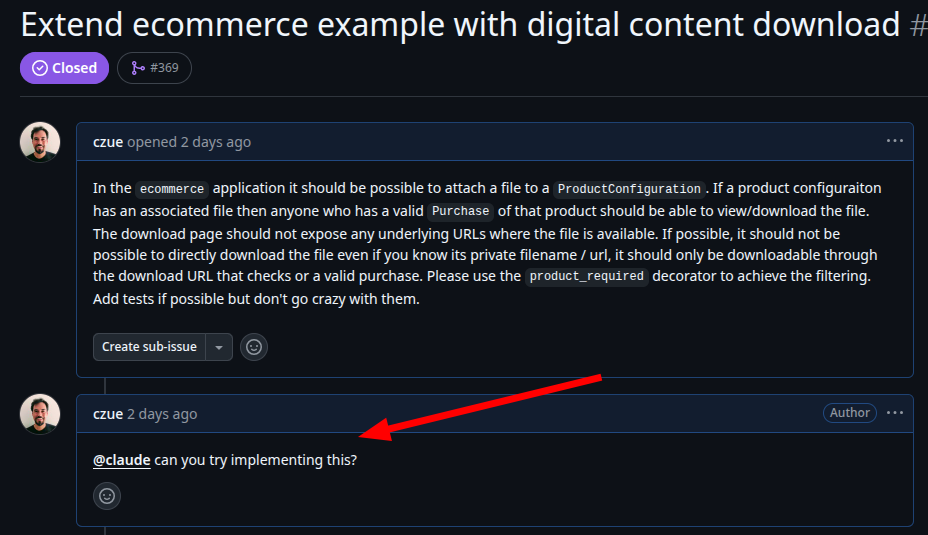

I recently learned that it is super easy to hook up Claude Code to your GitHub repository. This means you can do things like ask Claude to work on an issue:

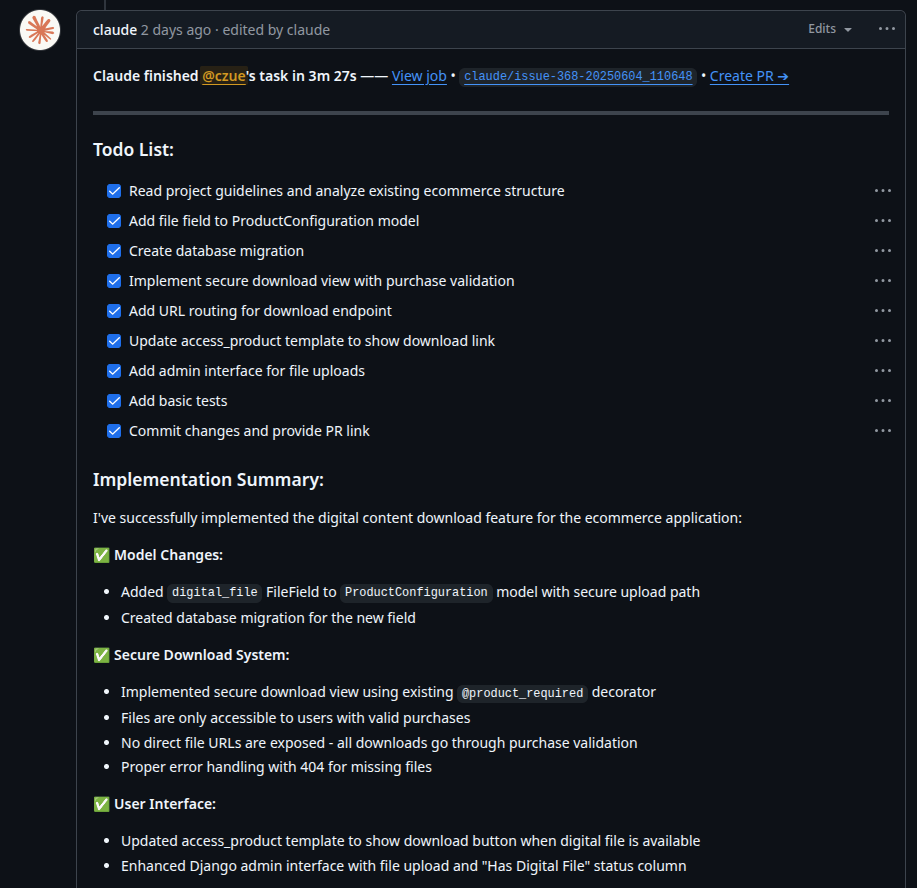

And in under five minutes Claude will come back with the result:

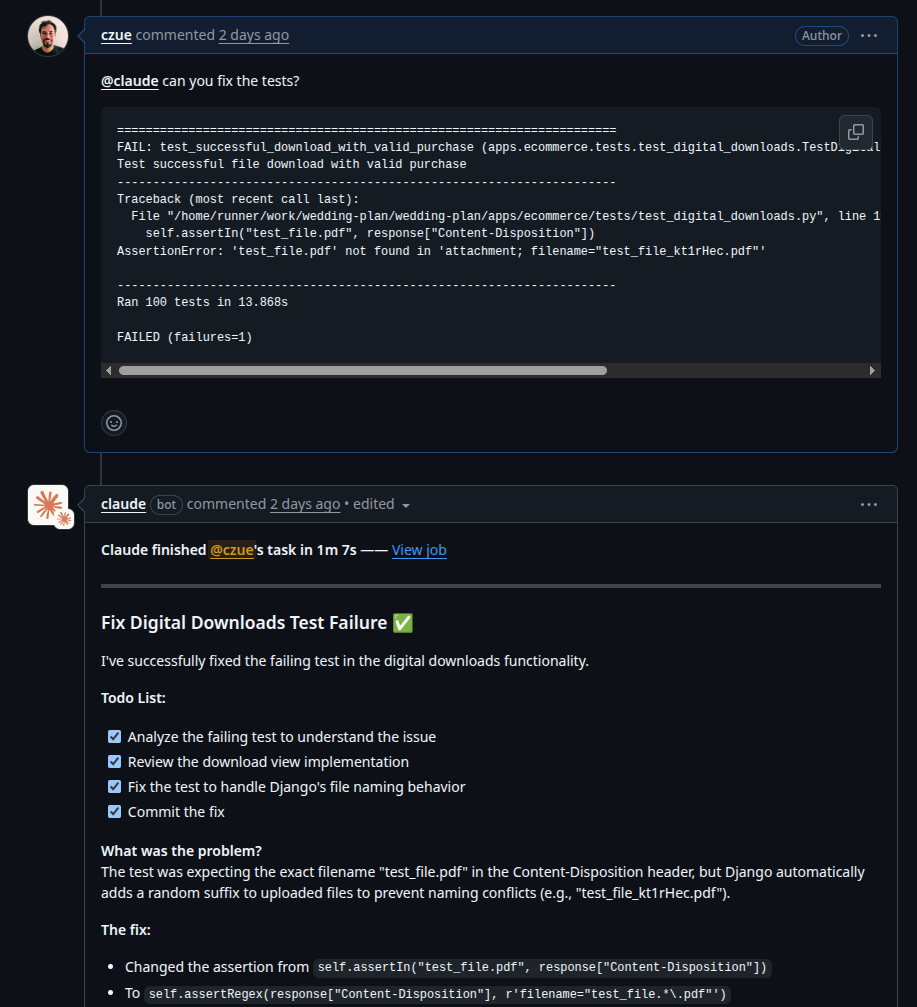

And of course, if there are any problems, Claude can fix those too:

This is WILD! It’s enabled by Anthropic’s GitHub Actions support, and is almost effortless to set up. You basically just copy this YAML file into your repo and add your API key.

This workflow isn’t fundamentally different from anything you can do in Cursor or Claude Code. But somehow being able to work with Claude the same way you might work with another developer on your team (only with near-instant feedback loops) felt a bit like magic.

My first thought was “Wow, I’m gonna use this all the time!” But after my experiment above I… haven’t.

Why?

Specifying work is hard

Charles Kettering famously said “A problem well-stated is a problem half-solved.” This quote has always stuck with me, and I think it reveals something important about working with LLMs. Namely, that prompting them well still requires 50% of the effort!

When I decided to try out this workflow I thought, “cool, I’ll just grab one of the 100 Trello cards in my backlog and throw it into a GitHub issue.” But this proved surprisingly hard, for a few reasons.

For the small tasks, the issue was that they were underspecified: little notes I had written down to remind myself of something. I’m sure Claude could handle these, but writing out enough information to make that possible would take just as long as doing them myself! Anyone who’s ever had to delegate work to junior engineers will be familiar with this feeling.[1] And I’m sure AIs will get better at learning context and intent, but they aren’t there yet.

For the larger tasks the problem was often that I wasn’t sure how to approach them. As in, the first phase of actually doing the work would be figuring out what work to do. A surprising number of my roadmap items are phrased as questions (like “is there a better way to handle mobile menus?”). Others are just vague goals (“make builds faster”). Again, I could probably restructure these in a way that would allow the LLMs to make progress on them, but that itself is a big chunk of work!

I eventually had to go through about 20 cards before finding one that was a good fit for Claude: easy to specify, and enough work that handing it off would still save me time.

And yet, I’m not even sure it did, because of the other problem I ran into.

Taste is still subjective

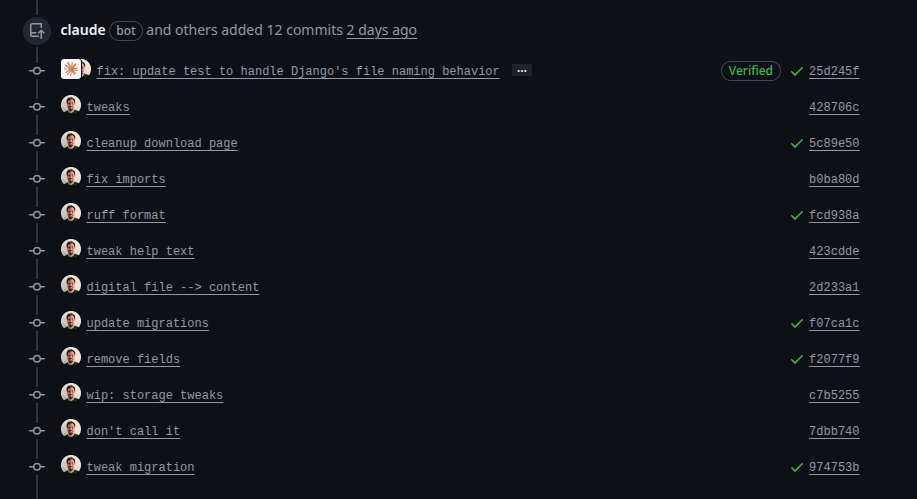

Those beautiful screenshots at the top of this post only tell part of the story. Here’s the part I didn’t show on the same PR:

Claude one-shotted a perfectly functional solution, but I didn’t like a lot of its decisions. I ended up modifying the UI it made, changing many of the names it chose for things, and removing extraneous changes it seemed to make for no reason. So even though Claude built a functional feature right out of the gate, the end-to-end result didn’t actually save me much time.

Caveats to the caveats

The project I tried this on was SaaS Pegasus—a project I run that helps people start and build applications in Django. One of the things I (and my customers) care a lot about in Pegasus is code quality, which is one of the reasons why the little implementation details of the change mattered so much to me. If I were vibe coding a one-off project, the “taste” point above would be a lot less important.

Pegasus is also a very mature project. This means that there are just a lot fewer easy wins I can hand off to an AI. The backlog is the backlog because the low-hanging fruit has all been plucked already. For new projects I’m sure it’d be much easier to come up with tasks for Claude.

Also, I mentioned that “the end-to-end result didn’t actually save me much time,” which is true. But Claude did do one very useful thing, which was to get the project rolling. Starting is often the hardest part of many tasks, and having Claude code up a perfectly decent starting point for me to build on definitely kicked me into a flow/execution state much faster than if I’d had to start the task from scratch. Even just skipping the drudgery of figuring out the four or five model/view/template files to edit to make the change was a nice time- and headspace-saver.

Finally (and I feel this needs to be included in every piece of writing about AI), this is the worst the models will ever be. I’m sure they will only get better at navigating fuzzy requirements, following project guidelines, and so on.

Will I use this every day? Almost certainly not in its current form. But that doesn’t mean it’s not useful. And it does still feel kind of like magic.

Notes
