I’ve recently automated a large portion of my Rails performance gigs. In this tutorial, I’ll describe how to configure an AI-powered PG performance debugging. We will cover using LLMs with custom MCP (model context protocol) n8n integration. We will also discuss the legal and security implications of connecting AI to the production database. There’s also a live demo available!

How to optimize PostgreSQL performance with AI?

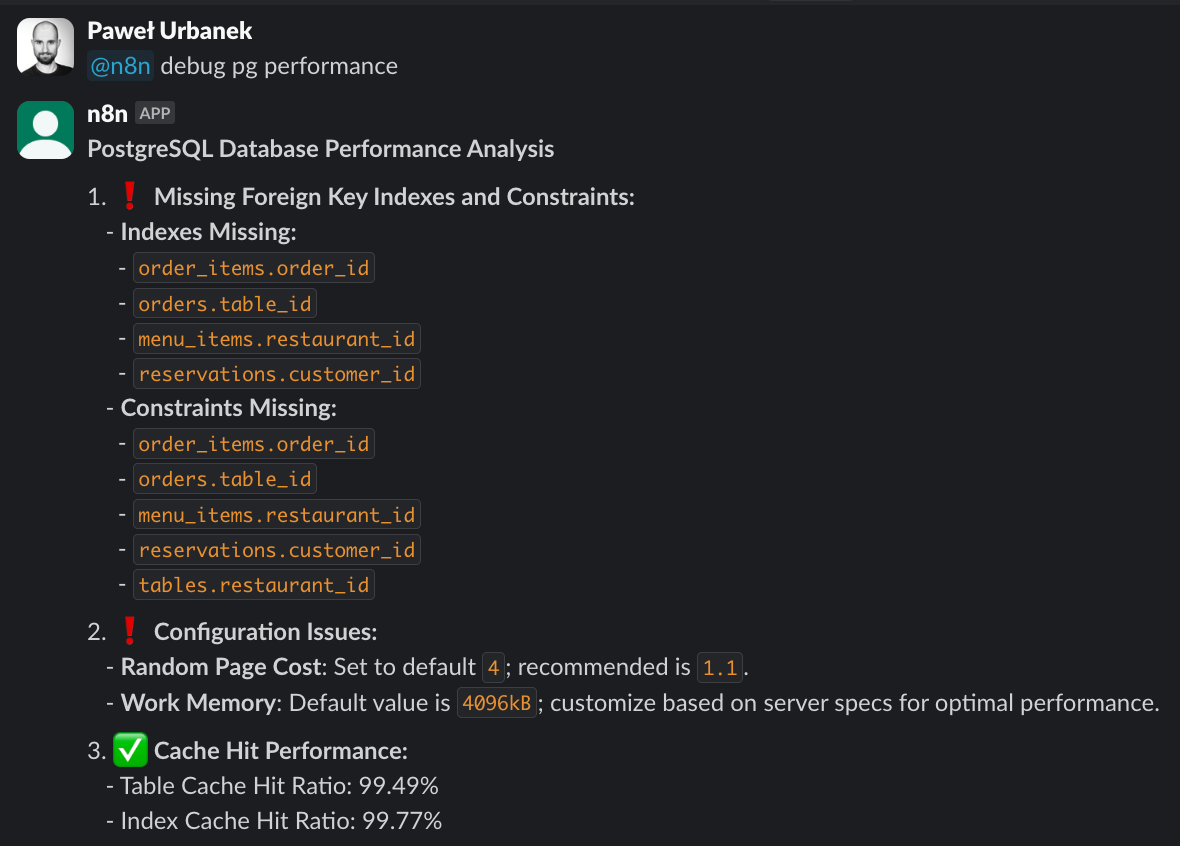

I’m not sure if the current blogosphere needs yet another introduction to MCP. Instead, here’s a sneak peek of what we will be building in this tutorial:

TLDR Slack bot debugging PG performance.

A Slack bot connected to the PostgreSQL database? Can it run arbitrary SQL queries? Does it send production data to OpenAI servers? Is it any good in nontrivial Rails apps? Will it yolo a DROP TABLE on prod at 3 AM?

Let’s answer all these questions.

Copy-pasting queries from heroku-pg-extras one weekend turned out to be a good thing. I’ve been maintaining the rails-pg-extras gem for over 6 years now. The project’s relative popularity helped me onboard multiple clients and earn a living as a Rails performance consultant for over four years.

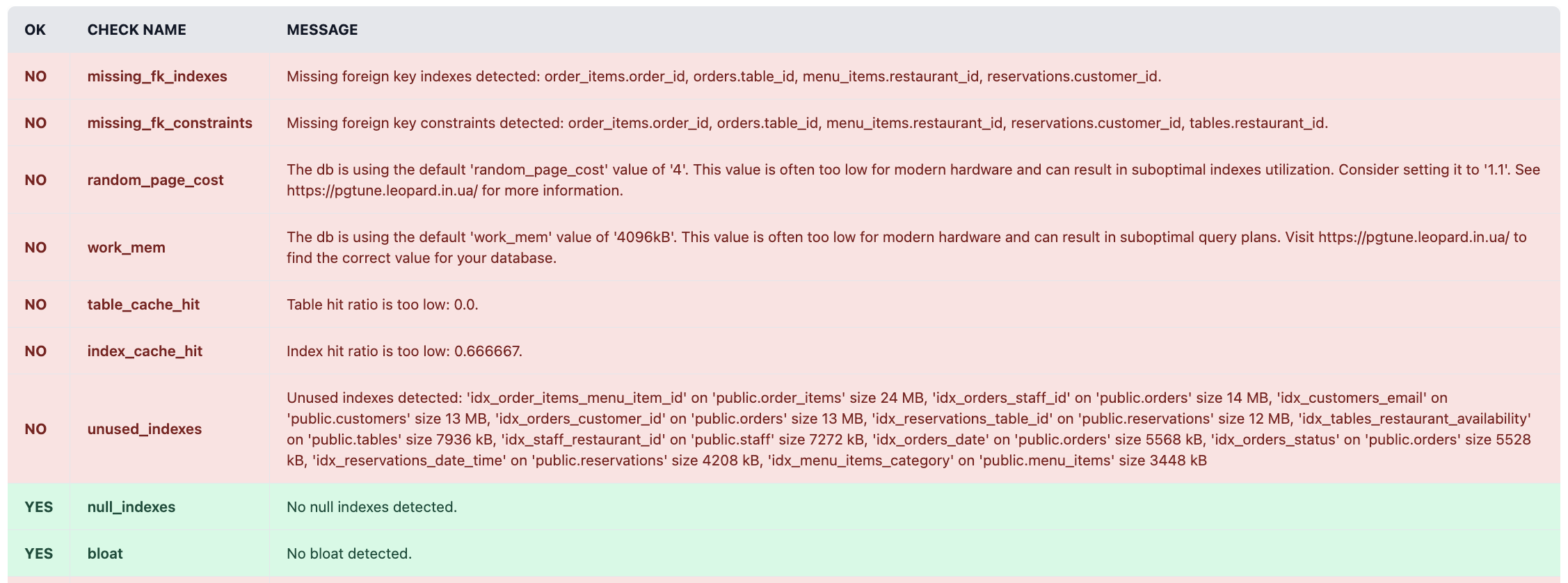

A significant part of my performance audits and subsequent retainer offering was interpreting the output of the pg-extras gem. It currently exposes ~40 methods. A solid entry point is the diagnose method, which aggregates over a dozen checks to produce a report about the database health. By configuring the web interface, you can get a nice dashboard:

rails-pg-extras web dashboard diagnose report

Rails projects with a dynamic development pace and sizeable traffic are likely to discover new db-level inefficiencies every week. But, deep dive into metadata exposed by the pg-extras API can be tedious and repetitive process.

Here’s where a brand new rails-pg-extras-mcp gem makes an entrance.

rails-pg-extras-mcp is a relatively simple (~300 boilerplate LOC total) wrapper on top of rails-pg-extras. It exposes the API in a format digestible by LLMs. By connecting it to your AI model of choice, you can automate the repetitive task of hunting for inefficiencies in the database layer.

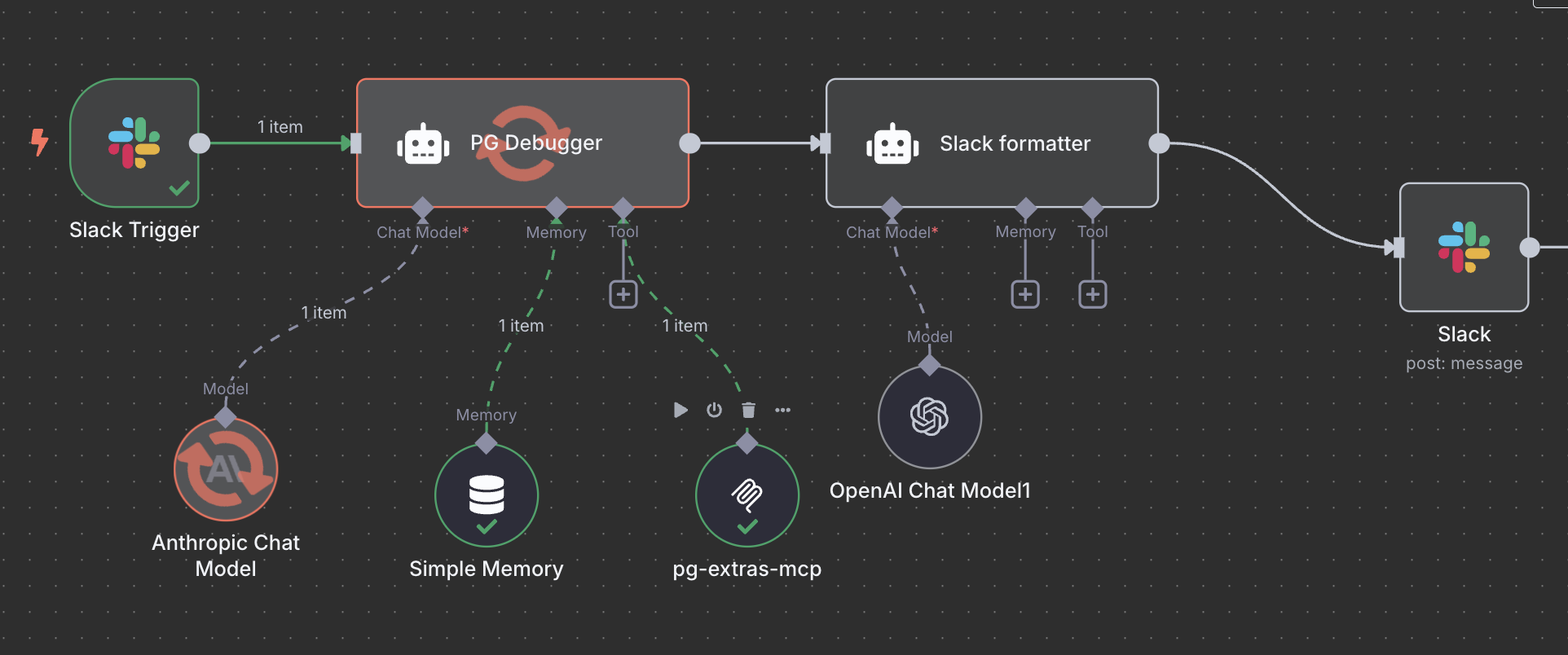

This gem is a core component of the previously showcased Slack bot demo. Slack bot is just an arbitrary choice of interface, configured with the help of the n8n platform:

rails-pg-extras MCP with Slack bot n8n schema

MCP protocol is universal, so you can use it with local Cursor IDE, Claude Code, OpenAI API, basically any modern LLM.

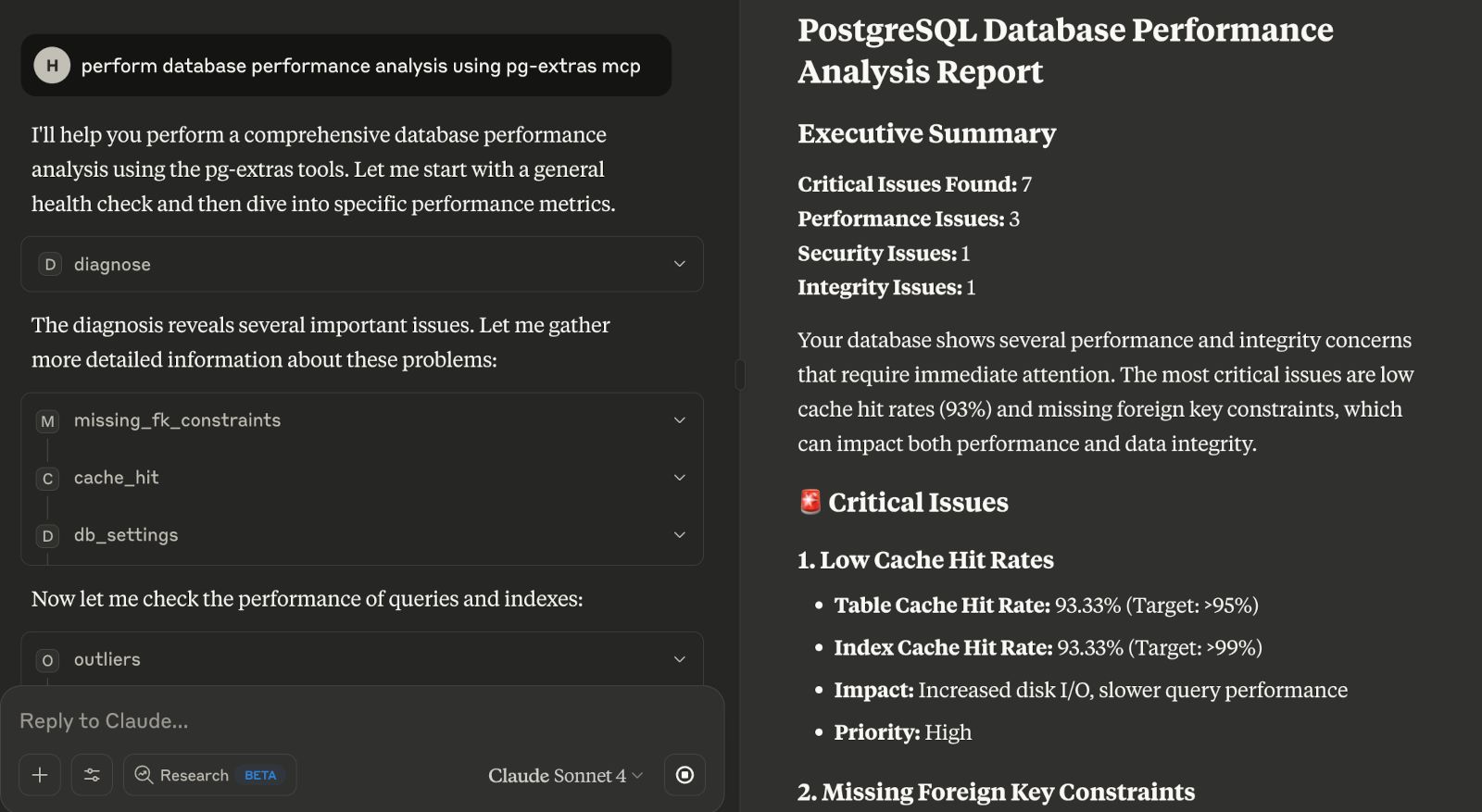

Claude Desktop rails-pg-extras MCP integration

I like the Slack bot interface because it works well for team collaboration. Realistically, you cannot expect LLM to resolve PostgreSQL issues with 100% accuracy. However, the usual bottleneck of working with pg-extras is making sense of its verbose output. A raw output is often illegible:

Raw rails-pg-extras output

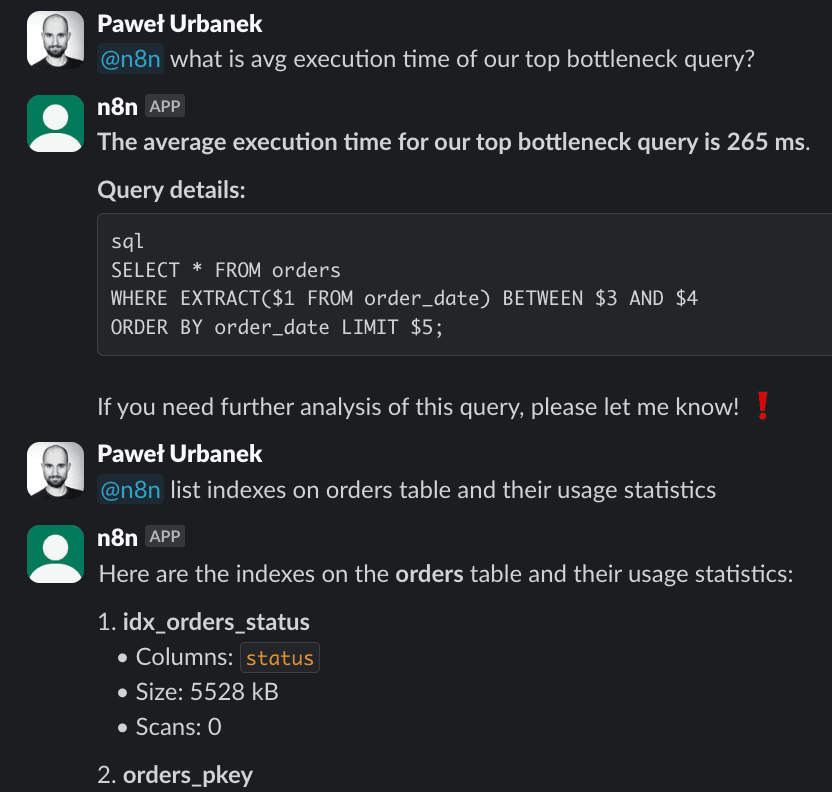

LLMs do a fantastic job, extracting useful info from raw data and reformatting it to vastly simplify human analysis. I’ve configured the demo Slack bot to only reply if mentioned by the @n8n handle. A development team can discuss ongoing db issues on a dedicated channel and augment context by predigested metadata from the LLM.

LLM-powered PG debugging session

Please remember the Slack bot this is just a choice of interface. You can configure LLMs to email you weekly database healthcheck reports PDF, talk to the database directly from the Cursor IDE chatbox etc.

So it looks pretty cool, but can you use it on production without summoning the GDPR police?

Legal and security concerns of using LLMs with production data

DISCLAIMER: I’m not a lawyer, and this is not legal advice. Please do your own research before exposing any sensitive data to LLMs.

rails-pg-extras core component is ruby-pg-extras gem. It defines metadata queries with performance debug data.

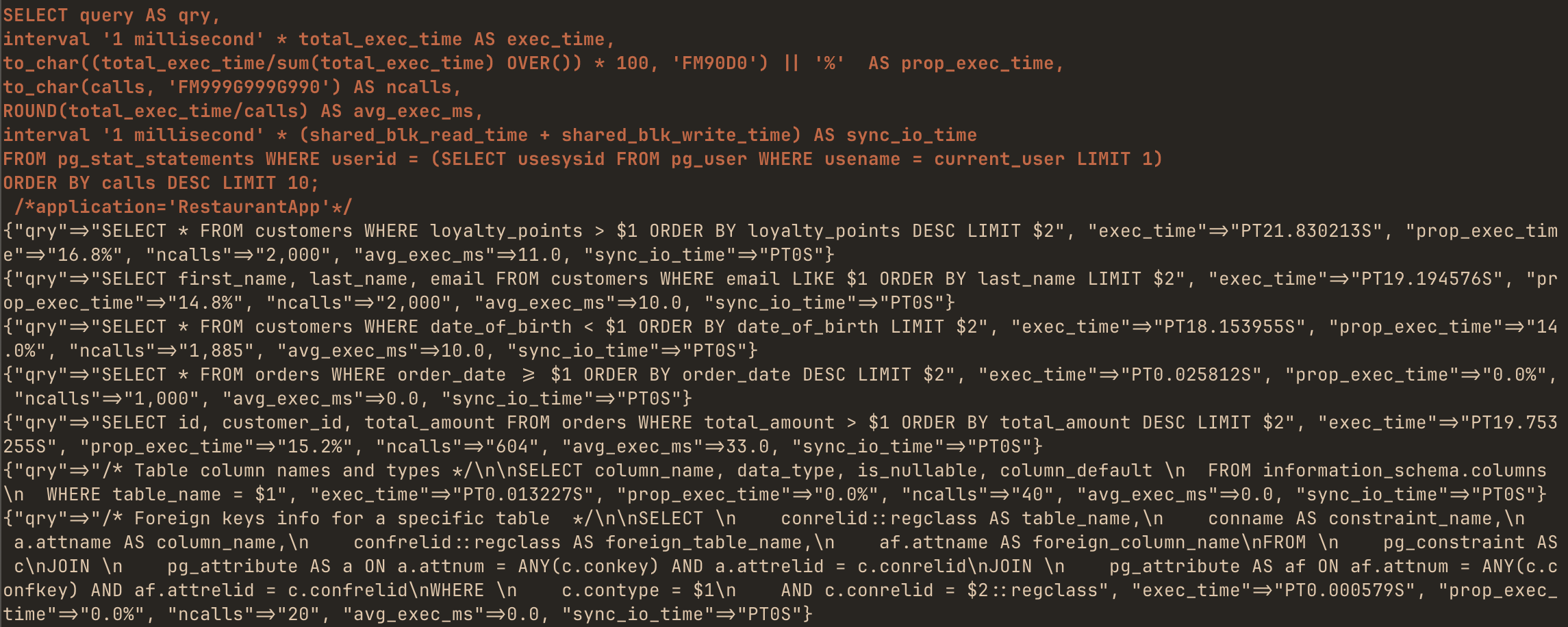

METADATA is an critical keyword here. It means that you can use rails-pg-extras MCP integration without giving LLMs access to ANY business logic-related tables. Additionally, even query argument values are not exposed. Here’s a sample output excerpt of the calls method, which lists top bottlenecks:

As you can see, values are replaced by $1, $2 placeholders. It means that in the default rails-pg-extras-mcp configuration, LLMs cannot read any sensitive data.

To strengthen this assumption, you can configure a dedicated user with read access only to the metadata tables:

and configure the ENV value:

I’m not sure if it’s enough to keep the GDPR inspectors happy. But, it’s an order of magnitude safer compared to letting LLMs roam free with a full-blown read-write db access.

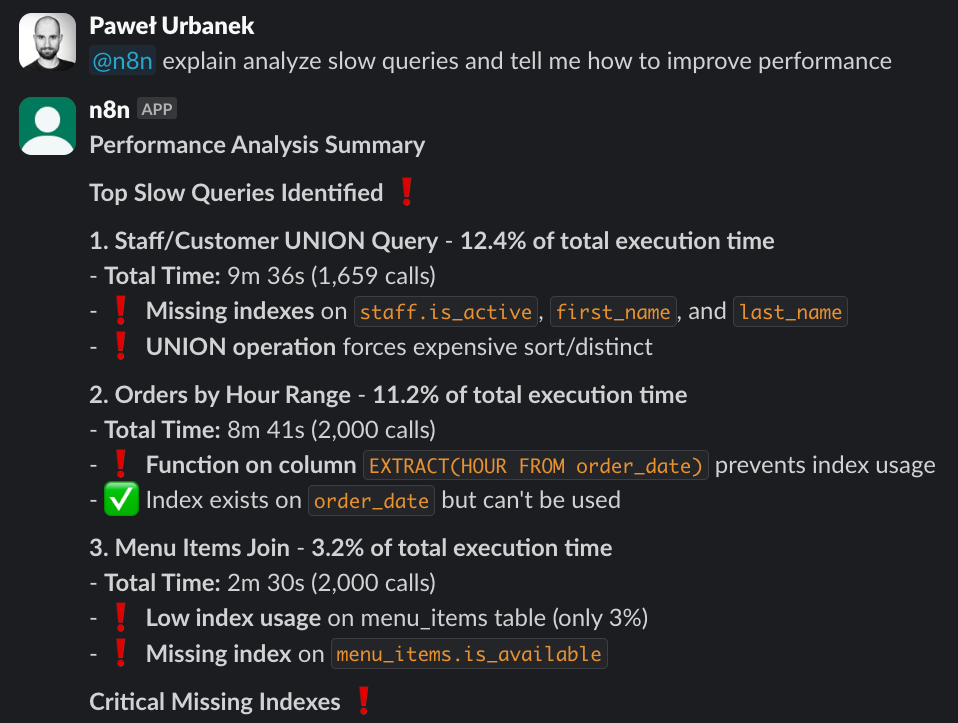

Enabling optional EXPLAIN ANALYZE mode

If you feel adventurous, you can toggle PG_EXTRAS_MCP_EXPLAIN_ENABLED and PG_EXTRAS_MCP_EXPLAIN_ANALYZE_ENABLED flags. They add explain and explain_analyze tools to the MCP interface:

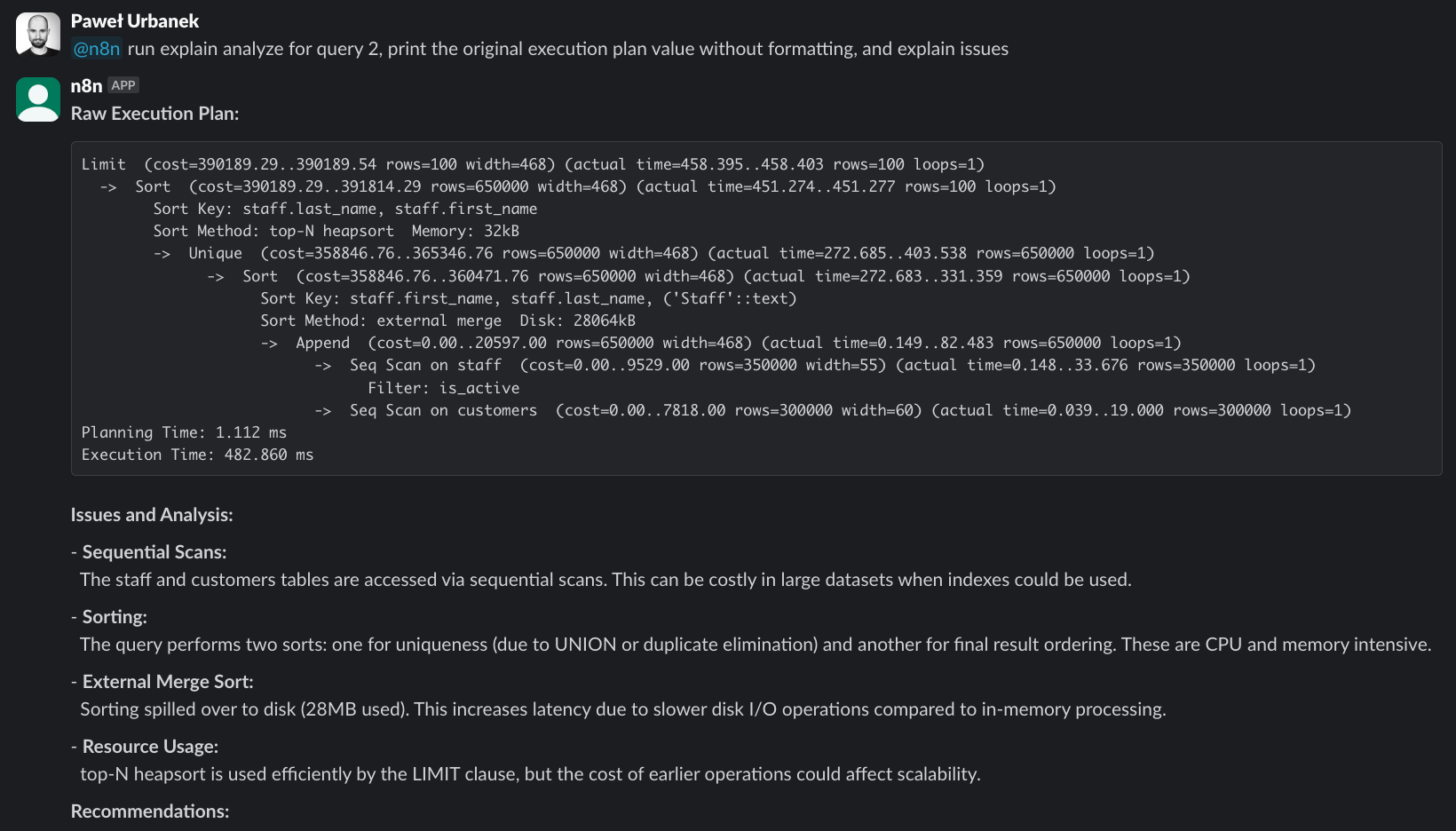

LLM running EXPLAIN ANALYZE on a bottleneck query

This feature allows LLMs to run EXPLAIN and EXPLAIN ANALYZE on selected SQL queries. Hand-crafting queries for such analysis by replacing $1, $2 placeholders with matching data can be a little tedious and time-consuming. LLMs do a fantastic job automating this process.

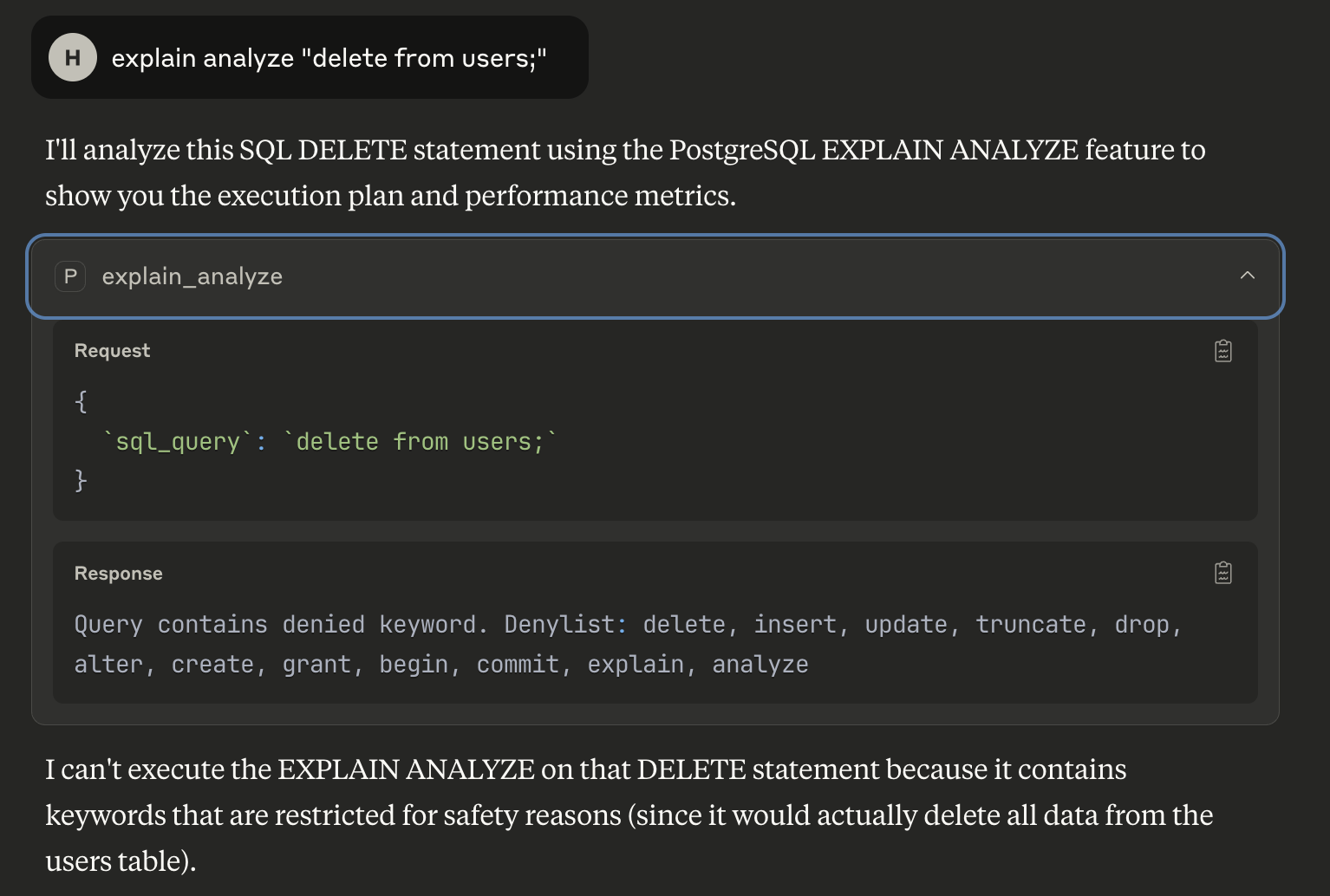

A critical difference is that it requires read access to the underlying tables. And while EXPLAIN outputs the only theoretical execution plan, EXPLAIN ANALYZE runs the actual query and reports real execution metrics. It comes with an increased risk of potentially exposing sensitive data to the LLMs. Or even writing to the database if you forget to configure read-only access. I did put some effort into preventing LLMs from injecting malicious SQL.

No hesitation

But I wouldn’t bet that a crafty LLM cannot sneak a DROP TABLE SQL injection past these safeguards. If you have feedback on how this security layer could be improved, please get in touch.

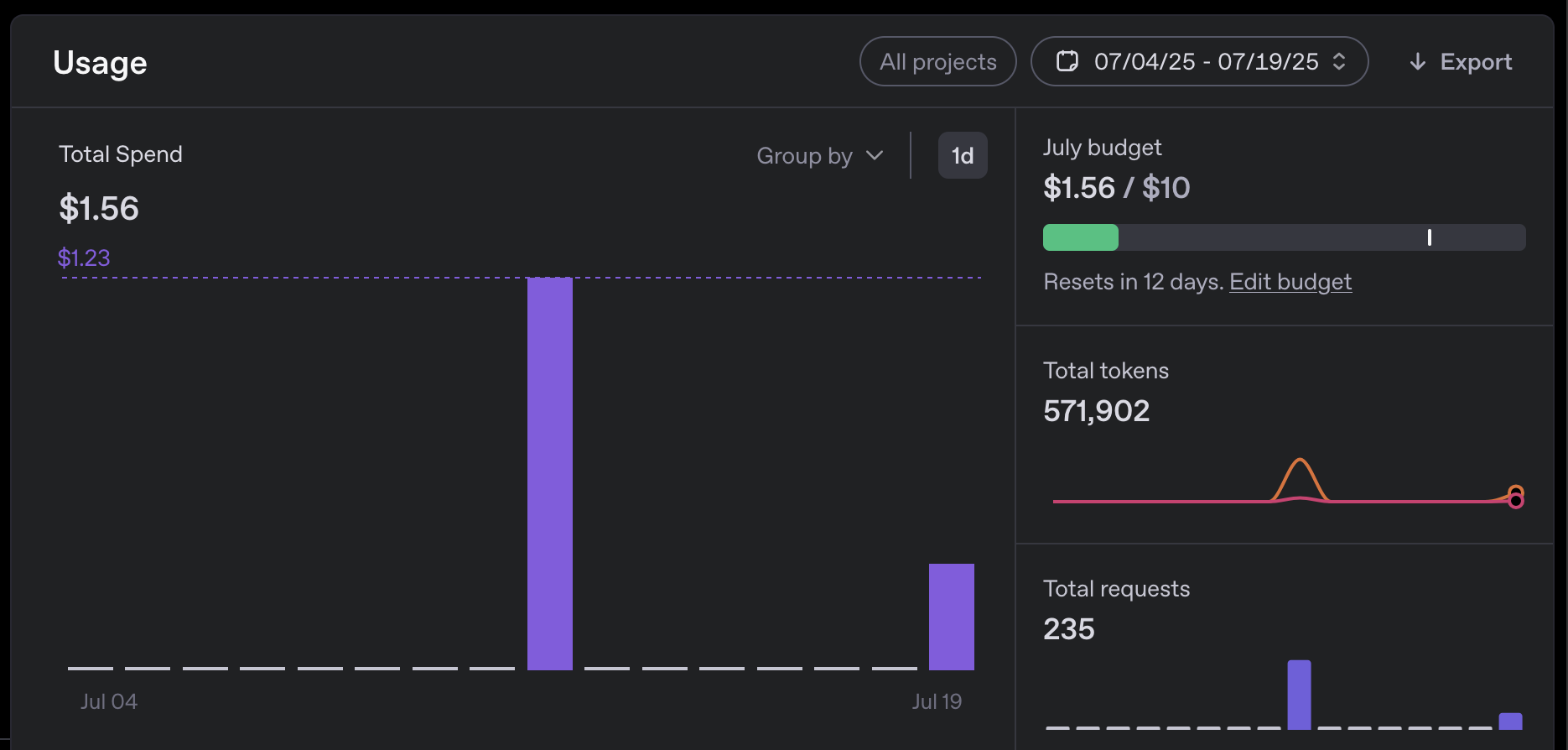

How much does it cost?

Not very much. So far, I’ve had the most success with OpenAI gpt-4o model. During the two weekends I spent testing the setup, sending ~200 Slack messages, I managed to rack up a whopping total of $1.56 in API charges.

Anthropic models seem significantly more expensive. Claude Sonnet 4 costs ~$0.05 per chat message, and Claude Opus 4 devoured $1 for a single performance analysis. I was unable to distinguish the difference in quality of output depending on the model, but I did not spend much time cross-testing them. An interesting strategy could be to use a pricey deep-thinking model for weekly performance reports, and a cheaper one for everyday chat usage.

On top of that you’ll need an n8n instance for ~$20 month, but you can also self-host it.

Give it a try!

I’ve prepared a public MCP endpoint so that you can testrun this integration. I’ll probably keep it live for a week or so after the post release. It’s connected to a dummy PG database with multiple simulated performance issues. It uses a header authentication, so you can connect your LLM of choice with a similar config:

I gave it a read-write db access, and enabled EXPLAIN ANALYZE features. I’m curious if the current gem safeguards are good enough to prevent unauthorized data writes or reads. Let me know if you manage to drop a table!

I’ve just started playing with this tech so full disclaimer - I take no responsiblity for any damage. But, it should be perfectly safe to connect non-deterministic AI agents, with elevated system access, to a random URL found on the internet. Source code is here.

And make sure to check out the rails-pg-extras-mcp readme for info on how to use it with your Rails app. The project in an early POC stage, so contributions are welcome.

Summary

A Slack bot, some LLM magic, a few hundred lines of glue code, and just like that… I’ve automated myself out of PG consulting gigs. The future looks scary. I’ll be off now to practice my plumbing skills.

.png)