TL;DR

Python’s pickle module is powerful but risky!

This article proposes a CPython-level context tainting technique that blocks exploits during deserialization. See the GitHub PR.

Try the “Pickle Escape” challenge.

Pickle is like that friend who’s incredibly helpful but has a habit of leaving the front door unlocked. While it offers powerful serialization capabilities used by major platforms like Hugging Face and Python’s multiprocessing system, it poses serious security risks when deserializing untrusted data. Recent research continues to reveal pickle-related vulnerabilities, from critical flaws in picklescan to CVE-2025-9906 in the Keras deep learning library. A DEF CON 2025 talk also demonstrated that crafted pickle payloads can easily bypass endpoint protection and antivirus software during deserialization.

This article introduces context tainting—a CPython-level approach that monitors deserialization contexts and blocks unsafe operations during pickle loading. The technique has demonstrated effectiveness against at least 32 pickle-related vulnerabilities over the past year. For security teams and tech leaders, this offers a potential path to make pickle safer without losing current functionality.

Disclaimer:

This article and the approach it describes are experimental and intended for educational and research purposes only. This solution has known limitations and does not protect against all possible attack vectors, most notably those exposed by unaudited third-party code.

This is not a production-ready security feature.

The pickle module is the standard way to serialize and deserialize Python objects. It converts complex Python objects into byte streams that can be stored or transmitted and later reconstructed. Unlike JSON or XML, pickle can handle nearly any Python object, including custom classes, functions, and complex data structures. Pickle’s versatility has made it widely used in the Python ecosystem. Major machine learning platforms like Hugging Face rely on pickle to distribute pre-trained models. Python itself uses pickle internally for critical tasks, including passing objects between subinterpreters and enabling multiprocessing.
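
To make the contrast with JSON concrete, here is a minimal round trip. It sticks to standard-library types (a date, a Fraction and a set) so it runs anywhere; json cannot encode any of them without extra code, while pickle restores them with their original types:

```python
import json
import pickle
from datetime import date
from fractions import Fraction

data = {"when": date(2025, 1, 1), "ratio": Fraction(1, 3), "tags": {"a", "b"}}

# pickle reconstructs the original objects, types included.
restored = pickle.loads(pickle.dumps(data))
print(restored == data)  # True

# json only handles dicts, lists, strings, numbers, booleans and None.
json_error = None
try:
    json.dumps(data)
except TypeError as exc:
    json_error = exc
print(type(json_error).__name__)  # TypeError
```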

The power of pickle is also its biggest weakness. The pickle format isn’t just data; it’s essentially a stack-based programming language that’s been given the keys to your entire Python runtime. When unpickling data, Python executes instructions from the pickle stream, and those instructions can run arbitrary Python code (because apparently, “trust me, I’m just data” wasn’t suspicious enough).
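
The standard library's pickletools module makes this "programming language" view visible. The sketch below disassembles a tiny pickle; the listing shows opcodes that build an empty list, push a MARK and the items onto the stack, append them in one step (APPENDS) and finish (STOP):

```python
import io
import pickle
import pickletools

payload = pickle.dumps([1, "two"], protocol=2)

# Disassemble the byte stream into the opcodes the unpickler executes.
listing = io.StringIO()
pickletools.dis(payload, out=listing)
print(listing.getvalue())
```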

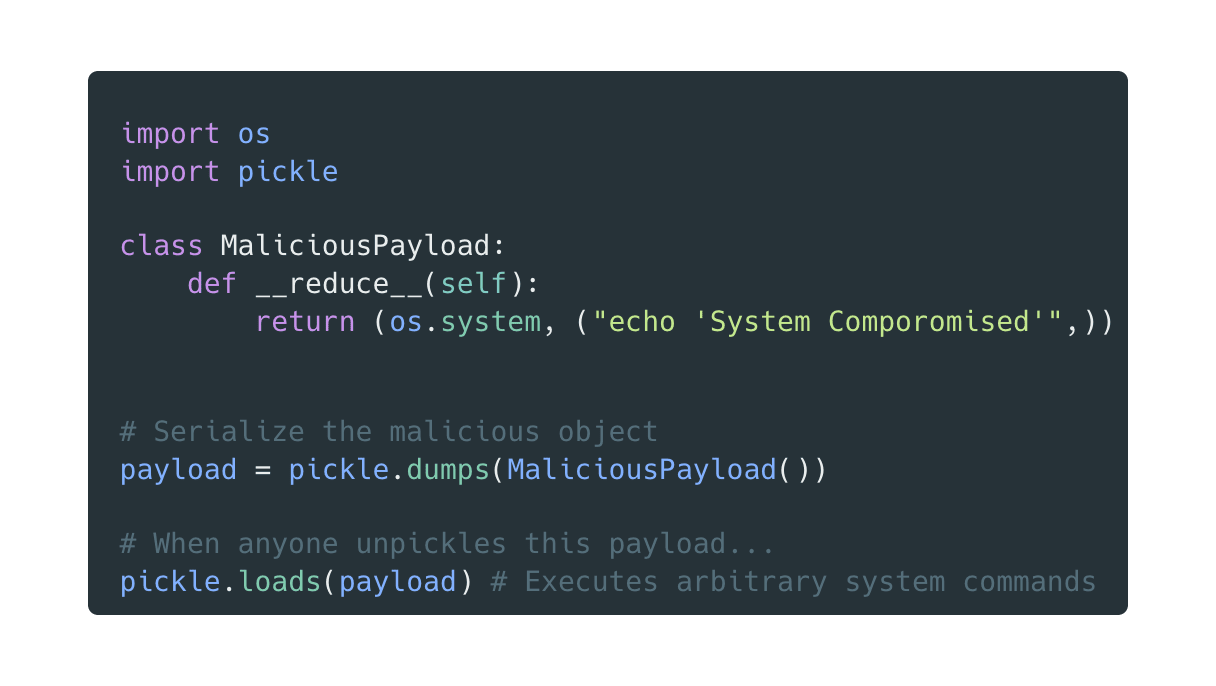

Consider this straightforward exploit using the method:
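
A harmless sketch of this class of exploit relies on pickle's standard `__reduce__` hook, which tells the pickler to record a callable together with its arguments. The unpickler then calls that callable while loading. Real payloads typically use os.system; this sketch substitutes eval on a harmless expression so it is safe to run:

```python
import pickle


class Malicious:
    def __reduce__(self):
        # pickle records (callable, args); the unpickler calls callable(*args).
        # A real payload would return (os.system, ("<command>",)) instead.
        return (eval, ("6 * 7",))


payload = pickle.dumps(Malicious())

# Nothing is ever done with the result: eval already ran inside loads().
result = pickle.loads(payload)
print(result)  # 42
```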

The moment pickle.loads() is called, the attacker’s command executes faster than you can say “Wait, I didn’t ask for this.” The victim doesn’t need to use the unpickled object—the damage occurs during deserialization.

This isn’t a theoretical concern. Security researchers have extensively documented pickle exploitation techniques (Intoli’s “Dangerous Pickles”, Huntr’s “Pickle Rick’d”), and real-world vulnerabilities continue to emerge. Security research exposed critical risks in Hugging Face’s infrastructure, where malicious pickle files could compromise systems downloading AI models (Checkmarx analysis).

Taint analysis as a security strategy is a well-established concept, widely used in static code analysis tools to track the flow of untrusted data through code bases (think of it as digital food coloring for suspicious data). However, its use in runtime environments is far less common. Our approach brings taint analysis into the runtime domain for Python’s pickle deserialization.

The key insight is this: if we can detect when a dangerous operation (such as making system calls, opening files, or initiating network connections) is attempted during pickle deserialization, we can reliably block it. Achieving this requires two main components:

1. Interception: Hook into potentially dangerous function calls.

2. Context awareness: Accurately detect whether the current execution is occurring inside a pickle deserialization routine.

Python’s audit hooks (PEP 578) already serve as an excellent tool for the first component, giving us the ability to monitor critical operations such as os.system, socket.socket, subprocess.Popen, and many others.
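
As a minimal illustration of the interception component, the sketch below registers a recording hook with sys.addaudithook. The event names are the ones CPython raises for the operations above (creating a socket.socket raises "socket.__new__"); os.listdir is watched only because it is a harmless event to trigger:

```python
import os
import sys

# Audit event names as raised by CPython (socket.socket raises "socket.__new__").
WATCHED = {"os.system", "socket.__new__", "subprocess.Popen", "os.listdir"}
observed = []


def audit_hook(event, args):
    # Called for every audited operation in the process, so keep it cheap.
    if event in WATCHED:
        observed.append((event, args))


sys.addaudithook(audit_hook)  # hooks cannot be removed once installed

os.listdir(".")  # harmless way to trigger an audited event
print(observed)
```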

The greater challenge is reliably detecting the context—knowing whether the current code is actually running as part of a pickle load operation.

The obvious approach would be to inspect the call stack to determine whether a potentially dangerous function is called during deserialization. However, this approach incurs significant performance overhead, because the stack has to be walked on every security-sensitive operation, and it has very limited visibility into C code: functions implemented in C, including the C-accelerated unpickler, do not appear as Python frames.
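
The sketch below shows the stack-walking idea and its blind spot. The helper names are hypothetical, and sys._getframe is a CPython-specific API. With the C-accelerated pickle.loads, the hook finds no pickle frame because C functions do not create Python frames; only the private, pure-Python pickle._loads leaves frames it can see:

```python
import os
import pickle
import sys

detections = []  # one entry per os.listdir event: was pickle seen on the stack?


def pickle_frame_on_stack():
    """Naive context check: walk every Python frame looking for pickle's code."""
    frame = sys._getframe(1)  # CPython-specific frame access
    while frame is not None:
        if frame.f_globals.get("__name__") == "pickle":
            return True
        frame = frame.f_back
    return False


def stack_walking_hook(event, args):
    # The walk runs on every matching event, which is where the overhead comes from.
    if event == "os.listdir":
        detections.append(pickle_frame_on_stack())


class ListDir:
    def __reduce__(self):
        return (os.listdir, (".",))


payload = pickle.dumps(ListDir())
sys.addaudithook(stack_walking_hook)

pickle.loads(payload)   # C accelerator (_pickle): no Python frame to find
pickle._loads(payload)  # pure-Python implementation: pickle.py frames are visible
print(detections)  # [False, True]
```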

Taint analysis provides a more elegant solution. The idea is to mark (or “taint”) the execution context when entering pickle deserialization, then check this taint flag in security-sensitive operations.

Python’s context variables (PEP 567) offer a natural mechanism for this. Context variables provide thread-local state that’s preserved across async boundaries, making them ideal for tracking execution context.
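
A rough pure-Python analogue of marking and checking, assuming a module-level ContextVar as the taint counter, looks like this. All names here are hypothetical and are not the PoC's API. It also illustrates the weakness described next: any code that can reach pickle_taint could reset it.

```python
import contextvars
import os
import pickle
import sys

# Hypothetical stand-in for the interpreter-level taint counter.
pickle_taint = contextvars.ContextVar("pickle_taint", default=0)
taint_at_event = []


def tainted_loads(data):
    """Mark the context as 'inside deserialization' for the duration of loads()."""
    token = pickle_taint.set(pickle_taint.get() + 1)
    try:
        return pickle.loads(data)
    finally:
        pickle_taint.reset(token)  # restore the previous value, even on error


def taint_probe(event, args):
    if event == "os.listdir":
        taint_at_event.append(pickle_taint.get())


class ListDir:
    def __reduce__(self):
        return (os.listdir, (".",))


payload = pickle.dumps(ListDir())
sys.addaudithook(taint_probe)

os.listdir(".")         # outside deserialization
tainted_loads(payload)  # inside deserialization
print(taint_at_event)  # [0, 1]
```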

However, using the standard contextvars API would make the taint flag vulnerable to tampering by malicious pickle payloads. Instead, our implementation adds a taint counter to the internal context structure at the CPython level, making it very difficult (and in practice impossible) for Python code to alter the taint state.

Performance Note: We benchmarked this approach using the standard pyperformance benchmark suite and observed negligible overhead (typically less than 0.8%) across a wide range of Python workloads.

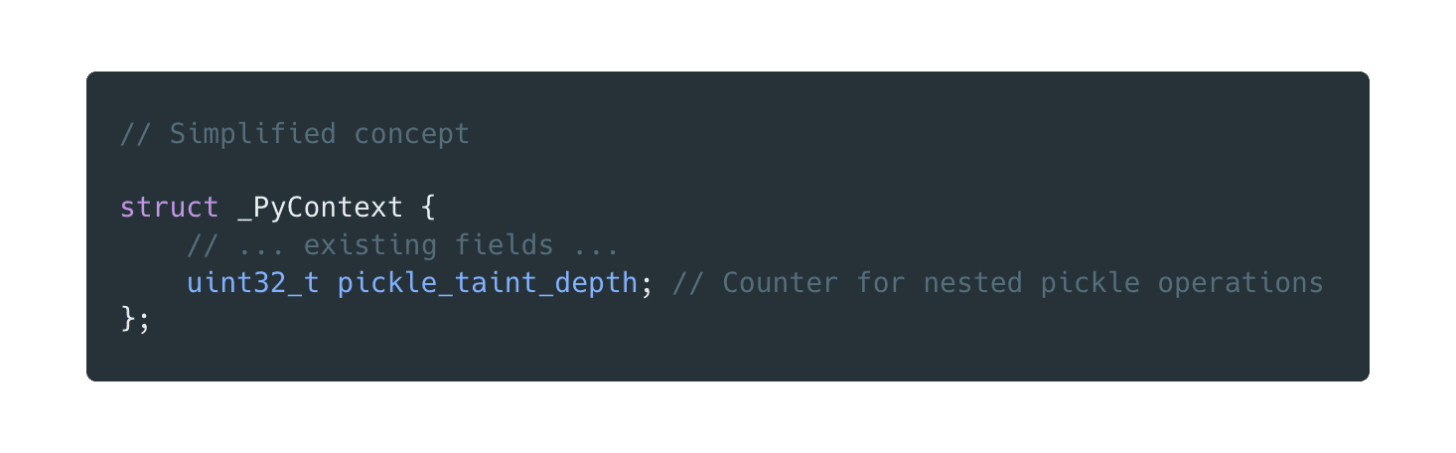

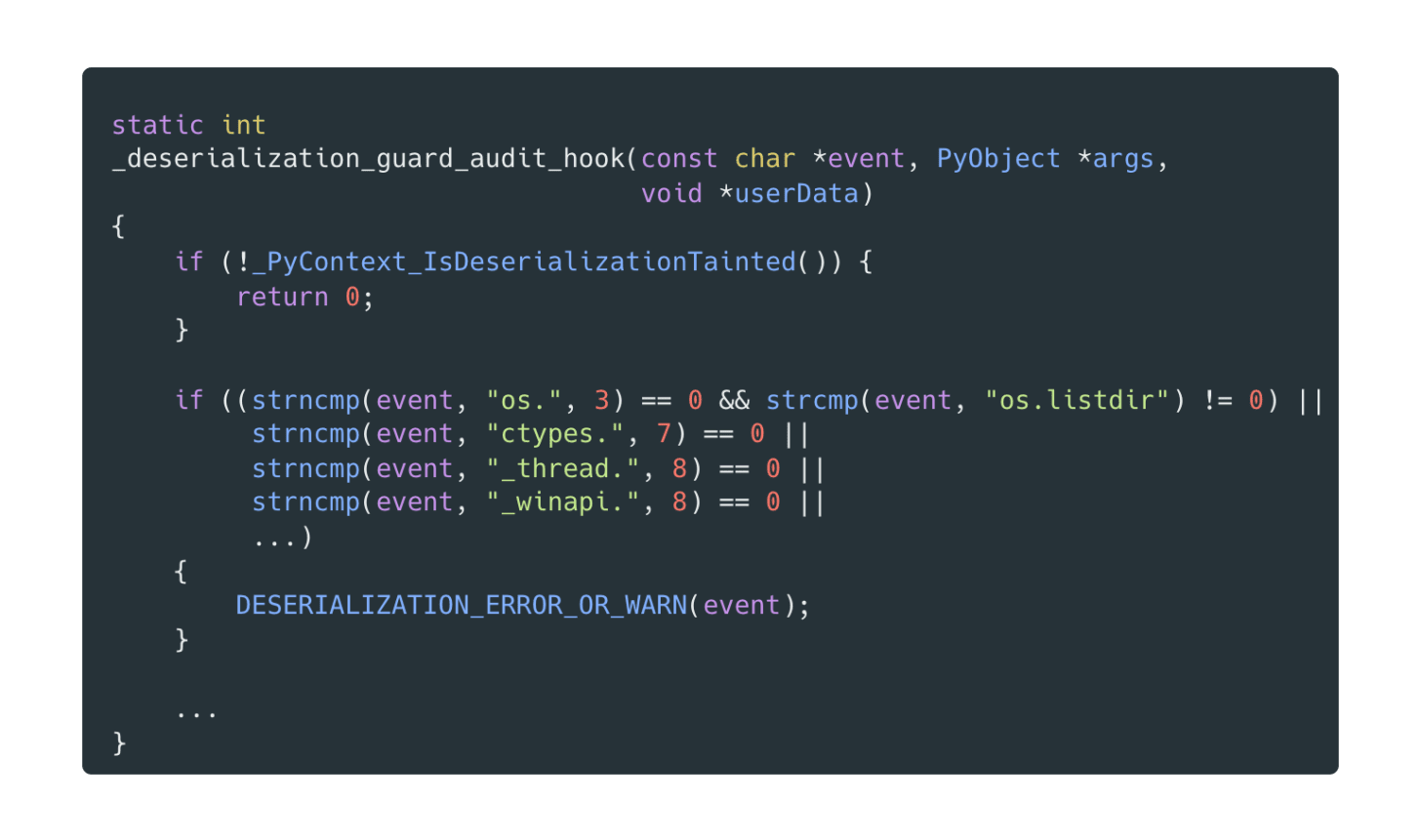

Our proof-of-concept modifies CPython itself to implement context tainting. The core mechanism has two key components that work together to track deserialization state and block dangerous operations.

The first component adds a taint counter to Python’s internal context structure. We use a counter rather than a boolean flag because pickle deserialization can be nested—a pickle payload might contain another pickled object that needs to be deserialized recursively.
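
The difference matters as soon as one load triggers another. This toy model uses hypothetical classes that only track the taint state, with no pickling involved:

```python
class FlagTaint:
    """Boolean version: an inner load clears the flag for the outer one."""

    def __init__(self):
        self.tainted = False

    def enter(self):
        self.tainted = True

    def exit(self):
        self.tainted = False


class CounterTaint:
    """Counter version: stays tainted until the outermost load exits."""

    def __init__(self):
        self.depth = 0

    def enter(self):
        self.depth += 1

    def exit(self):
        self.depth -= 1

    @property
    def tainted(self):
        return self.depth > 0


def simulate_nested_load(taint):
    taint.enter()         # outer loads() starts
    taint.enter()         # the payload triggers an inner loads()
    taint.exit()          # inner loads() finishes
    return taint.tainted  # outer loads() is still running: should be True


print(simulate_nested_load(FlagTaint()))     # False: protection silently lost
print(simulate_nested_load(CounterTaint()))  # True
```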

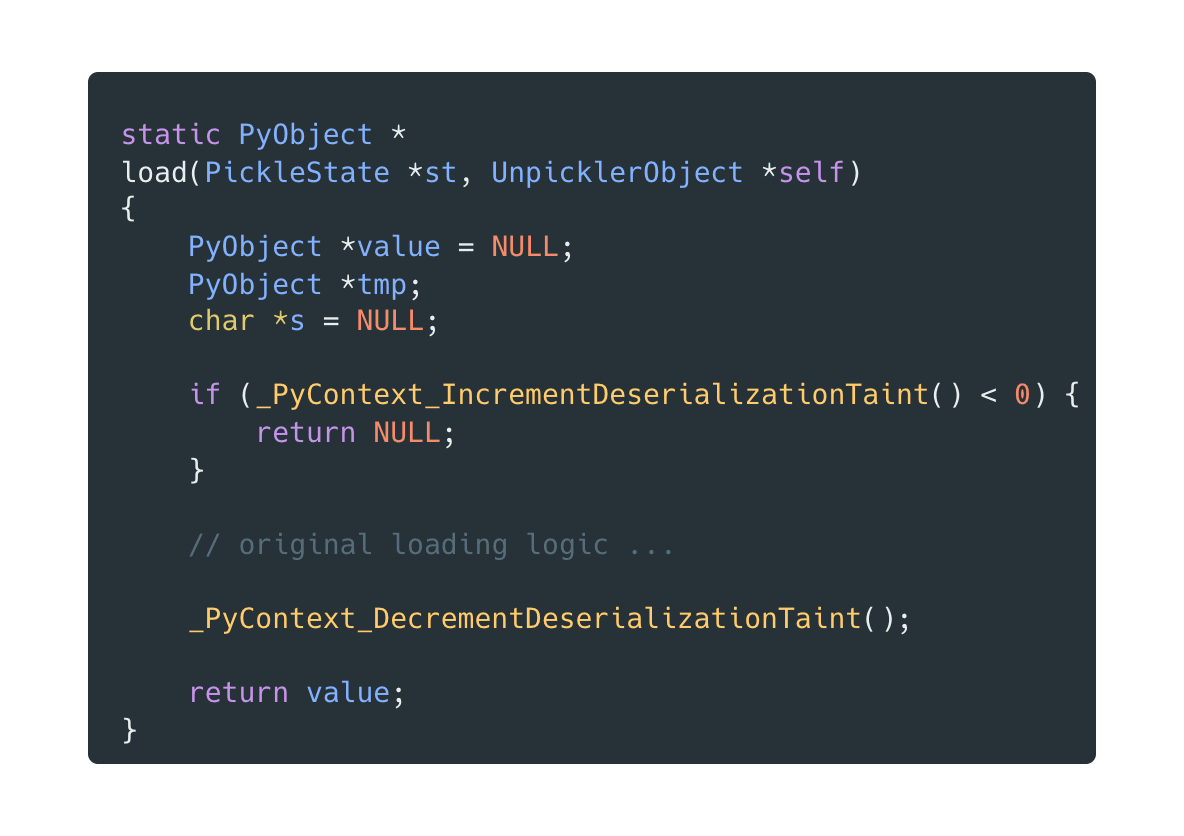

We then instrumented the pickle module’s loading functions to increment this counter when entering deserialization and decrement it on exit, ensuring that the context is correctly marked even for deeply nested operations (see here):

An audit hook monitors security-sensitive operations and checks the taint counter when operations like os.system, socket.socket, or subprocess.Popen are attempted (enforcement logic). If the counter is non-zero—meaning we’re in a pickle deserialization context—dangerous operations are blocked with a security exception.
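
Continuing the pure-Python analogy, a blocklist enforcement hook might look like the sketch below. The names (pickle_taint, guarded_loads, PickleSecurityError) are hypothetical. Because CPython calls audit hooks before performing the audited operation, raising from the hook means the command is never executed:

```python
import contextvars
import os
import pickle
import sys

# Hypothetical pure-Python stand-ins; the PoC keeps its counter inside CPython.
pickle_taint = contextvars.ContextVar("pickle_taint", default=0)
BLOCKED_EVENTS = {"os.system", "socket.__new__", "subprocess.Popen"}


class PickleSecurityError(RuntimeError):
    pass


def blocklist_hook(event, args):
    # Raising from an audit hook aborts the operation before it takes effect.
    if pickle_taint.get() > 0 and event in BLOCKED_EVENTS:
        raise PickleSecurityError(f"{event} blocked during unpickling")


def guarded_loads(data):
    token = pickle_taint.set(pickle_taint.get() + 1)
    try:
        return pickle.loads(data)
    finally:
        pickle_taint.reset(token)


class RunCommand:
    def __reduce__(self):
        return (os.system, ("echo this should never run",))


sys.addaudithook(blocklist_hook)

loaded = guarded_loads(pickle.dumps([1, 2, 3]))  # ordinary data still loads
print(loaded)  # [1, 2, 3]

blocked_message = None
try:
    guarded_loads(pickle.dumps(RunCommand()))
except PickleSecurityError as exc:
    blocked_message = str(exc)
print(blocked_message)  # os.system blocked during unpickling
```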

This initial blocklist approach attempted to block every potentially dangerous event during pickle deserialization. However, this immediately broke pickle’s ability to import modules. To enable imports to work at all, we were forced to allow several operations that we would have preferred to block—including os.listdir (needed for module discovery), open in read-mode (required to load module files), and exec (necessary to execute imported module code).

However, testing revealed critical weaknesses:

Evasion vectors: Malicious payloads could replace global objects such as sys.stdout or register atexit callbacks, deferring code execution until after the deserialization context has ended and thereby bypassing our protections.

Compatibility issues: Many standard Python features, such as multiprocessing, use pickle internally and depend on certain operations performed at module import time. Our blocklist inadvertently interfered with these, including by preventing bytecode (.pyc) files from being written as modules were imported. This was problematic because it broke pickle functionality, forcing us to work around it by disabling bytecode generation with the PYTHONDONTWRITEBYTECODE environment variable.

Incomplete coverage: We discovered that some operations, such as atexit.register, were not audited at all, and that while operations such as object.__setattr__ were audited, this applied only to changes to certain sensitive attributes (__name__, __code__, etc.). This partial coverage left exploitable gaps, such as the ability to override globals.
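
The coverage gap is easy to observe with a recording hook on a stock CPython build. Replacing a function's `__code__` raises object.__setattr__, while setting an ordinary attribute or registering an atexit callback raises neither watched event (the function names below are arbitrary examples):

```python
import atexit
import sys

WATCHED = {"object.__setattr__", "atexit.register"}
events = []


def recorder(event, args):
    if event in WATCHED:
        events.append(event)


def original():
    return "original"


def replacement():
    return "replacement"


def noop():
    pass


sys.addaudithook(recorder)

original.__code__ = replacement.__code__  # audited: __code__ is a sensitive attribute
original.note = "patched"                 # not audited: ordinary function attribute
atexit.register(noop)                     # not audited in stock CPython
atexit.unregister(noop)

print(events)  # ['object.__setattr__']
```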

These insights led to a fundamental shift in approach. Analysis revealed that nearly all audit events during legitimate pickle deserialization occur through the import mechanism, which pickle needs to function. If we could distinguish import-related events from other operations, we could block everything else with high confidence.

We refined the implementation with context-aware taint management: the taint is temporarily cleared before safe operations (imports, pure object construction, known builtin reducers) and restored afterward. This allows legitimate pickle functionality while maintaining security boundaries. After implementing this taint-clearing mechanism, everything worked like a charm—multiprocessing functioned correctly, bytecode files could be created normally, and all standard library features that rely on pickle operated as expected.
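
One way to approximate taint clearing around imports in pure Python is to override Unpickler.find_class, the method pickle uses to import modules and look up classes and functions. In this sketch (hypothetical names, with colorsys as an arbitrary small stdlib module), the import triggered during loading runs with the taint cleared, and the counter is restored as soon as the lookup returns:

```python
import colorsys
import contextvars
import io
import pickle
import sys

pickle_taint = contextvars.ContextVar("pickle_taint", default=0)  # hypothetical
import_event_taint = []  # taint level observed by each "import" audit event


class TaintClearingUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Imports are trusted: clear the taint while the module is imported...
        token = pickle_taint.set(0)
        try:
            return super().find_class(module, name)
        finally:
            pickle_taint.reset(token)  # ...and restore it for the rest of the load


def tainted_load(data):
    unpickler = TaintClearingUnpickler(io.BytesIO(data))
    token = pickle_taint.set(pickle_taint.get() + 1)
    try:
        return unpickler.load()
    finally:
        pickle_taint.reset(token)


def probe(event, args):
    if event == "import":
        import_event_taint.append(pickle_taint.get())


payload = pickle.dumps(colorsys.rgb_to_hsv)  # functions are pickled by reference
del sys.modules["colorsys"]                  # force a real import during loading
sys.addaudithook(probe)

func = tainted_load(payload)
print(func.__name__, import_event_taint)
```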

We also enhanced audit coverage by adding audit events for previously unprotected operations, such as atexit.register, signal.signal and setattr.

The final approach uses a strict allowlist, permitting only three audit events essential for pickle’s core functionality:

- pickle.find_class - Required for locating classes during deserialization

- object.__getattr__ - Necessary for attribute access

- array.__new__ - Needed for array construction
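
Putting the pieces together, a pure-Python approximation of the allowlist design could look like the sketch below. It is not the PoC's code: the three event names come from the list above, and everything else is a hypothetical stand-in. find_class only looks up the callable while the taint is cleared; calling it still happens under taint, so even os.listdir, which the earlier blocklist had to permit, is rejected:

```python
import contextvars
import io
import os
import pickle
import sys

# Hypothetical pure-Python stand-ins; the PoC keeps its counter inside CPython.
pickle_taint = contextvars.ContextVar("pickle_taint", default=0)
ALLOWED_WHILE_TAINTED = {"pickle.find_class", "object.__getattr__", "array.__new__"}


class PickleSecurityError(RuntimeError):
    pass


def allowlist_hook(event, args):
    # While tainted, anything that is not explicitly allowed is rejected.
    if pickle_taint.get() > 0 and event not in ALLOWED_WHILE_TAINTED:
        raise PickleSecurityError(f"{event} blocked during unpickling")


class GuardedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Look up (and, if needed, import) the object with the taint cleared.
        token = pickle_taint.set(0)
        try:
            return super().find_class(module, name)
        finally:
            pickle_taint.reset(token)


def guarded_loads(data):
    unpickler = GuardedUnpickler(io.BytesIO(data))
    token = pickle_taint.set(pickle_taint.get() + 1)
    try:
        return unpickler.load()
    finally:
        pickle_taint.reset(token)


class ListDir:
    def __reduce__(self):
        return (os.listdir, (".",))


payload = pickle.dumps(ListDir())
sys.addaudithook(allowlist_hook)

loaded = guarded_loads(pickle.dumps({"ok": [1, 2.5, "three"]}))
print(loaded)

blocked_message = None
try:
    guarded_loads(payload)  # os.listdir runs under taint and is not allowlisted
except PickleSecurityError as exc:
    blocked_message = str(exc)
print(blocked_message)  # os.listdir blocked during unpickling
```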

This allowlist strategy achieves significantly stronger security by blocking every audited event that isn’t part of the built-in mechanism itself. Crucially, this means any third-party package that raises audit events will also be blocked, providing defense-in-depth against unknown attack vectors.

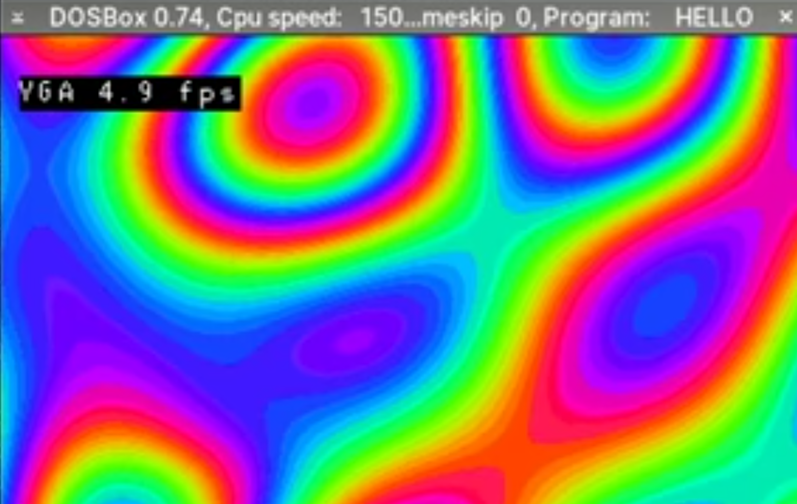

Theory is one thing; real-world resilience is another. To validate this approach, we created an interactive challenge at pickleescape.xyz where security researchers can attempt to bypass the context tainting protection.

The challenge is simple: upload a malicious pickle payload that successfully executes a dangerous operation despite the hardening measures. The site runs our modified Python implementation, providing a real-world test environment for the security model.

If you’re a security researcher, a penetration tester, or just curious about pickle security, I invite you to try breaking out of the sandbox. Every successful escape teaches us something valuable about the approach’s limitations and helps strengthen the defense.

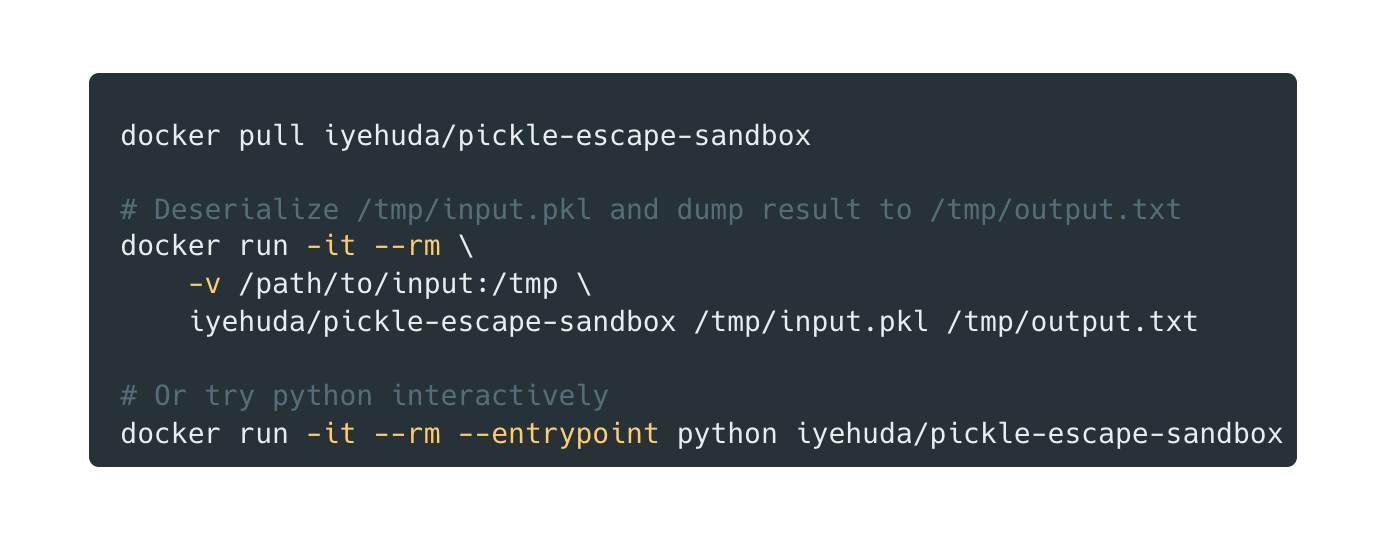

You can also run the sandbox locally using Docker:

As the challenge demonstrates, context tainting provides significant protection, but it’s essential to understand both its strengths and limitations.

The context tainting technique is specifically designed to protect against the most critical risks that cloud workloads and end-users face when using pickle:

Remote Code Execution (RCE): Blocks system commands, subprocess execution, and other code execution vectors that could compromise the entire system

Filesystem Access: Prevents arbitrary file reads & writes, directory manipulation, and other filesystem operations that could exfiltrate, corrupt, or destroy data

Network Operations: Blocks network operations, mainly to prevent data exfiltration and remote control

These protections target the most severe attack vectors that could result in complete system compromise or data theft, making the approach particularly valuable for end users and applications that need to work with pickle files from untrusted sources.

While our context-tainting implementation provides strong protection against the most dangerous attack vectors, it’s essential to understand its limitations:

It is audit-event dependent: Our protection mechanism relies on Python’s audit events (PEP 578). This means we can only block operations that raise audit events. If a third-party library, for example, exposes an insecure operation that doesn’t trigger an audit event, it won’t be blocked by the suggested mechanism.

It covers only the C implementation: the pure-Python pickle implementation, used as a fallback when the C _pickle module is unavailable (which is uncommon), is not protected.
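
Whether a given interpreter uses the C accelerator can be checked directly. This small helper (a hypothetical name) relies on the way pickle.py rebinds its public Unpickler name to the _pickle implementation when that import succeeds, and otherwise falls back to the pure-Python _Unpickler:

```python
import pickle


def using_c_unpickler() -> bool:
    """True when pickle.Unpickler comes from the C _pickle accelerator."""
    # pickle.py always defines the pure-Python _Unpickler; if `import _pickle`
    # fails, the public Unpickler name is bound to _Unpickler instead.
    return pickle.Unpickler is not pickle._Unpickler


print(using_c_unpickler())  # True on a standard CPython build
```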

These limitations highlight that while context tainting provides strong protection against audited operations, it should be considered as part of a layered security approach rather than a complete solution.

Context tainting represents a promising direction for mitigating pickle deserialization attacks, but it’s not a silver bullet. The approach requires modifications to CPython itself, which presents deployment challenges. Performance impacts need thorough evaluation in production scenarios. And as the escape challenge will likely demonstrate, determined attackers may find creative ways to bypass it.

However, the core principle—tracking execution context at the interpreter level and enforcing security boundaries during deserialization—provides a foundation for making Python more secure by default. Whether this specific implementation gains traction or inspires alternative approaches, addressing pickle’s security challenges is essential for Python’s role in security-critical applications.

The Python community has long known that unpickling untrusted data is dangerous. Perhaps it’s time we gave developers better tools to defend against it.

Try the challenge: pickleescape.xyz

Review the implementation: GitHub Pull Request.

Join the discussion: Share your findings, bypass attempts, and suggestions for improving this approach.
