In November of 2024, Anthropic released the Model Context Protocol (MCP) and an accompanying blog post. MCP is a new protocol for AI agents, i.e., language models that run in a loop with access to tools (functions).

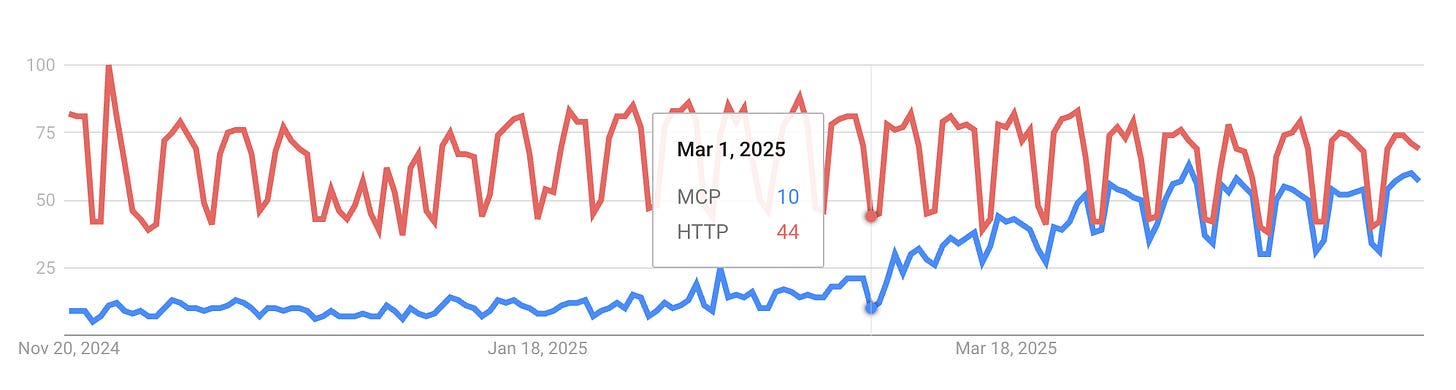

The community quickly became obsessed with it. We can see that interest in MCP has really exploded since March of this year.

Google Trends showing MCP (blue) searches vs. HTTP (red) searches

A lot of people were under the impression that MCP meant that the big problems with AI agents were ‘solved’ and we were about to see large-scale deployments of them across the Internet.

While MCP does solve some important problems with respect to AI agents, we’ve yet to see any large-scale deployments of web agents. Just like with pretty much everything else, the pattern has been slow but steady progress. MCP does, however, establish a standard for how AI agents might navigate the web. Let’s motivate the need for such a standard.

Let’s say you run a news site and you want to expose some search functionality to an AI agent through an API so that it can answer questions like “what happened with the White House this week?”. You might want an AI agent to be able to search for news articles by a keyword string. You write a search function with a docstring to enable the agent to search your site:

    from typing import List

    def search(keyword: str) -> List[str]:
        """
        search through news articles with a keyword query
        returns a list of headlines
        """
        pass

With the plumbing set up, we reach the crux of the problem: what’s the best way to show AI agents these functions?

You might write a reasonable prompt explaining to an agent that it’s looking at a news site, and it has access to these functions with these signatures and docstrings:

    prompt = """You are an AI agent browsing the web to find information for your user.
    You have access to a search function to find news articles:

    def search(query: str) -> List[str]:
        # search through news articles with a keyword query
        # returns a list of headlines
        pass

    Use this API to extract the information you need based on your user’s original query."""

This setup is reasonable, but reasonable doesn’t mean it’ll work in practice. Without knowing how the various agents browsing your site were trained, it’s hard to say if they’ll be able to effectively use the search API you set up. Worse yet, the various agents might be trained in very different ways, meaning there might not be a single format for your search API that every agent knows how to use effectively. For example, we provide the above tool as a Python function, but what if an agent was trained on tools in a JSON format?
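To make the mismatch concrete, here’s a minimal sketch that renders the same `search` tool two ways: as a Python stub and as a JSON description. The helper names (`as_python_stub`, `as_json_description`) and the JSON layout are made up for illustration; they aren’t any particular provider’s format.

```python
import inspect
import json
from typing import List


def search(keyword: str) -> List[str]:
    """Search through news articles with a keyword query."""
    return []  # placeholder: a real site would query its article index


def as_python_stub(fn) -> str:
    """Render a tool as a Python signature plus docstring."""
    doc = inspect.getdoc(fn)
    return f'def {fn.__name__}{inspect.signature(fn)}:\n    """{doc}"""'


def as_json_description(fn) -> str:
    """Render the same tool as a JSON object (hypothetical layout)."""
    params = inspect.signature(fn).parameters
    return json.dumps(
        {
            "name": fn.__name__,
            "description": inspect.getdoc(fn),
            # map each parameter name to the name of its annotated type
            "parameters": {name: p.annotation.__name__ for name, p in params.items()},
        },
        indent=2,
    )


print(as_python_stub(search))
print(as_json_description(search))
```

Both outputs describe exactly the same function, but an agent trained only on one rendering has no guarantee of handling the other well.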

Something surprising I’ve learned is that LMs can be pretty sensitive to formatting in certain scenarios. This is largely because post-training recipes try to standardize formatting throughout, with the assumption that the same formatting will be used at inference time as well. This assumption generally holds for chat applications, where the model provider can easily manipulate the final prompt given to the model.

But with web agents, model providers are at the mercy of API providers when it comes to the ways tools are formatted. The API providers are at the mercy of the model providers with respect to what format their agents are trained on.

At least without a shared protocol.

When everyone’s doing different stuff and you want everyone to do the same thing, the solution is a standard. And that’s exactly what MCP is. It’s a standard way to format tool calls and prompts such that every model provider and API provider can be on the same page, and so that every AI agent can use every web API it encounters effectively.

The abstraction employed to achieve this should be very familiar to anyone who’s built a web service: a client-server architecture.

An MCP client is the module that acts on behalf of a user. The client is where the language model lives in addition to any user-specific logic for ultimately prompting the LM. Together, these can be thought of as the AI agent.

An MCP server is what the client interacts with: it serves tool (function) signatures, executes tools, and provides server-specific prompts.

MCP ultimately defines the format of the tools the servers provide and the way in which these tools are fed into the LM agents controlled by the client.
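As a rough mental model, the split of responsibilities can be sketched in plain Python with no MCP library at all. Everything below (`ToyServer`, `ToyClient`, `keyword_policy`, the two headlines) is hypothetical and only meant to show who owns what; the real protocol runs over a transport and has much more to it.

```python
from typing import Callable, Dict, List, Tuple


class ToyServer:
    """Stands in for an MCP server: it owns the tools and executes them."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., object]] = {}

    def tool(self, fn: Callable[..., object]) -> Callable[..., object]:
        """Register a function as a tool under its own name."""
        self._tools[fn.__name__] = fn
        return fn

    def list_tools(self) -> List[dict]:
        return [
            {"name": name, "description": (fn.__doc__ or "").strip()}
            for name, fn in self._tools.items()
        ]

    def call_tool(self, name: str, arguments: dict) -> object:
        return self._tools[name](**arguments)


class ToyClient:
    """Stands in for an MCP client: it owns the LM and user-specific logic."""

    def __init__(self, server: ToyServer, choose_call: Callable) -> None:
        self.server = server
        self.choose_call = choose_call  # stub playing the role of the LM

    def answer(self, user_query: str) -> object:
        tools = self.server.list_tools()                       # 1. discover tools
        name, arguments = self.choose_call(user_query, tools)  # 2. "LM" picks a call
        return self.server.call_tool(name, arguments)          # 3. server executes it


server = ToyServer()


@server.tool
def search(query: str) -> List[str]:
    """Search through news articles with a keyword query."""
    # made-up headlines, for illustration only
    headlines = ["White House announces new policy", "Local team wins final"]
    return [h for h in headlines if query.lower() in h.lower()]


def keyword_policy(user_query: str, tools: List[dict]) -> Tuple[str, dict]:
    """Hypothetical stand-in for an LM: always calls the first tool."""
    return tools[0]["name"], {"query": "white house"}


client = ToyClient(server, keyword_policy)
result = client.answer("what happened with the White House this week?")
print(result)
```

The point of the split is that the server never needs to know which model is on the other end, and the client never needs to know how `search` is implemented.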

MCP takes the guesswork out of writing tool definitions. Writing a tool is as simple as writing a FastAPI POST endpoint:

    from typing import List

    from mcp.server.fastmcp import FastMCP

    # create the server instance that tools are registered on ("news" is a placeholder name)
    mcp = FastMCP("news")

    @mcp.tool()
    def search(query: str) -> List[str]:
        """search through news articles with a keyword query; returns a list of headlines"""
        # execution logic goes here
        ...

And that’s about it. The FastMCP framework will handle the rest of the logic for serving the tool definition in a standard way to an MCP client.

As I mentioned above, not having standardized formats tends to confuse language models and leads to degraded performance in most tasks. Web agents are no exception. We can expect models trained with a standardized format for tool use to perform better. The standardized format also means the model should (in theory) more easily navigate APIs that are quite different from those it’s seen during training.

When a client establishes a connection to a new MCP server, it can query the server for the available tools. These tools will be served to the client in a standard (JSON) format, after which the client can decide which tools to call to achieve its goal on behalf of the user.
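Here’s a rough sketch of what that exchange can look like on the wire. It assumes the JSON-RPC 2.0 message shapes described in the MCP spec, where tools are listed with a `tools/list` request and invoked with `tools/call`. The `handle` dispatcher and the placeholder result text are simplifications of my own, so check the official docs for the full message shapes.

```python
import json

# Tool definition in the shape a server returns it: a name, a description,
# and a JSON Schema describing the arguments.
SEARCH_TOOL = {
    "name": "search",
    "description": "Search through news articles with a keyword query.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}


def handle(request_json: str) -> str:
    """Toy dispatcher (hypothetical): answers tools/list and tools/call."""
    request = json.loads(request_json)
    if request["method"] == "tools/list":
        result = {"tools": [SEARCH_TOOL]}
    elif request["method"] == "tools/call":
        query = request["params"]["arguments"]["query"]
        # Placeholder text; a real server would run the actual search here.
        result = {"content": [{"type": "text", "text": f"headlines matching {query!r}"}]}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


# 1. The client asks which tools exist.
list_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
list_response = json.loads(handle(json.dumps(list_request)))

# 2. The client (i.e. the LM) decides to call one of them.
call_request = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "search", "arguments": {"query": "White House"}},
}
call_response = json.loads(handle(json.dumps(call_request)))

print(json.dumps(list_response, indent=2))
print(json.dumps(call_response, indent=2))
```

Because every server describes its tools in this same shape, the client can feed them to the model in whatever single format that model was trained on.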

Scraping MCP servers and creating synthetic function calling data based on real servers is an exciting direction for training general tool-calling models. This is something we’re playing with at Ai2 — if this works I can write a blog post on it, and you can expect to see it in a tech report at some point.

A standardized protocol and format for tool calling is clearly useful for developing AI agents and the APIs they interact with. MCP has tons of other great features that I didn’t talk about here, but you can explore them in the official docs. This blog post really just scratches the surface of what MCP has to offer and how to build within the framework. Just as TCP and HTTP have evolved tremendously with tons of new features over the years, we can expect agent protocols to similarly evolve with the field.

It’s entirely possible that in the not-so-distant future most interactions on the internet involve an AI agent. This internet will look pretty different from the one we have today, and just as TCP and HTTP enable today’s internet, so will MCP (or something like it) enable this new agent-centric internet.

It’s worth noting that MCP is not the only standard in town. There’s Google’s A2A and IBM’s ACP, just to name a couple. The protocol which “wins” will likely be determined by the momentum behind it, early adoption, and network effects. It’s not clear which protocol will win, but MCP certainly has a head start on these fronts. I’ll end with a timeless xkcd:

[Image: xkcd comic]