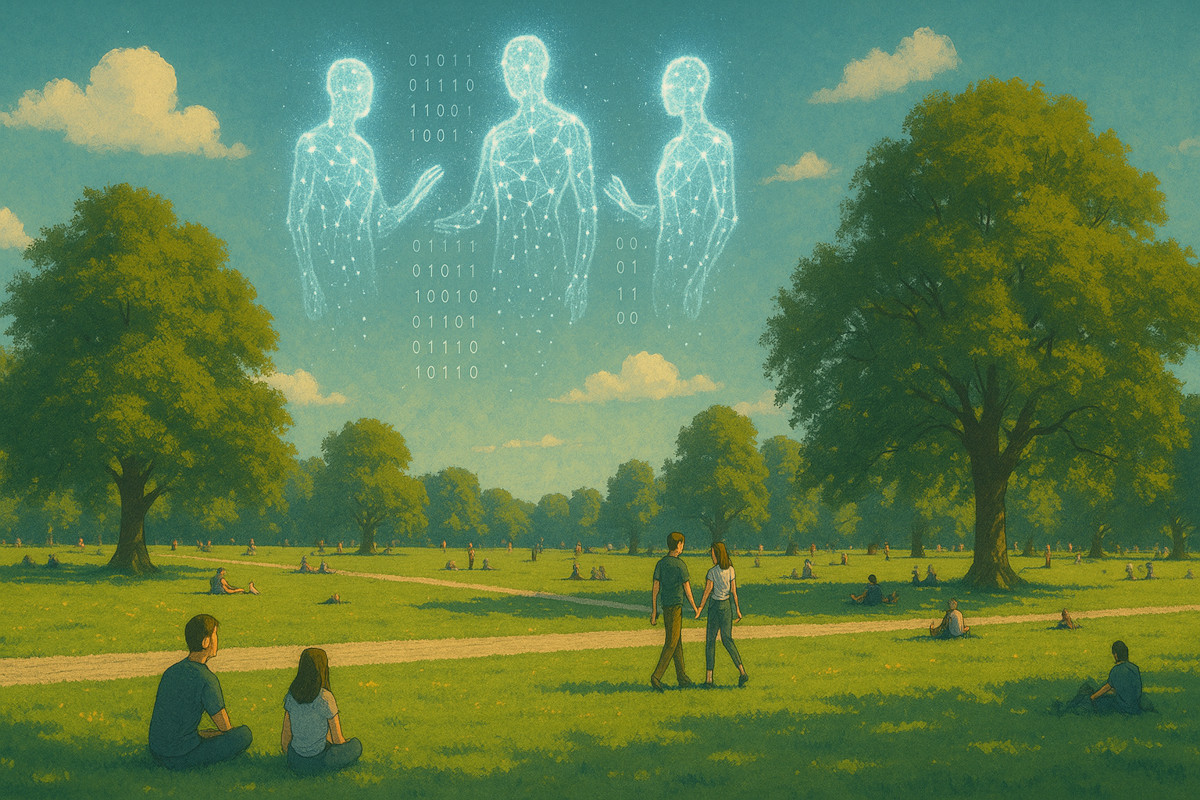

Image by AI, obviously.

A lot of smart, credible people think that superintelligent AI is just around the corner.1 Whether or not they’re right, enough people believe this that it’s worth taking seriously.

But what does that even mean? It’s such an incomprehensible concept that it’s hard to know how to even start planning for it.

I’ll be honest, the idea of superintelligent AI freaks me out. Yes, it seems like the inevitable next step in humanity’s progression as a species. Yes, it could lead to remarkable progress in science, medicine and technology and make all our lives better. And yet, in my heart of hearts, part of me is still kind of hoping it doesn’t happen.

I think it’s because I have trouble figuring out my own self-worth in a world with superintelligence.

For as long as I can remember, a big part of my identity has been that I’m “smart”. I’m not a genius or anything, but I’m good at figuring stuff out. I always did well at school without much effort. And I’ve generally thrived in every job I’ve had.

But once we have superintelligent AIs… being smart… kind of won’t matter anymore? Like—a phone with an internet connection will be smarter than me. Smarter than all of us. And so all those accumulated years of knowledge and learning will basically be obsolete. Everything I’ve taken pride in—my ideas, my ability to reason, synthesize, and so on—will no longer be useful in the presence of this super-machine.

Where does that leave me?

Concretely, my current job—which involves building and selling a codebase—is definitely gone. No one needs to pay me for code when the AIs can do it faster, better, and cheaper.

So what do I do? It probably has nothing to do with code—unless we need people to guide the AIs that write the code. But why would humans guide the AIs when the AIs are also, presumably, better at that? More generally, is there any world in which an AI that is smarter than me at everything is not also better than me at everything? Or at a minimum, everything I might do on a computer?

With that lens, it’s hard to understand what happens not just to my work, but to work, generally. You’d expect that most white-collar jobs won’t need people at all. Maybe we’re still doing them, because it makes us feel useful, and otherwise there’d be global civil unrest or something. But we’re not needed. The AIs can do everything we can do, faster and better than us.

That’s a pretty weird future! And at the societal level, predicting things from there gets fuzzy quite quickly. Will white-collar work be eliminated, and half of society forced to rely on universal basic income? Or will we find some reason why we inferior people still need to work alongside our superior AIs? It’s hard to guess what might happen, so I won’t even bother trying.

A possibly less impossible question is “where will my sense of self-worth come from?”

My opening thought was something like, “if my intelligence is meaningless then I am meaningless.” But that’s not true. The most obvious way it’s false is through my kids. My kids don’t care how smart I am. They care that I’m me. That I love them, support them, and help them figure out the world. My kids are already the most meaningful part of my life, and superintelligent AIs won’t change that. Thank god.

Of course, the general version of “my kids” is “important relationships.” Our important relationships will still matter. Does that mean that our collective sense of purpose shifts away from things that are more career-oriented to things that are more people-oriented? I think it probably does. And while I’ve always been better with numbers than I am with people, maybe that’s not such a bad thing.

I wonder if superintelligences will eventually make relationships the only focus of our lives. The AIs do all the practical stuff—all the work—and we just worry about each other. That might be okay even if it’ll be—in the words of every AI tech CEO on this topic—“a bit of an adjustment.”

On the other hand, you can imagine those lives feeling more like the lives of well-cared-for pets. We’re comfortable. We eat well. We have fun, and all our needs are met. And yet the most important thing we achieve in a given day might be sniffing a new butt or two at the park. Meanwhile, our AI caretakers are busy running society and shaping the future in ways we hardly think about.

Would that be a bad future? I don’t know. The adjustment of going from “a person who makes things happen” to “a person who goes about their life while superintelligences make things happen” will definitely bruise the ego a bit. On the other hand, maybe it was always silly to derive self-worth from how good I was at solving puzzles or doing good work. Maybe it’s an opportunity to make peace with the fact that being “smart” really never mattered that much.

So I guess that’s where I am. Still kind of freaked out. Still secretly hoping superintelligence isn’t right around the corner. But also trying to make peace with whatever’s coming next. AI might take my job. It might take my ego. But there is something about being human that it can’t touch. That thing isn’t easy to describe, but it’s the thing you feel in the joyful moments of life when you’re with people that you love. And maybe superintelligent AI will help us remember that that thing was always the most important thing.

Thanks to Rowena Luk for reading a draft of this (and for being a meaningful human in my life).

Notes
