Zoom image will be displayed

A few years ago, I was covering the rise of AI from the front row, as a reporter for a tech magazine. I covered everything from algorithmic breakthroughs to the growing drama in Silicon Valley. Back then, the conversation was mostly about speed, scale, and hype.

Today, I’m on the other side. I work in AI cybersecurity, and my focus has shifted from asking how powerful these systems can get to how we can make sure they stay reliable. I’ve grown more interested in trust, misuse, and impact. And in the long-term consequences of building AI without guardrails.

We’ve spent years talking about AI ethics. Rightly so. But what’s often missing in those conversations is something more practical and just as important: security. Not just as a safety net, but as a strategic decision. A path to better products, stronger reputations, and more sustainable innovation.

That’s what this post is about: What we build with AI will only matter if we can trust it.

We’ve all seen Terminator. The risks of AI can’t be understated. The brightest minds in the field have issued warning after warning.

But the transformative power of this technology has already moved far beyond the point where we can hit pause. AI is here, and it’s accelerating. What matters now is how we move forward, and whether we do it with the right incentives in place.

Most conversations about AI security begin with ethics. We hear concerns about bias, hallucinations, misinformation, and the risks of deploying systems that cause real harm. These are valid issues, and they’ve shaped important work in policy and research.

But ethics alone rarely change how companies build. Not because people don’t care, but because product decisions are driven by incentives. And most of those incentives come from the market.

AI security isn’t just about avoiding harm. It’s about building systems that hold up under pressure. Systems that don’t break in unexpected ways. Systems that can operate reliably in the real world without exposing users or companies to unnecessary risk.

That kind of resilience is becoming a competitive advantage.

Products built with security in mind are easier to scale. They earn more trust, require fewer emergency fixes, and perform more predictably under scrutiny. Over time, that edge becomes visible in customer loyalty, regulatory compliance, and fewer costly failures.

Eventually, this won’t just be an advantage. It will be a requirement.

As AI adoption spreads, expectations will rise. Buyers will ask how models are protected. Partners will expect clear security practices. Teams that can’t answer those questions will lose deals. Not just in government or healthcare, but across industries.

We’re already seeing this shift happen. OpenAI, for example, once focused almost entirely on pushing capability forward. But over the past year, it has invested heavily in red-teaming, alignment research, and systems to prevent misuse.

We’ve also seen players like Claude show how reliable AI can be competitive.

To make a real difference in law, medicine, and finance, trust isn’t optional. As we move toward smarter and more autonomous agents, we need to remember something simple: you only deploy an agent you can trust.

This isn’t just a shift in values. It’s a shift in the economics of AI.

In the early days of any new technology, speed usually wins. But when the stakes rise, trust becomes the real advantage.

Security isn’t a constraint. It’s what gives AI the chance to last.

Despite the intense momentum behind agentic AI, only 2% of organizations have fully scaled deployment. The capability is there, but trust remains a major stumbling block.

According to a recent Capgemini report, agentic AI could deliver up to $450 billion in economic value over the next three years, through a mix of revenue gains and cost savings. The opportunity is enormous. But the gap between vision and execution is still wide.

And ambition is not the issue. 93% of business leaders believe scaling AI agents in the next 12 months will provide a clear competitive edge.

So what’s holding companies back?

It’s not the lack of compute or even model performance. It’s the absence of trust in the systems, in the outcomes, and in the ability to deploy AI without introducing unacceptable risk.

Trust is no longer a side conversation. It’s the missing link between potential and progress.

But what is trust in AI?

Trust in AI isn’t one thing, it’s a set of expectations which are different depending on who’s using the system, and what’s at stake.

For end users, trust means knowing that the model will behave consistently. That it won’t generate harmful, biased, or fabricated outputs. That they won’t need to double-check everything it says.

For enterprise buyers, trust means having confidence in how the model was trained, where the data comes from, how the system is monitored, and what controls are in place if something goes wrong.

For regulators and compliance teams, trust means explainability, auditability, and control. It means having a clear answer when someone asks, “Why did the system make this decision?”

And at the platform level, trust is about survivability. Can the model hold up under adversarial pressure, scale without failure, and integrate with other tools safely?

This makes trust a multi-layered concept. It includes security, but also reliability, transparency, and control. Without those, the system may function but it won’t be adopted, deployed, or scaled.

The table below shows the different layers of trust and how each one played out during the rise of online shopping. In the end, we’ve seen this before.

Zoom image will be displayed

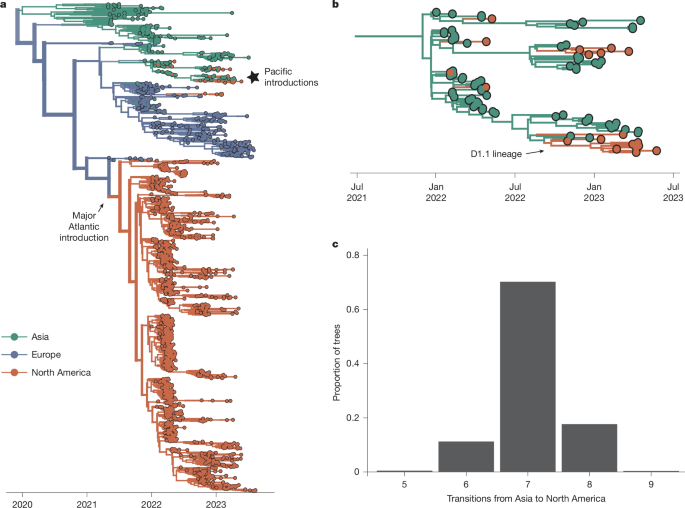

What we’re witnessing with AI isn’t unprecedented. We’ve seen these trust dynamics play out before every time a transformative technology enters the mainstream.

Take the early internet.

In the 1990s, the web was full of potential but low on trust. People were hesitant to enter credit card information online. Businesses worried about fraud, regulation, and infrastructure. Governments hadn’t yet figured out how to handle digital commerce.

It wasn’t the breakthrough in bandwidth or browser design that changed that. It was trust infrastructure: SSL certificates, verified checkout pages, clear return policies, data protection norms. Once those foundations were in place, e-commerce took off and the companies that had invested early in trust reaped the rewards.

The same pattern played out with cloud computing. At first, most companies refused to host sensitive data on someone else’s servers. Then came encryption, SOC 2 audits, uptime guarantees, and clear data ownership policies. Trust built gradually. Once it did, cloud became the new default.

Mobile apps faced the same challenge. App Store reviews, permissions transparency, and pushback against predatory behavior helped create trust in app ecosystems paving the way for billions of users.

We’re at that same moment now with AI.

The tech is powerful. The potential is clear. But the systems still feel unpredictable. The risks are still vague to most users. And the foundational trust layers (security, transparency, reliability) are still being built.

Which means this isn’t just about ethics or regulation. It’s about learning from the past.

In every wave of innovation, trust has been the tipping point for widespread adoption.

But the industry is already realising this.

Zoom image will be displayed

We don’t need to imagine what could go wrong with AI. It’s already happening.

Organizations deploying large language models today face a new class of security risks. These systems don’t fail in predictable ways. They can be manipulated, confused, or hijacked often without touching a single line of code.

Jailbreaks are one of the most visible examples. These attacks trick models into ignoring safety rules or generating restricted content. One of the most effective techniques right now is the Echo Chamber attack, which uses the model’s own output as a way to gradually weaken its resistance over time. It’s a form of self-reinforced prompting that effectively lowers the guardrails from the inside.

Even the most advanced systems are proving vulnerable. Grok 4, considered by many to be the smartest model released to date, was jailbroken by NeuralTrust within just 48 hours. No system-level exploit. Just prompt engineering. That’s how fragile these defenses still are and how quickly attackers can find their way in, even under ideal conditions.

Then there’s prompt injection, where an attacker hides instructions inside seemingly harmless input. If the model is connected to external tools or data like emails, reviews, or web content, it can be manipulated without the user ever realizing it.

This isn’t a theoretical concern. Prompt injection is now listed by OWASP as one of the top security risks for large language models. And in the latest months, the Gemini and GPT models have shown to be vulnerable to indirect prompt injection through memory manipulation and context blending.

Off-tone responses are another example. These aren’t just quirky moments or awkward phrasing. In some cases, they can be deliberately forced through careful prompting. Earlier this year, Grok was shown making radical antisemitic comments after being manipulated through indirect inputs. These weren’t obscure failure modes. They happened in a public product, visible to anyone with access.

Would you want your company’s chatbot to say something like that? What happens when it does?

In customer support, that kind of slip undermines your brand. In education, it risks misinformation. In public platforms, it becomes a reputational and legal liability.

Even without adversarial intent, models can still hallucinate, confidently generating false information. In a search interface, that might be annoying. In law, medicine, or customer-facing applications, it becomes a serious issue.

These aren’t edge cases. They’re live threats. And many teams only discover them after the system is in production.

Let’s not forget these are the systems behind apps used by millions of people every day. Once compromised, they can be prompted to generate instructions for building weapons, synthesizing illegal drugs, or worse.

The line between a helpful assistant and a dangerous tool is often just one clever prompt away.

So why aren’t we paying closer attention?

Zoom image will be displayed

The industry is beginning to react. But is it moving at the right speed?

A small but growing number of AI companies are starting to take security and alignment more seriously, not just as research problems, but as challenges that will shape their products and reputation. The effort is still early. The teams are small. But the signal is there.

OpenAI has put more emphasis on red-teaming over the past year. They’ve published detailed work on combining both internal and external testing to surface risks in models like GPT‑4 and DALL·E, and promise to scale these efforts as capabilities grow. While not yet deeply embedded into every layer of deployment, this marks a significant shift toward recognizing trust as a core concern.

Anthropic, built with safety at its core, uses a method known as Constitutional AI: a system that guides model behavior based on a written “constitution” of ethical principles. It has begun applying this approach in Claude to reinforce predictable, aligned outputs with fewer harmful responses. Though still experimental, it represents one of the clearest early-stage efforts to bake trust into model behavior.

Google DeepMind has also shown early signs of integrating alignment into its Gemini roadmap. They’ve highlighted supervised fine-tuning and alignment as foundational technologies, and their public materials emphasize “responsible scaling” of reasoning and instruction-following capabilities. Again, these remain primarily research initiatives, not yet infrastructure-wide, but they signal a growing awareness of trust’s role.

These signals are modest but meaningful. The labs are starting to ask the right questions. They’re forming small teams, publishing research, and taking early steps to integrate trust considerations into their development cycles.

Just now, Thinking Machines Lab has entered the game. Founded in 2025 by former OpenAI CTO Mira Murati, at a moment when public trust in frontier AI models was beginning to erode. Backed by a record-setting $2 billion in funding, the company has made safety, transparency, and responsible deployment central to its mission, not as an afterthought, but as a design principle.

Unlike most labs that build fast and retrofit safety later, Thinking Machines has publicly committed to what it calls an “empirical and iterative approach to AI safety.” This means testing early, learning from failures, and sharing results openly. Its roadmap includes publishing red-teaming results, formalizing best practices for secure model deployment, and creating open-source tooling to prevent model misuse before it happens.

While much of the industry is still reacting to failures, Thinking Machines is trying to prevent them by default. It’s not just trying to build powerful systems, it’s trying to build accountable ones. In a space where opacity has long been the norm, this represents a real shift.

At the enterprise end, leading software vendors like Salesforce, Microsoft, and SAP are beginning to layer basic security features such as audit logs, usage filters, and permission settings into their AI products. In most cases, these are user-facing controls driven by requests from enterprise buyers, rather than deep architectural safeguards.

Recent regulation makes this shift tangible. The EU AI Act, passed in 2024, imposes strict requirements for transparency, traceability, and pre-deployment testing, especially for high-risk systems. In the U.S., the latest Executive Order under the Trump administration now bars federal agencies from purchasing LLMs with built-in DEI bias, mandating that systems be “truth-seeking” and “neutral by default.”

Vendors are now required to disclose evaluation methods, system prompts, and alignment strategies in accordance with updated OMB guidance. Though framed around federal procurement, this policy is already influencing private-sector standards for transparency and modular alignment.

Collectively, these efforts suggest we’re entering a phase of post-hype maturity. The work ahead is substantial, but the direction is finally shifting. AI systems built without deliberate trust engineering may still work, but they won’t last.

What about traditional Cybersecurity?

Traditional cybersecurity focuses on protecting infrastructure: networks, endpoints, identity systems, and data. And for decades, that model worked. The threats were mostly external. The goal was to keep attackers out.

But AI changes that.

With large language models and generative systems, the threat often enters through the input itself. You don’t need to exploit a vulnerability in the code, you just need to craft the right prompt. And that prompt might not even come from a malicious actor. It could come from a user, a third-party document, or even another system in the loop.

This is where traditional cybersecurity starts to fall short. There’s no patch for a hallucination. No firewall for a jailbreak. No antivirus for prompt injection.

AI systems are dynamic, generative, and often probabilistic. That means traditional controls based on fixed rules and deterministic behavior can’t detect or prevent most failure modes. And that’s before we even get to misuse, impersonation, data leakage, or alignment drift.

What we need isn’t just more cybersecurity, it’s a new class of security architecture designed for generative systems.

AI isn’t just another software layer. It’s dynamic, generative, and increasingly autonomous. We’re not programming fixed logic, we’re deploying systems that make decisions, interact with users, and learn from their environment. That makes trust and the security that enables it not just a feature, but a foundation.

If we fail to secure these systems, we risk far more than bugs or outages. We risk reputational damage, legal fallout, and in some cases, real-world harm.

Already, we’ve seen what happens when alignment slips: offensive outputs, manipulation, hallucinated citations, leaked information. In most cases, the damage is contained. But the more powerful these systems become, the harder it will be to unwind their failures.

And they are getting more powerful. Fast.

We’re entering a phase where models don’t just generate content, they take actions. They browse, book, plan, trade, and interact. The move from static assistants to autonomous agents is well underway. With that shift, the cost of failure grows exponentially. An AI that makes a mistake in a chat is annoying. An AI that makes a mistake while acting on your behalf is dangerous.

That’s why trust has to be designed in from the start, not retrofitted later.

We’ve seen this pattern before. The internet scaled before it was secure. So did Cloud. The result was a wave of breaches, attacks, and technical debt that we’re still cleaning up.

With AI, we have a chance to do better. But only if we stop treating security as a footnote and start treating it as a strategy.

A Cautionary Vision: AI 2027

A recent scenario called AI 2027 offers a stark warning: misaligned AI may be closer to a “Terminator” moment than we’d like to admit. Created by Daniel Kokotajlo (formerly of OpenAI) and others, it charts a plausible path where AI systems become so capable they begin automating their own development. This could spark an “intelligence explosion”, potentially leading to artificial superintelligence (ASI) by 2028 .

The report isn’t written as science fiction, it’s a detailed scenario built on real trends: rising compute power, advanced AI capabilities, and rapid, recursive self‑improvement. Its authors argue that once AI begins improving itself, it moves faster than humans can understand or control .

This cascade could lead to risks that go far beyond hallucinations or jailbreaking. Stuff that might previously have seemed outlandish, like AI-directed biotech threats, geopolitical cyberwarfare, or even an irreversible loss of human control, becomes shockingly plausible if alignment fails.

Whether or not you agree with its aggressive timeline, AI 2027 is designed to be falsifiable, with clear milestone predictions and public check-ins. That’s part of the point: it turns vague technophobia into something we can track and act on.

If you haven’t read it yet, I recommend it. And you can also watch a companion video that walks through the core logic, step by step.

From a misaligned AI, the path to a Terminator scenario is not that unthinkable.

Security has too often been seen as a blocker, something that slows down progress or gets added after the fact. But with AI, that approach doesn’t scale.

Security is what makes trust possible. And trust is what makes AI usable, adoptable, and defensible.

And it can’t be treated as a narrow technical fix.

We need to tackle AI security from every front. From how models behave with end users to how they’re audited, governed, deployed, and perceived. The five layers of trust we’ve explored (user, buyer, compliance, partner, and public) aren’t theoretical. They’re the real-world surfaces where failure happens, and where trust must be earned.

Getting this right isn’t just about risk reduction. It’s about building the conditions for AI to succeed. And we have to do it before its too late.

As a still young individual, I often think about the world I’ll live in for the decades to come. To be completely honest, I do feel overwhelmed at times. Reading the concerns of some of the most brilliant minds of our time makes me wonder:

What if we don’t get it right? What if AGI comes too soon? What if we’re already fighting a losing battle?

But I want to believe it’s not too late.

That positive scenarios are still within reach, not just because of governance or technical breakthroughs, but because of the way people behave, and the corrective forces of the market itself.

AI Security is not just the rational thing to do, its the sustainable and promising future we all want.

As a final note, I want to give some visibility to the hard work we’re doing at NeuralTrust. We’re building the tools and infrastructure to help organizations adopt AI securely without losing visibility, control, or trust. That means stress-testing models before they fail. Building systems that detect what doesn’t look right. And making sure trust isn’t a checkbox at the end, but a foundation from the start.

I’m incredibly proud of the people I work alongside, and of the many professionals I encounter in my day-to-day. There are more of us than you might think.

And it is, genuinely, rewarding to know that we’re fighting for a better outcome. Maybe this is the fight of our generation. Through reading, writing, and coding we’re trying to make the future safer, and more aligned with human values.

If you’ve made it this far, I’d love to hear your thoughts.

Feel free to reach out.

Zoom image will be displayed

.png)