Hey, everyone. It's another weekend, and I was exploring what to build. So I decided to build a simple yet completely functional load balancer. Let's discuss it in this post.

A load balancer is a component that distributes incoming requests across multiple servers. It is used in large-scale systems where a large number of requests need to be handled efficiently.

In large applications, several instances of the same application are deployed to scale horizontally. To understand how a load balancer and multiple instances of an application help with scalability, let's do some maths.

- Total requests: 1,000,000 per second
- Application instances: 1
- Requests per instance: 1,000,000 / 1 = 1,000,000

So a single instance has to serve all 1,000,000 requests every second. It may fail to keep up, or it may crash. Who knows?

But what if we deploy 5 instances of the same application?

- Total requests: 1,000,000 per second
- Application instances: 5
- Requests per instance: 1,000,000 / 5 = 200,000

Now each instance needs to handle 200,000 requests per second, and together the 5 instances handle all 1,000,000.
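The same arithmetic as a tiny Go function. `perInstance` is a hypothetical helper, not part of the project; it rounds up because, when the total does not divide evenly, some instances end up with one extra request.

```go
package main

import "fmt"

// perInstance returns how many requests per second each instance must handle
// when total is spread evenly over n instances, rounded up for uneven splits.
func perInstance(total, n int) int {
	return (total + n - 1) / n
}

func main() {
	for _, n := range []int{1, 5} {
		fmt.Printf("%d instance(s): %d req/s each\n", n, perInstance(1_000_000, n))
	}
}
```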

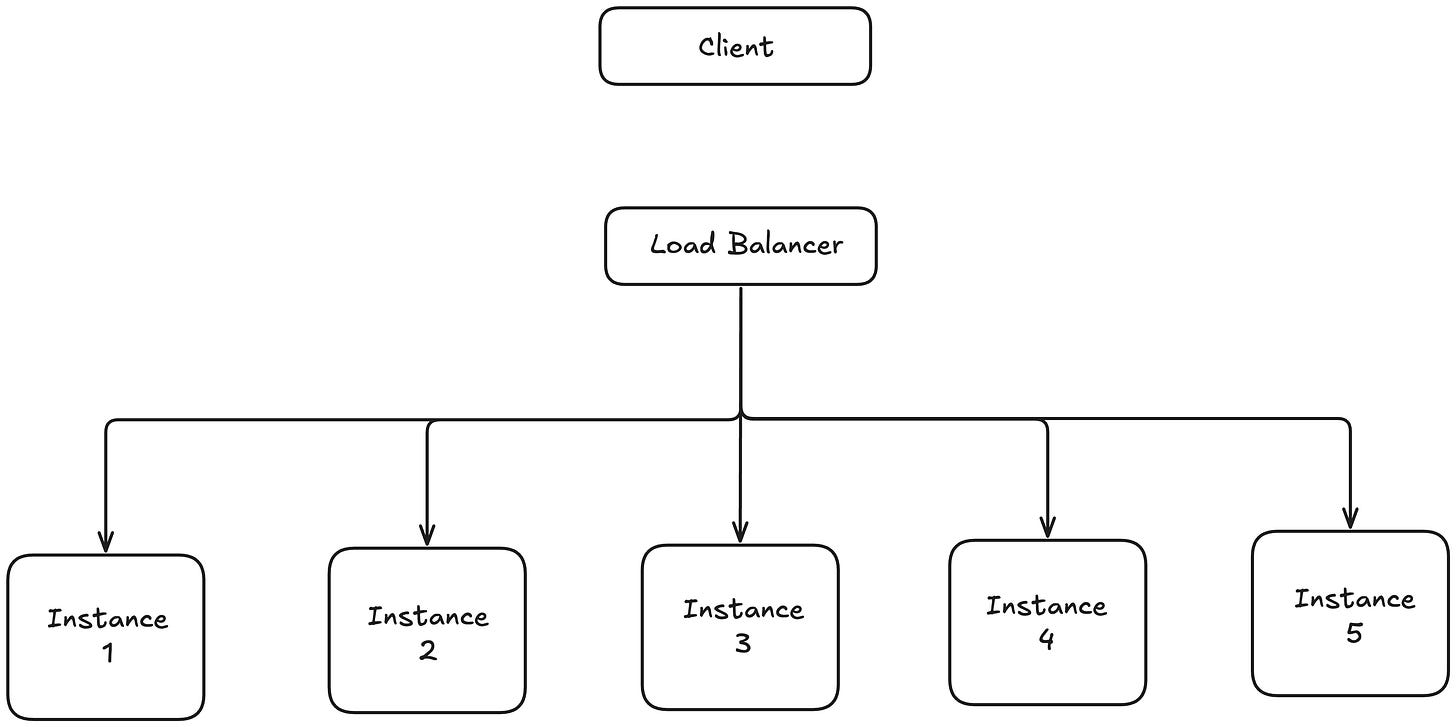

But the question is: how do we distribute requests uniformly across these 5 instances? This is where the load balancer comes into play. See the diagram below for more clarity.

Instead of sending requests directly to the application, our client sends them to the load balancer. The load balancer then forwards each request to an appropriate instance so that the load is spread evenly.

There are several popular strategies that a load balancer can follow to distribute requests across instances. Each strategy has its own use case depending on the type of workload.

Round Robin: In this strategy, the load balancer sends each new request to the next server in line. Once it reaches the end of the list, it starts again from the beginning. This ensures that traffic is evenly distributed in a circular fashion.

Least Connection: Here, the load balancer forwards the request to the server with the fewest active connections at that moment. This works well when the amount of work per request varies significantly, because a server busy with slow requests stops receiving new ones until it catches up.
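A minimal Least Connection sketch, assuming backends are identified by their URL strings. `LeastConn`, `Acquire` and `Release` are hypothetical names. The key point is that the balancer must call `Release` when a proxied request finishes, otherwise the counters only ever grow.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// LeastConn tracks in-flight requests per backend (hypothetical type).
type LeastConn struct {
	mu     sync.Mutex
	active map[string]int // backend URL -> in-flight requests
	order  []string       // stable order, so ties go to the earlier backend
}

func NewLeastConn(backends []string) *LeastConn {
	lc := &LeastConn{active: make(map[string]int)}
	for _, b := range backends {
		lc.active[b] = 0
		lc.order = append(lc.order, b)
	}
	return lc
}

// Acquire picks the backend with the fewest active connections and counts
// the new request against it.
func (lc *LeastConn) Acquire() (string, error) {
	lc.mu.Lock()
	defer lc.mu.Unlock()
	if len(lc.order) == 0 {
		return "", errors.New("no backends available")
	}
	best := lc.order[0]
	for _, b := range lc.order[1:] {
		if lc.active[b] < lc.active[best] {
			best = b
		}
	}
	lc.active[best]++
	return best, nil
}

// Release must be called once the backend has finished the request.
func (lc *LeastConn) Release(backend string) {
	lc.mu.Lock()
	defer lc.mu.Unlock()
	if lc.active[backend] > 0 {
		lc.active[backend]--
	}
}

func main() {
	lc := NewLeastConn([]string{"A", "B"})
	a, _ := lc.Acquire() // A: tie, first in order
	b, _ := lc.Acquire() // B: A already has one request
	lc.Release(a)        // A finishes its request
	c, _ := lc.Acquire() // A again: it is back to zero
	fmt.Println(a, b, c) // A B A
}
```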

IP Hash: With IP Hash, a hash of the client’s IP address determines which server handles the request. This helps maintain session persistence: as long as the list of servers does not change, the same client is always directed to the same server.
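A minimal IP Hash sketch. `pickByIP` is a hypothetical helper, and FNV-1a (from Go's `hash/fnv`) is just one reasonable hash choice. The port is stripped from the remote address first, since the same client connects from different ports.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"net"
)

// pickByIP maps a client address onto one backend. Same IP and same
// backend list always give the same backend.
func pickByIP(remoteAddr string, backends []string) (string, error) {
	if len(backends) == 0 {
		return "", fmt.Errorf("no backends available")
	}
	host, _, err := net.SplitHostPort(remoteAddr)
	if err != nil {
		host = remoteAddr // assume it was already a bare IP
	}
	h := fnv.New32a()
	h.Write([]byte(host))
	return backends[h.Sum32()%uint32(len(backends))], nil
}

func main() {
	backends := []string{"http://localhost:3001", "http://localhost:3002", "http://localhost:3003"}
	for _, addr := range []string{"203.0.113.7:51000", "203.0.113.7:52000", "198.51.100.4:40000"} {
		b, _ := pickByIP(addr, backends)
		fmt.Println(addr, "->", b)
	}
}
```

With plain modulo hashing, adding or removing a backend remaps most clients; consistent hashing is the usual fix when that matters.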

Weighted Round Robin: This is a variation of Round Robin, where each server is assigned a weight based on its capacity. Servers with higher weights receive more requests. It's useful when instances have different processing power.
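One way to implement Weighted Round Robin is the "smooth" variant popularized by nginx, sketched below with hypothetical names (`SmoothWRR`, `Add`, `Next`). Instead of sending a burst of consecutive requests to the heaviest server, it interleaves picks while still honouring the weights.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// weighted is one backend plus the running score used by the algorithm.
type weighted struct {
	url     string
	weight  int // static capacity weight
	current int // dynamic score, adjusted on every pick
}

// SmoothWRR implements smooth weighted round robin.
type SmoothWRR struct {
	mu      sync.Mutex
	servers []*weighted
}

func (s *SmoothWRR) Add(url string, weight int) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.servers = append(s.servers, &weighted{url: url, weight: weight})
}

// Next raises every score by its weight, picks the highest score, then
// lowers the winner by the total weight so the others can catch up.
func (s *SmoothWRR) Next() (string, error) {
	s.mu.Lock()
	defer s.mu.Unlock()
	if len(s.servers) == 0 {
		return "", errors.New("no servers available")
	}
	total := 0
	var best *weighted
	for _, w := range s.servers {
		w.current += w.weight
		total += w.weight
		if best == nil || w.current > best.current {
			best = w
		}
	}
	best.current -= total
	return best.url, nil
}

func main() {
	wrr := &SmoothWRR{}
	wrr.Add("A", 5)
	wrr.Add("B", 1)
	wrr.Add("C", 1)
	for i := 0; i < 7; i++ {
		u, _ := wrr.Next()
		fmt.Print(u, " ")
	}
	fmt.Println() // sequence: A A B A C A A
}
```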

In our code, we will implement Round Robin, but we will structure the code so that any other strategy can be plugged in later.

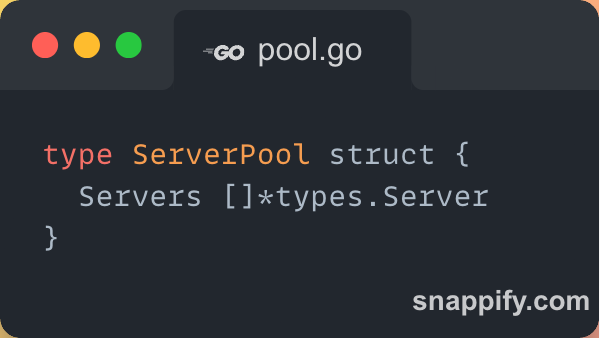

The piece of code below is responsible for managing our pool of backend servers. Instead of dealing with each server separately, we group them together in a struct called ServerPool.

Here, Servers is a slice of pointers to types.Server. That means every server we add to this pool will be stored here.

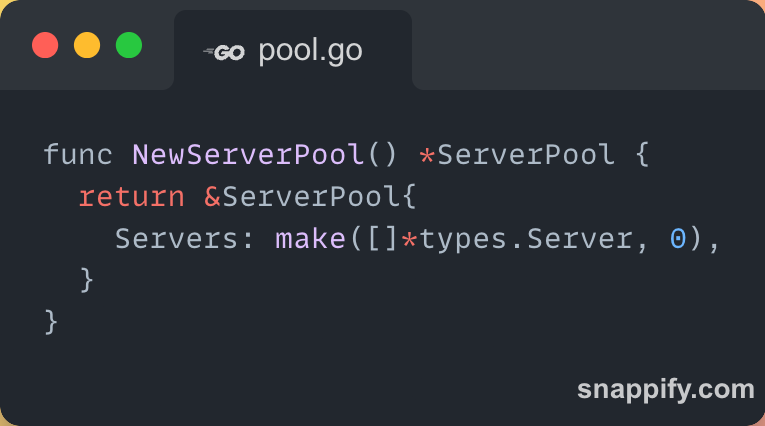

This function initializes a new empty list of servers and returns a pointer to the ServerPool. We'll use this function to create our server pool when the program starts.

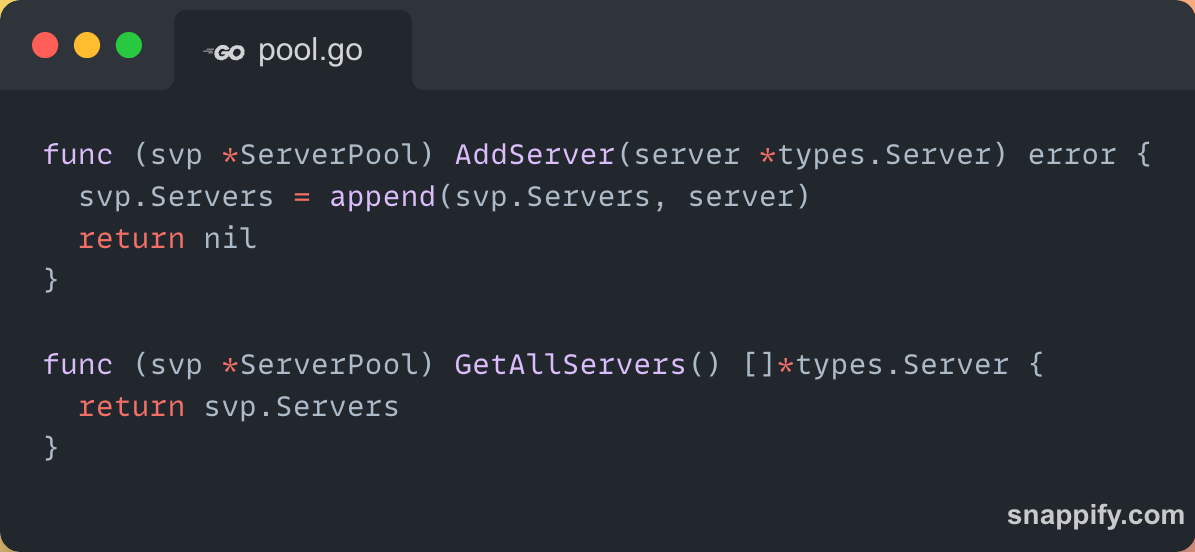

Every time we want to add a new backend server, we call this AddServer method. It simply appends the new server to our list.

Finally, to get the list of all available servers, we use the GetAllServers method.

This returns all the servers currently present in the pool. Later, when the load balancer needs to choose a server, it can pick from this list.
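Since the pool code above is only shown as images, here is a text sketch of what it describes. It is not copied from `pool.go`: `Server` is a trimmed stand-in for `types.Server`, and the `ID` field type is an assumption. Note that the pool has no lock, so it assumes servers are only added at startup, before any request is served.

```go
package main

import "fmt"

// Server is a trimmed stand-in for types.Server.
type Server struct {
	ID  int
	URL string
}

// ServerPool groups all backend servers together.
type ServerPool struct {
	Servers []*Server
}

// NewServerPool starts with an empty, non-nil list of servers.
func NewServerPool() *ServerPool {
	return &ServerPool{Servers: []*Server{}}
}

// AddServer appends a backend to the pool.
func (p *ServerPool) AddServer(s *Server) {
	p.Servers = append(p.Servers, s)
}

// GetAllServers returns every server currently in the pool.
func (p *ServerPool) GetAllServers() []*Server {
	return p.Servers
}

func main() {
	pool := NewServerPool()
	pool.AddServer(&Server{ID: 1, URL: "http://localhost:3001"})
	pool.AddServer(&Server{ID: 2, URL: "http://localhost:3002"})
	for _, s := range pool.GetAllServers() {
		fmt.Println(s.ID, s.URL)
	}
}
```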

File Source: https://github.com/x-sushant-x/Balancer/blob/main/pool/pool.go

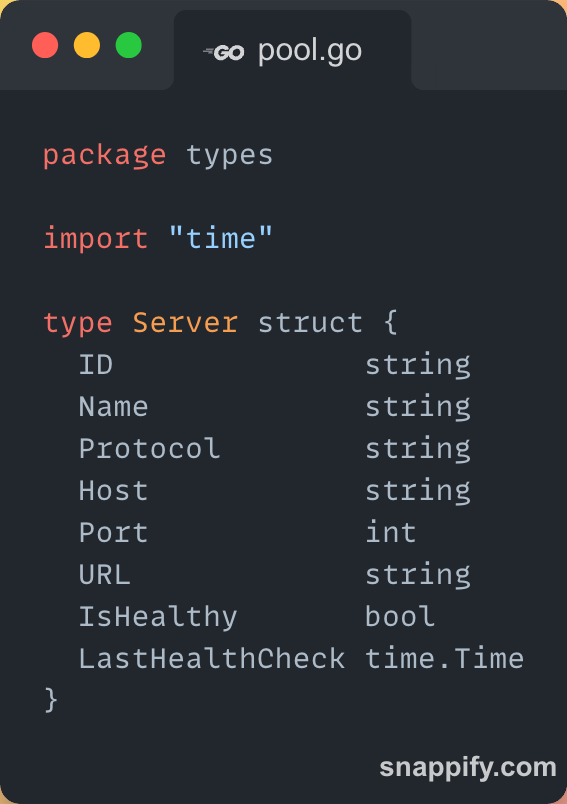

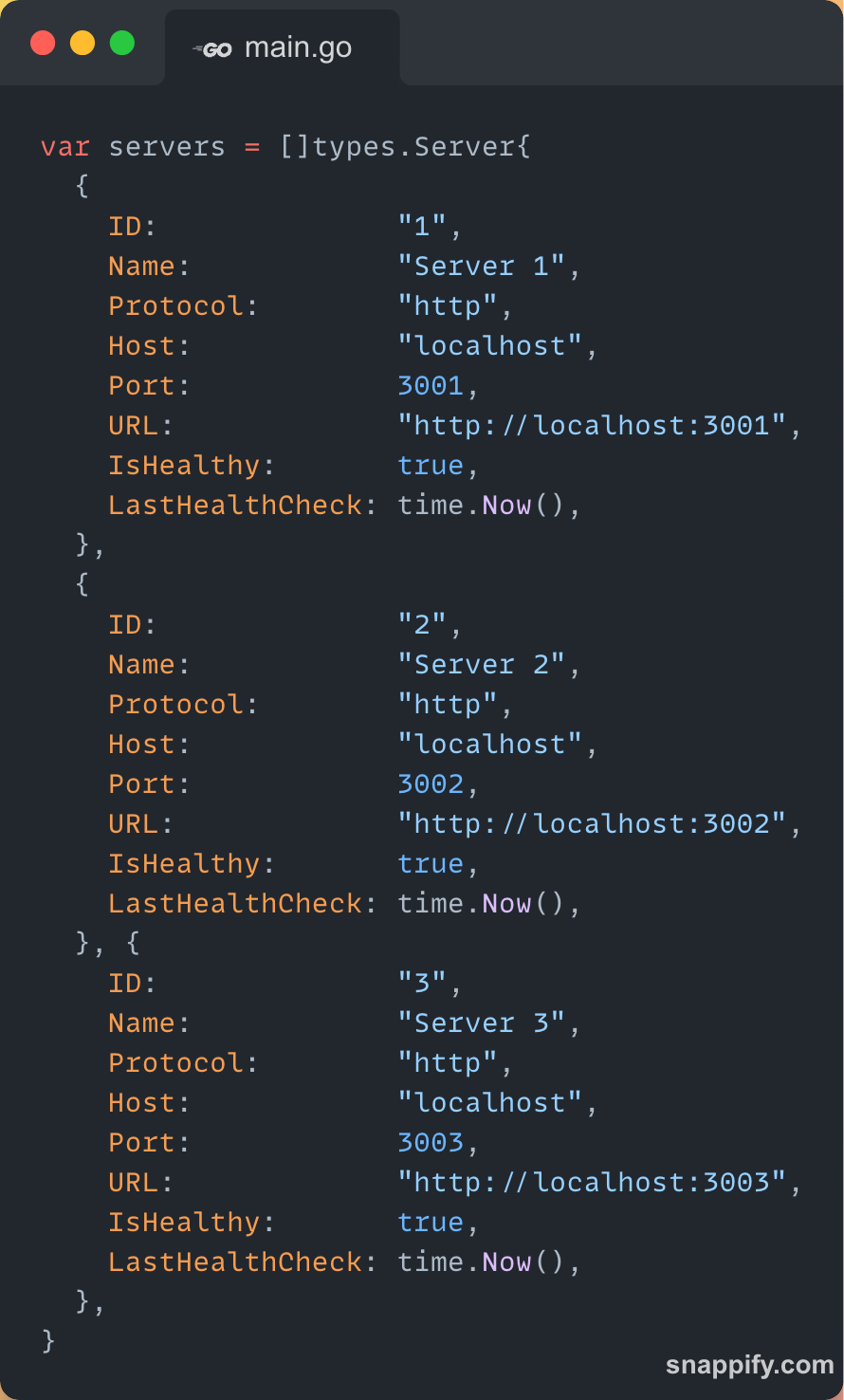

To keep track of each backend server, we define a Server struct. This struct holds all the important information related to a single server instance.

ID: A unique identifier for the server. This can help us distinguish one server from another in the pool.

Name: A human-readable name like "Server 1" or "Server A" to help us refer to the server more easily.

Protocol: This tells us whether the server is using http, https, or some other protocol.

Host: The address of the server, such as localhost or an IP like 192.168.1.10.

Port: The port number on which the server is listening. For example, 3000 or 8080.

URL: A full URL string that combines protocol, host, and port. This makes it easy to send requests to the server.

IsHealthy: A boolean flag that tells us whether the server is currently healthy or not. The load balancer can use this to avoid sending requests to servers that are down.

LastHealthCheck: This stores the time when the last health check was performed on the server. It helps us monitor when the server was last verified to be healthy.
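A text version of the struct described above. The field types (`int` for `ID` and `Port`, `time.Time` for `LastHealthCheck`) are assumptions, and `buildURL` is a hypothetical helper showing how `URL` can be derived from `Protocol`, `Host` and `Port`; `net.JoinHostPort` also brackets IPv6 hosts correctly.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
	"time"
)

// Server holds everything the balancer knows about one backend.
type Server struct {
	ID              int
	Name            string
	Protocol        string // "http", "https", ...
	Host            string // "localhost", "192.168.1.10", ...
	Port            int    // 3000, 8080, ...
	URL             string // protocol + host + port, ready to use
	IsHealthy       bool
	LastHealthCheck time.Time
}

// buildURL combines protocol, host and port into a full base URL.
func buildURL(protocol, host string, port int) string {
	return protocol + "://" + net.JoinHostPort(host, strconv.Itoa(port))
}

func main() {
	s := Server{ID: 1, Name: "Server 1", Protocol: "http", Host: "localhost", Port: 3001,
		IsHealthy: true, LastHealthCheck: time.Now()}
	s.URL = buildURL(s.Protocol, s.Host, s.Port)
	fmt.Println(s.Name, s.URL) // Server 1 http://localhost:3001
}
```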

File Source: https://github.com/x-sushant-x/Balancer/blob/main/types/types.go

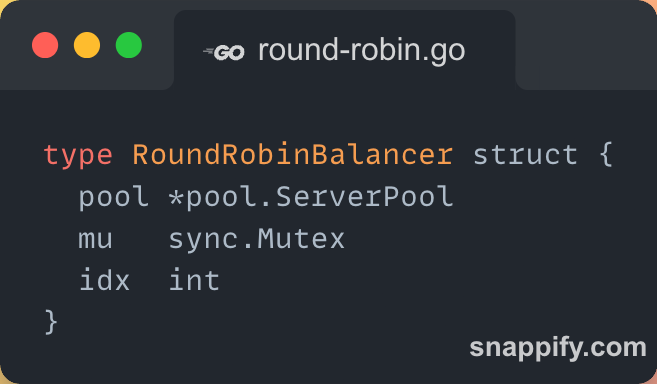

This piece of code implements the Round Robin strategy for our load balancer. The goal here is to pick the next server from the pool in a circular order—one after another—so that the load is evenly distributed.

pool holds the list of all available servers.

mu is a mutex that makes sure two requests don’t try to access or update the index at the same time. This is important because our load balancer might be handling multiple requests at once.

idx keeps track of the last server we selected. We’ll use this to pick the next one.

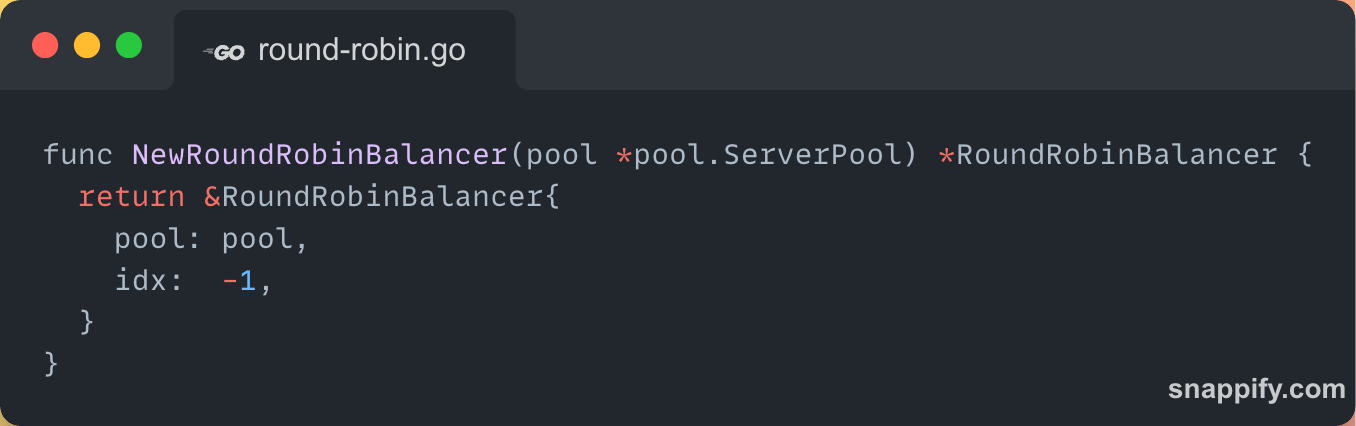

Here, we start with idx as -1, which means no server has been selected yet.

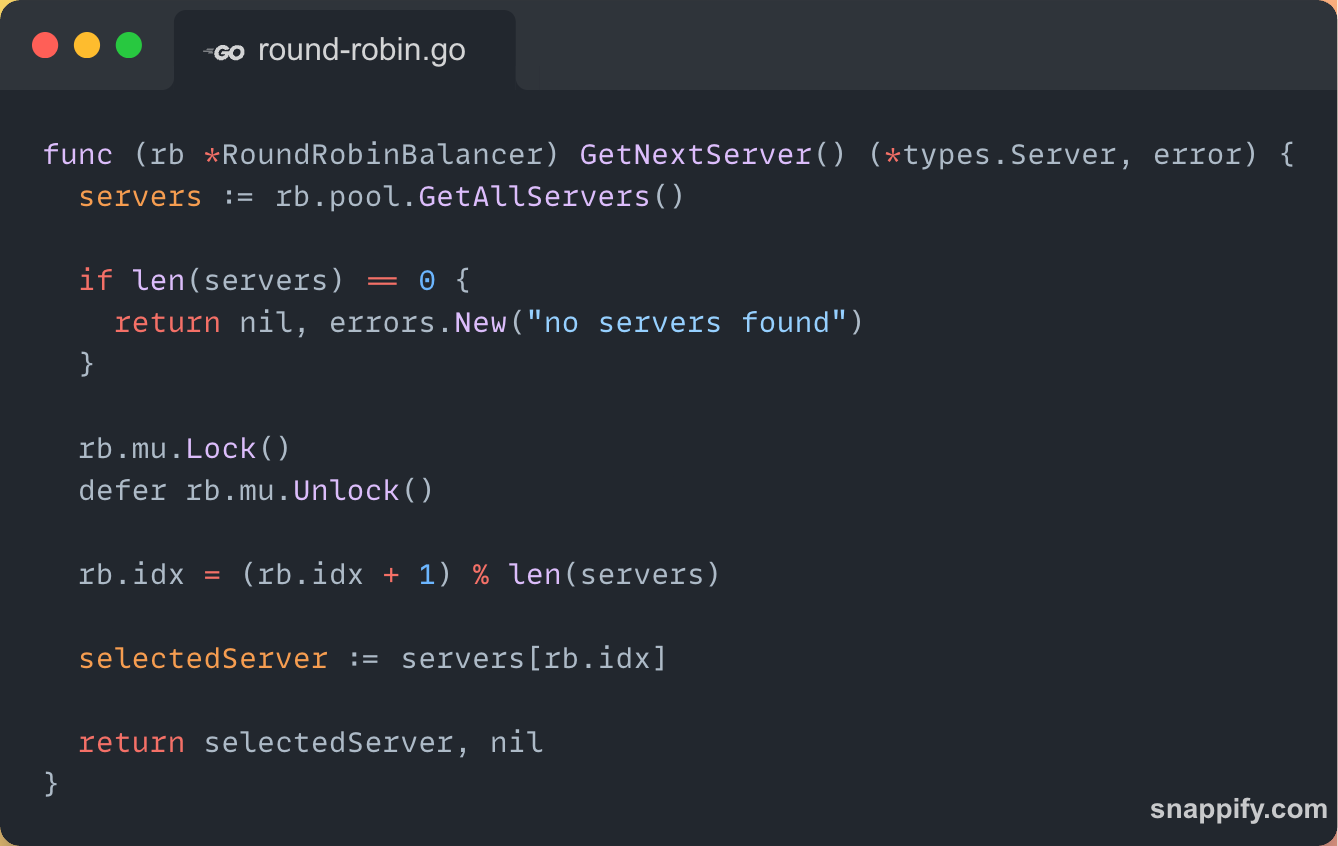

Now comes the core logic in the GetNextServer() method:

We first get the list of all servers. If there are none, we return an error.

We lock access to idx so that only one request can change it at a time. When the method returns, the mutex is unlocked automatically (because of defer).

This line moves to the next server in the list. Once we reach the end, % len(servers) brings us back to the start—creating a circular loop.

So in simple terms:

Whenever a request comes in, the load balancer calls GetNextServer(), picks the next server in line, and sends the request there. This keeps the load evenly distributed across all available servers.

File Source: https://github.com/x-sushant-x/Balancer/blob/main/core/round-robin.go

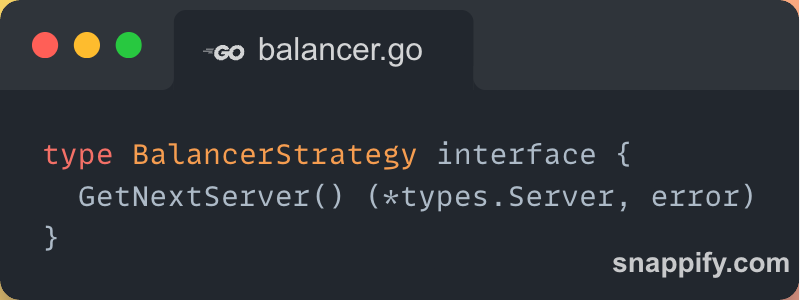

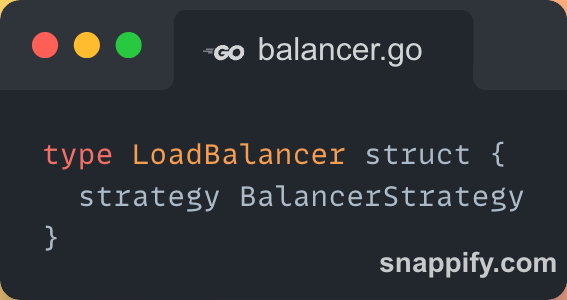

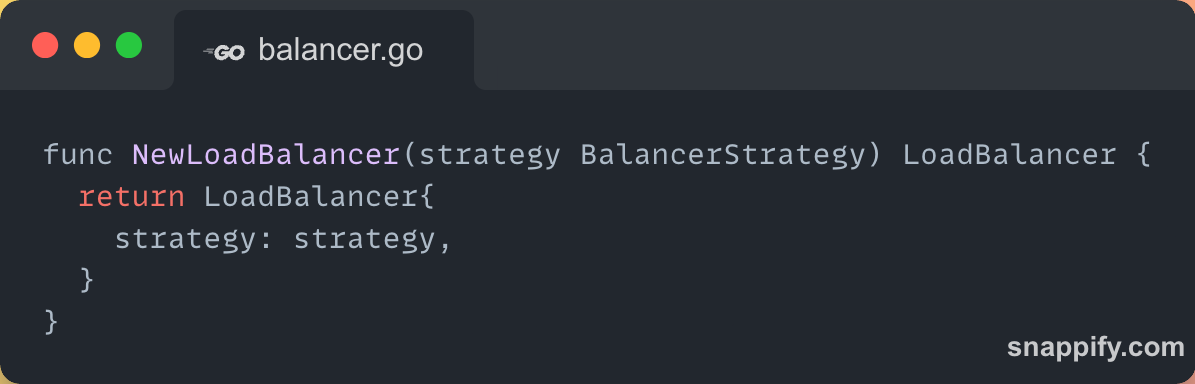

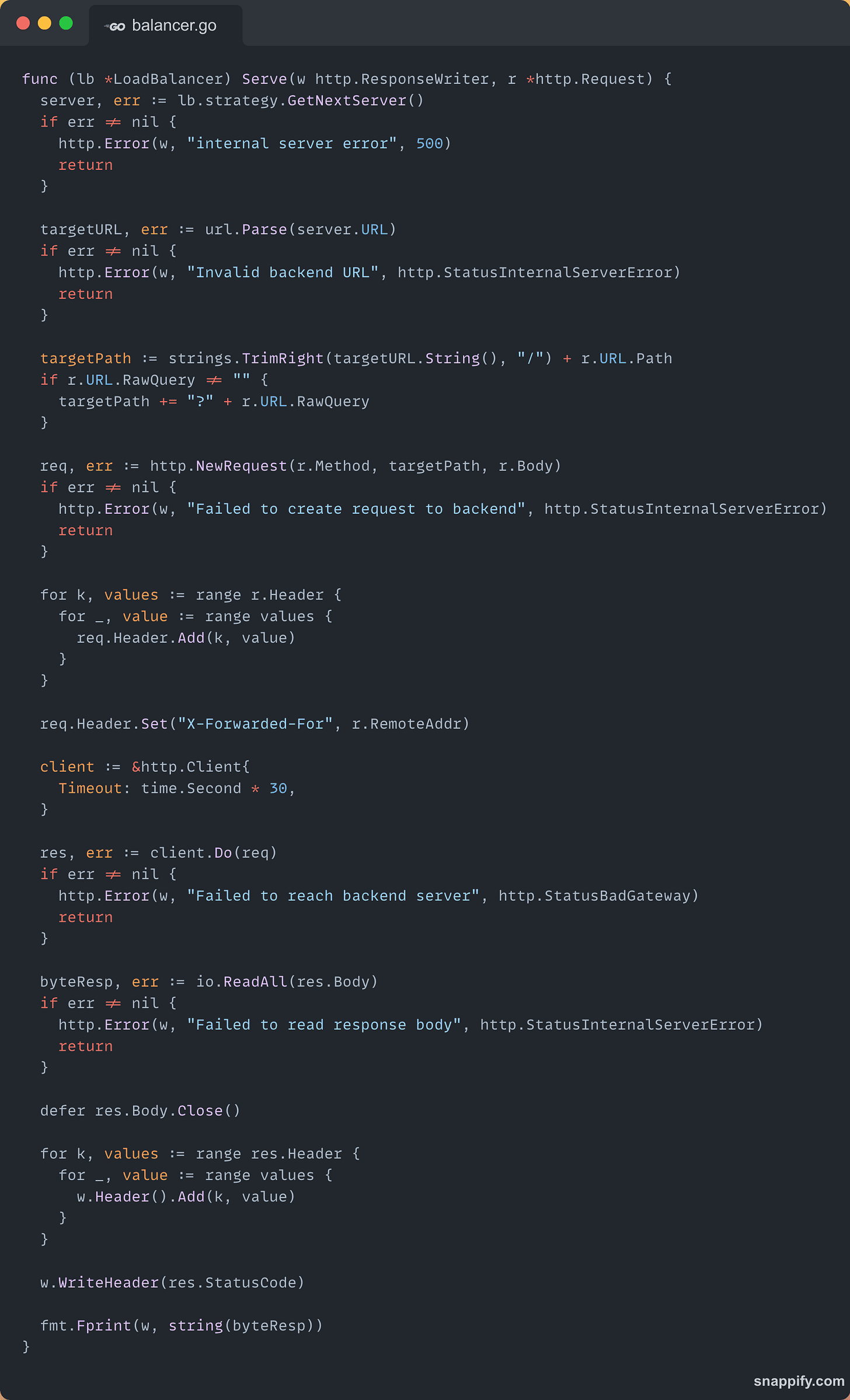

Now that we have a list of servers and a strategy (like Round Robin), the final piece is to actually forward incoming requests to those servers. That’s exactly what this file is doing.

Let’s understand how this works step by step.

This interface expects any load balancing strategy (like Round Robin) to implement a GetNextServer() method. This allows us to swap different strategies without changing the core logic.

This struct holds the selected strategy. It will use that strategy to pick which server the next request should go to.

We use this function to create a new instance of LoadBalancer, and we pass in the strategy we want it to follow.
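The strategy pattern described above, as a sketch. The interface name `Strategy` and the method signature are assumptions; `alwaysFirst` is a hypothetical toy strategy that exists only to show that any type with a matching `GetNextServer` method plugs in without touching `LoadBalancer`.

```go
package main

import (
	"errors"
	"fmt"
)

type Server struct{ URL string }

// Strategy is all the load balancer needs from an algorithm.
type Strategy interface {
	GetNextServer() (*Server, error)
}

// LoadBalancer delegates every "which server?" decision to its strategy.
type LoadBalancer struct {
	strategy Strategy
}

func NewLoadBalancer(strategy Strategy) *LoadBalancer {
	return &LoadBalancer{strategy: strategy}
}

// alwaysFirst is a toy strategy used only for illustration.
type alwaysFirst struct{ servers []*Server }

func (a alwaysFirst) GetNextServer() (*Server, error) {
	if len(a.servers) == 0 {
		return nil, errors.New("no servers available")
	}
	return a.servers[0], nil
}

// Compile-time check that alwaysFirst satisfies Strategy.
var _ Strategy = alwaysFirst{}

func main() {
	lb := NewLoadBalancer(alwaysFirst{servers: []*Server{{URL: "http://localhost:3001"}}})
	s, _ := lb.strategy.GetNextServer()
	fmt.Println(s.URL) // http://localhost:3001
}
```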

The Main Logic: Serve Method

This is the heart of the load balancer. It handles each incoming request and forwards it to the right backend server.

We ask the strategy (like Round Robin) to give us the next available server.

We convert the server’s raw URL string into a proper URL object that we can work with.

We build the full path we want to send the request to, including any query parameters (like ?search=value).

We create a new HTTP request that mirrors the original one (same method like GET/POST, same body).

Then, we copy all headers from the original request.

We also add the X-Forwarded-For header so the backend can see the original client IP.

We send the request using Go’s http.Client, configured with a 30-second timeout. If a backend is slow or unresponsive, the request is abandoned after at most 30 seconds instead of hanging indefinitely.

We read the response body, then copy all response headers from the backend server into the response sent back to the client.

We also make sure to return the same status code and response body.

So in simple words:

A request comes in.

We pick the next available server.

We build a new request and send it to that server.

We copy the response from the server and send it back to the client.

File Source: https://github.com/x-sushant-x/Balancer/blob/main/core/balancer.go

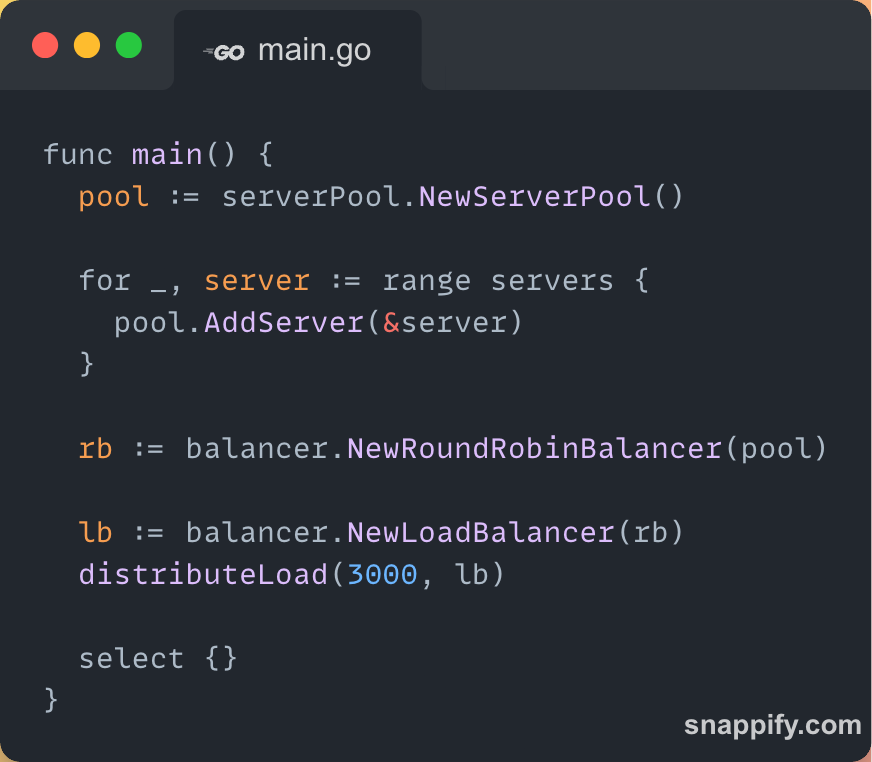

This is the entry point of our load balancer. Everything comes together here—from defining backend servers to starting the load balancer on a specific port.

Defining Our Backend Servers

Here we define three servers. Each server has a unique ID, name, and a URL where it's running (e.g., http://localhost:3001). These are the backend servers that will actually handle the incoming requests. We also set IsHealthy to true and record the current time as LastHealthCheck. For now, we’re manually marking them as healthy.

We create a new server pool to manage our list of backend servers. Think of this as a container that holds all the available servers.

Next, we loop through each server in our predefined list and add it to the pool. This makes them available to the load balancer.

We then create a Round Robin balancer and pass our server pool to it. This ensures that requests are distributed to all servers in turn.

Finally, we create the actual load balancer and tell it to use the Round Robin strategy.

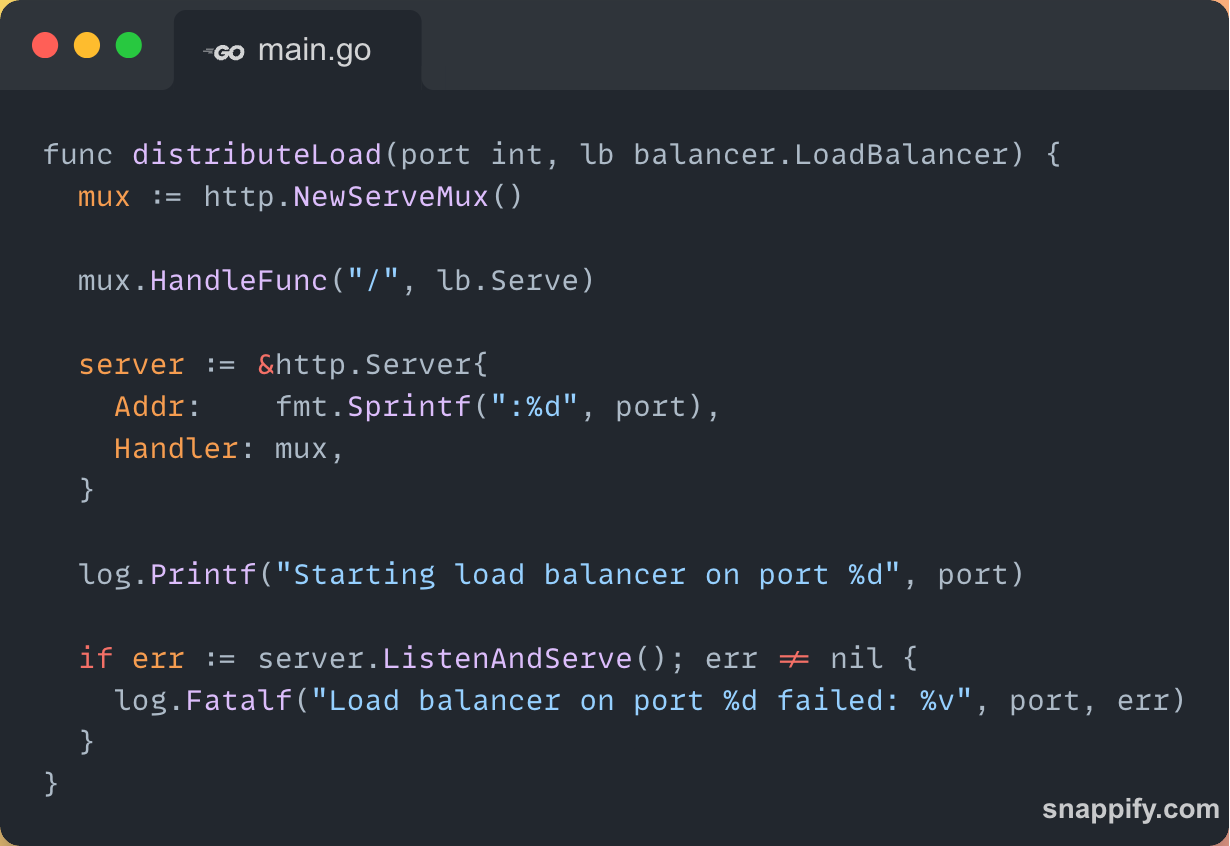

The distributeLoad function sets up a new HTTP server on port 3000. This is the port where clients send all their requests. The load balancer receives those requests and forwards them to the appropriate backend server.

We define a new HTTP router and tell it to handle all incoming requests ("/") using our load balancer’s Serve method.

Next, we create an HTTP server bound to the specified port (3000 in our case) and attach our router to it.

File Source: https://github.com/x-sushant-x/Balancer/blob/main/main.go

Complete Source Code: https://github.com/x-sushant-x/Balancer

So what happens when a client makes a request to http://localhost:3000/?

The load balancer receives it.

It asks the Round Robin strategy to pick the next server.

It forwards the request to that server (like http://localhost:3001/).

The response from the backend server is sent back to the client.

There is one more thing that our load balancer is missing, and that is health checking. We need to make sure that our load balancer only sends requests to healthy instances.

We won't implement that in this post. Instead, I'll cover it in a separate post.

You can SUBSCRIBE to my newsletter; it's completely free, and I'll make sure you receive the health checking post as soon as I publish it.
