At Dell Technologies World 2025 this month, one laptop quietly stole the show, and it didn’t even have a GPU.

The new Dell Pro Max Plus is a mobile workstation with a bold twist: It ditches the graphics card for an enterprise-grade discrete NPU.

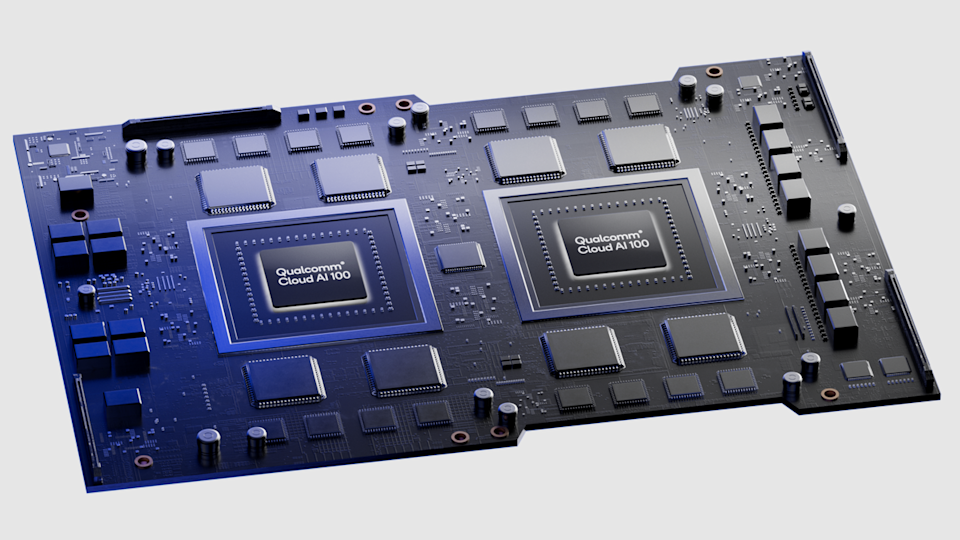

That might sound niche, but it’s a big deal. This is the first notebook we’ve seen that treats AI as the primary workload, not graphics, gaming, or CAD. It’s powered by a dual-chip Qualcomm AI 100 card with 64GB of dedicated memory. Dell ran a 109-billion-parameter Llama 4 model in a live demo on the laptop without an Internet connection or cloud server.

No GPU? No problem

The star of the show is the Qualcomm AI 100 PC Inference Card. It's a dual-SoC module based on Qualcomm's Cloud AI 100 chip, silicon that was originally designed for data centers.

In this configuration, you get 32 AI cores, 64GB of LPDDR4x memory, and around 450 TOPS (trillions of operations per second) of 8-bit AI compute. That’s an order of magnitude more AI power than even the latest Copilot+ PCs.

To make room for this monster, Dell removed the GPU entirely. Unlike other Pro Max Plus configs that offer Nvidia RTX graphics, this model swaps the discrete GPU slot for the Qualcomm card. That’s a trade-off for sure, but if you’re not rendering video or playing games, it’s a smart one.

How does it compare?

Let’s talk raw AI power. Apple’s M4 MacBook Pro has a 16-core Neural Engine that maxes out around 38 TOPS. Intel’s Lunar Lake CPUs hit around 48 TOPS. Snapdragon X Elite sits at 45 TOPS, and AMD’s best integrated NPUs are around 55 TOPS. Dell’s Pro Max Plus? 450. That’s ten times the TOPS of the Snapdragon X Elite.


| Platform | AI Accelerator | Peak TOPS |
| --- | --- | --- |
| Dell Pro Max Plus | Qualcomm AI 100 (discrete) | ~450 |
| Apple MacBook Pro (M4) | Apple Neural Engine (integrated) | ~38 |
| Snapdragon X Elite | Hexagon NPU (integrated) | ~45 |
| Intel Lunar Lake | NPU 4 (integrated) | ~48 |
| AMD Ryzen AI | AMD NPU (integrated) | ~55 |
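To put the table in perspective, the gaps can be worked out directly from the quoted figures. The short Python sketch below does only that arithmetic. The dictionary and function names are illustrative, and the TOPS values are the approximate peak numbers listed above, not independent measurements.

```python
# Approximate peak TOPS figures as quoted in the comparison table above.
PEAK_TOPS = {
    "Dell Pro Max Plus": 450,
    "Apple MacBook Pro (M4)": 38,
    "Snapdragon X Elite": 45,
    "Intel Lunar Lake": 48,
    "AMD Ryzen AI": 55,
}


def ratios_vs(baseline: str, table: dict[str, float]) -> dict[str, float]:
    """Return how many times larger the baseline's TOPS is than each other entry."""
    base = table[baseline]
    return {name: base / tops for name, tops in table.items() if name != baseline}


if __name__ == "__main__":
    for name, ratio in ratios_vs("Dell Pro Max Plus", PEAK_TOPS).items():
        print(f"{name}: {ratio:.1f}x")
```

Peak TOPS is a headline figure, so these ratios say nothing about sustained throughput on a particular model.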

This isn't just about numbers, though. Dell’s card has a 64GB memory pool, meaning it can keep huge models entirely in its own dedicated memory without pulling from system memory.

That’s essential for developers running large language models or vision models locally.
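A rough back-of-the-envelope check shows why the 64GB pool matters for the 109-billion-parameter model from the demo. The sketch below estimates weight storage at a few common precisions. Note the assumptions: the article does not say which precision Dell used, 1GB is treated as 10^9 bytes, and the KV cache, activations, and runtime overhead are ignored, so real requirements would be higher.

```python
CARD_MEMORY_GB = 64   # dedicated memory on the card, per the article
MODEL_PARAMS_B = 109  # parameter count in billions, per the article


def weight_footprint_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (10^9 bytes), weights only."""
    return params_billion * bits_per_weight / 8


def fits_on_card(params_billion: float, bits_per_weight: int,
                 card_gb: float = CARD_MEMORY_GB) -> bool:
    """True if the weights alone fit in the card's memory (an optimistic check)."""
    return weight_footprint_gb(params_billion, bits_per_weight) <= card_gb


if __name__ == "__main__":
    # Precisions chosen for illustration; none is confirmed as Dell's setting.
    for bits in (16, 8, 4):
        size = weight_footprint_gb(MODEL_PARAMS_B, bits)
        verdict = "fits" if fits_on_card(MODEL_PARAMS_B, bits) else "does not fit"
        print(f"{bits}-bit weights: {size:.1f} GB, {verdict} in {CARD_MEMORY_GB} GB")
```

Under these assumptions, only something close to 4-bit weights would fit on the card. That is an inference from the arithmetic, not a confirmed detail of Dell's demo.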

Built for advanced work

It’s important to be mindful of who this machine is actually for. It’s not for gamers, students, or your average office user. Rather, the Pro Max Plus, with its discrete NPU, is aimed at data scientists, AI engineers, and developers who need to run massive models offline, securely, and on the go.

At Dell Technologies World, the company demonstrated its new Pro AI Studio toolkit running on the device. It includes use cases like modifying code in a game engine with natural language and deploying fine-tuned models without touching the cloud.

There are clear advantages here for high-security industries. Running sensitive data through a local model means the data never leaves the device, and there is no network round trip to add latency.

This workstation is effectively an AI edge server you can throw in a backpack. That opens the door for field applications in defense, healthcare, manufacturing, or disaster response: Anywhere fast, private AI inference is needed on the edge.


Looking under the hood

The Qualcomm AI 100 card is built on a 7nm process and uses two chips connected over PCIe. Each one offers 16 AI cores and 32GB of memory. Together, they act as a unified engine with enough bandwidth to handle some of the largest models available today.

In terms of thermal management, the card is designed to operate under a 75W thermal design power, which is considerably more than typical NPUs found in consumer laptops (usually under 10W). That means Dell had to account for additional cooling and power delivery, but in return, you get server-class performance without a server.
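The card-level figures quoted in this article can be tied together with a little arithmetic. The sketch below is illustrative only: the `CardSpec` class and its field names are made up for this example, the inputs are the numbers quoted in the article, and dividing peak TOPS by the thermal design power gives a nominal ceiling, not a measured efficiency.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CardSpec:
    """Hypothetical container for the figures quoted in the article."""
    socs: int
    cores_per_soc: int
    memory_gb_per_soc: int
    peak_tops: float
    tdp_watts: float

    @property
    def total_cores(self) -> int:
        return self.socs * self.cores_per_soc

    @property
    def total_memory_gb(self) -> int:
        return self.socs * self.memory_gb_per_soc

    @property
    def tops_per_watt(self) -> float:
        # Nominal ratio of peak TOPS to TDP; real efficiency depends on workload.
        return self.peak_tops / self.tdp_watts


AI_100_CARD = CardSpec(socs=2, cores_per_soc=16, memory_gb_per_soc=32,
                       peak_tops=450, tdp_watts=75)

if __name__ == "__main__":
    print(f"AI cores: {AI_100_CARD.total_cores}")
    print(f"Memory: {AI_100_CARD.total_memory_gb} GB")
    print(f"Nominal peak TOPS per watt: {AI_100_CARD.tops_per_watt:.1f}")
```

The two per-chip figures add up to the 32 cores and 64GB quoted earlier, and the nominal ratio works out to about 6 TOPS per watt at peak.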

Apple’s M4 and Intel Lunar Lake are both fabbed on advanced 3nm TSMC nodes, while Snapdragon X Elite and AMD Ryzen AI are built on 4nm. Qualcomm’s 7nm tech may be older, but it’s optimized for efficiency under sustained AI load.

Sure, it won’t render a Pixar movie, but it will run Llama 4 Scout locally… and that’s arguably a more impressive flex nowadays.

What does this all mean for workstation laptops?

The arrival of discrete NPUs in laptops like the Pro Max Plus marks a major inflection point in mobile computing. Michael Dell summed it up during the opening keynote of Dell Technologies World 2025: “Personal productivity is being reinvented by AI. We’re ready.”

Historically, workstation-class machines have revolved around GPUs, but GPUs are general-purpose compute units that weren’t purpose-built for deep learning inference. With the Pro Max Plus, it’s clear Dell isn’t just ready: It’s pushing the workstation category into uncharted territory.

Dedicated NPUs, especially ones with large memory pools and optimized interconnects, change the game. They’re smaller, more power-efficient, and deliver far more performance per watt for AI-specific tasks.

For professionals building and deploying AI models, this unlocks use cases previously restricted to datacenter servers or cloud instances.

Expect more vendors to follow suit. We’re already seeing Copilot+ PCs push integrated NPU performance, but Dell’s move hints at a future where premium workstations could offer GPU, NPU, or hybrid configurations.

If you're building the future of AI, your next workstation might not have a GPU. And with laptops like this, it might not need one.
