If parsing and validating the request significantly contributes to the processing time, there might be room to optimize your FastAPI REST API. The key is to use directly Starlette at one or two spots and to leverage some of Pydantic’s magic to accelerate validation.

Part of FastAPI’s merit is how well it leverages other tools to serve its purpose. FastAPI adds the layers it needs on top of them to deliver all the niceties that make the framework so popular these days. Of course, one of them is right in the name: It’s fast. Such speed comes to a great extent from Starlette, a minimal web framework used by FastAPI under the hood and Pydantic, used for data validation, serialization, etc.

It turns out that sometimes we can tweak those tools directly (even inside of a FastAPI application) in order to make things go even faster. I will show a few pretty simple tricks to speed up your app without adding much complexity, provided we can compromise a bit of FastAPI’s ergonomics in return.

Most of what you’re about to read I learnt in conversation with Marcelo Trylesinski at Europython 2024. He’s deeply involved with all above mentioned projects and generously took some time to code and debug stuff with me.

Thank you, Marcelo! 🧉

Let’s create a little FastAPI app to demonstrate the optimizations. Our fake app will receive a list of items, each item having a name and a price. For the sake of just doing some computation with the request content, our “microservice” will re-calculate the price for each item and return the list with new prices (just random changes).

Importantly, we want to validate both the input and the output data. This is one of the niceties of FastAPI: By defining response_model and passing the type of the request argument, FastAPI will know what we want and will use them automatically.

I will repeat my self a bit in the definitions of each app. The idea is to have self-contained code in each case that will be written to a file to be run from there in the command line.

We launch the app in the background with

so that we can timeit from here:

That’s our baseline.

We can do better.

We will read the bytes directly from the request body and build the Pydantic model ourselves. The reason for that is that Pydantic can directly parse the bytes into a Pydantic model, thus skipping the deserialization into a Python dict first. We will also let Pydantic serialize the model and wrap it as a Starlette’s response.

We cut around 10ms – not bad!

But we can still do better.

This solution I’d argue is a bit more involved, so you might really first make sure that you really need/want to optimize further. In any case, I think the complexity is not that much higher, so it might be definitely worth trying it.

We will drop the BaseModel from Pydantic (which is what gives us the data validation under the hood). Instead we’ll use TypedDict and Pydantic’s TypeAdapter.

Fasten your seatbelt! 🚀

We cut the response more or less by half! 🤯

I think that’s pretty impressive.

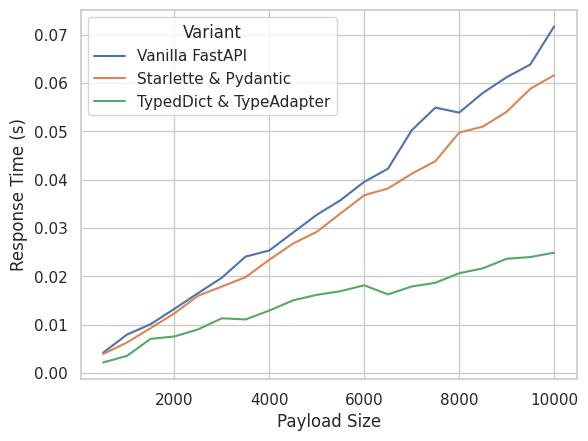

Here’s the global comparison of response times:

| 0 | Vanilla FastAPI | 0.070603 | 0.075128 |

| 1 | Starlette & Pydantic | 0.060742 | 0.066975 |

| 2 | TypedDict & TypeAdapter | 0.023436 | 0.026576 |

Fair question. I presented here a simplified, toy example of the REST API that I actually had to run production.

The real-world use case included a lookup step on a Sqlite database which took around 30/40ms. So the speed up I just showed can be equivalent in time to skipping the lookup altogether!

The reason why that’s big deal is that the real-world microservice was supposed to respond under 100ms (P99), thus cutting 50ms is saving ~50% of our response time “budget”.

Going back to the initial statement: These optimizations might not make sense if your processing time does not depend on the payload size (for example, because the payloads in your application are so small that you cannot squeeze much performance out of it).

To demonstrate that, we can run a quick experiment to compare the performance of the different variants of the app that we presented above. We will simply measure the mean response time as a function of the payload size.

What does that tell us?

We see that the Starlette & Pydantic optimization will reduce the processing time only by more or less a fix amount (at least for the tested range). So the larger the input, the less impact of the optimization, i.e. the less it will pay off to refactor the code.

On the other hand, the version TypedDict & TypeAdapter has really a different, better scaling relationship. This means that such optimization will always pay off, since the larger payload, the larger absolute time save.

Having said that, what I presented should simply be taken into account as an heuristic to search for optimizations in your code (only if you really need them!). You have to benchmark your code to decide if any of this makes sense for your use case.

Unfortunately, by stripping away the custom type hints and using the plain classes Request (from Starlette) and TypedDict+TypeAdapter, we loose the information that FastAPI uses to generate the automatic documentation based on the openapi spec (do notice though that the data validation still works!). It will be up to you to decide if you can live with that and/or the performance gain is worth it.

Here’s how this looks like (on the right is the version with all the optimizations):

/Fin

Any bugs, questions, comments, suggestions? Ping me on twitter or drop me an e-mail (fabridamicelli at gmail).

Share this article on your favourite platform:

.png)