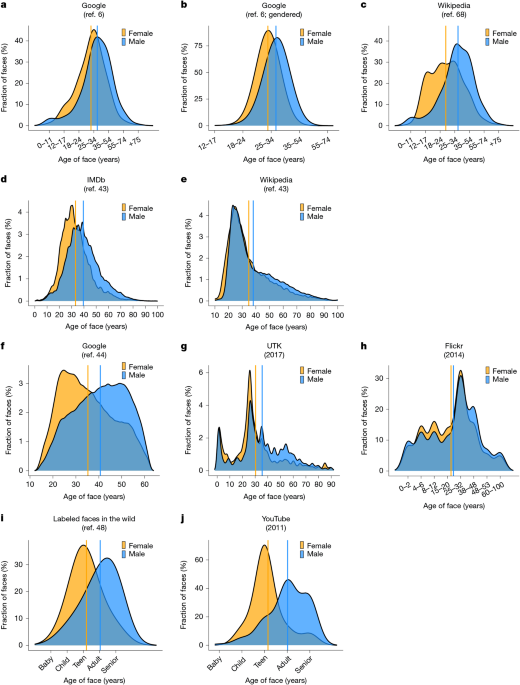

If you're an engineering manager, there's a good chance you've been handed a GitHub dashboard and told to measure performance with it. Maybe you've tried to make sense of PRs merged, commits pushed, or even lines of code changed. Maybe you've even been asked to rank your team off the back of it.

But here's the uncomfortable truth:

Most GitHub metrics don't tell you what you think they do.

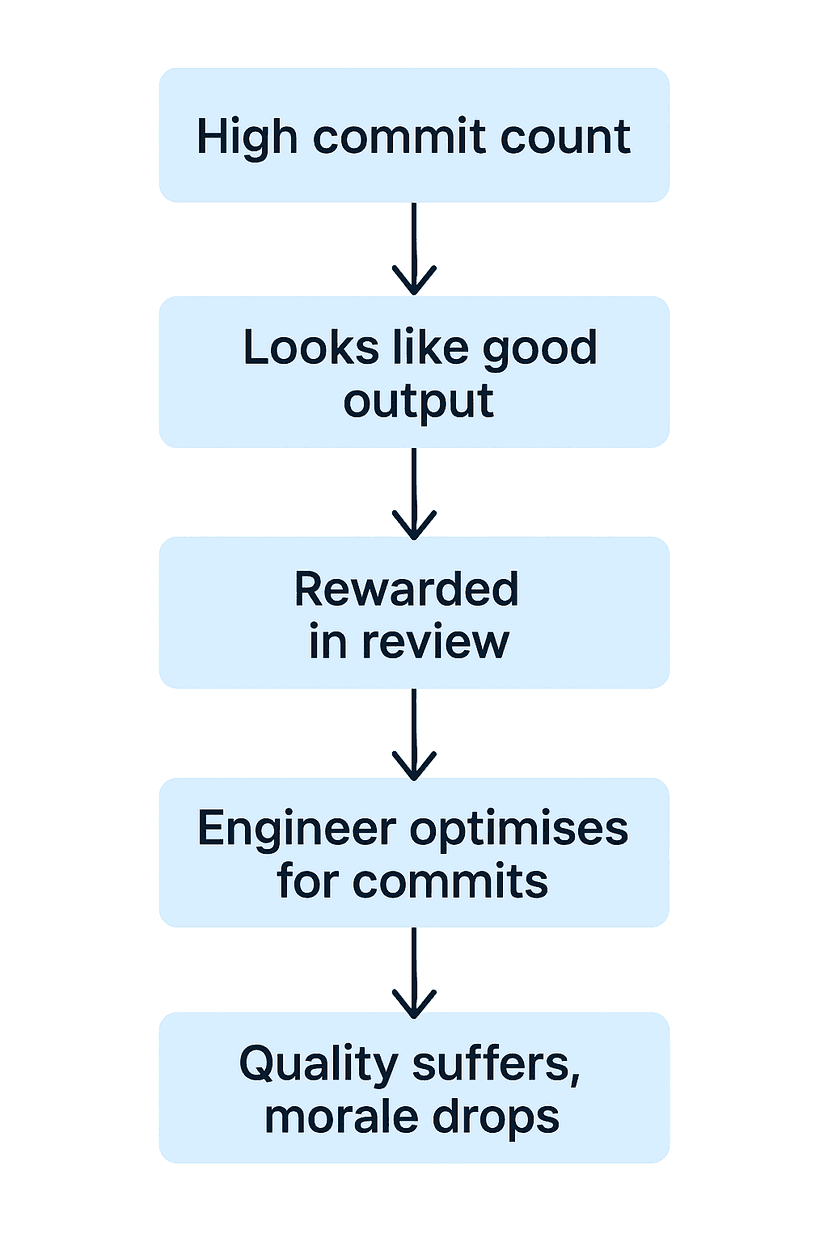

Take commit count. A high number of commits might mean someone's productive. It could also mean they're splitting a single change into 20 commits. Or running a commit bot. Or, in one real-world case:

"Commit counts is one KPI… So I wrote a git committor that commits all changed files individually. My commits are soaring." - reddit user etrakeloompa

This is the problem: volume is easy to measure. Value is not. And so we gravitate toward what's measurable.

But software engineering is full of invisible work: architectural discussions, mentoring, investigations into flaky tests, de-risking future features. These rarely show up in GitHub, even though they're critical for delivery.

In fact, the most impactful engineers often appear less active in GitHub. Why? Because they spend more time thinking, aligning, unblocking others, and making systems simpler, not just writing more code.

Lines of code (LoC) measure how much was typed - not whether it was good, maintainable, or necessary. Worse, LoC punishes deletions, even though simplification is one of the highest forms of engineering maturity.

A 2020 study from Microsoft Research found:

"More code typically increases complexity, maintenance burden, and bug surface… Metrics that reward verbosity create perverse incentives." - Microsoft Research: Productivity in the Modern Era

If you're rewarding LoC, you're rewarding the opposite of refactoring, abstraction, and careful design. You're rewarding churn.
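To make that incentive concrete, here is a toy sketch with invented numbers. The change names and line counts are hypothetical, not taken from any real repository; it only shows that ranking work by net lines added puts a simplifying refactor at the bottom.

```python
# Hypothetical changes with made-up line counts, for illustration only.
changes = [
    {"name": "copy-paste a new report", "added": 400, "deleted": 0},
    {"name": "small bug fix", "added": 12, "deleted": 5},
    {"name": "refactor: remove duplicated parsers", "added": 60, "deleted": 350},
]


def net_loc(change):
    """Net lines of code: lines added minus lines deleted."""
    return change["added"] - change["deleted"]


# Rank changes the way a naive LoC leaderboard would: biggest net addition first.
ranked = sorted(changes, key=net_loc, reverse=True)

for change in ranked:
    print(f"{net_loc(change):>5}  {change['name']}")
```

The refactor lands last with a negative score, even though it is plausibly the change that leaves the codebase in the best shape.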

DORA metrics (like deployment frequency or lead time for changes) are a huge step up from raw GitHub metrics. They're team-level, tied to outcomes, and useful for understanding systemic performance.

But they're also blunt instruments.

DORA doesn't tell you if a team is dealing with unclear product direction. Or tech debt that leadership won't prioritise. Or that your only staff engineer is spending 60% of their time doing support handovers because no one else knows the legacy monolith.

These are the things that actually drag on velocity. But they're invisible unless you zoom in, talk to people, and combine metrics with narrative.

The question isn't "Are metrics bad?" - it's "What job are we hiring this metric to do?"

Good metrics do three things:

- Inform, not mislead - they should trigger good questions, not be used to justify bad decisions.

- Correlate, not define - they should be viewed alongside context, not treated as proof.

- Evolve - they should change as your team and product mature.

For example, a spike in open PRs might signal review overload. A drop in deploy frequency might indicate a testing bottleneck. But both require qualitative investigation before you draw conclusions.

Signals that tend to prompt better questions include:

- Time in review vs time in progress - Where's the real bottleneck?

- PR review load by person - Are you burning out your most senior people?

- Revert rate - Are we sacrificing quality for speed?

- Cross-functional wait time - How often is a team blocked on design, data, or infra?

None of these live in GitHub alone - but all of them affect delivery.
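As a rough sketch of how the first three signals could be derived, assume pull request records have already been exported into a list of dictionaries. The field names (`first_commit_at`, `opened_at`, `merged_at`, `reviewers`, `is_revert`) and the sample data are hypothetical and are not the schema of any GitHub API. Each signal is a simple aggregate meant to start a conversation, not a score.

```python
from collections import Counter
from datetime import datetime
from statistics import median

# Hypothetical export of merged pull requests; field names and values are
# assumptions for illustration, not a real GitHub API schema.
prs = [
    {"first_commit_at": datetime(2024, 5, 1, 9), "opened_at": datetime(2024, 5, 1, 17),
     "merged_at": datetime(2024, 5, 3, 17), "reviewers": ["asha"], "is_revert": False},
    {"first_commit_at": datetime(2024, 5, 2, 9), "opened_at": datetime(2024, 5, 2, 13),
     "merged_at": datetime(2024, 5, 2, 15), "reviewers": ["asha", "ben"], "is_revert": False},
    {"first_commit_at": datetime(2024, 5, 3, 9), "opened_at": datetime(2024, 5, 3, 10),
     "merged_at": datetime(2024, 5, 3, 11), "reviewers": ["asha"], "is_revert": True},
]


def hours(delta):
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600


def summarize(prs):
    """Aggregate team-level review signals from a list of PR records."""
    if not prs:
        return {}
    # Time from first commit to opening the PR vs time from opening to merge.
    in_progress = [hours(p["opened_at"] - p["first_commit_at"]) for p in prs]
    in_review = [hours(p["merged_at"] - p["opened_at"]) for p in prs]
    # How many reviews each person was asked to carry.
    review_load = Counter(r for p in prs for r in p["reviewers"])
    # Share of merged PRs that exist only to undo an earlier change.
    revert_rate = sum(p["is_revert"] for p in prs) / len(prs)
    return {
        "median_hours_in_progress": median(in_progress),
        "median_hours_in_review": median(in_review),
        "review_load": dict(review_load),
        "revert_rate": revert_rate,
    }


summary = summarize(prs)
print(summary)
```

Medians are used rather than means so that one long-running PR does not dominate the picture. Cross-functional wait time is left out on purpose: it usually lives in tickets and conversations rather than in pull request data.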

There is one leading indicator that consistently predicts engineering effectiveness: developer satisfaction.

GitHub themselves found:

"The best indicator of productivity isn't found in commit counts or deployment metrics… it's in developer satisfaction." - GitHub Research

This isn't just feel-good fluff. A team that feels empowered, aligned, and supported will:

- Move faster with fewer blockers

- Deliver higher quality

- Stick around longer

You can't ship a feature with someone who just rage-quit. And you can't measure their impact with commit counts when they're busy coaching a junior through a tough problem.

The instinct to measure is natural. But the moment a metric becomes a performance proxy, it stops being useful.

What you need is a culture of visibility, trust, and honest reflection. One where GitHub metrics are part of the conversation - not the entire conversation.

Ask yourself:

- Do engineers understand how their work connects to customer outcomes?

- Do they feel safe raising technical risks early?

- Are you rewarding people for unblocking others - or just for the most visible wins?

- Can you trace big architectural decisions to actual business impact?

If you're answering "yes" to these - you don't need metrics to prove it. And if you're answering "no" - no metric will save you.
