A while ago I saw a person in the comments on Scott Alexander's blog arguing that a superintelligent AI would not be able to do anything too weird and that "intelligence is not magic", hence it's Business As Usual.

Of course, in a purely technical sense, he's right. No matter how intelligent you are, you cannot override fundamental laws of physics. But people (myself included) have a fairly low threshold for what counts as "magic," to the point where other humans (not even AI) can surpass that threshold.

Example 1: Trevor Rainbolt. There is an 8-minute-long video where he does seemingly impossible things, such as correctly guessing that a photo of nothing but literal blue sky was taken in Indonesia or guessing Jordan based only on pavement. He can also correctly identify the country after looking at a photo for 0.1 seconds.

Example 2: Joaquín "El Chapo" Guzmán. He ran a drug empire while being imprisoned. Tell this to anyone who still believes that "boxing" a superintelligent AI is a good idea.

Example 3: Stephen Wiltshire. He made a nineteen-foot-long drawing of New York City after a 20-minute helicopter flight, and he got the number of windows and floors of all the buildings correct.

Example 4: Magnus Carlsen. Being good at chess is one thing. Being able to play 3 games against 3 people while blindfolded is a different thing. And he has also done it with 10 people. He can also memorize the positions of all the pieces on the board in 2 seconds (to be fair, the pieces weren't arranged randomly; it was a snapshot from a famous game).

Example 5: Chris Voss, an FBI negotiator. This is a much less well-known example; I actually learned about it from o3. Chris Voss convinced two armed bank robbers to surrender (this isn't the only example in his career, of course) using only a phone: no face-to-face interaction, so no opportunity to read facial expressions. Imagine that you have to convince two dudes with guns who are about to get homicidal to just...chill. Using only a phone. And you succeed.

So if you think, "Pfft, what, AI will convince me to go from hostile to cooperative within minutes, after a little chit-chat?" well, yes, it just might.

Examples 2 and 5 are especially relevant in the context of controlling AI. So if you are surprised by these examples, you will be even more surprised by what a superintelligent AI can do.

Intelligence is not magic. But if even "natural" intelligence can get you this far, imagine what an AGI can do.

One person having an extreme level of a skill is already on the boundary of "business as usual". Having all of them at the same time would be a completely different game. (And on top of that, we can get the ability to do N of those things at the same time, or to do one of them N times faster, simply by using N times more resources.) So when you imagine how powerful AI can get, you only have two options: either you believe that there is a specific human ability that the AI can never achieve, or you accept that the AI can get insanely powerful. As an intuition pump, imagine it as a superhero ability: the protagonist can copy the abilities of any person he sees, and can use any number of those abilities in parallel. How powerful would the character get?

It seems that this model requires a lot of argumentation that is absent from the post and only implicit in your comment. Why should I imagine that AGI would have that ability? Are there any examples of very smart humans who simultaneously acquire multiple seemingly magical abilities? If so, and if AGI scales well past human level, it would certainly be quite dangerous. But that seems to assume most of the conclusion. Explicitly, in the current paradigm this is mostly about training data, though I suppose that with sufficient integration that data will eventually become available. Anyway, I personally have little doubt that it is possible in principle to build very dangerous AGI. The question is really about the dynamics: how long will it take, how much will it cost, how centralized and agentic will it be, how long are the tails?

It occurs to me that acquiring a few of these "magical" abilities is actually not super useful. I can replicate the helicopter one with a camera and the chess one by consulting an engine. Even if I could do those things secretly, i.e. cheat in chess tournaments, I would not suddenly become god emperor or anything. It actually wouldn't help me much. 5 isn't that impressive without further context.
And I've already said that the El Chapo thing is probably more about preexisting connections and resources than intelligence. So I'm cautious about updating on these arguments.

> Why should I imagine that AGI would have that ability?

Modern LLMs are already like that. They have expert or at least above-average knowledge in many domains simultaneously. They may not have developed "magical" abilities yet, but "AI that has lots of knowledge from a vast number of different domains" is something that we already see. So I think "AI that has more than one magical ability" is a pretty straightforward extrapolation.

Btw, I think it's possible that even before AGI, LLMs will have at least 2 "magical" abilities. They're getting better at GeoGuessr, so we could have a Rainbolt-level LLM in a few years; this seems like the most likely first "magical" ability IMO.

What makes you think that those people were able to do those things because of high levels of intelligence? It seems to me that in most cases, the reported feat is probably driven by some capability/context combination that stretches the definition of intelligence to varying degrees. For instance, I would guess that El Chapo pulled that off because he already had a lot of connections and money when he got to prison. The other examples seem to demonstrate that it is possible for a person to develop impressive capabilities in a restricted domain given enough experience.

That is exactly what we are worried about, though. The concern is not that AGI will be intelligent (that is in the name), but that it can and probably will develop dangerous capabilities. "Intelligence" is the word we use to describe it, since it is associated with the ability to gain capabilities, but even if the AGI is sometimes kind of brute-force or dumb, that does not mean it cannot also have capabilities dangerous enough to beat us.

The post is an intuition pump for the idea that intelligence enables capabilities that look like "magic."
It seems to me that all it really demonstrates is that some people have capabilities that look like magic, within domains where they are highly specialized to succeed. The only example that seems particularly dangerous (El Chapo) does not seem convincingly connected to intelligence. I am also not sure what the chess example is supposed to prove: we already have chess engines that can defeat multiple people at once blindfolded, including (presumably) Magnus Carlsen. Are those chess engines smarter than Magnus Carlsen? No. This kind of nitpick is important precisely because the argument is so vague and intuitive. It's pushing on a fuzzy abstraction that intelligence is dangerous in a way that seems convincing only if you've already accepted a certain model of intelligence. The detailed arguments don't seem to work. The conclusion that AGI may be able to do things that seem like magic to us is probably right, but this post does not hold up to scrutiny as an intuition pump.

> The only example that seems particularly dangerous (El Chapo) does not seem convincingly connected to intelligence

I'd say "being able to navigate a highly complex network of agents, a lot of which are adversaries" counts as "intelligence". Well, one form of intelligence, at least.

This point suggests alternative models for risks and opportunities from "AI". If deep learning applied to various narrow problems is a new source of various superhuman capabilities, that has a lot of implications for the future of the world, setting "AGI" aside.

Perhaps you think of intelligence as just raw IQ. I count persuasion as a part of intelligence. After all, if someone can't put two coherent sentences together, they won't be very persuasive. Obviously, being able to solve math/logic problems and being persuasive are very different things, but again, I count both as "intelligence". Of course, El Chapo had money (to bribe prison guards), which a "boxed" AGI won't have; that much I agree with.
I disagree that it will make a big difference.

I intentionally avoided, as much as possible, the implication that intelligence is "only" raw IQ. But if intelligence is not on some kind of real-valued scale, what does any part of this post mean?

I think that high levels of intelligence make it easier to develop capabilities similar to the ones discussed in examples 1 and 3-5, up to a point. (I agree that El Chapo should be discounted due to the porosity of Mexican prisons.) A being with an inherently high level of intelligence will be able to gather more information from events in its life and process that information more quickly, resulting in a faster rate of learning. Hence, a superintelligence will acquire magic-like capabilities more quickly. Furthermore, the capability ceiling of a superintelligence will be higher than that of a human, so it will acquire magic-like capabilities that humans could never perform.

> Example 1: Trevor Rainbolt. There is an 8-minute-long video where he does seemingly impossible things, such as correctly guessing that a photo of nothing but literal blue sky was taken in Indonesia or guessing Jordan based only on pavement. He can also correctly identify the country after looking at a photo for 0.1 seconds.

To be clear, that video is heavily cherry-picked. His second channel is more representative of his true skill: https://www.youtube.com/@rainbolttwo/videos

> Example 4: Magnus Carlsen. Being good at chess is one thing. Being able to play 3 games against 3 people while blindfolded is a different thing.

It isn't. To be good at chess, your brain needs to learn to represent game states in your head. To be good, you need to read ahead many moves. That's the same skill you need to play blindfolded. People who have never tried to play blindfolded, or who are not good at chess, overestimate the amount of skill it takes. Some people who try to play blindfolded are surprised at how easy it is.
I would expect that explaining a complex business-politics situation to the current o3-Pro and asking it, "from a game-theoretic perspective," what smart and maybe unconventional moves I can make would already let you make political moves that are much stronger than what many people currently make. Most of the time, humans are not really trying to use intelligence to make optimal, well-thought-out choices. You already get a huge leg up by actually trying to use intelligence to optimize all the choices you make.

Superhuman forecasting could be the next one, especially once LLMs become good at finding relevant news articles in real time.

Identifying book authors from a single paragraph with 99% accuracy seems like something LLMs will be able to do (or maybe even already can), though I can't find a benchmark for that.

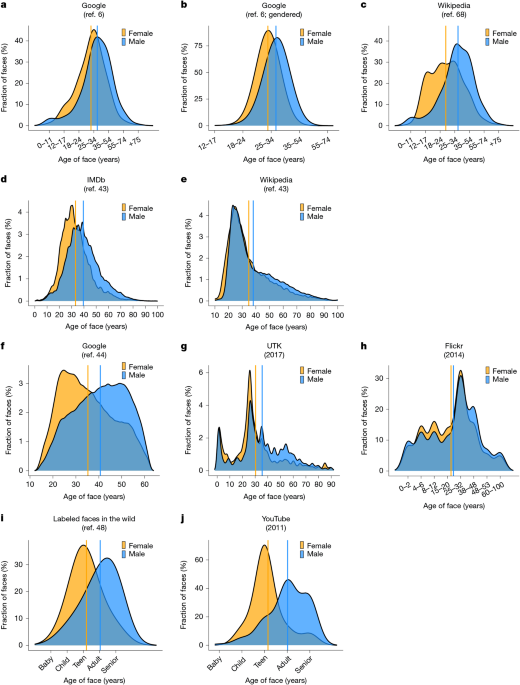

Accurately guessing age from a short voice sample is something that machine learning algorithms can do, so with enough training data, LLMs could probably do it too.
