The Model Context Protocol (MCP) has become the standard for AI agent tool connectivity, adopted by major platforms like OpenAI, Microsoft, and Google. However, it hasn't solved the core challenges of tool hallucination and exponential token costs in production AI systems.

The core problem remains: when you expose more tools to an AI agent, performance degrades. Your sales AI agent might struggle with simple tasks like "get all leads" while burning through expensive tokens processing irrelevant tool descriptions. This is where the "Less is More" principle becomes crucial for MCP design: minimize context window usage while maximizing relevant information delivery.

The Problem

When designing tools for AI agents, context window space is your most valuable resource. Every tool you expose requires:

- A descriptive name and purpose statement

- A detailed schema of all parameters (required and optional)

- Examples and usage constraints

- Error handling documentation
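To make the per-tool cost concrete, here is a minimal sketch of what one tool definition might look like and how much context a catalog of them could take. The `fetch_leads` definition, its field values, and the 4-characters-per-token heuristic are all assumptions for illustration; a real measurement would use the model's own tokenizer.

```python
import json

# Hypothetical tool definition shaped like an MCP tool entry
# (name, description, JSON Schema for inputs). All values are made up.
FETCH_LEADS_TOOL = {
    "name": "fetch_leads",
    "description": (
        "Fetch leads from the CRM. Returns at most `limit` leads per page. "
        "Example: fetch_leads(status='open', limit=50). "
        "Errors: fails if `cursor` has expired."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "status": {"type": "string", "enum": ["open", "won", "lost"]},
            "limit": {"type": "integer", "minimum": 1, "maximum": 100},
            "cursor": {"type": "string", "description": "Pagination cursor"},
        },
        "required": [],
    },
}


def estimate_tokens(tool: dict) -> int:
    """Very rough estimate: about 4 characters per token (not a tokenizer)."""
    return len(json.dumps(tool)) // 4


def catalog_share(tools: list, context_window: int) -> float:
    """Fraction of the context window taken by tool definitions alone."""
    return sum(estimate_tokens(t) for t in tools) / context_window


# Example: 18 similar tools against an assumed 200K-token window.
share = catalog_share([FETCH_LEADS_TOOL] * 18, context_window=200_000)
```

Real tool definitions are often much longer than this one, which is why the overhead adds up quickly as the catalog grows.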

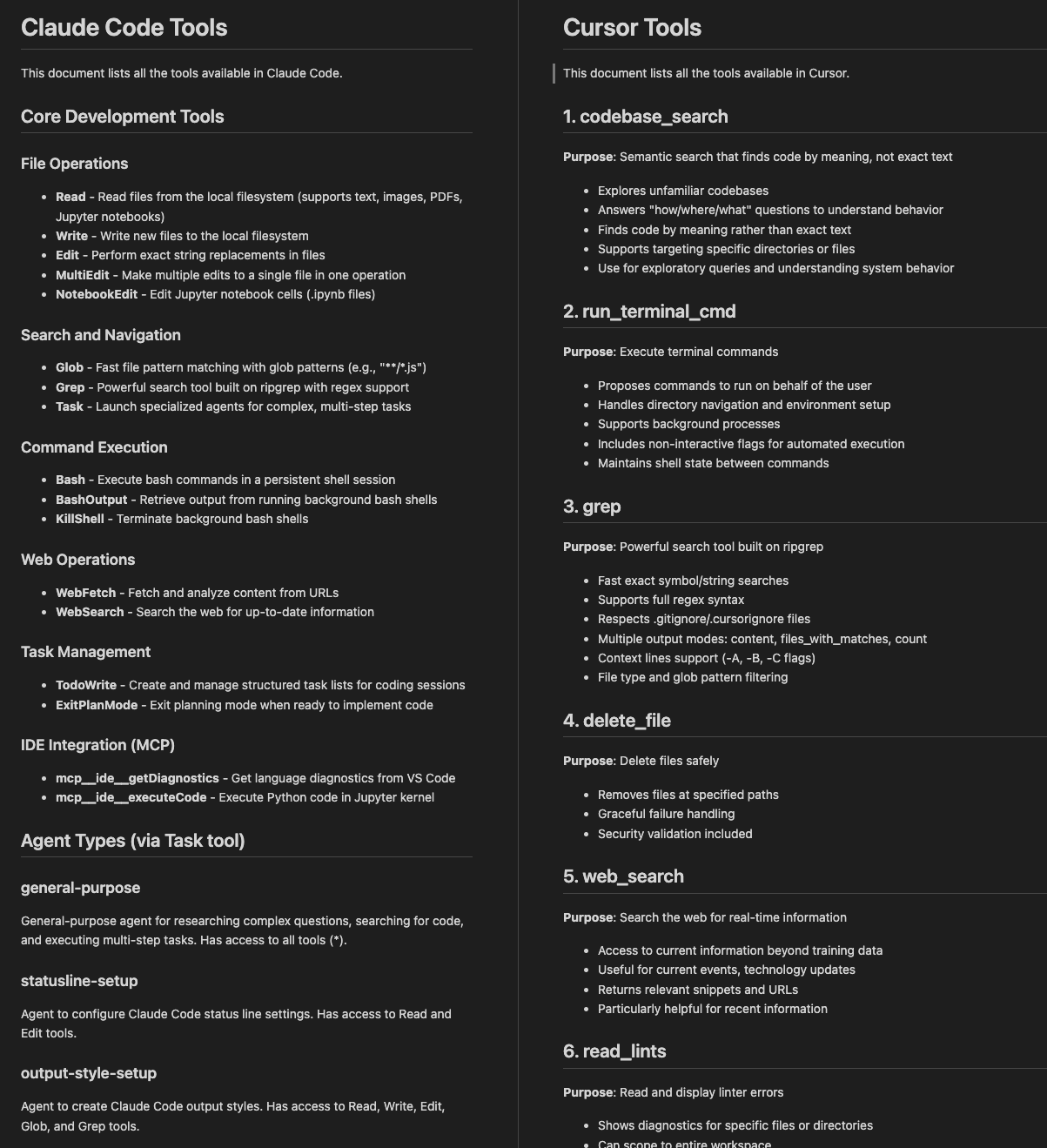

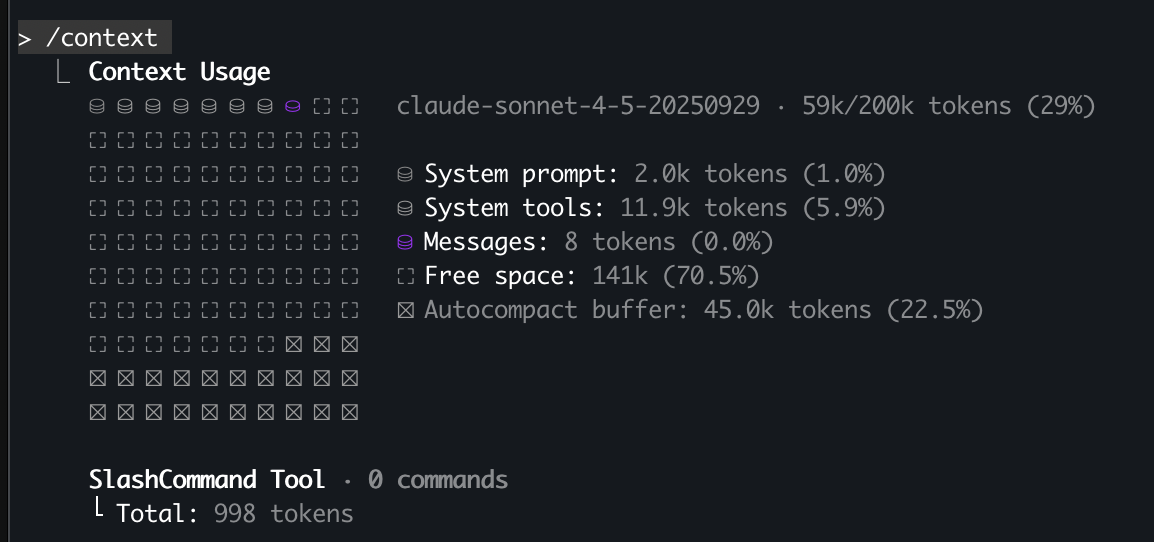

To illustrate this, we prompted Cursor and Claude Code: "list all the tools you have access to, write them to a file named tools.md". As of today (Oct 12, 2025), they expose 18 and 15 tools respectively to their coding AI agents (see the tools comparison). These tool definitions consume 5-7% of your context window before you even write your first prompt.

* Cursor and Claude Code already expose 18 and 15 tools to AI agents.

* Tool definitions consume 5.9% of context window before any user prompt in Claude Code.

The Solution

1. Semantic Search

The most straightforward approach involves building a semantic search layer on top of your tool catalog. Instead of exposing all tools upfront, the system dynamically retrieves relevant tools based on the user's query using vector similarity search.

Implementation Example:
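The original snippet is not reproduced here, so the following is only a minimal sketch of the idea. The tool catalog is hypothetical, and `embed` is a toy bag-of-words stand-in for a real embedding model; a production system would likely swap in learned embeddings and a vector store.

```python
import math
import re
from collections import Counter

# Hypothetical tool catalog: name -> description.
TOOLS = {
    "fetch_leads": "List or search sales leads in the CRM",
    "create_invoice": "Create a billing invoice for a customer",
    "send_slack_message": "Post a message to a Slack channel",
    "list_github_issues": "List issues in a GitHub repository",
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Index every tool once, up front, from its name and description.
INDEX = {
    name: embed(f"{name.replace('_', ' ')} {desc}") for name, desc in TOOLS.items()
}


def retrieve_tools(query: str, top_k: int = 2) -> list:
    """Return the names of the top_k tools most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda name: cosine(q, INDEX[name]), reverse=True)
    return ranked[:top_k]
```

Only the definitions of the retrieved tools would then be placed in the model's context, for example `retrieve_tools("get all leads", top_k=1)` for the sales query mentioned earlier.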

You can also index all available tools in a vector or graph database for fast retrieval, or build a RAG system on top of it.

Pros:

- Easy to implement and optimize; scales well with large tool catalogs

- Leverages existing vector database infrastructure; can incorporate user feedback to improve relevance

Cons:

- Search quality becomes a bottleneck, because semantic search is notoriously difficult to perfect

- May miss relevant tools due to embedding limitations; requires continuous tuning and evaluation

When to use: Best for scenarios with a large number of tools where each tool has a distinct, well-defined purpose.

2. Workflow-Based Design

This approach, championed by teams at Vercel and Speakeasy, focuses on building tools around complete user goals rather than individual API capabilities. Instead of exposing multiple granular tools, create single atomic operations that handle entire workflows internally.

Traditional API-Shaped Approach:
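As an illustration only, an API-shaped server might mirror each endpoint of a deployment API as its own tool. Every function name, status code, and ID format below is hypothetical, and the functions are in-memory stubs rather than real API calls.

```python
# Hypothetical deployment API mirrored one-to-one as tools. The agent has to
# call each tool in the right order and carry IDs between calls itself.
PROJECTS: dict = {}


def create_project(name: str) -> dict:
    project_id = f"prj_{len(PROJECTS) + 1}"
    PROJECTS[project_id] = {"name": name, "env": {}, "domain": None, "deployed": False}
    return {"status": 201, "project_id": project_id}


def add_environment_variables(project_id: str, env: dict) -> dict:
    PROJECTS[project_id]["env"].update(env)
    return {"status": 200}


def create_deployment(project_id: str) -> dict:
    PROJECTS[project_id]["deployed"] = True
    return {"status": 202, "deployment_id": f"dpl_{project_id}"}


def add_domain(project_id: str, domain: str) -> dict:
    PROJECTS[project_id]["domain"] = domain
    return {"status": 200}


# Four tool definitions sit in context, and a single deployment needs
# four separate round trips through the model.
API_SHAPED_TOOLS = [create_project, add_environment_variables, create_deployment, add_domain]
```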

Workflow-Based Alternative:
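A workflow-shaped server could instead expose one tool that covers the whole goal. This is a sketch under the same assumptions: `deploy_project` and its private helpers are hypothetical names, and the helpers are stubs where real API calls would go.

```python
from typing import Optional


# Private helpers: not registered as tools, so the model never sees them.
def _create_project(name: str) -> str:
    return f"prj_{name}"


def _set_env(project_id: str, env: dict) -> None:
    pass  # placeholder for a real API call


def _deploy(project_id: str) -> str:
    return f"https://{project_id}.example.com"


def _add_domain(project_id: str, domain: str) -> None:
    pass  # placeholder for a real API call


def deploy_project(name: str, env: Optional[dict] = None, domain: Optional[str] = None) -> str:
    """The only tool exposed: create, configure, and deploy in one call."""
    project_id = _create_project(name)
    if env:
        _set_env(project_id, env)
    url = _deploy(project_id)
    if domain:
        _add_domain(project_id, domain)
        url = f"https://{domain}"
    # Return a conversational summary rather than raw status codes.
    return f"Deployed '{name}'. It is live at {url}."
```

The model now sees a single definition and makes a single call, and the ordering of steps lives in ordinary code where it can be tested.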

The workflow-based tool handles the complete process internally and returns conversational updates instead of technical status codes. As Vercel's engineering team notes: "Think of MCP tools as tailored toolkits that help an AI achieve a particular task, not as API mirrors".

Pros:

- Significantly reduces token usage and call overhead; easier for models to understand and use correctly

- More reliable execution with fewer failure points; better user experience with conversational responses

Cons:

- Requires predefined workflow analysis

- Not suitable for exploratory or highly customizable operations

When to use: Perfect for well-defined, repeated workflows with predictable steps. Ideal for production tools serving specific use cases.

3. Code Mode

Cloudflare recently introduced "Code Mode" as a paradigm shift in how AI agents interact with tools. Instead of sequential tool calls, agents write complete programs that use available APIs, which then execute in secure sandboxes.

At Klavis, we've deployed similar concepts with our customers. We've seen CRM tools where AI made 50+ sequential calls to fetch_leads with pagination, resulting in 200K+ tokens consumed, multiple conversation rounds leading to hallucination, and degraded user experience with slow responses.

Code Mode Solution with MCP:

Instead of exposing individual tools such as fetch_leads and fetch_opportunity, we exposed a single execute_code tool:
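The exact definition is not shown here, so the following is a sketch of what such a tool's contract could look like. The description text, the `api_clients` parameter, and the handler are assumptions. Note in particular that Python's `exec` is not a sandbox; it stands in for whatever isolated runtime a real deployment would use.

```python
import contextlib
import io

# Hypothetical definition of the single tool exposed to the model.
EXECUTE_CODE_TOOL = {
    "name": "execute_code",
    "description": (
        "Run Python code in a sandbox. API clients are preloaded as globals. "
        "Print only the final result you want returned."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {"code": {"type": "string"}},
        "required": ["code"],
    },
}


def execute_code(code: str, api_clients: dict) -> str:
    """Run `code` and return whatever it printed.

    WARNING: exec() is NOT a sandbox. A real deployment needs process- or
    VM-level isolation; this function only illustrates the tool's contract.
    """
    buffer = io.StringIO()
    namespace = dict(api_clients)  # names the generated code may use
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)
    except Exception as exc:
        # Return the error as text so the model can read it and retry.
        return f"Error: {type(exc).__name__}: {exc}"
    return buffer.getvalue()
```

Only the printed output goes back into the conversation, so intermediate data such as raw API pages never consumes context.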

The LLM generates code and invokes the execute_code tool to run it in the secure sandbox:
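For the paginated leads case described above, the generated program might look roughly like this. `FakeCRM` is a stub standing in for a client the sandbox would preload, and its `fetch_leads` signature and response shape are assumptions.

```python
# Stub standing in for a CRM client the sandbox would preload (hypothetical).
class FakeCRM:
    def __init__(self, total: int = 230, page_size: int = 50):
        self._leads = [
            {"id": i, "status": "open" if i % 2 else "closed"} for i in range(total)
        ]
        self._page_size = page_size

    def fetch_leads(self, cursor: int = 0) -> dict:
        page = self._leads[cursor:cursor + self._page_size]
        nxt = cursor + self._page_size
        return {"leads": page, "next_cursor": nxt if nxt < len(self._leads) else None}


crm = FakeCRM()


# --- What the model might generate and pass to execute_code ---
def summarize_leads(client) -> dict:
    """Page through every lead inside the sandbox and keep only a summary."""
    leads, cursor = [], 0
    while cursor is not None:
        page = client.fetch_leads(cursor=cursor)
        leads.extend(page["leads"])
        cursor = page["next_cursor"]
    open_count = sum(1 for lead in leads if lead["status"] == "open")
    return {"total": len(leads), "open": open_count}


summary = summarize_leads(crm)
print(summary)  # only this small summary returns to the conversation
```

The pagination loop runs entirely in the sandbox, so what would have been dozens of sequential tool calls becomes a single call with a small result.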

Pros:

- Extremely powerful and flexible; handles complex data operations and reduces token usage for data-heavy operations

- LLMs are often better at writing code than at making tool calls

Cons:

- Security concerns (code execution, especially write operations)

- May use more tokens for simple operations

- Debugging failures is harder than with structured tool calls

When to use: Best for data processing, batch operations, complex workflows with many steps, or scenarios where the output is primarily for the user (not the LLM) to consume.

4. Progressive Discovery

This approach fundamentally changes how AI agents discover and access tools by guiding them through a logical discovery process, much like how a human would approach an unfamiliar system. This is the approach we've built into Strata.

When an AI agent needs to accomplish a task, it works through the following stages:

- discover_server_categories_or_actions: Based on user intent, the agent identifies which services are relevant (e.g., GitHub for code, Slack for messaging) and receives their categories (e.g., Repos, Issues, PRs for GitHub; Channels, Messages for Slack)

- get_category_actions: The agent selects relevant categories and receives available action names and descriptions, but not full schemas yet!

- get_action_details: Only when the agent selects a specific action does it receive the complete parameter schema

- execute_action: The agent performs the actual operation with validated parameters

Additional Support Tools:

We also include helper tools such as documentation search and authentication handling. Our evaluation against official MCP servers showed significant improvements, and we also ran human evaluations of the results across multi-app workflows.
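The four discovery stages can be sketched as plain functions over a nested catalog. This is an illustration, not Strata's implementation: the tool names come from the stages above, but the catalog contents, function signatures, and validation logic are assumptions.

```python
# Hypothetical catalog: server -> category -> action -> details.
CATALOG = {
    "github": {
        "Issues": {
            "create_issue": {
                "description": "Open a new issue",
                "schema": {"repo": "string", "title": "string", "body": "string"},
                "required": ["repo", "title"],
            },
            "list_issues": {
                "description": "List issues in a repository",
                "schema": {"repo": "string", "state": "string"},
                "required": ["repo"],
            },
        },
        "Repos": {},
    },
    "slack": {"Channels": {}, "Messages": {}},
}


def discover_server_categories_or_actions(servers: list) -> dict:
    """Stage 1: return only category names for the chosen servers."""
    return {s: list(CATALOG[s]) for s in servers}


def get_category_actions(server: str, category: str) -> dict:
    """Stage 2: return action names and descriptions, without schemas."""
    return {name: a["description"] for name, a in CATALOG[server][category].items()}


def get_action_details(server: str, category: str, action: str) -> dict:
    """Stage 3: return the full parameter schema for a single action."""
    return CATALOG[server][category][action]


def execute_action(server: str, category: str, action: str, params: dict) -> dict:
    """Stage 4: validate parameters against the schema, then run (stubbed)."""
    details = get_action_details(server, category, action)
    missing = [p for p in details["required"] if p not in params]
    unknown = [p for p in params if p not in details["schema"]]
    if missing or unknown:
        return {"ok": False, "missing": missing, "unknown": unknown}
    return {"ok": True, "action": action, "params": params}
```

Whatever the catalog size, the model's initial context holds only these four definitions; each full schema is loaded on demand for the one action the agent has chosen.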

Pros:

- Scales to any number of tools without context overflow; dramatically reduces initial token usage, decision paralysis, and hallucination

- Works seamlessly with any external MCP server (official, community, or custom implementations)

- Leverages the model's natural reasoning abilities rather than searching

Cons:

- Adds slight latency compared to direct tool access.

- For simple operations that can be done with a single tool call, it may not be worth the overhead.

When to use: Essential for platforms with a large number of tools, multi-app workflows, or when building AI agents that need access to diverse capabilities without knowing exactly what users will request. Particularly valuable for B2B SaaS platforms where different customers have different integrations enabled.

Key Takeaways

The "Less is More" principle isn't about limiting what AI agents can do; it's about strategically managing context to maximize what they can do reliably.

At Klavis AI, we're actively building and evaluating hybrid architectures that combine these approaches. Our mission is to take your AI agent from knowing things to doing things.

Ready to Build Better AI Agents?

Try Strata's progressive discovery for free: Sign up at Klavis AI

Have questions or want to discuss your specific use case? Book a call with us.

Explore our open-source implementations on GitHub.
