I had a stressful experience a few weeks ago while talking to Claude about taxes. Claude told me about a form I was supposed to have filed last year (Form 5472), which carries a late fine of $25,000!

There was panic for 10 minutes and I was about to email my accountant when Claude noted, deep into our back and forth, that the form doesn’t apply to US tax residents. Luckily, I'm a tax resident.

I clarified that to Claude, who hit me with a classic:

Ah – this is crucial information! ...

This follows a pattern I’ve noticed over and over in my conversations with AI:

- I ask AI a question

- AI gives me a confident answer that happens not to apply to me

- I give more context to the AI

- AI goes "This changes everything" and backtracks on what it had said

I bet you’ve had similar interactions too. The tricky part is identifying when the AI's answers actually apply to you. I often can’t tell.

AI today has an overconfidence issue. Models like to make confident statements even when they lack the necessary context. Instead of asking more questions, they make assumptions, which they often forget to state. [1]

This tendency translates into friction for us, the end users. It forces us to figure out whether to trust what AI says, whether it has all the information it needs, and so on. This is what I'm calling the "burden of steering".

It's hard not to draw comparisons with human experts here. In contrast to AI, experts seem much better at pulling information from us, especially in the context of providing a service.

Take going to the doctor, for example. It does not require any medical expertise on our part as patients. We show up; the doctors and nurses take the lead. They ask us questions, they tell us when to lie down, they know where to examine us, they know what exams to order, and so on. They bear the burden of steering.

The same is true for virtually every kind of expertise we contract as private citizens. Teachers, lawyers, financial advisors, and accountants all take on the responsibility of asking for the information they need. [2] [3]

Today we have AIs that sound like experts and know much of what experts know, but they don’t always behave like experts. They make reckless assumptions where experts would instead ask for clarification. This is a dangerous mismatch: expert knowledge without expert conduct. [4]

This mismatch didn't worry me back in 2022, when ChatGPT was still a toy in the hands of the tech-literate. But that's no longer the case. Earlier this year, ChatGPT reached 400 million weekly active users. It already has mass adoption.

How many millions of people are already using AI for medical advice? Or to decide whether to divorce their spouse? What can happen when their AI assumes too much?

Thanks to Abhi for the feedback.

Notes

- To say that LLMs like to make assumptions is somewhat misleading. The nature of transformers is that they don’t hold crisp beliefs about anything until they generate text (tokens) that confirms those beliefs. To use my tax anecdote as an example, Claude never held that “Felipe is a tax non-resident” in any part of its hidden state. But it still generated an answer that assumed this to be true.

- I had a laugh imagining my accountant telling me that they had actually filed Form 5472 on my behalf, because I had forgotten to mention I was a tax resident.

-

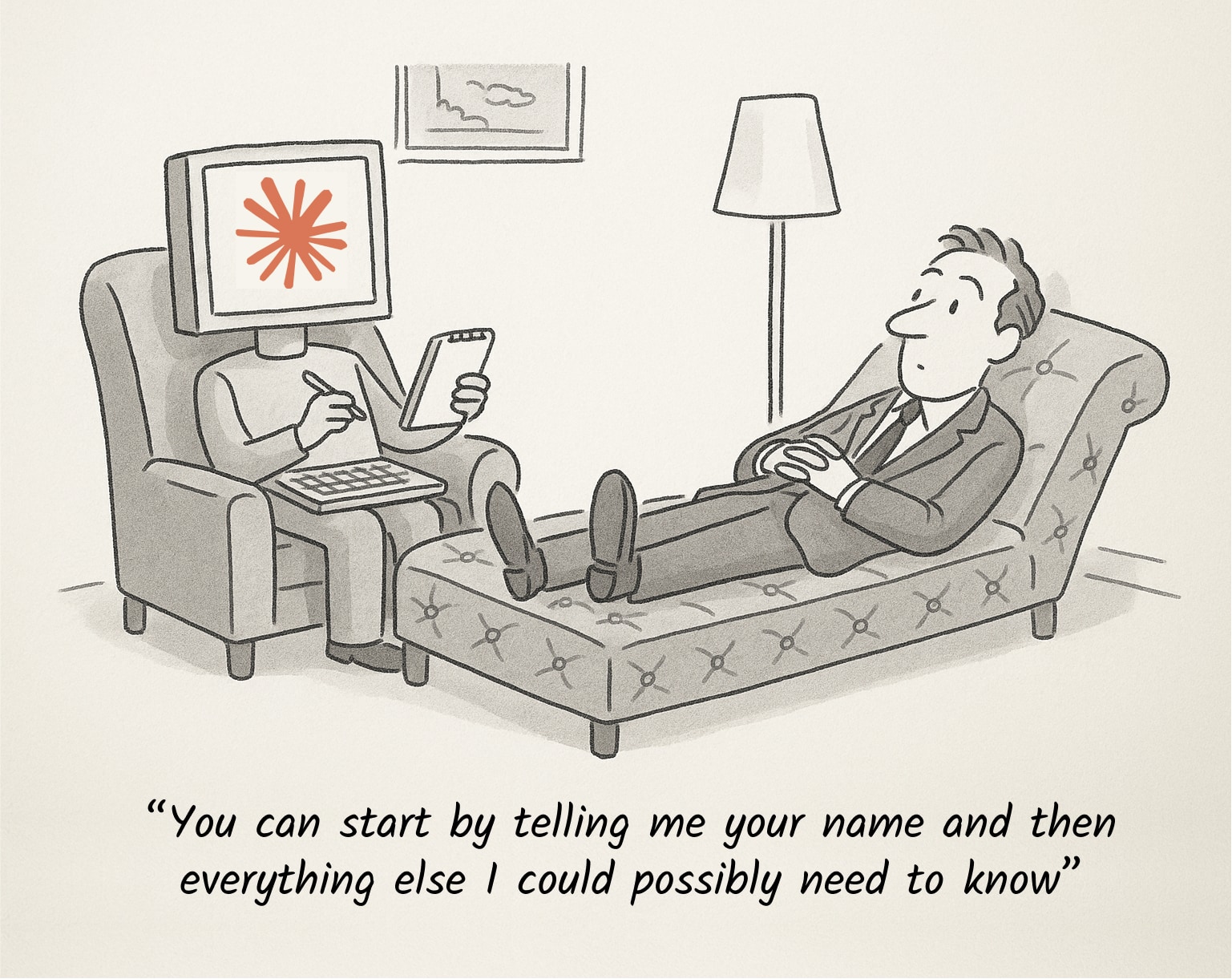

It's interesting to note that some types of human expertise are entirely about asking questions. For example, talk therapy. In some approaches, patients are the only ones doing the talking. Therapists are supposed to ask questions that guide patients towards their own insights. These therapists may provide no answers at all; that's part of their value prop!

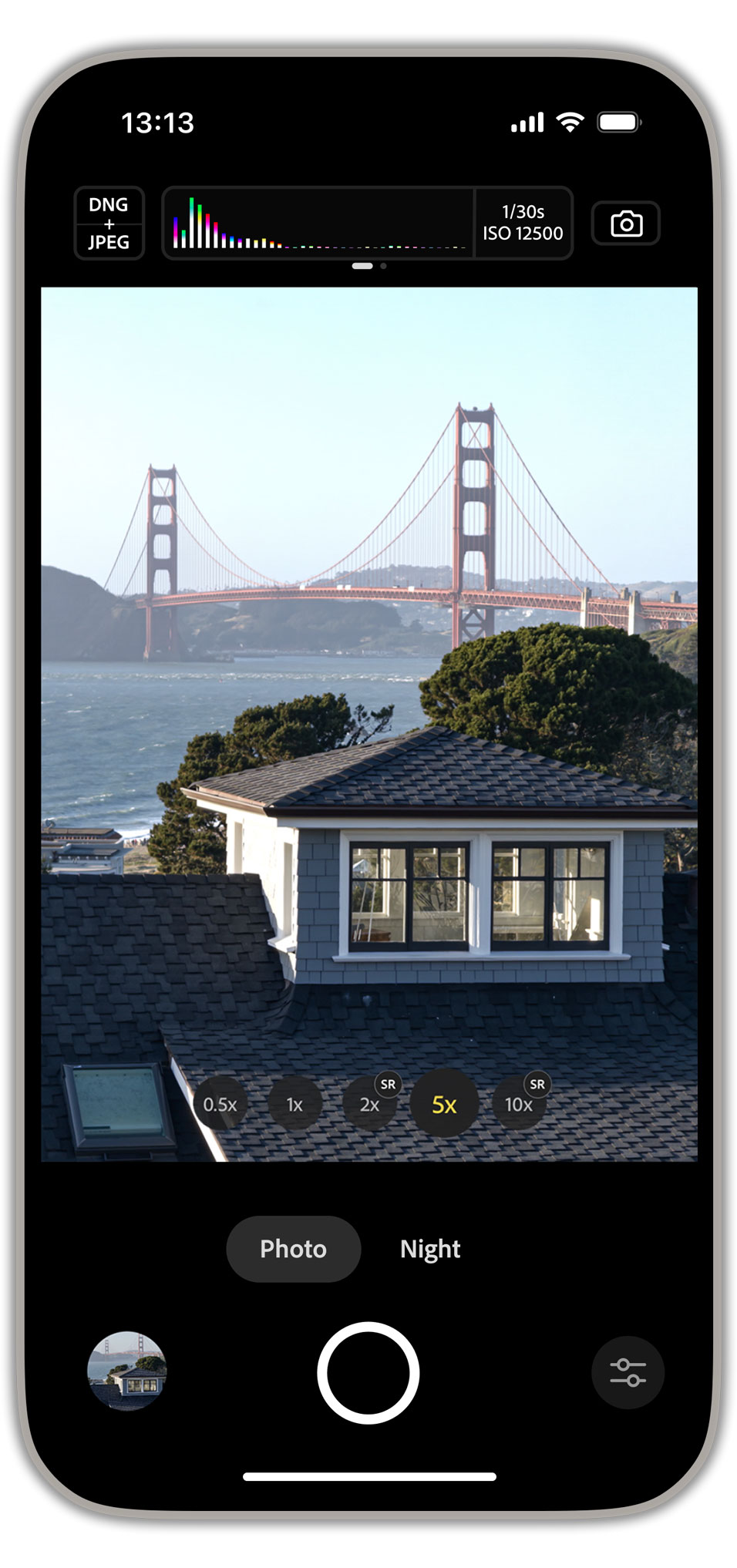

AI therapist, made by 4o. Provide all the details upfront.
