If you work in AI, you're familiar with the magic of embeddings: the idea of turning complex data, like text, into a rich, numerical vector that captures its semantic "meaning." For years, the standard approach for documents was to create a single, "monolithic" vector. We'd take an entire 10,000-word report, push it through a model, and get a 1,536-dimensional vector that supposedly captured the whole document.

This approach, however, is fundamentally flawed for the tasks we care about most today.

We must first ask what "representation" should mean for today's tasks. The rise of Retrieval-Augmented Generation (RAG), the technique of feeding a Large Language Model (LLM) snippets of retrieved data to ground its answers, has exposed a deep crack in this monolithic foundation. A RAG system doesn't need the average theme of a 100-page document; it needs the specific fact from page 73. For that job, the monolithic vector is a catastrophic failure.

The Allure and Failure of the Monolithic Vector

The monolithic approach comes from a different era, one focused on high-level classification. The task was to determine if a document was "spam" or "not spam," "legal" or "financial." For this, a single vector capturing the broad, averaged-out theme was perfectly sufficient.

But when you try to use this vector for precision retrieval, you encounter the "Curse of the Average Vector."

Think of it this way: imagine taking a book about World War II, a detailed cookbook, and a calculus textbook and putting all three into a blender. The resulting "soup" is a meaningless average of all three. You can't retrieve the recipe for coq au vin or the proof of the fundamental theorem of calculus from it.

This is what a monolithic embedding does. It averages out every distinct idea, nuance, and fact into a single, diluted representation. A paragraph on Alan Turing's codebreaking work is completely drowned out by the broader themes of battles and geopolitics. The final vector is useless for answering a specific query about the "Enigma machine."
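The dilution effect shows up even with a crude stand-in for embeddings. This is a toy illustration, not a real embedding model: it assumes simple word-count vectors (the hypothetical `bow_vector` helper) and compares a query against one focused paragraph and against the whole document. Real models pool information differently, but the intuition carries over: the more unrelated material a single vector must represent, the weaker its match with any one specific question.

```python
import math
from collections import Counter

def bow_vector(text: str) -> Counter:
    """Toy stand-in for an embedding (an assumption): lowercase word counts."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

paragraphs = [
    "the allied armies landed in normandy and the battle for france began",
    "tank divisions and air power shaped the battles on the eastern front",
    "alan turing led codebreaking work to crack the enigma machine at bletchley park",
    "geopolitics after the war redrew borders across europe and asia",
]
query = bow_vector("enigma machine codebreaking")

whole_doc = bow_vector(" ".join(paragraphs))  # the "monolithic" vector
turing_chunk = bow_vector(paragraphs[2])      # one focused chunk

print(f"query vs whole document: {cosine(query, whole_doc):.3f}")
print(f"query vs Turing chunk:   {cosine(query, turing_chunk):.3f}")
```

The query words appear equally often in both vectors, but the whole-document vector also carries every unrelated word, which inflates its norm and drags the similarity down.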

And here's another gotcha: for long documents, this approach often isn't even possible. Most modern embedding models, like those from OpenAI, have a finite context window (e.g., 8191 tokens). Depending on the model and library, a longer input is either rejected with an error or silently truncated. In the truncation case, your "monolithic" vector isn't even of the whole document; it covers only the first several thousand words, and the rest is simply ignored.
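A quick way to see how much of a long document survives the window is to compare its length with the limit. This sketch assumes whitespace-separated words as a rough proxy for tokens (the helper `window_coverage` is hypothetical); real subword tokenizers usually produce more tokens than words for English text, so the true coverage is lower than this estimate.

```python
def window_coverage(text: str, max_tokens: int = 8191) -> float:
    """Estimate the fraction of a document that fits in an embedding window.

    Assumption: one whitespace-separated word ~ one token. This is optimistic;
    subword tokenizers typically emit more tokens than words.
    """
    tokens = text.split()
    if not tokens:
        return 1.0
    return min(1.0, max_tokens / len(tokens))

report = "word " * 10_000  # stand-in for the 10,000-word report
coverage = window_coverage(report)
print(f"{coverage:.0%} of the report fits in the window")  # prints: 82% of the report fits in the window
```

Even under this generous approximation, nearly a fifth of the 10,000-word report from the introduction falls outside the window.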

Thinking in Chunks: The Granular Revolution

If the monolithic vector is a failed "summary," the solution is a "searchable index of ideas." This is the core of the granular approach, better known as chunking.

Instead of one blurry vector, we break the document into a collection of smaller, semantically focused pieces. Each chunk (a paragraph, a section, or a group of sentences) is embedded individually. The result is a vector database not of documents but of ideas.

This is how a human researcher actually works. You don't recall the "average" of a paper; you recall the specific insight from the "Methodology" section and another from the "Conclusion." Chunking builds an AI system that mimics this precise, targeted retrieval.

For RAG, this isn't just an improvement; it's the entire mechanism. The "Retrieval" in RAG is the act of finding the most relevant chunk (or chunks) to feed to the LLM as context.
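A chunk-level index and the retrieval step can be sketched in a few lines. The `embed` function here is a hypothetical word-count stand-in for a real embedding model, and the brute-force scan stands in for a vector database; both are assumptions made to keep the example self-contained. Each record keeps its `doc_id` and `chunk_id`, so a retrieved idea can always be traced back to its source.

```python
import math
from collections import Counter
from dataclasses import dataclass

def embed(text: str) -> Counter:
    """Hypothetical stand-in for a real embedding model: word counts."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0

@dataclass
class ChunkRecord:
    doc_id: str
    chunk_id: int
    text: str
    vector: Counter

def build_index(docs: dict[str, list[str]]) -> list[ChunkRecord]:
    """Embed every chunk separately: the index stores ideas, not documents."""
    return [
        ChunkRecord(doc_id, i, chunk, embed(chunk))
        for doc_id, chunks in docs.items()
        for i, chunk in enumerate(chunks)
    ]

def retrieve(index: list[ChunkRecord], query: str, k: int = 2) -> list[ChunkRecord]:
    """Return the k chunks most similar to the query (brute-force scan)."""
    q = embed(query)
    return sorted(index, key=lambda r: cosine(q, r.vector), reverse=True)[:k]

docs = {
    "ww2": ["battles raged across europe", "turing broke the enigma machine cipher"],
    "cookbook": ["coq au vin braises chicken in red wine"],
}
index = build_index(docs)
for hit in retrieve(index, "who broke the enigma cipher", k=1):
    print(hit.doc_id, hit.chunk_id, hit.text)
```

The text of the top-ranked chunks is what a RAG pipeline would then place into the LLM's prompt as context.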

But How to Chunk? A Practical Guide

The "gotcha" is that how you chunk is now one of the most critical design decisions you'll make. A bad chunking strategy can be just as harmful as a monolithic vector; splitting a sentence in half, for example, can leave neither piece meaningful on its own.

Here's the breakdown, from a simple baseline to the state of the art:

- Fixed-Size Chunking: The "dumb baseline." You split the text every N characters. It's simple and fast, but it has no respect for grammar or logic. It will happily slice sentences—and even words—in half, creating incoherent chunks that poison your retrieval results.
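A minimal sketch of fixed-size chunking in Python (the function name `fixed_size_chunks` is illustrative, not from any library). With a 16-character window, the example text is cut mid-word, which is exactly the failure described above.

```python
def fixed_size_chunks(text: str, size: int) -> list[str]:
    """Split text every `size` characters, ignoring words and sentences."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [text[i:i + size] for i in range(0, len(text), size)]

text = "Turing broke Enigma. The war ended in 1945."
for chunk in fixed_size_chunks(text, 16):
    print(repr(chunk))
# 'Turing broke Eni'
# 'gma. The war end'
# 'ed in 1945.'
```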

- Recursive Character Splitting: The "robust baseline." This is the "don't be a hero" starting point. You provide a prioritized list of separators (e.g., ["\n\n", "\n", ". ", " "]) and a target chunk size. It tries to split by paragraph first. If a paragraph is too big, it recursively tries to split it by line break, then by sentence, and so on. It's a good balance of simplicity and semantic awareness.
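The recursive strategy can be sketched as follows. This is an illustrative, simplified implementation (the name `recursive_split` and its defaults are assumptions): it splits on the highest-priority separator present, recurses into any piece that is still too large using the next separator, and greedily merges small neighboring pieces back up to the target size. Separators stay attached to their pieces, so joining the chunks reproduces the original text exactly.

```python
def recursive_split(text: str, chunk_size: int,
                    separators: tuple[str, ...] = ("\n\n", "\n", ". ", " ")) -> list[str]:
    """Split on the coarsest separator first, recursing into oversized pieces."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators:
        # No separators left: fall back to a hard character split.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    sep, rest = separators[0], separators[1:]
    if sep not in text:
        return recursive_split(text, chunk_size, rest)

    # Keep each separator attached to the piece before it so no text is lost.
    parts = text.split(sep)
    pieces = [p + sep for p in parts[:-1]] + [parts[-1]]

    chunks: list[str] = []
    current = ""
    for piece in pieces:
        if len(piece) > chunk_size:
            # Too big on its own: flush what we have, then try finer separators.
            if current:
                chunks.append(current)
                current = ""
            chunks.extend(recursive_split(piece, chunk_size, rest))
        elif len(current) + len(piece) <= chunk_size:
            current += piece  # merge small neighbors up to the target size
        else:
            chunks.append(current)
            current = piece
    if current:
        chunks.append(current)
    return chunks

doc = "Intro paragraph.\n\nTuring broke Enigma. The war ended in 1945. Europe rebuilt."
chunks = recursive_split(doc, chunk_size=30)
for chunk in chunks:
    print(repr(chunk))
```

The paragraph break is honored first; only the oversized second paragraph falls through to sentence-level splitting. Production libraries add refinements such as chunk overlap and whitespace stripping; LangChain's `RecursiveCharacterTextSplitter`, for example, exposes `separators`, `chunk_size`, and `chunk_overlap` parameters.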

- Semantic Chunking: The "SOTA" approach. This is where things get really clever. Instead of using arbitrary rules, this method uses the embeddings themselves to find the right split points. It provisionally splits the text (e.g., by sentence), embeds each piece, and then measures the semantic similarity between adjacent pieces. When it detects a sharp drop in similarity (a "topic boundary"), it starts a new chunk. The result is chunks that tend to be coherent and focused on a single idea.
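Here is a minimal sketch of the semantic chunking loop. It assumes a hypothetical `toy_embed` function (word counts) in place of a real sentence-embedding model and a fixed similarity `threshold` of 0.1; both are placeholders you would replace and tune.

```python
import math
import re
from collections import Counter
from typing import Callable

def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for a sentence-embedding model: word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0

def semantic_chunks(text: str, embed: Callable[[str], Counter] = toy_embed,
                    threshold: float = 0.1) -> list[str]:
    """Start a new chunk wherever adjacent-sentence similarity drops below threshold."""
    # Provisional split: one piece per sentence.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    vectors = [embed(s) for s in sentences]
    chunks, current = [], [sentences[0]]
    for prev_vec, vec, sentence in zip(vectors, vectors[1:], sentences[1:]):
        if cosine(prev_vec, vec) < threshold:
            chunks.append(" ".join(current))  # topic boundary: close the chunk
            current = []
        current.append(sentence)
    chunks.append(" ".join(current))
    return chunks

text = ("Turing studied the Enigma cipher. The Enigma cipher used rotors. "
        "Coq au vin needs red wine. Simmer the wine for hours.")
for chunk in semantic_chunks(text):
    print(chunk)
```

A fixed threshold is the simplest choice; some implementations instead derive it from the distribution of similarities within the document (for example, splitting at the largest drops), which adapts better to documents written in different styles.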

Beyond the Chunk: The New Frontier

Of course, chunking creates its own problems, chief among them a new tension between precision and context.

The Context-Precision Dilemma

Small, focused chunks (e.g., a single sentence) are highly precise but may lack the context needed to interpret them. Larger chunks (e.g., multiple paragraphs) carry more context but are "noisier," drifting back toward the averaging problem of the old monolithic vectors.

This isn't just a theory. A 2025 study by Bhat et al. empirically confirmed that there is no single best chunk size.

- For fact-based Q&A, smaller chunks (64-128 tokens) were optimal.

- For narrative summaries, larger chunks (512-1024 tokens) performed far better.

Your chunk size is a critical hyperparameter that must be tuned to your specific task and data.
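Treating chunk size as a hyperparameter means scoring several candidates on questions whose answers you know. The harness below is a toy sketch under stated assumptions: words stand in for tokens, word-count vectors stand in for a real embedding model, and the metric (does the top-1 chunk contain the expected answer string?) is just one reasonable choice. The corpus and questions are illustrative placeholders, not benchmark data.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Hypothetical stand-in for a real embedding model: word counts."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0

def word_chunks(text: str, size: int) -> list[str]:
    """Fixed-size chunks measured in words (a rough proxy for tokens)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def hit_rate(text: str, size: int, qa_pairs: list[tuple[str, str]]) -> float:
    """Fraction of questions whose top-1 chunk contains the expected answer."""
    chunks = word_chunks(text, size)
    vectors = [embed(c) for c in chunks]
    hits = 0
    for question, answer in qa_pairs:
        q = embed(question)
        best = max(range(len(chunks)), key=lambda i: cosine(q, vectors[i]))
        hits += answer.lower() in chunks[best].lower()
    return hits / len(qa_pairs)

def tune_chunk_size(text: str, sizes: list[int], qa_pairs: list[tuple[str, str]]):
    """Score every candidate size and return (best_size, all_scores)."""
    scores = {size: hit_rate(text, size, qa_pairs) for size in sizes}
    return max(scores, key=scores.get), scores

corpus = ("turing worked at bletchley park on the enigma cipher "
          "the bombe machine tested possible rotor settings "
          "coq au vin is chicken braised slowly in red wine")
questions = [("where did turing work", "bletchley park"),
             ("what is coq au vin braised in", "red wine")]
best, scores = tune_chunk_size(corpus, [4, 8, 16], questions)
print(best, scores)
```

In a real setup, the candidate sizes, the metric, and the question set should all reflect your own data and the kinds of questions you expect.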

The Solution: "Situated Embeddings"

So, how do we get the best of both worlds? The most exciting new research points to an idea you might call "situated embeddings."

Let's use an analogy. Imagine reading a single, precise sentence, but with all the surrounding sentences visible in your peripheral vision. The sentence's meaning (the "focus") is sharpened and clarified by its neighbors (the "context").

This is what models like SitEmb (introduced in a 2025 paper) aim to do. The technical insight is to decouple the unit of retrieval from the context of representation.

- The model's goal is to embed a short, precise chunk (our retrieval target).

- But during the embedding process, the model is also shown the surrounding context (e.g., the paragraph before and after).

- The final vector represents the precise chunk but is aware of its place in the larger document. It combines the precision of a small chunk with the context of a large one.
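The idea of decoupling the retrieval unit from the context of representation can be illustrated with a deliberately naive approximation. This is not how SitEmb works (SitEmb is a model that reads the surrounding context while embedding the chunk, as described above); the sketch simply blends a chunk's normalized vector with the vector of its neighbors. The word-count `embed`, the `window` of one chunk on each side, and the weight `alpha` of 0.7 are all illustrative assumptions.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def normalize(v: dict) -> dict:
    norm = math.sqrt(sum(x * x for x in v.values()))
    return {k: x / norm for k, x in v.items()} if norm else dict(v)

def cosine(a: dict, b: dict) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norms = math.sqrt(sum(x * x for x in a.values())) * math.sqrt(sum(x * x for x in b.values()))
    return dot / norms if norms else 0.0

def situated_vector(chunks: list[str], i: int, window: int = 1, alpha: float = 0.7) -> dict:
    """Blend chunk i's vector (the focus) with its neighbors' vector (the context).

    The chunk itself stays the unit of retrieval; only its vector is context-aware.
    """
    focus = normalize(embed(chunks[i]))
    neighbors = chunks[max(0, i - window):i] + chunks[i + 1:i + 1 + window]
    context = normalize(embed(" ".join(neighbors)))
    keys = focus.keys() | context.keys()
    return {k: alpha * focus.get(k, 0.0) + (1 - alpha) * context.get(k, 0.0) for k in keys}

chunks = ["Turing arrived at Bletchley Park in 1939.",
          "He designed the bombe.",
          "The bombe sped up Enigma decryption."]
query = embed("turing bombe")
plain = cosine(query, embed(chunks[1]))
situated = cosine(query, situated_vector(chunks, 1))
print(f"plain: {plain:.3f}  situated: {situated:.3f}")
```

The sentence "He designed the bombe." never mentions Turing, so its plain vector matches the query only on "bombe"; the situated vector also picks up "turing" from the preceding sentence and scores higher, while the text that gets retrieved is still just the short chunk.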

The Problem of Scale: Hierarchical Indexing

There's one final "gotcha." What if you have 10 million documents, which explodes into 100 million chunks? A "flat" search across all 100 million vectors for every query is computationally brutal.
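To put "brutal" in numbers, assume (as in the introduction's example) 1,536-dimensional vectors stored as 32-bit floats. A flat search over 100 million chunks then has to hold and scan:

```latex
\begin{aligned}
\text{memory} &= \underbrace{10^{8}}_{\text{chunks}} \times \underbrace{1536}_{\text{dims}} \times \underbrace{4\,\text{B}}_{\text{float32}} = 6.144 \times 10^{11}\,\text{B} \approx 614\,\text{GB} \\
\text{work per query} &= 10^{8} \times 1536 \approx 1.5 \times 10^{11}\ \text{multiply-adds}
\end{aligned}
```

That is hundreds of gigabytes of vectors and on the order of a hundred billion multiply-adds for every single query.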

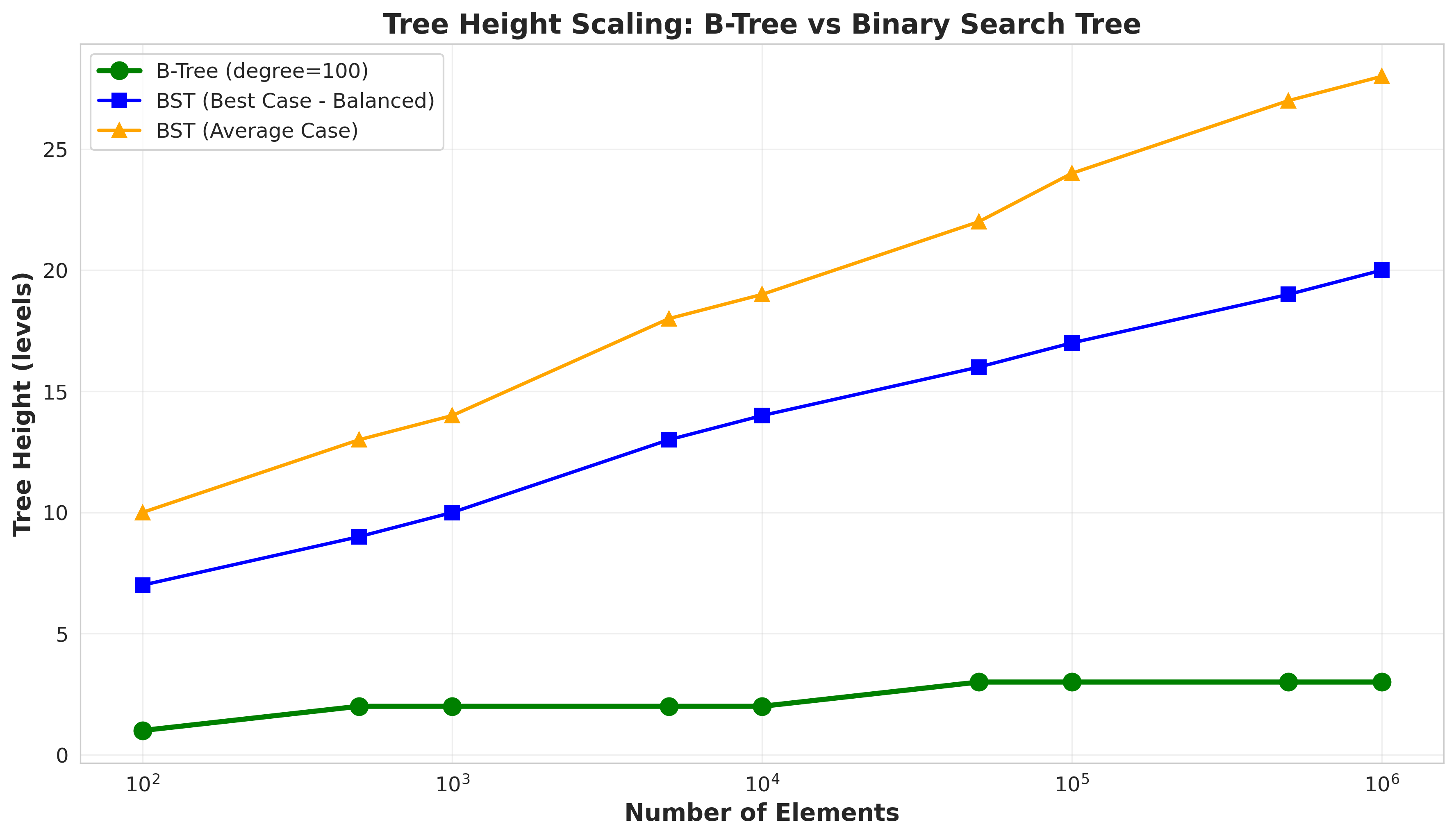

The solution is an architectural one: Hierarchical Indexing. Instead of a flat index, we build a multi-level tree.

- Level 1 (Top): Embed high-level summaries of entire documents.

- Level 2 (Middle): Embed summaries of sections or chapters.

- Level 3 (Bottom): Embed the final, granular chunks.

When a query comes in, the system performs a top-down filter. It first searches the tiny Level 1 index to find the most relevant documents. Then it searches the Level 2 index, but only within those documents, to find the most relevant sections. Finally, it searches the Level 3 chunks only within those sections to find the precise answer. Because each query is compared against only a small fraction of the stored vectors, this is far cheaper than a flat search across the whole collection.
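The top-down filter can be sketched as a small tree search. The summaries at Levels 1 and 2 are written by hand here (in a real system they might be generated by an LLM), `embed` is a hypothetical word-count stand-in, and `k` controls how many candidates survive at each level; all of these are illustrative assumptions.

```python
import math
from collections import Counter
from typing import List, Optional

def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: word counts."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0

class Node:
    """A tree entry: a summary (or, at the leaves, a chunk) plus its children."""
    def __init__(self, text: str, children: Optional[List["Node"]] = None):
        self.text = text
        self.children = children or []
        self.vector = embed(text)

def top_k(nodes: List[Node], query_vec: Counter, k: int) -> List[Node]:
    return sorted(nodes, key=lambda n: cosine(query_vec, n.vector), reverse=True)[:k]

def hierarchical_search(documents: List[Node], query: str, k: int = 1) -> List[Node]:
    """Top-down filter: documents -> sections -> chunks, keeping k at each level."""
    q = embed(query)
    docs = top_k(documents, q, k)                                  # Level 1: documents
    sections = top_k([s for d in docs for s in d.children], q, k)  # Level 2: only inside those docs
    return top_k([c for s in sections for c in s.children], q, k)  # Level 3: only inside those sections

tree = [
    Node("world war two history battles and codebreaking at bletchley park", [
        Node("codebreaking at bletchley park", [
            Node("turing designed the bombe to break enigma"),
            Node("bletchley park employed thousands of staff"),
        ]),
        Node("battles of the eastern front", [
            Node("the siege of leningrad lasted years"),
        ]),
    ]),
    Node("french cooking recipes", [
        Node("braised dishes", [Node("coq au vin braises chicken in red wine")]),
    ]),
]
hits = hierarchical_search(tree, "how did turing break enigma at bletchley park", k=1)
print(hits[0].text)
```

The savings come with a trade-off: a relevant chunk under a document pruned at Level 1 can never be found, so raising `k` buys robustness at the cost of searching more nodes.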

Key Takeaways

The journey from monolithic vectors to situated chunks mirrors our own understanding of language, moving from simple averages to a rich, contextual, and hierarchical network of ideas.

Here's what you should take away:

- Stop using monolithic embeddings for RAG. The "Curse of the Average Vector" will kill your performance.

- Embrace chunking. Your default should be a robust Recursive Character Splitter. If you need SOTA performance, explore Semantic Chunking.

- Your chunk size is a hyperparameter. Treat it as such. Tune it based on your data and the types of questions you expect.

- Watch this space. The future isn't just "chunking." It's in context-aware embeddings (like SitEmb) and scalable architectures (like Hierarchical Indexing) that move us closer to how we, as humans, actually understand and retrieve knowledge.
